Build a highly available database

2022-06-12 21:36:00 【NGC73】

1、 Master-slave mode

1.1、 Why set up master-slave synchronization

- Read/write separation reduces the load on the database. In development work, an SQL statement sometimes needs to lock a table, which temporarily blocks reads and affects the running business. With master-slave replication, the master handles writes and the slaves handle reads, so even if a table is locked on the master, the business keeps running normally by reading from the slaves.

- Real-time database backup. When a node in the system fails, it is easy to fail over (switch master and slave).

- High availability

- As business traffic grows, a database deployed on a single machine sees an excessively high I/O access frequency. Master-slave replication adds more data storage nodes and distributes the load across multiple slaves, which lowers the disk I/O access frequency of each machine and improves its I/O performance.
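The read/write separation described above can be sketched as a small router. This is only an illustration under stated assumptions: `ReadWriteRouter`, the host names, and the rule "only `SELECT`/`SHOW` statements go to a slave" are hypothetical and not taken from any particular middleware.

```python
import itertools


class ReadWriteRouter:
    """Send reads to the slaves in round-robin order and everything else to the master."""

    READ_VERBS = ("select", "show")  # assumption: only these count as reads

    def __init__(self, master, slaves):
        self.master = master
        # Fall back to the master when no slave is configured.
        self._readers = itertools.cycle(slaves or [master])

    def route(self, sql):
        verb = sql.lstrip().split(None, 1)[0].lower()
        if verb in self.READ_VERBS:
            return next(self._readers)
        return self.master


router = ReadWriteRouter("master:3306", ["slave1:3306", "slave2:3306"])
print(router.route("UPDATE orders SET paid = 1 WHERE id = 7"))  # goes to the master
print(router.route("SELECT * FROM orders WHERE id = 7"))        # goes to a slave
```

Because replication is not instantaneous, a read routed to a slave right after a write may still see the old value; reads that must see their own writes are usually sent to the master.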

1.2、 Master-slave topologies

- One master, many slaves: read/write separation, which raises the concurrency the cluster can handle (in general, reads are far more frequent than writes)

- Many masters, one slave: the slave is mainly used for database backup

- Dual-master replication

- Cascading replication

1.3、 How it works

On the master, the InnoDB redo log and the binlog are kept consistent by an internal two-phase commit. Replication itself then proceeds as follows:

- Update statements on the master (update, insert, delete) are written to the binlog.

- The slave initiates a connection to the master.

- The master then creates a binlog dump thread, which sends the binlog contents to the slave.

- After it starts, the slave creates an I/O thread, which reads the binlog contents sent by the master and writes them to the relay log.

- The slave also creates an SQL thread, which reads the relay log, executes the update events starting from the Exec_Master_Log_Pos position, and writes the updates to the slave's database.
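The steps above can be modelled in a few lines. This is a toy in-memory simulation, not MySQL's implementation: the `Master` and `Slave` classes, the key/value "events", and the integer positions are all hypothetical stand-ins for the binlog, the relay log, and the log positions.

```python
class Master:
    def __init__(self):
        self.data = {}
        self.binlog = []  # ordered list of (key, value) change events

    def write(self, key, value):
        self.data[key] = value
        self.binlog.append((key, value))

    def dump(self, from_pos):
        """Role of the binlog dump thread: send events from a position onward."""
        return self.binlog[from_pos:]


class Slave:
    def __init__(self):
        self.data = {}
        self.relay_log = []
        self.read_pos = 0  # how far the I/O thread has read the master's binlog
        self.exec_pos = 0  # how far the SQL thread has applied the relay log

    def io_thread(self, master):
        """Copy new binlog events from the master into the relay log."""
        events = master.dump(self.read_pos)
        self.relay_log.extend(events)
        self.read_pos += len(events)

    def sql_thread(self):
        """Replay relay-log events that have not been applied yet."""
        for key, value in self.relay_log[self.exec_pos:]:
            self.data[key] = value
        self.exec_pos = len(self.relay_log)


master, slave = Master(), Slave()
master.write("stock:42", 10)
master.write("stock:42", 9)
slave.io_thread(master)   # events are in the relay log but not applied yet
slave.sql_thread()        # now the slave has caught up
print(slave.data == master.data)
```

The gap between `io_thread` and `sql_thread` is where replication lag comes from: a read on the slave between the two calls would still see the old data.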

1.4、 High-availability solutions

- Dual-machine hot standby: two machines, A and B. A is the master and handles reads and writes; B is the standby and only backs up the data. If A fails, B becomes the master and handles reads and writes. After the fault is fixed, A becomes the standby, and the new master B synchronizes data to A.

- One master, one slave: two machines, A and B. A is the master and handles reads and writes; B is the slave and serves reads. If A fails, B becomes the master and handles reads and writes. After the fault is fixed, A becomes the slave, and the new master B synchronizes data to A.

- One master, many slaves: one master and several slaves. A is the master and handles reads and writes; B, C, and D are slaves and serve reads. If A fails, B becomes the master and handles reads and writes, while C and D keep serving reads. After the fault is fixed, A also becomes a slave, and the new master B synchronizes data to A.

- MariaDB multi-master synchronization: a proxy layer provides load balancing, and several databases can be read and written at the same time. The databases synchronize with one another through Galera Replication, so in theory the data in every database is fully consistent.

- Database middleware: mycat stores data in shards, and each shard is configured as a master-slave cluster.
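The role changes in the one-master, many-slaves case can be sketched as plain bookkeeping. This is a minimal sketch with hypothetical names (`Cluster`, `on_master_failure`, `on_recovery`); it assumes the first slave in the list is promoted and leaves out everything a real failover has to do, such as detecting the failure and checking how far each slave has replicated.

```python
class Cluster:
    """Track which node is the master and which nodes are slaves."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)

    def on_master_failure(self):
        """Promote the first slave and return the name of the failed master."""
        if not self.slaves:
            raise RuntimeError("no slave available to promote")
        failed = self.master
        self.master = self.slaves.pop(0)
        return failed

    def on_recovery(self, node):
        """A repaired node rejoins as a slave of the current master."""
        self.slaves.append(node)


cluster = Cluster("A", ["B", "C", "D"])
failed = cluster.on_master_failure()  # A fails, B takes over
cluster.on_recovery(failed)           # A comes back as a slave
print(cluster.master, cluster.slaves)
```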

1.5、 Comparing one master with many slaves against cascading replication

With one master and many slaves, the replication delay between master and slaves is smaller, but the master runs one binlog dump thread per slave, which increases its I/O load.

With cascading replication, the master runs only one dump thread, which serves an intermediate slave. The intermediate slave does not serve reads; it is only responsible for passing the master's binlog on to the second-level slaves. Its tables can all be set to the BLACKHOLE engine, so the data produced when it applies the master's log is thrown into the black hole. The intermediate slave must still write the events it applies into its own binlog, so set log_slave_updates=on and enable the binlog on it. Only then are the events it applies from the master recorded in its binlog and passed on to the second-level slaves.

2、 Database and table sharding

As the business grows, MySQL holds more and more data, and the database can easily become the performance bottleneck of the system, because the storage capacity, I/O, and CPU processing power of a single machine are limited. Once a single table reaches 10 million rows or 100 GB, insert, delete, update, and query operations on it slow down significantly. At that point it is time to consider optimizing by splitting databases and tables.

2.1 Vertical partitioning

2.1.1 Vertical database splitting

Split the system into multiple business domains, and give each one its own separate database.

2.1.2 Vertical table splitting

Vertical table splitting is based on the columns of a table. Some business tables have many columns, some of them long, and the long columns are needed only occasionally. In that case, consider splitting the table vertically.

Columns that are rarely used but long are moved out into a separate table.

MySQL stores data in pages underneath. A row that takes up too much space ends up spanning pages, which adds performance overhead. In addition, the database loads data into memory in units of rows, so when the rows in a table are shorter and accessed more often, memory holds more of them, the hit rate goes up, and disk I/O goes down, which improves database performance.
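A vertical table split can be sketched as follows. The example uses Python's built-in `sqlite3` module as a stand-in for MySQL so that it runs without a server; the `article` and `article_body` tables and their columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Narrow, frequently read columns stay in the main table.
    CREATE TABLE article (
        id     INTEGER PRIMARY KEY,
        title  TEXT,
        author TEXT
    );
    -- The long, rarely read column moves to its own table, keyed by the same id.
    CREATE TABLE article_body (
        article_id INTEGER PRIMARY KEY REFERENCES article(id),
        body       TEXT
    );
""")
conn.execute("INSERT INTO article VALUES (1, 'HA databases', 'alice')")
conn.execute("INSERT INTO article_body VALUES (1, ?)", ("long text " * 1000,))

# Hot path: list pages read only the narrow table.
titles = conn.execute("SELECT id, title FROM article").fetchall()

# Cold path: join in the long column only when it is actually needed.
row = conn.execute(
    "SELECT a.title, b.body FROM article a "
    "JOIN article_body b ON b.article_id = a.id WHERE a.id = ?",
    (1,),
).fetchone()
print(titles, len(row[1]))
```

The price of the split is the extra join (or second query) whenever the long column is needed, so it pays off only when such reads are rare.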

2.2 Horizontal partitioning

2.2.1 Table splitting within a database

Table splitting within a database keeps everything on the same DB and splits one table into several tables according to some condition. Tables can be split by storage date (data stored in January goes into one table, data stored in February into another, and so on) or by id range (1-1000000 in one table, 1000001-2000000 in the next, and so on). The exact rule depends on the business; split as needed. The original figure shows tables split by date.
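Both splitting rules come down to computing a table name from a row attribute. A minimal sketch, assuming a hypothetical naming scheme of `<base>_<suffix>` and the one-million-row ranges used in the text:

```python
from datetime import date

ROWS_PER_TABLE = 1_000_000  # ids 1-1000000 in the first table, and so on


def table_for_id(base, row_id):
    """Pick the table by id range."""
    if row_id < 1:
        raise ValueError("row_id must be >= 1")
    return f"{base}_{(row_id - 1) // ROWS_PER_TABLE}"


def table_for_date(base, stored_on):
    """Pick the table by the month in which the row was stored."""
    return f"{base}_{stored_on:%Y%m}"


print(table_for_id("orders", 1_000_000))           # last id of the first range
print(table_for_id("orders", 1_000_001))           # first id of the second range
print(table_for_date("orders", date(2022, 1, 15)))
```

Range and date rules keep recent rows together, which suits time-based queries but can concentrate writes on the newest table.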

2.2.2 Database and table sharding

Database and table sharding means splitting the tables and also placing the pieces on different machines.

Tencent Cloud DCDB, for example, works this way. You specify a shardKey for a table, a hash is computed over the shardKey, and rows are placed in different databases according to the hash value. This removes the bottleneck of a single machine's physical resources.
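Hash-based placement can be sketched as below. This is not DCDB's actual algorithm, which the text does not describe; `shard_for` and the use of CRC32 with a modulo are assumptions chosen only to show the idea.

```python
import zlib


def shard_for(shard_key, shard_count):
    """Map a shard key to a shard index in the range [0, shard_count)."""
    # zlib.crc32 is stable across processes, unlike Python's built-in hash()
    # for strings, which is randomized per interpreter run.
    digest = zlib.crc32(str(shard_key).encode("utf-8"))
    return digest % shard_count


for user_id in ("user-1", "user-2", "user-3"):
    print(user_id, "-> shard", shard_for(user_id, 4))
```

With a plain modulo, changing `shard_count` moves most keys to a different shard, so adding machines means migrating data; schemes such as consistent hashing are commonly used to limit that.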

Two middleware options are common on the market: Dangdang's sharding-jdbc and mycat (which grew out of Alibaba's Cobar). The differences between the two are as follows:

3、 Distributed cache

3.1 Optimization principle

After receiving a query request, query the cache first. If the data is in the cache, return it directly to the application. If it is not, query the database and load the result into the cache. This greatly reduces the number of database accesses and therefore improves database performance.
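The lookup flow just described can be sketched as follows. A plain dictionary stands in for the distributed cache, and `CacheAside` and `load_from_db` are hypothetical names; a real deployment would talk to a cache server instead.

```python
class CacheAside:
    """Check the cache first; on a miss, load from the database and fill the cache."""

    def __init__(self, load_from_db):
        self._load_from_db = load_from_db  # function: key -> value
        self._cache = {}
        self.db_hits = 0  # how many times the database was actually queried

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        self.db_hits += 1
        value = self._load_from_db(key)
        self._cache[key] = value
        return value


users = CacheAside(lambda user_id: {"id": user_id, "name": f"user-{user_id}"})
users.get(7)
users.get(7)
print(users.db_hits)  # the second call was served from the cache
```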

Note: once a distributed cache is introduced, the system has to deal with cache penetration, cache breakdown, and cache avalanche.

3.2 High performance

Suppose a request comes in, the database is queried, and the result is returned to the user, taking 600ms. The result may not change for the next few hours, or changes may not need to reach users immediately. What should be done in this case?

Use a cache: one key maps to one value. The next time someone asks, there is no need to spend 600ms querying mysql. The value is looked up by its key directly in the cache and returned in 2ms, a 300-fold performance improvement.

In other words, for results that are expensive to compute, rarely change afterwards, and are read very often, put the query result in the cache and read it from the cache from then on.
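For results that may go stale after a few hours, a cached entry is usually given an expiry time. A minimal sketch, assuming a hypothetical `TTLCache` class with an injectable clock so the expiry can be demonstrated without waiting:

```python
import time


class TTLCache:
    """Cache values for a fixed number of seconds, then recompute them."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key, compute):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the slow query
        value = compute()    # expired or missing: run the slow query again
        self._entries[key] = (now + self._ttl, value)
        return value


cache = TTLCache(ttl_seconds=3 * 60 * 60)  # keep results for three hours


def slow_report():
    # Placeholder for the 600ms database query from the text.
    return {"total_orders": 1234}


print(cache.get("daily-report", slow_report))
print(cache.get("daily-report", slow_report))  # served from the cache
```

The expiry time is the trade-off knob: a longer value saves more queries but lets users see older data for longer.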

3.3 High concurrency

Suppose that at peak traffic there are 10,000 requests per second. For mysql this load is heavy: it may go down, or its query efficiency may drop sharply. If much of the data is cached and read directly from the cache rather than from mysql, the concurrency the system can handle increases greatly.

4、 Afterword

Follow-up posts will cover database and table sharding with mycat and distributed caching with redis.

边栏推荐

- Can flush open an account? Can you directly open the security of securities companies on the app

- Digital intelligence data depth | Bi goes down the altar? It's not that the market has declined, it's that the story has changed

- Delphi XE7的蓝牙 Bluetooth

- Can tonghuashun open an account? Can the security of securities companies be directly opened on the app? How to open an account for securities accounts

- 如何自己动手写一个vscode插件,实现插件自由!

- SQL调优指南笔记10:Optimizer Statistics Concepts

- ICML2022 | GALAXY:极化图主动学习

- A blog written clearly by vit

- [target detection] |dive detector into box for object detection new training method based on fcos

- ORM 实现类与表,类属性与字段的映射关系

猜你喜欢

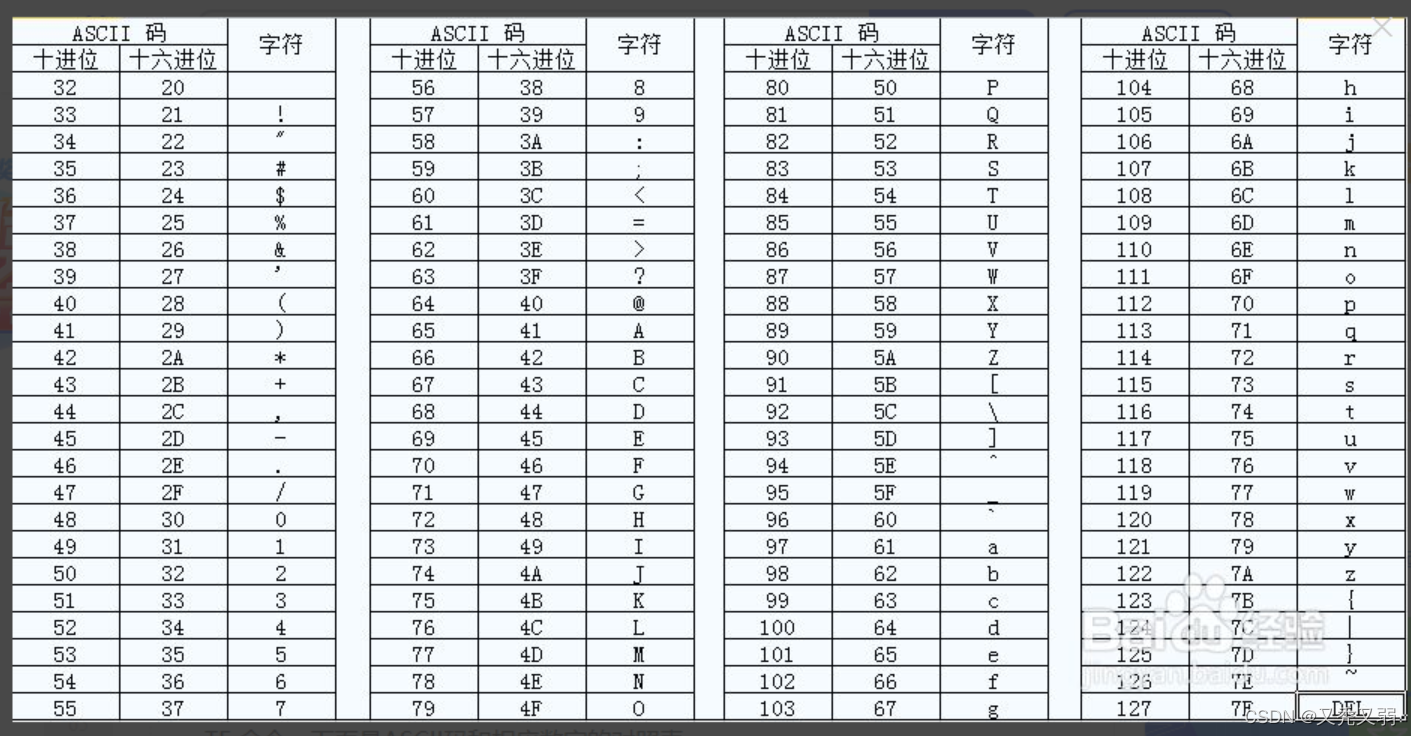

ASCII 码对照表

#141 Linked List Cycle

How do complex systems detect anomalies? North Carolina UNCC and others' latest overview of graph based deep learning anomaly detection methods in complex distributed systems describes the latest prog

ICML2022 | GALAXY:极化图主动学习

Npoi create word

复杂系统如何检测异常?北卡UNCC等最新《复杂分布式系统中基于图的深度学习异常检测方法综述》,阐述最新图异常检测技术进展

JUC并发工具包使用指南

makefile 的ifeq,filter,strip 简单使用

图灵奖得主:想要在学术生涯中获得成功,需要注意哪些问题?

SQL调优指南笔记6:Explaining and Displaying Execution Plans

随机推荐

Main stages of garbage collection in ZGC

SQL调优指南笔记10:Optimizer Statistics Concepts

Ubuntu16.04 completely delete MySQL database

Cookies and sessions

Common error in script execution: build sh: caller: not found

linux备份mysql

(4) Pyqt designs and implements the [factory production management system] order page - add, delete, modify and query (including source code analysis)

Oracle 19c 安装文档

Solve one-dimensional array prefix sum

Cv2.lut() (populates the output array with values from the lookup table)

@loadbalance annotation of resttemplate

How do complex systems detect anomalies? North Carolina UNCC and others' latest overview of graph based deep learning anomaly detection methods in complex distributed systems describes the latest prog

Deep Hough voting for 3D object detection in point clouds

Leetcode: 210. Programme II

Bubble sort

DRF receives nested data and creates objects. Solution: DRF not NULL constraint failed

Risk control modeling X: Discussion on problems existing in traditional modeling methods and Exploration on improvement methods

Simple understanding of cap and base theory

USB机械键盘改蓝牙键盘

Pytorch how to set random number seed to make the result repeatable