HAT [Paper Link]

Activating More Pixels in Image Super-Resolution Transformer

Xiangyu Chen, Xintao Wang, Jiantao Zhou and Chao Dong

BibTeX

@article{chen2022activating,
  title={Activating More Pixels in Image Super-Resolution Transformer},
  author={Chen, Xiangyu and Wang, Xintao and Zhou, Jiantao and Dong, Chao},
  journal={arXiv preprint arXiv:2205.04437},
  year={2022}
}

Environment

Installation

pip install -r requirements.txt

python setup.py develop

How To Test

- Refer to ./options/test for the configuration file of the model to be tested, and prepare the testing data and pretrained model.

- The pretrained models are available at Google Drive or Baidu Netdisk (access code: qyrl).

- Then run the following command (taking HAT_SRx4_ImageNet-pretrain.pth as an example):

python hat/test.py -opt options/test/HAT_SRx4_ImageNet-pretrain.yml

The testing results will be saved in the ./results folder.
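The testing steps above can be sketched as a small Python helper that checks the configuration file and a pretrained checkpoint exist before launching the test command. This is only an illustration: the helper names (build_test_command, missing_inputs, run_test) and the checkpoint location are assumptions, not part of the repository.

```python
import subprocess
import sys
from pathlib import Path


def build_test_command(config_path, script="hat/test.py"):
    """Build the argv list for the test command shown in this README."""
    return [sys.executable, script, "-opt", str(config_path)]


def missing_inputs(paths):
    """Return the subset of the given paths that do not exist on disk."""
    return [str(p) for p in paths if not Path(p).exists()]


def run_test(config_path, checkpoint_path):
    """Check the inputs, then run the test script from the repository root.

    checkpoint_path is a hypothetical location for the downloaded model;
    the path the test script actually loads is set in the config file.
    """
    missing = missing_inputs([config_path, checkpoint_path])
    if missing:
        raise FileNotFoundError("Missing required files: " + ", ".join(missing))
    # check=True raises CalledProcessError if the script exits with an error.
    subprocess.run(build_test_command(config_path), check=True)
```

For example, run_test("options/test/HAT_SRx4_ImageNet-pretrain.yml", "path/to/HAT_SRx4_ImageNet-pretrain.pth") would fail early with a clear message if either file is missing, where the second argument is a placeholder for wherever the downloaded model was saved.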

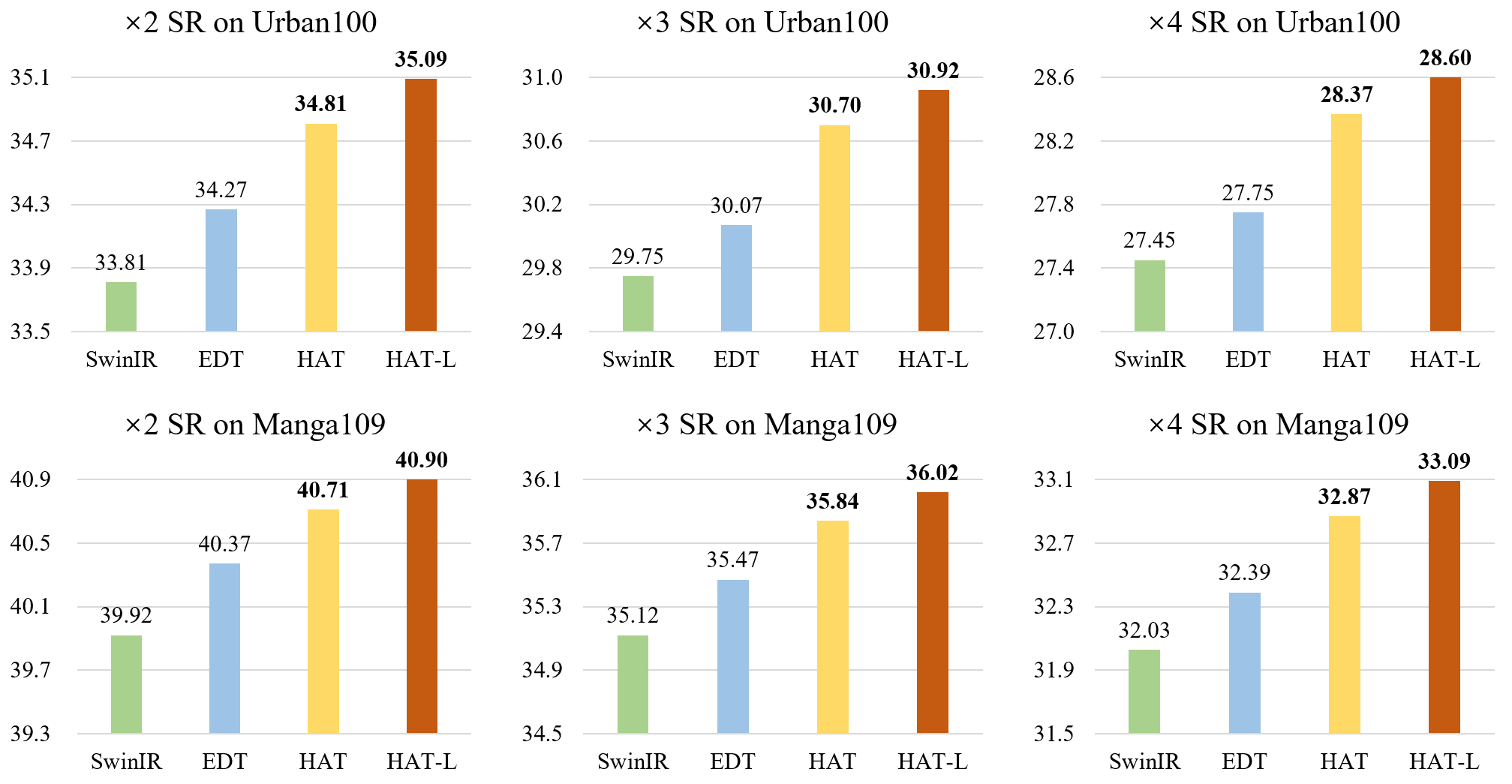

Results

The inference results on benchmark datasets are available at Google Drive or Baidu Netdisk (access code: 63p5).