Model Serving Made Easy

BentoML is a flexible, high-performance framework for serving, managing, and deploying machine learning models.

- Supports multiple ML frameworks, including TensorFlow, PyTorch, Keras, XGBoost and more

- Cloud native deployment with Docker, Kubernetes, AWS, Azure and many more

- High-Performance online API serving and offline batch serving

- Web dashboards and APIs for model registry and deployment management

BentoML bridges the gap between Data Science and DevOps. By providing a standard interface for describing a prediction service, BentoML abstracts away how to run model inference efficiently and how model serving workloads can integrate with cloud infrastructures. See how it works!

Join our community on Slack

Documentation

BentoML documentation: https://docs.bentoml.org/

- Quickstart Guide, try it out on Google Colab

- Core Concepts

- API References

- FAQ

- Example projects: bentoml/Gallery

Key Features

Production-ready online serving:

- Support multiple ML frameworks including PyTorch, TensorFlow, Scikit-Learn, XGBoost, and many more

- Containerized model server for production deployment with Docker, Kubernetes, OpenShift, AWS ECS, Azure, GCP GKE, etc

- Adaptive micro-batching for optimal online serving performance

- Discover and package all dependencies automatically, including PyPI, conda packages and local python modules

- Serve compositions of multiple models

- Serve multiple endpoints in one model server

- Serve any Python code along with trained models

- Automatically generate REST API spec in Swagger/OpenAPI format

- Prediction logging and feedback logging endpoint

- Health check endpoint and Prometheus /metrics endpoint for monitoring

Standardize model serving and deployment workflow for teams:

- Central repository for managing all your team's prediction services via Web UI and API

- Launch offline batch inference job from CLI or Python

- One-click deployment to cloud platforms including AWS EC2, AWS Lambda, AWS SageMaker, and Azure Functions

- Distributed batch or streaming serving with Apache Spark

- Utilities that simplify CI/CD pipelines for ML

- Automated offline batch inference job with Dask (roadmap)

- Advanced model deployment for Kubernetes ecosystem (roadmap)

- Integration with training and experimentation management products including MLflow and Kubeflow (roadmap)

ML Frameworks

- Scikit-Learn - Docs | Examples

- PyTorch - Docs | Examples

- TensorFlow 2 - Docs | Examples

- TensorFlow Keras - Docs | Examples

- XGBoost - Docs | Examples

- LightGBM - Docs | Examples

- FastText - Docs | Examples

- FastAI - Docs | Examples

- H2O - Docs | Examples

- ONNX - Docs | Examples

- Spacy - Docs | Examples

- Statsmodels - Docs | Examples

- CoreML - Docs

- Transformers - Docs

- Gluon - Docs

- Detectron - Docs

- PaddlePaddle - Docs | Example

- EvalML - Docs

- EasyOCR - Docs

- ONNX-MLIR - Docs

Deployment Options

Be sure to check out the deployment overview doc to understand which deployment option is best suited to your use case.

- One-click deployment with BentoML:

- Deploy with open-source platforms:

- Manual cloud deployment guides:

Introduction

BentoML provides APIs for defining a prediction service, a servable model so to speak, which includes the trained ML model itself plus its pre-processing and post-processing code, input/output specifications, and dependencies. Here's what a simple prediction service looks like in BentoML:

import pandas as pd

from bentoml import env, artifacts, api, BentoService
from bentoml.adapters import DataframeInput, JsonOutput
from bentoml.frameworks.sklearn import SklearnModelArtifact

# BentoML packages local python modules automatically for deployment
from my_ml_utils import my_encoder

@env(infer_pip_packages=True)
@artifacts([SklearnModelArtifact('my_model')])
class MyPredictionService(BentoService):
    """
    A simple prediction service exposing a Scikit-learn model
    """

    @api(input=DataframeInput(), output=JsonOutput(), batch=True)
    def predict(self, df: pd.DataFrame):
        """
        An inference API named `predict` that takes tabular data in pandas.DataFrame
        format as input, and returns a JSON-serializable value as output.

        A batch API is expected to receive a list of inference inputs and should
        return a list of prediction results.
        """
        model_input_df = my_encoder.fit_transform(df)
        predictions = self.artifacts.my_model.predict(model_input_df)

        return list(predictions)
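The batch contract in the docstring above (a list of inputs in, a list of results out, one per input) is what lets a server group individual requests together. The following is a framework-free sketch of fixed-size micro-batching, not BentoML's adaptive implementation; `run_micro_batch` and `toy_batch_predict` are hypothetical names used only for illustration.

```python
from typing import Callable, List, Sequence


def run_micro_batch(
    pending_requests: Sequence[List[float]],
    batch_predict: Callable[[List[List[float]]], List[float]],
    max_batch_size: int = 4,
) -> List[float]:
    """Group single requests into batches and return results in request order."""
    results: List[float] = []
    for start in range(0, len(pending_requests), max_batch_size):
        batch = list(pending_requests[start:start + max_batch_size])
        outputs = batch_predict(batch)
        # The batch contract: exactly one output per input, in the same order.
        if len(outputs) != len(batch):
            raise ValueError("batch API must return one result per input")
        results.extend(outputs)
    return results


def toy_batch_predict(rows: List[List[float]]) -> List[float]:
    # Hypothetical stand-in for a model: sums the features of each row.
    return [sum(row) for row in rows]


predictions = run_micro_batch(
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], toy_batch_predict, max_batch_size=2
)
print(predictions)  # [3.0, 7.0, 11.0]
```

A batch function that returns fewer or more results than it received inputs cannot be mapped back to the original requests, which is why the sketch rejects it.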

This can be easily plugged into your model training process: import your BentoML prediction service class, pack it with your trained model, and call save at the end to persist the entire prediction service, which creates a BentoML bundle:

from my_prediction_service import MyPredictionService

svc = MyPredictionService()

svc.pack('my_model', my_sklearn_model)

svc.save() # saves to $HOME/bentoml/repository/MyPredictionService/{version}/
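To see which versions have been saved locally, you could inspect that directory yourself. This is a minimal sketch that assumes the layout shown in the comment above ($HOME/bentoml/repository/{service name}/{version}/); `list_saved_versions` is a hypothetical helper, not part of BentoML.

```python
from pathlib import Path
from typing import List, Optional


def list_saved_versions(service_name: str, bentoml_home: Optional[Path] = None) -> List[str]:
    """Return the version directory names found for a service, sorted by name."""
    # Assumption: bundles live under <home>/repository/<service name>/<version>/.
    home = bentoml_home if bentoml_home is not None else Path.home() / "bentoml"
    service_dir = home / "repository" / service_name
    if not service_dir.is_dir():
        return []
    return sorted(p.name for p in service_dir.iterdir() if p.is_dir())


print(list_saved_versions("MyPredictionService"))
```

The function returns an empty list when nothing has been saved yet, so it is safe to call before the first `svc.save()`.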

The generated BentoML bundle is a file directory that contains all the code files, serialized models, and configs required to reproduce this prediction service for inference. BentoML automatically captures all Python dependency information and keeps everything versioned and managed together in one place.

BentoML automatically generates a version ID for this bundle and keeps track of all bundles created under the $HOME/bentoml directory. With a BentoML bundle, you can start a local API server hosting it, either by its file path or by its name and version:

bentoml serve MyPredictionService:latest

# alternatively

bentoml serve $HOME/bentoml/repository/MyPredictionService/{version}/
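Once the server is running, a client can call the `predict` API over HTTP. The sketch below uses only the Python standard library and rests on a few assumptions not stated above: that the server listens on localhost port 5000, that the API is exposed at a path named after the method (/predict), and that it accepts a JSON list of rows. `build_predict_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, List


def build_predict_request(
    rows: List[List[float]], base_url: str = "http://localhost:5000"
) -> urllib.request.Request:
    """Build a JSON POST request for the assumed /predict endpoint."""
    body = json.dumps(rows).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> Any:
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_predict_request([[5.1, 3.5, 1.4, 0.2]])
print(request.get_method(), request.full_url)  # POST http://localhost:5000/predict
```

Calling `send(request)` performs the actual network call; it is left out of the example so the snippet runs without a server.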

A docker container image that's ready for production deployment can be created now with just one command:

bentoml containerize MyPredictionService:latest -t my_prediction_service:v3

docker run -p 5000:5000 my_prediction_service:v3 --workers 2

The container image produced will have all the required dependencies installed. Besides the model inference API, the containerized BentoML model server also comes with Prometheus metrics, health check endpoint, prediction logging, and tracing support out-of-the-box. This makes it super easy for your DevOps team to incorporate your models into production systems.
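Prometheus metrics are served in a plain-text format that is easy to inspect by hand or in a quick script. The sketch below parses sample lines of that format into a dictionary. It assumes samples carry no trailing timestamp, and the metric names in the example text are made up for illustration rather than taken from BentoML.

```python
from typing import Dict


def parse_prometheus_text(text: str) -> Dict[str, float]:
    """Parse `name{labels} value` sample lines, skipping comments and blanks."""
    samples: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Assumption: no timestamp, so the value is the last space-separated field.
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


# Hypothetical metric names, shown only to illustrate the text format.
example_text = """\
# HELP request_total Total number of requests
# TYPE request_total counter
request_total{endpoint="/predict",http_response_code="200"} 12
request_in_progress 0
"""

for series, value in parse_prometheus_text(example_text).items():
    print(series, value)
```

In practice a Prometheus server scrapes the /metrics endpoint directly; a parser like this is mainly useful for smoke tests.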

BentoML's model management component is called Yatai, which means food cart in Japanese. You can think of it as where you'd store your bentos.

Read the Quickstart Guide to learn more about the basic functionalities of BentoML. You can also try it out here on Google Colab.

Why BentoML

Moving trained machine learning models to serving applications in production is hard. It is a sequential process across data science, engineering and DevOps teams: after the data science team trains a model, they hand it over to the engineering team, who refine and optimize the code and create an API before DevOps can deploy it.

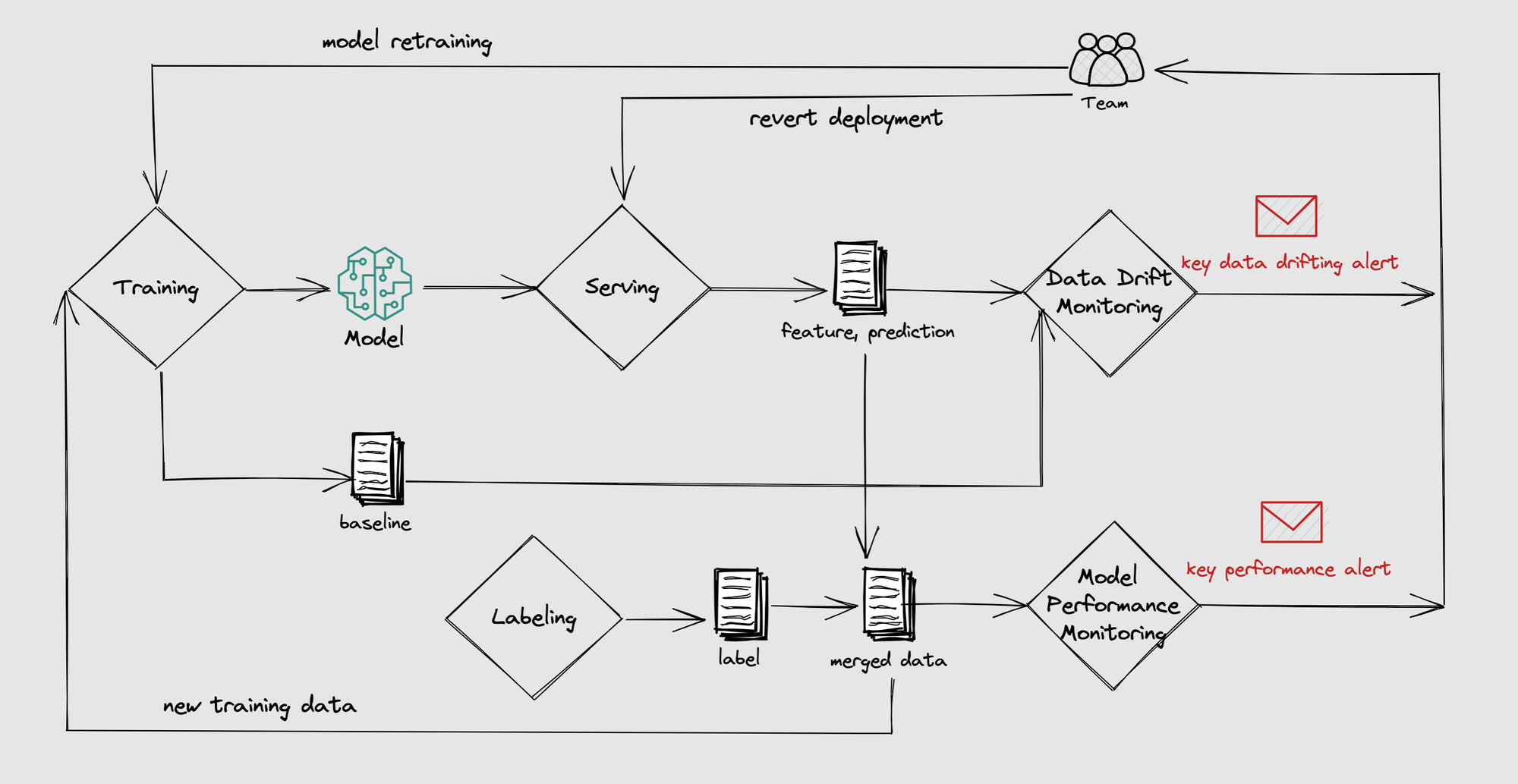

Most importantly, data science teams want to repeat this process continuously, monitor the models deployed in production, and ship new models quickly. It often takes months for an engineering team to build a model serving and deployment solution that allows data science teams to ship new models in a repeatable and reliable way.

BentoML is a framework designed to solve this problem. It provides high-level APIs for data science teams to create prediction services, abstracting away DevOps infrastructure needs and performance optimizations in the process. This allows DevOps teams to work side-by-side with data science teams and to deploy and operate their models, packaged in the BentoML format, in production.

Check out the Frequently Asked Questions page to see how BentoML compares to TensorFlow Serving, Clipper, AWS SageMaker, MLflow, etc.

Contributing

Have questions or feedback? Post a new GitHub issue or discuss in our Slack channel.

Want to help build BentoML? Check out our contributing guide and the development guide.

Releases

BentoML is under active development and is evolving rapidly. It is currently a Beta release: APIs may change in future releases, and major features are still being worked on.

Read more about the latest updates from the releases page.

Usage Tracking

By default, BentoML collects anonymous usage data using Amplitude. It only collects the BentoML library's own actions and parameters; no user or model data is collected. Here is the code that does it.

This helps the BentoML team understand how the community is using this tool and what to build next. You can easily opt out of usage tracking by running BentoML commands with the --do-not-track option.

% bentoml [command] --do-not-track

or by setting the BENTOML_DO_NOT_TRACK environment variable to True.

% export BENTOML_DO_NOT_TRACK=True
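If you launch BentoML commands from a Python script rather than a shell, the same environment variable can be passed to the child process. This is a minimal sketch; `no_tracking_env` is a hypothetical helper, and the commented-out command reuses the serve example from earlier in this README.

```python
import os
import subprocess
from typing import Dict, Mapping, Optional


def no_tracking_env(base_env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with usage tracking switched off."""
    env = dict(os.environ if base_env is None else base_env)
    env["BENTOML_DO_NOT_TRACK"] = "True"
    return env


# Equivalent to running `export BENTOML_DO_NOT_TRACK=True` before the command.
# Uncomment to run; requires the bentoml CLI and a saved bundle.
# subprocess.run(
#     ["bentoml", "serve", "MyPredictionService:latest"],
#     env=no_tracking_env(),
#     check=True,
# )
```

Copying the environment instead of mutating `os.environ` keeps the opt-out scoped to the child process.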