15 Repositories

Latest Python Libraries

TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP

TextAttack 🐙 Generating adversarial examples for NLP models [TextAttack Documentation on ReadTheDocs] About • Setup • Usage • Design About TextAttack

Code for "Adversarial attack by dropping information." (ICCV 2021)

AdvDrop Code for "AdvDrop: Adversarial Attack to DNNs by Dropping Information(ICCV 2021)." Human can easily recognize visual objects with lost informa

This library is testing the ethics of language models by using natural adversarial texts.

prompt2slip This library is testing the ethics of language models by using natural adversarial texts. This tool allows for short and simple code and v

Adversarial Robustness Toolbox (ART) - Python Library for Machine Learning Security - Evasion, Poisoning, Extraction, Inference - Red and Blue Teams

Adversarial Robustness Toolbox (ART) is a Python library for Machine Learning Security. ART provides tools that enable developers and researchers to defend and evaluate Machine Learning models and ap

PyTorch implementation of our method for adversarial attacks and defenses in hyperspectral image classification.

Self-Attention Context Network for Hyperspectral Image Classification PyTorch implementation of our method for adversarial attacks and defenses in hyp

Universal Adversarial Examples in Remote Sensing: Methodology and Benchmark

Universal Adversarial Examples in Remote Sensing: Methodology and Benchmark Yong

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models.

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models

Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness?

Adversrial Machine Learning Benchmarks This code belongs to the papers: Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness? Det

A Python toolbox to create adversarial examples that fool neural networks in PyTorch, TensorFlow, and JAX

Foolbox Native: Fast adversarial attacks to benchmark the robustness of machine learning models in PyTorch, TensorFlow, and JAX Foolbox is a Python li

Code for the CVPR2022 paper "Frequency-driven Imperceptible Adversarial Attack on Semantic Similarity"

Introduction This is an official release of the paper "Frequency-driven Imperceptible Adversarial Attack on Semantic Similarity" (arxiv link). Abstrac

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models (published in ICLR2018)

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models Pouya Samangouei*, Maya Kabkab*, Rama Chellappa [*: authors co

![[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️](https://github.com/sungyoon-lee/LossLandscapeMatters/raw/master/media/fig.png)

[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️

Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples This repository is the official implementation of "Tow

[ICML 2021] A fast algorithm for fitting robust decision trees.

GROOT: Growing Robust Trees Growing Robust Trees (GROOT) is an algorithm that fits binary classification decision trees such that they are robust agai

Short PhD seminar on Machine Learning Security (Adversarial Machine Learning)

Short PhD seminar on Machine Learning Security (Adversarial Machine Learning)

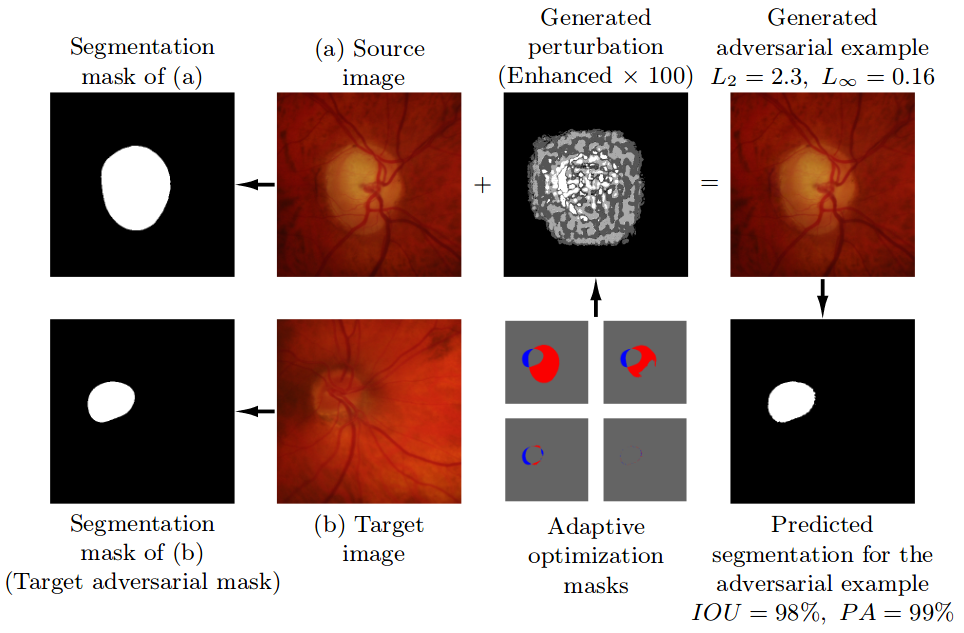

Pre-trained model, code, and materials from the paper "Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation" (MICCAI 2019).

Adaptive Segmentation Mask Attack This repository contains the implementation of the Adaptive Segmentation Mask Attack (ASMA), a targeted adversarial