YOLOX Improvements, Part 1: Adding CBAM, SE, and ECA Attention Mechanisms

2022-07-27 14:06:00 【Artificial Intelligence Algorithm Research Institute】

Foreword: Earlier posts in this series covered improvements to YOLOv5, which was released in 2020. Many readers have since asked how to improve YOLOX, so this series explains that in detail. The approach is largely the same as for YOLOv5, with some subtle differences. Subsequent articles will continue to cover YOLOX improvements; the aim is to offer some modest help and a reference for readers who need novel ideas for research or better results in engineering projects.

For more code and Q&A, you are welcome to follow the WeChat official account: Artificial intelligence AI Algorithm engineer

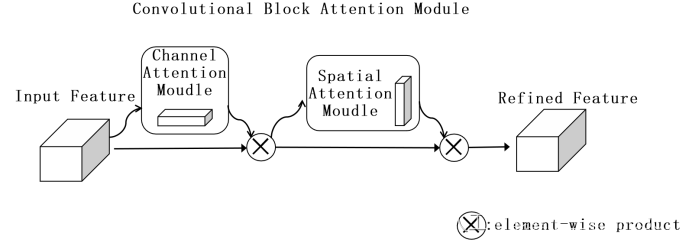

Problem addressed: This article uses CBAM, which combines channel attention and spatial attention, as the example. Attention helps the network focus on the targets to be detected, which can improve detection results and reduce missed detections against complex backgrounds.

How to add it:

Step 1: Decide where to add the module. As a plug-and-play attention module, it can be added almost anywhere in the YOLOX network. This article adds it after the convolution (Conv) blocks as an example.

Step 2: Build the attention modules (SE, ECA, and CBAM) in darknet.py.

    import math

    import torch
    import torch.nn as nn


    # SE (Squeeze-and-Excitation): channel attention from global average pooling
    class SE(nn.Module):
        def __init__(self, channel, ratio=16):
            super(SE, self).__init__()
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.fc = nn.Sequential(
                nn.Linear(channel, channel // ratio, bias=False),
                nn.ReLU(inplace=True),
                nn.Linear(channel // ratio, channel, bias=False),
                nn.Sigmoid()
            )

        def forward(self, x):
            b, c, _, _ = x.size()
            y = self.avg_pool(x).view(b, c)  # squeeze: (b, c, h, w) -> (b, c)
            y = self.fc(y).view(b, c, 1, 1)  # excitation: per-channel weights in (0, 1)
            return x * y

    # ECA (Efficient Channel Attention): a 1D convolution across channels
    # Needs `import math` at the top of darknet.py
    class ECA(nn.Module):
        def __init__(self, channel, b=1, gamma=2):
            super(ECA, self).__init__()
            # Kernel size adapts to the channel count and is forced to be odd
            kernel_size = int(abs((math.log(channel, 2) + b) / gamma))
            kernel_size = kernel_size if kernel_size % 2 else kernel_size + 1
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.conv = nn.Conv1d(1, 1, kernel_size=kernel_size, padding=(kernel_size - 1) // 2, bias=False)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            y = self.avg_pool(x)  # (b, c, 1, 1)
            # (b, c, 1, 1) -> (b, 1, c) for Conv1d, then back to (b, c, 1, 1)
            y = self.conv(y.squeeze(-1).transpose(-1, -2)).transpose(-1, -2).unsqueeze(-1)
            y = self.sigmoid(y)
            return x * y.expand_as(x)
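As a quick check of the adaptive kernel size used in ECA above, the rule can be evaluated for a few channel counts. This is only an illustrative sketch: the helper name `eca_kernel_size` is hypothetical, and it uses `math.log2` instead of `math.log(channel, 2)`, which gives the same result for the inputs shown here.

```python
import math


def eca_kernel_size(channel, b=1, gamma=2):
    """Hypothetical helper mirroring the kernel-size rule in ECA.__init__."""
    k = int(abs((math.log2(channel) + b) / gamma))
    return k if k % 2 else k + 1  # force an odd size so the padding preserves the length


for c in (64, 256, 512, 1024):
    print(c, eca_kernel_size(c))
```

With the defaults b=1 and gamma=2, inputs of 256, 512, and 1024 channels all get a kernel size of 5, so each such ECA module adds only five weights.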

    # Channel attention branch of CBAM: a shared MLP over average- and max-pooled features
    class ChannelAttention(nn.Module):
        def __init__(self, in_planes, ratio=8):
            super(ChannelAttention, self).__init__()
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.max_pool = nn.AdaptiveMaxPool2d(1)
            # Use 1x1 convolutions in place of fully connected layers
            self.fc1 = nn.Conv2d(in_planes, in_planes // ratio, 1, bias=False)
            self.relu1 = nn.ReLU()
            self.fc2 = nn.Conv2d(in_planes // ratio, in_planes, 1, bias=False)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            avg_out = self.fc2(self.relu1(self.fc1(self.avg_pool(x))))
            max_out = self.fc2(self.relu1(self.fc1(self.max_pool(x))))
            out = avg_out + max_out
            return self.sigmoid(out)  # (b, c, 1, 1) channel weights

    # Spatial attention branch of CBAM (needs `import torch` at the top of darknet.py)
    class SpatialAttention(nn.Module):
        def __init__(self, kernel_size=7):
            super(SpatialAttention, self).__init__()
            assert kernel_size in (3, 7), 'kernel size must be 3 or 7'
            padding = 3 if kernel_size == 7 else 1
            self.conv1 = nn.Conv2d(2, 1, kernel_size, padding=padding, bias=False)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            # Pool along the channel axis, then stack the two maps: (b, 2, h, w)
            avg_out = torch.mean(x, dim=1, keepdim=True)
            max_out, _ = torch.max(x, dim=1, keepdim=True)
            x = torch.cat([avg_out, max_out], dim=1)
            x = self.conv1(x)
            return self.sigmoid(x)  # (b, 1, h, w) spatial weights

    # CBAM attention: channel attention followed by spatial attention
    class CBAM(nn.Module):
        def __init__(self, channel, ratio=8, kernel_size=7):
            super(CBAM, self).__init__()
            self.channelattention = ChannelAttention(channel, ratio=ratio)
            self.spatialattention = SpatialAttention(kernel_size=kernel_size)

        def forward(self, x):
            x = x * self.channelattention(x)
            x = x * self.spatialattention(x)
            return x

Step 3: Register the CBAM modules in yolo_pafpn.py.

    # In the PAFPN class's __init__; CBAM must be importable here from darknet.py
    self.cbam_1 = CBAM(int(in_channels[2] * width))  # for the dark5 output (1024 channels before width scaling)
    self.cbam_2 = CBAM(int(in_channels[1] * width))  # for the dark4 output (512 channels before width scaling)
    self.cbam_3 = CBAM(int(in_channels[0] * width))  # for the dark3 output (256 channels before width scaling)
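The channel arguments passed to CBAM above depend on the model's width multiplier. The sketch below works through that arithmetic. It assumes base channel counts of 256, 512, and 1024 for dark3, dark4, and dark5 (as stated in the comments above) and uses width values of 1.0 and 0.5 purely as illustrative settings; the function name is hypothetical.

```python
def cbam_channels(in_channels=(256, 512, 1024), width=1.0, ratio=8):
    """Hypothetical helper: channel count and bottleneck width for each CBAM module."""
    sizes = {}
    for name, base in zip(("dark3", "dark4", "dark5"), in_channels):
        channels = int(base * width)  # same expression as in the registration code
        hidden = channels // ratio    # bottleneck inside ChannelAttention
        sizes[name] = (channels, hidden)
    return sizes


print(cbam_channels(width=1.0))
print(cbam_channels(width=0.5))
```

Because the bottleneck width is `channels // ratio`, a very narrow model needs a ratio no larger than its smallest channel count, otherwise the bottleneck would have zero channels.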

    def forward(self, input):
        """
        Args:
            input: input images.

        Returns:
            Tuple[Tensor]: FPN feature.
        """
        # backbone
        out_features = self.backbone(input)
        features = [out_features[f] for f in self.in_features]
        [x2, x1, x0] = features

        # Apply the attention modules directly to the backbone feature maps
        x0 = self.cbam_1(x0)  # dark5
        x1 = self.cbam_2(x1)  # dark4
        x2 = self.cbam_3(x2)  # dark3

Results: I have run many experiments on multiple datasets. The effect differs from dataset to dataset, and on the same dataset it also depends on where the module is added, so you need to experiment. In most cases it brings an improvement.
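When comparing SE, ECA, and CBAM in such experiments, it can help to know roughly how many weights each module adds. The following is a back-of-the-envelope count derived from the layer definitions in darknet.py above (every layer there uses bias=False). The function names are hypothetical, and the ECA kernel size is computed with `math.log2`.

```python
import math


def se_params(channel, ratio=16):
    # Two bias-free Linear layers: channel -> channel // ratio -> channel
    return 2 * channel * (channel // ratio)


def eca_params(channel, b=1, gamma=2):
    # A single bias-free Conv1d(1, 1, k) has k weights
    k = int(abs((math.log2(channel) + b) / gamma))
    return k if k % 2 else k + 1


def cbam_params(channel, ratio=8, kernel_size=7):
    # Shared 1x1 convolutions (channel attention) plus Conv2d(2, 1, k) (spatial attention)
    return 2 * channel * (channel // ratio) + 2 * kernel_size * kernel_size


for c in (256, 512, 1024):
    print(c, se_params(c), eca_params(c), cbam_params(c))
```

These counts cover parameters only; the effect on accuracy and speed still has to be measured on the dataset at hand.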

PS: CBAM and other attention mechanisms can be added not only to YOLOX but also to other deep learning networks. Whether the task is classification, detection, or segmentation, mainly in computer vision, they may bring improvements of varying degrees.

Finally, I hope we can follow each other, become friends, and learn and exchange ideas together.
