
Visual SLAM Lecture Notes (I): Lecture 1 + Lecture 2

2022-07-27 10:01:00 【yfy2022yfy】

2019/08/08

The first lecture is an overview of the book's content; the second is an overview of SLAM.

Lecture 1: Preface

The book is divided into two main parts:

1. The first part covers the mathematical foundations. In a simple, accessible way, it lays the groundwork in the mathematics related to visual SLAM, including:

- Lecture 1 is the preface. It introduces the basic information about the book, and its exercises are mainly self-test questions.

- Lecture 2 is an overview of the SLAM system. It describes which modules a SLAM system consists of and what each module does. The practice part covers setting up the programming environment and using an IDE.

- Lecture 3 introduces rigid-body motion in three-dimensional space. You will work with rotation matrices, quaternions and Euler angles, and use them in Eigen.

- Lecture 4 covers Lie groups and Lie algebras. It doesn't matter if you don't yet know what a Lie algebra is. You will learn the definition and use of Lie algebras, and then manipulate them with Sophus.

- Lecture 5 introduces the pinhole camera model and how images are represented in a computer. You will use OpenCV to work with the camera's intrinsic and extrinsic parameters.

- Lecture 6 introduces nonlinear optimization, including the theoretical basis of state estimation, the least-squares problem and gradient descent methods. You will complete a curve-fitting experiment using Ceres and g2o.

That is all the mathematics we need, assuming you have already studied advanced mathematics and linear algebra. I promise none of it will look hard. If you want to dig deeper, we provide reference material for further reading, which may be somewhat more difficult than the main text.

2. The second part covers SLAM techniques. Using the theory from the first part, we explain how each module of visual SLAM works.

- Lecture 7 covers feature-based visual odometry. There is a lot to cover, including feature extraction and matching, epipolar geometry constraints, PnP and ICP. In the practice part, you will use these methods to estimate the motion between two images.

- Lecture 8 covers direct-method visual odometry. You will learn the principles of optical flow and the direct method, and then use g2o to implement a simple RGB-D direct method.

- Lecture 9 is the practice chapter on visual odometry. You will build a visual odometry framework and combine the knowledge learned so far to implement its basic functions. Along the way you will encounter issues such as why optimization is necessary and how to select keyframes.

- Lecture 10 covers back-end optimization, mainly an in-depth discussion of bundle adjustment (BA), including basic BA and how to exploit sparsity to speed up the solution. You will write a BA program with Ceres and another with g2o.

- Lecture 11 focuses on pose graphs in back-end optimization. A pose graph is a more compact way of expressing the constraints between keyframes. You will use g2o and gtsam to optimize a sphere-shaped pose graph.

- Lecture 12 covers loop detection, mainly the bag-of-words approach. You will use DBoW3 to write a dictionary-training program and a loop-detection program.

- Lecture 13 covers map building. We discuss how to estimate a dense depth map with a monocular camera (and how unreliable that is), and then the dense mapping process with RGB-D. You will write epipolar search and block-matching programs, and then build point-cloud maps and octree maps from RGB-D data.

- Lecture 14 introduces current open-source SLAM projects and future directions. After reading the earlier material, you should find it easier to understand how they work and to realize your own new ideas.

Finally, if you can't understand any of the above, congratulations! This book is exactly right for you. Keep going!

All of the book's source code is hosted on GitHub: https://github.com/gaoxiang12/slambook

Lecture 2: Getting to Know SLAM

2.1 Cameras in SLAM

The cameras used in SLAM fall into three categories: monocular (Monocular), stereo or binocular (Stereo), and depth cameras (RGB-D). There are also special or emerging types, such as panoramic cameras and event cameras. They occasionally appear in SLAM applications but have not become mainstream so far. The drawbacks of the three main camera types are:

- Monocular camera: depth can only be computed after the camera has translated, and the true scale cannot be determined.

- Stereo and multi-camera rigs: configuration and calibration are complex. The depth range and accuracy are limited by the stereo baseline and the image resolution. Computing disparity is also expensive, so GPU or FPGA acceleration is needed to output distance information for the whole image in real time. Under current conditions, computational cost is therefore one of the main problems of stereo cameras.

- RGB-D camera: narrow measurement range, high noise, small field of view, susceptibility to sunlight, and inability to measure transmissive (transparent) materials. In SLAM, RGB-D cameras are mainly used indoors; outdoor applications are more difficult.
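The stereo limitation above can be made concrete with the standard rectified-stereo relation $z = f b / d$. Here $f$ is the focal length in pixels, $b$ the baseline and $d$ the disparity; the notes themselves do not derive this relation. The sketch below uses hypothetical camera numbers. It shows why a fixed one-pixel disparity error costs far more depth accuracy for distant points, and why a longer baseline or a higher resolution (a larger $f$ in pixels) helps.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero disparity means infinitely far)")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: |dz/dd| * delta_d = z**2 / (f * b) * delta_d."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f, b = 500.0, 0.12  # hypothetical focal length (pixels) and baseline (metres)
    for d in (60.0, 6.0, 1.0):
        z = stereo_depth(f, b, d)
        print(f"disparity {d:4.0f} px -> depth {z:5.1f} m, "
              f"+/- {depth_error(f, b, z):5.2f} m per pixel of disparity error")
```

With these numbers, a point 60 m away has a disparity of only one pixel, so a one-pixel matching error is as large as the depth itself.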

2.2 The Classic Visual SLAM Framework

We divide the whole visual SLAM process into the following steps:

- Sensor data reading. In visual SLAM, this mainly means reading and preprocessing camera images. On a robot, it may also include reading and synchronizing data from wheel encoders, inertial sensors and so on.

- Visual odometry (Visual Odometry, VO). VO estimates the camera motion between adjacent images, together with a local map. VO is also called the front end (Front End).

- Back-end optimization (Optimization). The back end receives the camera poses estimated by visual odometry at different times, together with loop-detection information, and optimizes them to obtain a globally consistent trajectory and map. Because it comes after VO, it is also called the back end (Back End).

- Loop detection (Loop Closing). Loop detection determines whether the robot has returned to a previously visited position. If a loop is detected, this information is passed to the back end for processing.

- Mapping (Mapping). Based on the estimated trajectory, build a map that suits the task requirements.

The classic visual SLAM framework summarizes more than a decade of work by researchers. The framework itself, and the algorithms it contains, are essentially settled and are provided in many vision and robotics libraries. Using these algorithms, we can build a visual SLAM system that localizes and maps in real time in a normal working environment. We can therefore say that if the working environment is limited to static, rigid scenes with no obvious lighting changes and no human interference, this SLAM system is quite mature.

2.2.1 Visual Odometry

Visual odometry is concerned with the camera motion between adjacent images. The simplest case is the motion between two images.

VO estimates camera motion from adjacent frames and recovers the spatial structure of the scene. It is called an “odometer” because, like a real odometer, it only computes the motion between adjacent moments and does not use information from further in the past.

Suppose we have visual odometry that estimates the camera motion between two images. Then, by “stringing together” the motions between adjacent moments, we obtain the robot's trajectory, which solves the localization problem. In addition, from the camera pose at each moment, we can compute the spatial position of the point corresponding to each pixel, which gives us the map.
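“Stringing together” relative motions is pose composition. The following is a minimal sketch under two assumptions. First, motion is planar, so the pose is 2D $(x, y, \theta)$ rather than the full 3D rigid-body motion of Lectures 3 and 4. Second, the VO output for each frame pair is already available as a relative motion $(\Delta x, \Delta y, \Delta\theta)$ expressed in the previous camera frame.

```python
import math
from typing import List, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, theta); world pose or relative motion


def compose(pose: Pose2D, rel: Pose2D) -> Pose2D:
    """Apply a relative motion expressed in the robot's own frame to a world pose."""
    x, y, th = pose
    dx, dy, dth = rel
    c, s = math.cos(th), math.sin(th)
    new_th = math.atan2(math.sin(th + dth), math.cos(th + dth))  # wrap to (-pi, pi]
    return (x + c * dx - s * dy, y + s * dx + c * dy, new_th)


def chain(relative_motions: List[Pose2D], start: Pose2D = (0.0, 0.0, 0.0)) -> List[Pose2D]:
    """String frame-to-frame motion estimates together into a trajectory."""
    trajectory = [start]
    for rel in relative_motions:
        trajectory.append(compose(trajectory[-1], rel))
    return trajectory


if __name__ == "__main__":
    # Move 1 m forward, then turn left by 90 degrees; repeating this 4 times traces a square.
    square = [(1.0, 0.0, math.pi / 2)] * 4
    for p in chain(square):
        print(tuple(round(v, 3) for v in p))
```

The chained trajectory visits the corners of a 1 m square and returns to the origin, up to floating-point rounding.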

However, if only visual odometry is used to estimate the trajectory, accumulating drift (Accumulating Drift) is inevitable. This is because visual odometry (in the simplest case) only estimates the motion between two images. Every estimate has some error, and because of the way odometry works, the error at one moment is carried into the next. After a while, the estimated trajectory is no longer accurate.
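To see why the error keeps growing, the sketch below keeps the same planar assumption. It integrates a straight path of 1 m per frame in which every relative estimate carries the same small heading error; the 0.5° per-frame figure is hypothetical. No step's error is ever corrected, and each one bends every step that follows, so the endpoint error keeps growing with the number of frames.

```python
import math


def dead_reckon(steps: int, step_len: float, yaw_err_per_step: float):
    """Chain `steps` straight-line relative motions of length `step_len`, each carrying
    the same heading error, and return the final estimated (x, y).
    The ground truth is a straight line ending at (steps * step_len, 0)."""
    x = y = heading = 0.0
    for _ in range(steps):
        heading += yaw_err_per_step         # this step's error is never corrected...
        x += step_len * math.cos(heading)   # ...and bends every later step as well
        y += step_len * math.sin(heading)
    return x, y


def endpoint_error(steps: int, step_len: float, yaw_err_per_step: float) -> float:
    """Distance between the dead-reckoned endpoint and the true endpoint."""
    x, y = dead_reckon(steps, step_len, yaw_err_per_step)
    return math.hypot(x - steps * step_len, y)


if __name__ == "__main__":
    err = math.radians(0.5)  # hypothetical heading error per frame
    for n in (10, 100, 200):
        print(f"{n:4d} frames: endpoint error {endpoint_error(n, 1.0, err):7.2f} m")
```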

Figure: map and localization after back-end optimization and loop detection (right).

This drift (Drift) makes it impossible to build a consistent map. A straight corridor becomes slanted, and a 90-degree corner becomes crooked, which is truly intolerable! To solve the drift problem, we need two more techniques: back-end optimization and loop detection. Loop detection detects that “the robot has returned to its original position”, and back-end optimization uses this information to correct the shape of the whole trajectory.
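A toy 1D pose graph shows how the back end “corrects the shape of the whole trajectory”. This is a minimal sketch, not the g2o/gtsam machinery of Lectures 10 and 11. Poses are scalars along a corridor. Every odometry edge over-estimates the displacement by a hypothetical 0.1 m. A single loop-closure edge states that the last pose is the same place as the first. Minimizing the weighted sum of squared edge residuals with $x_0$ fixed is then a linear least-squares problem, solved here through its normal equations. Real pose graphs are nonlinear and live on 3D poses.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float, float]  # (i, j, measured x_j - x_i, weight)


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting; adequate for tiny dense systems."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_1d_pose_graph(num_poses: int, edges: List[Edge]) -> List[float]:
    """Minimise sum(w * (x_j - x_i - z)**2) over x_1..x_{n-1}, holding x_0 = 0."""
    n = num_poses - 1                  # number of free poses
    H = [[0.0] * n for _ in range(n)]  # J^T W J
    g = [0.0] * n                      # J^T W z
    for i, j, z, w in edges:
        terms = ((i, -1.0), (j, 1.0))  # Jacobian of the residual x_j - x_i - z
        for p, jp in terms:
            if p == 0:
                continue  # x_0 is fixed, which removes the global offset ambiguity
            g[p - 1] += w * jp * z
            for q, jq in terms:
                if q != 0:
                    H[p - 1][q - 1] += w * jp * jq
    return [0.0] + solve_linear(H, g)


if __name__ == "__main__":
    # True path along a corridor: 0 -> 1 -> 2 -> 1 -> 0 (out and back).
    # Each odometry measurement is the true displacement + 0.1, so dead reckoning ends at 0.4.
    odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
    loop = [(0, 4, 0.0, 100.0)]  # loop detection: "pose 4 is the same place as pose 0"
    print([round(v, 3) for v in optimize_1d_pose_graph(5, odometry + loop)])
```

The loop edge is given a much larger (hypothetical) weight than the odometry edges. The optimizer therefore spreads the 0.4 m inconsistency almost evenly over the four odometry edges. It returns poses close to the true 0, 1, 2, 1, 0, instead of the dead-reckoning result 0, 1.1, 2.2, 1.3, 0.4.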

2.2.2 Back-End Optimization

Back-end optimization mainly deals with noise in the SLAM process.

Back-end optimization asks how to estimate the state of the whole system from this noisy data, and how uncertain that estimate is. This is called maximum a posteriori estimation (Maximum-a-Posteriori, MAP). The state here includes both the robot's own trajectory and the map.
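MAP estimation can be written out with the symbols introduced in section 2.3: $x$ for the trajectory, $y$ for the landmarks, $u$ for the motion inputs and $z$ for the observations. It applies Bayes' rule and keeps the state that maximizes the posterior. The normalizing denominator does not depend on the state and can be dropped:

$$P(x, y \mid z, u) = \frac{P(z, u \mid x, y)\, P(x, y)}{P(z, u)} \propto P(z, u \mid x, y)\, P(x, y)$$

$$(x, y)^*_{\mathrm{MAP}} = \arg\max_{x, y}\; P(z, u \mid x, y)\, P(x, y)$$

If no prior is available, this reduces to maximum-likelihood estimation. When the noise is Gaussian, maximizing the likelihood is equivalent to minimizing a sum of squared, covariance-weighted errors. This is why optimization-based back ends are usually posed as nonlinear least-squares problems.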

In visual SLAM, the front end is more closely related to computer vision, for example image feature extraction and matching. The back end consists mainly of filtering and nonlinear optimization algorithms.

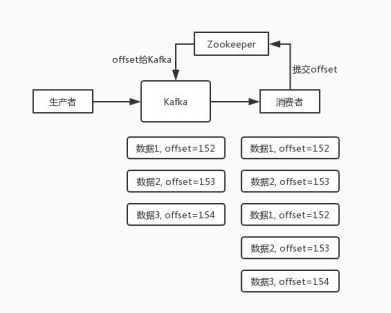

2.2.3 Loop Detection

Loop detection, also known as loop closure detection (Loop Closure Detection), mainly addresses the problem of position estimates drifting over time.

To achieve loop detection, the robot must be able to recognize scenes it has visited before. We can do this by judging the similarity between images. A successful loop detection can significantly reduce the accumulated error. Visual loop detection is therefore essentially an algorithm for computing the similarity of image data. Once a loop is detected, we pass the information “A and B are the same point” to the back-end optimization algorithm. The back end then uses this new information to adjust the trajectory and map so that they agree with the loop-detection result. With enough correct loop detections, we can eliminate the accumulated error and obtain a globally consistent trajectory and map.
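As a rough illustration of “computing the similarity of image data”, the sketch below scores images by the cosine similarity of their bag-of-words histograms (the word-bag approach of Lecture 12). It is a toy resting on several assumptions. The visual-word IDs are made up, and there is no vocabulary training or TF-IDF weighting. The threshold is a hypothetical choice. So is the rule of skipping the most recent keyframes, which always look similar to the current frame. None of this is DBoW3's actual scoring.

```python
import math
from collections import Counter
from typing import Iterable, List, Optional


def bow_vector(word_ids: Iterable[int]) -> Counter:
    """Histogram of visual-word IDs observed in one image (its 'bag of words')."""
    return Counter(word_ids)


def cosine_similarity(a: Counter, b: Counter) -> float:
    """1.0 for identical word histograms, 0.0 when no word is shared."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def detect_loop(current: Counter, keyframes: List[Counter],
                threshold: float = 0.8, skip_recent: int = 2) -> Optional[int]:
    """Index of the most similar older keyframe if its score reaches `threshold`, else None.
    The newest `skip_recent` keyframes are excluded because neighbouring frames always look alike."""
    candidates = keyframes[: max(0, len(keyframes) - skip_recent)]
    best_idx, best_score = None, threshold
    for idx, kf in enumerate(candidates):
        score = cosine_similarity(current, kf)
        if score >= best_score:
            best_idx, best_score = idx, score
    return best_idx


if __name__ == "__main__":
    # Hypothetical visual-word IDs, as a feature extractor plus vocabulary might produce.
    history = [bow_vector([1, 2, 2, 3, 7]),  # keyframe 0: place A
               bow_vector([4, 5, 5, 6]),
               bow_vector([8, 9, 9]),
               bow_vector([10, 11])]
    now = bow_vector([1, 2, 2, 3, 3])        # the robot is back at place A
    print(detect_loop(now, history))         # 0
```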

2.2.4 Mapping

Mapping (Mapping) is the process of building a map. A map is a description of the environment, but the form of that description is not fixed; it depends on the SLAM application.

A set of spatial points can be called a map. A beautiful 3D model is also a map, and so is a picture marking cities, villages, railways and rivers. The form of the map depends on the SLAM application. In general, maps can be divided into two kinds: metric maps and topological maps.

Metric Map

Metric maps emphasize accurately representing the positions of objects in the map relative to one another. They are usually classified as sparse (Sparse) or dense (Dense).

- Sparse maps involve a degree of abstraction and do not need to represent every object. For example, we select some representative objects and call them landmarks (Landmark). A sparse map is then a map made up of landmarks, and everything that is not a landmark can be ignored.

- Dense maps, by contrast, aim to model everything that is seen.

For localization, a sparse landmark map is sufficient. For navigation, we usually need a dense map (otherwise, what if the robot hits a wall between two landmarks?).

Dense maps usually consist of many small blocks at some resolution: small grid cells (Grid) for a two-dimensional metric map, and small cubes (Voxel) for a three-dimensional one. Typically, each block has one of three states (occupied, free or unknown), expressing whether there is an object in that cell. When we query a spatial location, the map can tell us whether that location is traversable. Such maps can be used by many navigation algorithms, such as A* and D*, and are valued by robotics researchers. However, this kind of map has to store the state of every cell, which consumes a lot of storage space, and in most cases many of the map's details are useless. Large-scale metric maps also sometimes suffer from consistency problems. A small heading error can cause the walls of two rooms to overlap, making the map invalid.
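The following is a minimal occupancy-grid sketch, assuming a 2D map at a fixed resolution. The class name, the 5 cm cell size and the decision to treat unknown cells as non-traversable are illustrative choices, not taken from the notes. The grid stores one of the three states per cell and answers the navigation query “can I pass through this point?”. The last line of the example shows the storage cost, which grows with area in 2D and with volume in 3D.

```python
import math
from enum import Enum
from typing import List, Tuple


class Cell(Enum):
    UNKNOWN = 0
    FREE = 1
    OCCUPIED = 2


class OccupancyGrid2D:
    """Dense 2D metric map: the plane is cut into square cells of `resolution` metres."""

    def __init__(self, width_m: float, height_m: float, resolution: float):
        self.resolution = resolution
        self.cols = int(round(width_m / resolution))
        self.rows = int(round(height_m / resolution))
        # Every cell starts as UNKNOWN until a sensor observes it.
        self.cells: List[List[Cell]] = [[Cell.UNKNOWN] * self.cols for _ in range(self.rows)]

    def to_index(self, x: float, y: float) -> Tuple[int, int]:
        r, c = math.floor(y / self.resolution), math.floor(x / self.resolution)
        if not (0 <= r < self.rows and 0 <= c < self.cols):
            raise IndexError(f"point ({x}, {y}) lies outside the map")
        return r, c

    def set(self, x: float, y: float, state: Cell) -> None:
        r, c = self.to_index(x, y)
        self.cells[r][c] = state

    def is_traversable(self, x: float, y: float) -> bool:
        """Conservative query for a planner such as A*: only FREE cells are passable."""
        r, c = self.to_index(x, y)
        return self.cells[r][c] is Cell.FREE


if __name__ == "__main__":
    grid = OccupancyGrid2D(10.0, 10.0, 0.05)  # hypothetical 10 m x 10 m room, 5 cm cells
    grid.set(1.0, 1.0, Cell.FREE)
    grid.set(2.0, 1.0, Cell.OCCUPIED)
    print(grid.is_traversable(1.0, 1.0), grid.is_traversable(2.0, 1.0),
          grid.is_traversable(5.0, 5.0))  # True False False
    # Storage: a 100 m x 100 m x 10 m volume at 5 cm (20 cells per metre) needs
    voxels = (100 * 20) * (100 * 20) * (10 * 20)
    print(f"{voxels:,} voxels")  # 800,000,000 voxels
```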

Topological Map

Where metric maps emphasize accuracy, topological maps emphasize the relationships between map elements.

A topological map is a graph (Graph) made up of nodes and edges. It only considers connectivity between nodes. For example, it records that points A and B are connected, without considering how to get from A to B. It relaxes the need for precise positions and removes map details, which makes it a more compact representation. However, topological maps are not good at representing maps with complex structure. How to segment a map into nodes and edges, and how to use topological maps for navigation and path planning, are still open research problems.
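Because a topological map keeps only connectivity, it can be stored as an adjacency list and searched with ordinary graph algorithms. The following is a minimal sketch with a hypothetical indoor map. Breadth-first search returns the route with the fewest edges. It tells us which places to pass through but, as the text notes, nothing about how to move between them.

```python
from collections import deque
from typing import Dict, List, Optional


def fewest_hops_route(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search over a topological map given as an adjacency list.
    Returns the node sequence with the fewest edges, or None if goal is unreachable."""
    parent: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route: List[str] = []
            cur: Optional[str] = node
            while cur is not None:  # walk back to the start through recorded parents
                route.append(cur)
                cur = parent[cur]
            return route[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in parent:  # first visit is the shortest in hops
                parent[neighbour] = node
                queue.append(neighbour)
    return None


if __name__ == "__main__":
    building = {  # hypothetical places and their connections
        "lobby": ["corridor"],
        "corridor": ["lobby", "lab", "office"],
        "lab": ["corridor"],
        "office": ["corridor"],
        "garage": [],
    }
    print(fewest_hops_route(building, "lobby", "office"))  # ['lobby', 'corridor', 'office']
    print(fewest_hops_route(building, "lobby", "garage"))  # None
```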

2.3 Mathematical Formulation of the SLAM Problem

The previous sections gave us an intuitive picture of the modules that make up SLAM and what each one does. Now we need to make this rigorous, that is, to describe the SLAM process in mathematical language.

Suppose the robot (carrying some kind of sensor) moves in an unknown environment. Let $k = 1, \dots, K$ index discrete moments, and let $x_k$ denote the robot's position at time $k$. The positions $x_1, \dots, x_K$ form the robot's trajectory.

The map is made up of many landmarks (Landmark). At each moment, the sensor measures some of the landmark points and obtains observations of them. Suppose there are $N$ landmarks in total, denoted $y_1, \dots, y_N$.

In this setting, “the robot moves through the environment carrying a sensor” is described by two things:

- Motion: how does the robot's position change from time $k-1$ to time $k$?

- Observation: if the robot, at position $x_k$ at time $k$, detects landmark $y_j$, how is this described in mathematical language?

The motion equation relates the position at the previous moment, $x_{k-1}$, to the position at the current moment, $x_k$:

$$x_k = f(x_{k-1}, u_k, w_k)$$

where $u_k$ is the reading of the motion sensor (also called the input) and $w_k$ is noise. This is called the motion equation.
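The abstract $f$ becomes concrete only once a robot and sensor are chosen. One simple instance assumes a robot moving in a plane with pose $x_k = (x, y, \theta)$. If its motion sensor directly measures the increments $u_k = (\Delta x, \Delta y, \Delta\theta)$, the model is additive: $x_k = x_{k-1} + u_k + w_k$. The sketch below samples this model with zero-mean Gaussian noise $w_k$; the noise levels are hypothetical.

```python
import random
from typing import Optional, Sequence, Tuple

State = Tuple[float, float, float]  # planar pose (x, y, theta)


def motion_model(prev: State, u: Sequence[float],
                 noise_std: Sequence[float] = (0.0, 0.0, 0.0),
                 rng: Optional[random.Random] = None) -> State:
    """Additive planar instance of x_k = f(x_{k-1}, u_k, w_k): x_k = x_{k-1} + u_k + w_k,
    with each component of w_k drawn from a zero-mean Gaussian."""
    rng = rng or random.Random()
    w = [rng.gauss(0.0, s) if s > 0 else 0.0 for s in noise_std]
    x, y, theta = (p + du + dw for p, du, dw in zip(prev, u, w))
    return (x, y, theta)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    pose: State = (0.0, 0.0, 0.0)
    for u in [(1.0, 0.0, 0.0), (1.0, 0.5, 0.1)]:
        pose = motion_model(pose, u, noise_std=(0.02, 0.02, 0.01), rng=rng)
        print(tuple(round(v, 3) for v in pose))
```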

The observation equation: when the robot at position $x_k$ sees landmark $y_j$, it produces an observation $z_{k,j}$. An abstract function $h$ describes this relationship:

$$z_{k,j} = h(y_j, x_k, v_{k,j})$$

where $v_{k,j}$ is the observation noise.
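The same applies to $h$. For illustration, assume a planar robot carrying a range-bearing sensor such as a 2D laser scanner. The observation $z_{k,j} = (r, \phi)$ of landmark $y_j = (p_x, p_y)$ from pose $x_k = (x, y, \theta)$ is then the distance to the landmark and its direction. Here, by assumption, the bearing is measured relative to the robot's heading, and $v_{k,j}$ is zero-mean Gaussian.

```python
import math
import random
from typing import Optional, Tuple


def observation_model(pose: Tuple[float, float, float], landmark: Tuple[float, float],
                      noise_std: Tuple[float, float] = (0.0, 0.0),
                      rng: Optional[random.Random] = None) -> Tuple[float, float]:
    """Range-bearing instance of z_{k,j} = h(y_j, x_k, v_{k,j}).
    Returns (range r, bearing phi), with phi measured from the robot's heading."""
    rng = rng or random.Random()
    x, y, theta = pose
    px, py = landmark
    r = math.hypot(px - x, py - y)
    phi = math.atan2(py - y, px - x) - theta
    phi = math.atan2(math.sin(phi), math.cos(phi))  # wrap to (-pi, pi]
    v_r = rng.gauss(0.0, noise_std[0]) if noise_std[0] > 0 else 0.0
    v_phi = rng.gauss(0.0, noise_std[1]) if noise_std[1] > 0 else 0.0
    return r + v_r, phi + v_phi


if __name__ == "__main__":
    # Robot at the origin facing +x; landmark at (3, 4): range 5 m, bearing about 0.927 rad.
    print(observation_model((0.0, 0.0, 0.0), (3.0, 4.0)))
```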

These two equations describe the most basic SLAM problem. Given the motion measurements $u$ and the sensor readings $z$, solve the localization problem (estimate $x$) and the mapping problem (estimate $y$). This models SLAM as a state estimation problem: how can we estimate internal, hidden state variables from noisy measurement data?

Systems are classified by whether the motion and observation equations are linear and whether the noise is Gaussian, giving linear/nonlinear and Gaussian/non-Gaussian systems. The linear Gaussian system (Linear Gaussian, LG system) is the simplest. Its unbiased optimal estimate is given by the Kalman filter (Kalman Filter, KF). For complex nonlinear non-Gaussian systems (Non-Linear Non-Gaussian, NLNG system), we use two kinds of methods: the extended Kalman filter (Extended Kalman Filter, EKF) and nonlinear optimization.
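In the linear Gaussian case, the Kalman filter alternates a prediction through the motion equation with a correction from the observation. The following is a minimal scalar sketch. It assumes the simplest possible instances of the two equations: $x_k = x_{k-1} + u_k + w_k$ with $w_k \sim N(0, q)$, and $z_k = x_k + v_k$ with $v_k \sim N(0, r)$. There is one state and one measurement per step, and no landmarks.

```python
import random
from typing import Iterable, List, Tuple


def kalman_1d(x0: float, p0: float, inputs: Iterable[float], measurements: Iterable[float],
              q: float, r: float) -> List[Tuple[float, float]]:
    """Scalar Kalman filter; returns the posterior (mean, variance) after each step."""
    x, p = x0, p0
    history = []
    for u, z in zip(inputs, measurements):
        # Predict with the motion equation: the mean moves by u, the variance grows by q.
        x_pred, p_pred = x + u, p + q
        # Correct with the observation: the gain weighs the prediction against the measurement.
        gain = p_pred / (p_pred + r)
        x = x_pred + gain * (z - x_pred)
        p = (1.0 - gain) * p_pred
        history.append((x, p))
    return history


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a reproducible simulation
    truth, us, zs = 0.0, [], []
    for _ in range(20):
        truth += 1.0 + rng.gauss(0.0, 0.1)      # true motion, process std 0.1 (q = 0.01)
        us.append(1.0)                           # measured increment (the input u_k)
        zs.append(truth + rng.gauss(0.0, 0.5))   # noisy position reading, std 0.5 (r = 0.25)
    mean, var = kalman_1d(0.0, 1.0, us, zs, q=0.01, r=0.25)[-1]
    print(f"final estimate {mean:.2f} (truth {truth:.2f}), variance {var:.4f}")
```

Note that the variance sequence does not depend on the data at all. It settles to a steady value determined only by $q$ and $r$.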

The earliest real-time visual SLAM systems were built on the EKF. Later, to overcome the EKF's shortcomings (such as linearization error and the assumption of Gaussian noise), researchers began to use other filters such as the particle filter (Particle Filter), and even nonlinear optimization methods. Today, mainstream visual SLAM performs state estimation with optimization techniques, of which graph optimization (Graph Optimization) is the representative example. We believe optimization techniques are now clearly superior to filtering. As long as computing resources allow, we usually prefer optimization methods (see Lectures 10 and 11).
