
PyTorch: Distributed Model Training

2022-08-01 13:52:00 【CyrusMay】

1. Data Parallelism

1.1 Single machine, single GPU

import os

# Use only one GPU. This must happen before CUDA is initialized,
# so set it before importing torch (it is ignored once CUDA is in use).
# Alternatively, set it in the terminal: CUDA_VISIBLE_DEVICES="0" python train.py
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import torch
from torch import nn
import torch.nn.functional as F

model = nn.Sequential(nn.Linear(in_features=10, out_features=20),
                      nn.ReLU(),
                      nn.Linear(in_features=20, out_features=2),
                      nn.Sigmoid())

data = torch.rand([100, 10])

print(torch.cuda.is_available())

# Select the device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Copy the model to the device
model.to(device)

# Copy the data to the device
data = data.to(device)

# Create the optimizer after the model has been moved
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# Save the model
torch.save({
    "model_state_dict": model.state_dict(),
    "optimizer_state_dict": optimizer.state_dict()}, "./model")

# Load the model
checkpoint = torch.load("./model", map_location=device)
model.load_state_dict(checkpoint["model_state_dict"])
optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
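The code above moves the model and data to the device and round-trips a checkpoint, but it never trains. A minimal sketch of a training loop followed by resuming from the checkpoint might look like this. The random `target` tensor, the `MSELoss` choice, the hypothetical `build_model` helper, the extra `epoch` key and the temporary checkpoint path are assumptions for illustration; the sketch falls back to the CPU when no GPU is present.

```python
import os
import tempfile

import torch
from torch import nn


def build_model():
    # Hypothetical helper: same architecture as in the article
    return nn.Sequential(nn.Linear(10, 20), nn.ReLU(),
                         nn.Linear(20, 2), nn.Sigmoid())


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
torch.manual_seed(0)

# Move the model first, then build the optimizer over its parameters
model = build_model().to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

data = torch.rand([100, 10], device=device)
target = torch.rand([100, 2], device=device)  # hypothetical labels (assumption)
loss_fn = nn.MSELoss()

num_epochs = 5
for epoch in range(num_epochs):
    optimizer.zero_grad()
    loss = loss_fn(model(data), target)
    loss.backward()
    optimizer.step()

# Also store the epoch counter so training can resume where it stopped
ckpt_path = os.path.join(tempfile.gettempdir(), "single_gpu_ckpt.pt")
torch.save({"epoch": epoch,
            "model_state_dict": model.state_dict(),
            "optimizer_state_dict": optimizer.state_dict()}, ckpt_path)

# Resume into freshly constructed objects
restored = build_model().to(device)
restored_optimizer = torch.optim.Adam(restored.parameters(), lr=0.001)
checkpoint = torch.load(ckpt_path, map_location=device)
restored.load_state_dict(checkpoint["model_state_dict"])
restored_optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
start_epoch = checkpoint["epoch"] + 1  # next epoch to run
print(start_epoch)  # 5
```

Saving the optimizer state alongside the weights matters for Adam, whose running moment estimates would otherwise restart from zero after a resume.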

1.2 Single machine, multiple GPUs

Code

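import os

import torch
import torch.distributed as dist
import torch.nn.functional as F
from torch import nn

# Index of the GPU used by this process (torchrun sets LOCAL_RANK)
local_rank = int(os.environ["LOCAL_RANK"])
torch.cuda.set_device(local_rank)
device = torch.device("cuda", local_rank)

dataset = torch.rand([1000, 10])

# The model must be on this process's GPU before it is wrapped in DDP
model = nn.Sequential(
    nn.Linear(10, 20),
    nn.ReLU(),
    nn.Linear(20, 2),
    nn.Sigmoid()
).to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# Number of GPUs on this machine
n_gpus = torch.cuda.device_count()

# Initialize the process group; backend is the communication library (NCCL for GPUs)
dist.init_process_group(backend="nccl", init_method="env://")

# Wrap the model in DistributedDataParallel
model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[local_rank], output_device=local_rank)

# Distributed sampler: each process reads a different shard of the dataset
sampler = torch.utils.data.distributed.DistributedSampler(dataset)

# Build the dataloader; BATCH_SIZE is the per-process batch size
BATCH_SIZE = 128
dataloader = torch.utils.data.DataLoader(dataset=dataset,
                                         batch_size=BATCH_SIZE,
                                         num_workers=8,
                                         sampler=sampler)

for epoch in range(1000):
    # Call before iterating so that every epoch is shuffled differently
    sampler.set_epoch(epoch)
    for x in dataloader:
        x = x.to(device)
        loss = model(x).mean()  # placeholder loss: the example data has no labels
        optimizer.zero_grad()
        loss.backward()  # DDP averages gradients across processes here
        optimizer.step()

if local_rank == 0:
    # Save the model from one process only, unwrapping DDP via .module
    torch.save({
        "model_state_dict": model.module.state_dict()
    }, "./model")
# Wait until the checkpoint has been written before any process reads it
dist.barrier()

# Load the model; the saved keys have no "module." prefix, so load into model.module
checkpoint = torch.load("./model", map_location=device)
model.module.load_state_dict(checkpoint["model_state_dict"])

dist.destroy_process_group()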
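Why save `model.module.state_dict()` rather than `model.state_dict()`? `DistributedDataParallel` keeps the wrapped network in its `module` attribute, so every key in the wrapper's own state dict carries a `module.` prefix. The sketch below makes this visible without GPUs by creating a one-process group with the CPU `gloo` backend and a temporary `file://` rendezvous; both are assumptions for demonstration only, not part of the article's NCCL setup, and `build_model` is a hypothetical helper.

```python
import os
import tempfile

import torch.distributed as dist
from torch import nn
from torch.nn.parallel import DistributedDataParallel as DDP

# One-process group on the CPU, only so that DDP can be constructed
init_file = os.path.join(tempfile.mkdtemp(), "pg_init")
dist.init_process_group(backend="gloo", init_method=f"file://{init_file}",
                        rank=0, world_size=1)


def build_model():
    # Hypothetical helper: same architecture as in the article
    return nn.Sequential(nn.Linear(10, 20), nn.ReLU(),
                         nn.Linear(20, 2), nn.Sigmoid())


ddp_model = DDP(build_model())  # CPU module: no device_ids

wrapped_keys = list(ddp_model.state_dict().keys())
inner_keys = list(ddp_model.module.state_dict().keys())
print(wrapped_keys[0])  # module.0.weight
print(inner_keys[0])    # 0.weight

# A checkpoint taken from .module loads into a plain, unwrapped model,
# e.g. for later single-GPU inference
plain_model = build_model()
plain_model.load_state_dict(ddp_model.module.state_dict())

dist.destroy_process_group()
```

Saving the unwrapped module keeps checkpoints portable: the same file loads into a bare `nn.Sequential` or, through `.module`, into a DDP wrapper.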

Launch the job from the terminal, replacing n_gpus with the number of GPUs on the machine:

torchrun --nproc_per_node=n_gpus train.py
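torchrun starts one process per GPU, and `DistributedSampler` hands each process a disjoint shard of the dataset. Because the sampler also accepts `num_replicas` and `rank` explicitly, its behavior can be checked in a single process without a process group. The sketch below pretends there are two processes (`world_size = 2` is an assumption) and shows why `set_epoch` has to be called at the start of every epoch; `indices_for` is a hypothetical helper.

```python
import math

import torch
from torch.utils.data import DataLoader
from torch.utils.data.distributed import DistributedSampler

dataset = torch.rand([1000, 10])
world_size = 2    # pretend torchrun started two processes
BATCH_SIZE = 128  # per-process batch size, as in the article


def indices_for(rank, epoch):
    # The sample indices process `rank` would see in a given epoch
    sampler = DistributedSampler(dataset, num_replicas=world_size,
                                 rank=rank, shuffle=True, seed=0)
    sampler.set_epoch(epoch)  # the shuffle is seeded with seed + epoch
    return list(sampler)


rank0, rank1 = indices_for(0, epoch=0), indices_for(1, epoch=0)
print(len(rank0), len(rank1))        # 500 500
print(set(rank0).isdisjoint(rank1))  # True: no sample is read twice
print(sorted(rank0 + rank1) == list(range(1000)))  # True: together they cover the dataset

# A different epoch number gives a different order; without set_epoch
# every epoch would repeat the epoch-0 order
epoch1 = indices_for(0, epoch=1)
print("epoch 1 order differs:", epoch1 != rank0)

# Each process runs ceil(500 / 128) = 4 steps per epoch, so one step
# consumes up to 128 * 2 = 256 samples across both processes
sampler = DistributedSampler(dataset, num_replicas=world_size, rank=0)
loader = DataLoader(dataset, batch_size=BATCH_SIZE, sampler=sampler)
print(len(loader), math.ceil(len(sampler) / BATCH_SIZE))  # 4 4
```

Since `BATCH_SIZE` is per process, the effective batch per optimizer step grows with the number of GPUs, which may call for retuning the learning rate.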

1.3 Multiple machines, multiple GPUs

Code

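import os

import torch
import torch.distributed as dist
import torch.nn.functional as F
from torch import nn

# Index of the GPU used by this process on its node (torchrun sets LOCAL_RANK)
local_rank = int(os.environ["LOCAL_RANK"])
torch.cuda.set_device(local_rank)
device = torch.device("cuda", local_rank)

dataset = torch.rand([1000, 10])

# The model must be on this process's GPU before it is wrapped in DDP
model = nn.Sequential(
    nn.Linear(10, 20),
    nn.ReLU(),
    nn.Linear(20, 2),
    nn.Sigmoid()
).to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# Number of GPUs on this machine
n_gpus = torch.cuda.device_count()

# Initialize the process group; backend is the communication library (NCCL for GPUs)
dist.init_process_group(backend="nccl", init_method="env://")

# Wrap the model in DistributedDataParallel
model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[local_rank], output_device=local_rank)

# Distributed sampler: each process on every node reads a different shard
sampler = torch.utils.data.distributed.DistributedSampler(dataset)

# Build the dataloader; BATCH_SIZE is the per-process batch size
BATCH_SIZE = 128
dataloader = torch.utils.data.DataLoader(dataset=dataset,
                                         batch_size=BATCH_SIZE,
                                         num_workers=8,
                                         sampler=sampler)

for epoch in range(1000):
    # Call before iterating so that every epoch is shuffled differently
    sampler.set_epoch(epoch)
    for x in dataloader:
        x = x.to(device)
        loss = model(x).mean()  # placeholder loss: the example data has no labels
        optimizer.zero_grad()
        loss.backward()  # DDP averages gradients across all processes on all nodes
        optimizer.step()

# local_rank == 0 holds on one process per node, so each node writes a copy
# to its own disk and can load it below. On a shared filesystem, use
# dist.get_rank() == 0 instead so that only one process writes.
if local_rank == 0:
    torch.save({
        "model_state_dict": model.module.state_dict()
    }, "./model")
# Wait until the checkpoint has been written before any process reads it
dist.barrier()

# Load the model; the saved keys have no "module." prefix, so load into model.module
checkpoint = torch.load("./model", map_location=device)
model.module.load_state_dict(checkpoint["model_state_dict"])

dist.destroy_process_group()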

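Launch the job from the terminal

Run the command once on every node, with --node_rank=0 on the master node and --node_rank=1 on the other node: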

torchrun --nproc_per_node=n_gpus --nnodes=2 --node_rank=0 --master_addr="<master node IP>" --master_port="<master node port>" train.py
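With two nodes, every process has a `LOCAL_RANK` (its GPU on that node) and a global `RANK` across all nodes. Assuming each node launches the same number of processes and is given a fixed node rank, the global rank works out to `node_rank * nproc_per_node + local_rank` and the world size to `nnodes * nproc_per_node`. The hypothetical helper below tabulates this for 2 nodes with 4 GPUs each (the GPU count is an assumption). It also shows that `local_rank == 0` is true on one process per node, which is why the code above writes one checkpoint per node; at runtime the global rank is available from `torch.distributed.get_rank()` or the `RANK` environment variable that torchrun sets.

```python
BATCH_SIZE = 128  # per-process batch size from the article


def rank_layout(nnodes, nproc_per_node):
    """Hypothetical helper: map (node_rank, local_rank) -> global rank,
    assuming every node runs nproc_per_node processes."""
    return {(node_rank, local_rank): node_rank * nproc_per_node + local_rank
            for node_rank in range(nnodes)
            for local_rank in range(nproc_per_node)}


nnodes, nproc_per_node = 2, 4
layout = rank_layout(nnodes, nproc_per_node)
world_size = nnodes * nproc_per_node

for (node_rank, local_rank), rank in sorted(layout.items()):
    print(f"node {node_rank}, LOCAL_RANK {local_rank} -> RANK {rank}")

# local_rank == 0 holds on one process per node; rank == 0 on exactly one
savers = [key for key in layout if key[1] == 0]
print(savers)                   # [(0, 0), (1, 0)]
print(world_size)               # 8
print(BATCH_SIZE * world_size)  # 1024 samples per optimizer step
```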

2. Model Parallelism

Omitted.

by CyrusMay 2022 07 29

边栏推荐

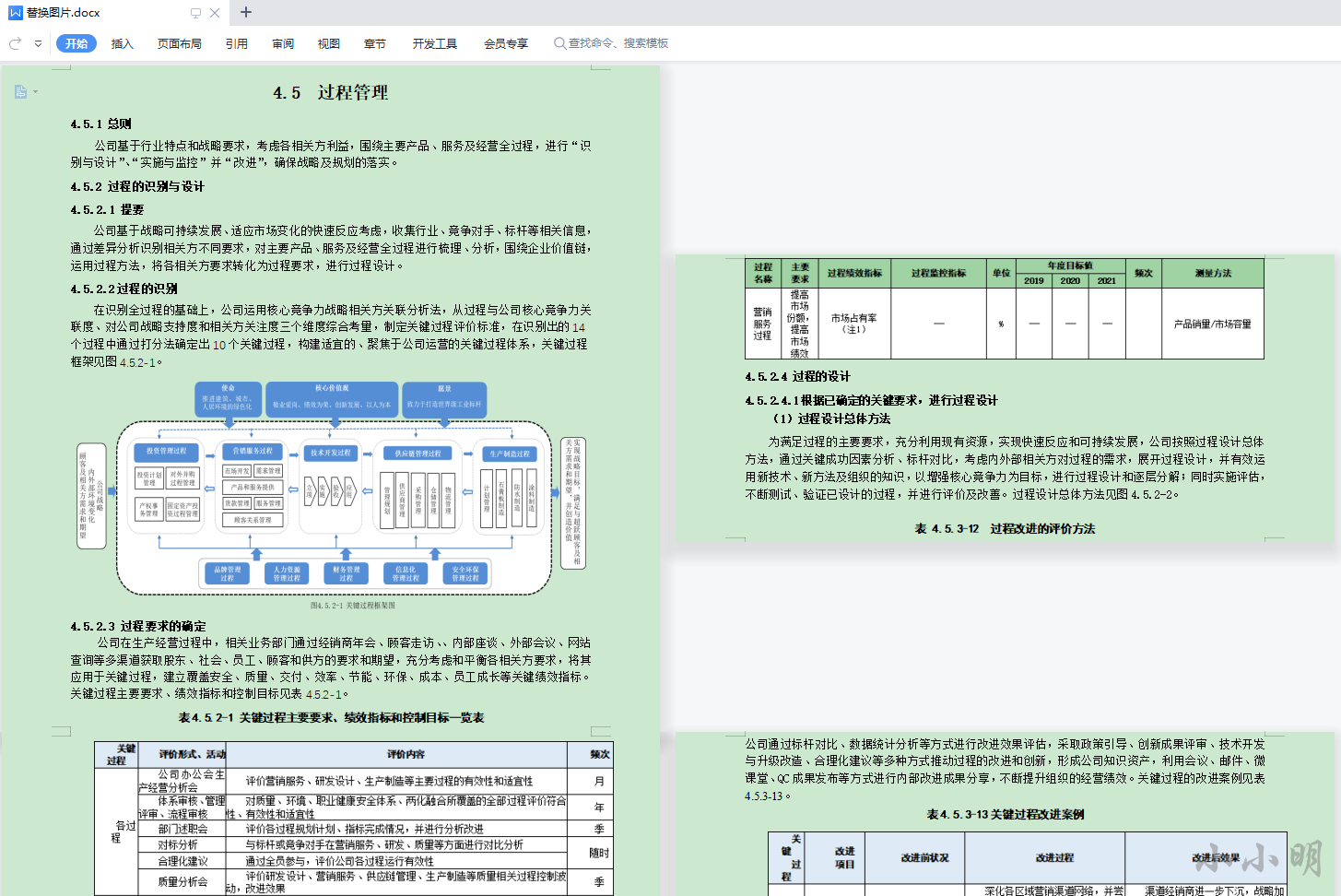

- Batch replace tables in Word with pictures and save

- Windows 安装PostgreSQL

- gpio模拟串口通信

- 微服务原生案例搭建

- 牛客刷SQL--7

- Gradle series - Gradle tests, Gradle life cycle, settings.gradle description, Gradle tasks (based on Groovy documentation 4.0.4) day2-3

- LeetCode_动态规划_中等_377.组合总和 Ⅳ

- Qt实战案例(55)——利用QDir删除选定文件目录下的空文件夹

- 人像分割技术解析与应用

- 热心肠:关于肠道菌群和益生菌的10个观点

猜你喜欢

随机推荐

windows IDEA + PHP+xdebug 断点调试

考研大事件!这6件事考研人必须知道!

fh511小风扇主控芯片 便携式小风扇专用8脚IC 三档小风扇升压芯片sop8

shell 中的 分发系统 expect脚本 (传递参数、自动同步文件、指定host和要传输的文件、(构建文件分发系统)(命令批量执行))

【每日一题】952. 按公因数计算最大组件大小

重磅!国内首个开放式在线绘图平台Figdraw突破10万用户!发布《奖学金激励计划》:最高5000元!...

Istio Pilot代码深度解析

postgresql之page分配管理(一)

iPhone难卖,被欧洲反垄断的服务业务也难赚钱了,苹果的日子艰难

态路小课堂丨浅谈优质光模块需要具备的条件!

The basic knowledge of scripting language Lua summary

Efficiency tools to let programmers get off work earlier

观察者模式

四足机器人软件架构现状分析

【5GC】5G网络切片与5G QoS的区别?

LeetCode_位运算_简单_405.数字转换为十六进制数

lua脚本关键

对标丰田!蔚来又一新品牌披露:产品价格低于20万

关于Request复用的那点破事儿。研究明白了,给你汇报一下。

gpio analog serial communication