当前位置:网站首页>Basic use of text preprocessing library Spacy (quick start)

Basic use of text preprocessing library Spacy (quick start)

2022-06-29 15:00:00 【iioSnail】

List of articles

spaCy brief introduction

spaCy( Official website ,github link ) It's a NLP Text preprocessing in domain Python library , Include participle (Tokenization)、 Part of speech tagging (Part-of-speech Tagging, POS Tagging)、 dependency analysis (Dependency Parsing)、 Morphological reduction (Lemmatization)、 Sentence boundary detection (Sentence Boundary Detection,SBD)、 Named entity recognition (Named Entity Recognition, NER) And so on . Specific support function reference links .

spaCy Characteristics :

- Support A variety of languages

- Use Deep learning The model performs tasks such as word segmentation

- Each language basically provides Models of different sizes To choose from , Users can choose according to their actual needs . User access The website links or github link The query model .

- Simple and easy to use

spaCy install

pip install spacy -i https://pypi.tuna.tsinghua.edu.cn/simple

If you want to install GPU edition , May refer to Official documents

spaCy Basic use of

spacy For all tasks, it is basically 4 Walking :

- Download model

- Load model

- Process sentences

- To get the results

give an example , Use spacy Divide words in English :

1. First, download the model through the command :

python -m spacy download en_core_web_sm

en_core_web_sm It's the name of the model , You can go to This link Search model .

Because at home , There may be a problem of slow download , You can go to github Search model , And then use

pip install some_model.whlManual installation

2. load 、 Use models and get results

import spacy # Guide pack

# Load model

nlp = spacy.load("en_core_web_sm")

# Using the model , Just pass in the sentence

doc = nlp("Apple is looking at buying U.K. startup for $1 billion")

# Get word segmentation results

print([token.text for token in doc])

The final output is :

['Apple', 'is', 'looking', 'at', 'buying', 'U.K.', 'startup', 'for', '$', '1', 'billion']

spaCy Several important classes in

In the last section , There are several key objects

nlp: The object ofspacy.Languageclass ( Official document link ).spacy.loadMethod will return this kind of object .nlp("...")The essence is to adjustLanguage.__call__Methoddoc: The object ofspacy.tokens.Doc( Official document link ), It contains participles 、 Part of speech tagging 、 Word form reduction and other results ( For details, please refer to link ).docIs an iterative object .token: The object ofspacy.tokens.token.Token( Official document link ), You can get the specific attributes of each word through this object ( word 、 Part of speech, etc ), For details, please refer to link .

spaCy Processing of (Processing Pipeline)

call nlp(...) It will be executed in the order shown in the above figure ( First participle , Then part of speech tagging and so on ). For components that are not needed , You can choose to exclude... When loading the model :

nlp = spacy.load("en_core_web_sm", exclude=["ner"])

Or disable it :

nlp = spacy.load("en_core_web_sm", disable=["tagger", "parser"])

For disable , You can disable it when you want to use it later :

nlp.enable_pipe("tagger")

All built-in components can be referred to link

actual combat : Chinese word segmentation and Word Embedding

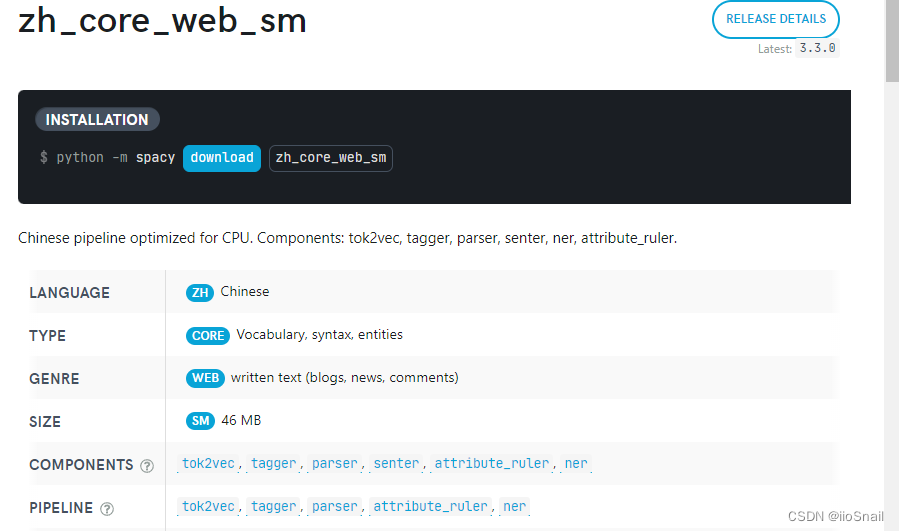

1. First of all to Chinese module of official documents Find the right model . Choose the smallest one here .

2. Download model

python -m spacy download zh_core_web_sm

3. Write code

import spacy # Guide pack

# Load model , And eliminate unnecessary components

nlp = spacy.load("zh_core_web_sm", exclude=("tagger", "parser", "senter", "attribute_ruler", "ner"))

# Process sentences

doc = nlp(" Natural language processing is an important direction in the field of computer science and artificial intelligence . It studies various theories and methods that can realize effective communication between human and computer with natural language .")

# for Loop to get each token The vector corresponding to it

for token in doc:

# Here for the convenience of display , Intercept only 5 position , But in fact, the model encodes Chinese words into 96 Dimension vector

print(token.text, token.tensor[:5])

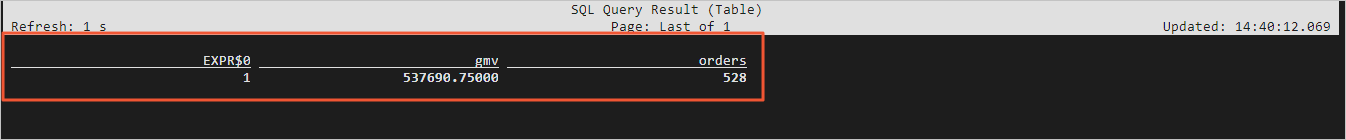

In the official model , have tok2vec This component , It shows that the model can embedding, Very convenient . The final output is :

natural [-0.16925007 -0.8783153 -1.4360809 0.14205566 -0.76843846]

Language [ 0.4438781 -0.82981354 -0.8556605 -0.84820974 -1.0326502 ]

Handle [-0.16880168 -0.24469137 0.05714838 -0.8260342 -0.50666815]

yes [ 0.07762825 0.8785285 2.1840482 1.688557 -0.68410844]

... // A little

and [ 0.6057179 1.4358768 2.142096 -2.1428592 -1.5056412]

Method [ 0.5175674 -0.57559186 -0.13569726 -0.5193214 2.6756258 ]

. [-0.40098143 -0.11951387 -0.12609476 -1.9219975 0.7838618 ]

边栏推荐

- Interview shock 61: tell me about MySQL transaction isolation level?

- 宜明昂科冲刺港股:年内亏损7.3亿 礼来与阳光人寿是股东

- ModStartBlog 现代化个人博客系统 v5.2.0 主题开发增强,新增联系方式

- Chapter 8 pixel operation of canvas

- 华理生物冲刺科创板:年营收2.26亿 拟募资8亿

- 信息学奥赛一本通1000:入门测试题目

- 阿里云体验有奖:使用PolarDB-X与Flink搭建实时数据大屏

- Mysql database - general syntax DDL DML DQL DCL

- 第五届中国软件开源创新大赛 | openGauss赛道直播培训

- Chinese garbled code output from idea output station

猜你喜欢

MCS:多元随机变量——多项式分布

Slow bear market, bit Store provides stable stacking products to help you cross the bull and bear

synchronized 与多线程的哪些关系

Alibaba cloud experience Award: use polardb-x and Flink to build a large real-time data screen

EXCEL的查询功能Vlookup

MCS:离散随机变量——Hyper Geometric分布

June 27 talk SofiE

Campus errands wechat applet errands students with live new source code

华为软件测试笔试真题之变态逻辑推理题【二】华为爆火面试题

又拍云 Redis 的改进之路

随机推荐

Indice d'évaluation du logiciel hautement simultané (site Web, interface côté serveur)

konva系列教程4:图形属性

China soft ice cream market forecast and investment prospect research report (2022 Edition)

Evaluation index of high concurrency software (website, server interface)

Chapter 10 of canvas path

openGauss社区成立SIG KnowledgeGraph

投资reits基金是靠谱吗,reits基金安全吗

两个字的名字如何变成有空格的3个字符的名字

What should phpcms do when it sends an upgrade request to the official website when it opens the background home page?

mysql 备份与还原

Is 100W data table faster than 1000W data table query in MySQL?

curl: (56) Recv failure: Connection reset by peer

第九章 APP项目测试(此章完结)

Build your own website (19)

I want to search the hundreds of nodes in the data warehouse. Can I check a table used in the SQL

EXCEL的查询功能Vlookup

Illustration of Ctrip quarterly report: net revenue of RMB 4.1 billion has been "halved" compared with that before the outbreak

The 5th China software open source innovation competition | opengauss track live training

bash汇总线上日志

论文学习——考虑场次降雨年际变化特征的年径流总量控制率准确核算