当前位置:网站首页>Emnlp2021 𞓜 a small number of data relation extraction papers of deepblueai team were hired

Emnlp2021 𞓜 a small number of data relation extraction papers of deepblueai team were hired

2022-06-11 04:56:00 【Shenlan Shenyan AI】

In recent days, ,EMNLP 2021 The review results of this year's papers are published on the official website in advance ,DeepBlueAI The team's paper 《MapRE: An Effective Semantic Mapping Approach for Low-resource Relation Extraction》 Be hired . This paper proposes a method to integrate the sentence correlation information and relationship label semantic information between contract category samples in the low resource relationship extraction task , In the experiments of several public data sets of relation extraction tasks, we get SOTA result .

EMNLP( Full name Conference on Empirical Methods in Natural Language Processing) from ACL SIGDAT( Special interest group on linguistic data ) The host , Once a year , stay Google Scholar Ranked second in the index of computational linguistics journals . This paper focuses on the application of statistical machine learning methods in naturallanguageprocessing , In recent years, with the development of machine learning methods for large-scale data , The number of people attending this meeting has increased year by year , It has attracted more and more attention .

EMNLP The criteria for selecting papers are extremely strict ,EMNLP 2021 A total of valid submissions have been received 3114 piece , Employment 754 piece , The employment rate is only 24.82%. By convention ,EMNLP 2021 Selected the best long paper 、 Best short paper 、 Outstanding papers and best Demo Four awards for papers , common 7 Papers were selected .

This year, EMNLP 2021 Will be in 11 month 7 Japan - 11 Japan Jointly held in Punta Cana, Dominican Republic, and online , The meeting lasted five days , Huangxuanjing, Professor of the school of computer science of Fudan University, will serve as the program chairman of this meeting . At the upcoming EMNLP The academic conference will show the cutting-edge research results in the field of naturallanguageprocessing , These achievements will also represent the research level and future development direction in relevant fields and technology segmentation .

DeepBlueAI The team's paper proposed a method to integrate the sentence correlation information and the semantic information of relationship labels between contract category samples in the low resource relationship extraction task , In the experiments of several public data sets of relation extraction tasks, we get SOTA result .

Relationship extraction aims to find the correct relationship between two entities in a given sentence , yes NLP One of the basic tasks in . This problem is usually regarded as a supervised classification problem , Training with large-scale labeled data . In recent years , The relationship extraction model has been obviously developed . However , When there are too few training samples , The performance of the model will drop sharply .

In recent work ,DeepBlueAI The team uses the progress of small sample learning to solve the problem of low resources . The key idea of small sample learning is to learn a comparison query and support set samples The model of sample similarity in , such , The goal of relation extraction is to learn a general 、 An accurate relation classifier becomes a mapping model that maps instances with the same relation to adjacent regions .

Under the setting of small sample learning , Tag information , That is, the relationship label containing the semantic knowledge of the relationship itself , It is not used by the model in training and prediction .DeepBlueAI The team's experimental results show that , In pre training and fine-tuning, combining the above label information and the sample mapping of each relationship category can significantly improve the performance of the model in the task of extracting relationships with few samples .

One 、 Semantic mapping pre training

The objective function of the pre training part consists of three parts :

CCR: The sample represents the loss between

CRR: Loss between sample and label

MLM: Language model loss , Same as BERT

DeepBlueAI The team takes a similar CP (Peng et al., 2020) The model is pre trained . The difference is that the team also considers label information , Use Wikidata As a pre training corpus , In addition to the Wikidata and DeepBlueAI The repeated parts between data sets used by the team for subsequent experiments .

In this part ,DeepBlueAI The team used BERT base As a basic model , use AdamW Optimizer , The maximum input length is set to 60.DeepBlueAI The team has trained 11,000 Step , The top 500 Step: warmup,batch size Set to 2040, The learning rate is 3e-5.

Two 、 Supervised relationship extraction

This part DeepBlueAI The team experimented with MapRE Two ways to use the pre training model , namely MapRE-L( Directly use the full connection layer to encode the text and output the prediction relationship ) and MapRE-R( Encode relation labels with relation encoders , Then do similarity matching ), The structure of the model is shown in the figure :

In the supervised relationship extraction task DeepBlueAI The team evaluates two benchmark datasets :ChemProt and Wiki80. The former includes 56,000 Instances and 80 Kind of relationship , The latter includes 10,065 Instances and 13 Kind of relationship .

The experimental results are as follows :

here DeepBlueAI The team focuses on low resource relationship extraction , Select the following three representative models for comparison .

1)BERT: In this model, special tags are added to the head entity and the tail entity of the text token, stay BERT The output is followed by several full connection layers for relationship classification .

2)MTB (Soares et al., 2019):MTB The model assumes that sentences with the same head entity and tail entity in unsupervised data are positive sample pairs , Have the same relationship . In the test phase , Yes query and support set Ranking by similarity score , Take the relationship with the highest score as the prediction result .

3)CP (Peng et al., 2020): Same as MTB similar , Our method is the same as CP The difference between the models is , We have considered label information during pre training and fine-tuning .

We can observe that :1) stay BERT Pre training on ( namely MTB, CP and MapRE) It can improve the performance of the model 2) Compare MapRE-L And CP and MTB, Adding tag information during pre training can significantly improve model performance , Especially when resources are scarce , For example, only 1% Training set for fine tuning 3) Compare MapRE-R and MapRE-L, The former also considers label information in fine-tuning , Show better and more stable experimental results

The results show that the use of label information in pre training and fine-tuning can significantly improve the model performance in low resource supervised relationship extraction tasks .

3、 ... and 、 Small sample and zero sample relation extraction

In the case of small sample learning , The model needs to be based on a given relationship category , Forecast with a small number of samples in each category . about N way K shot problem ,Support set S contain N A relationship , Each relationship has K Samples , Query set contains Q Samples , Each sample belongs to N One of the relationships .

The structure of the model is as follows :

The prediction result of the model is obtained by the following formula :

DeepBlueAI The team evaluated the proposed approach on two data sets :FewRel and NYT-25.FewRel Data set containing 70,000 Two sentences and 100 A relationship ( Each relationship has 700 A sentence ), The data source is Wikipedia . among 64 Relationships for training ,16 For validation , as well as 20 For testing . The test data set contains 10,000 A sentence , Must be evaluated online .NYT-25 Data sets are created by Gao et al., 2019.DeepBlueAI Team randomly selected 10 Relationships for training ,5 For validation ,10 For testing .

The experimental results are as follows :

As shown in the table above , Under all experimental settings ,DeepBlueAI The team put forward MapRE, Since both pre training and fine tuning are considered support set Sample sentences and relation tag information , Provides stable performance , And substantially better than a range of baseline Method . The results proved the effectiveness of the framework proposed by the team , It also shows the importance of semantic mapping information of relation labels in relation extraction .

DeepBlueAI The team further considered the extreme conditions of low resource relationship extraction , That is, zero samples . Under this setting , The model input does not contain any support set sample . Under zero sample conditions , Most of the above few sample relation extraction frameworks are not applicable , Because there must be at least one sample in each relationship category of other models of this kind .

It turns out that , Compared with other recent zero sample learning work ,DeepBlueAI The team put forward MapRE Excellent performance in all settings , Proved MapRE The effectiveness of the .

summary

In this work ,DeepBlueAI The team proposed a relationship extraction model considering both label information and sample information ,MapRE. A lot of experimental results show that ,MapRE Model pair supervised relation extraction 、 Excellent performance in the task of few sample relation extraction and zero sample relation extraction . The results show that both sample and label information play an important role in pre training and fine tuning . In this work ,DeepBlueAI The team did not study the potential impact of field migration , We will take correlation analysis as the next step .

Sum up , Proposed by Shenyan technology MapRE The model combines the characteristics of zero sample and small sample learning , It combines the information of the same relation samples and the relation semantics , At present, it has been applied in the text relation extraction function of Shenyan technology intelligent data annotation platform , Greatly improved the performance of the model under a small number of training samples , It can greatly save manpower in fields such as intelligent data annotation , Improve labeling efficiency and labeling quality .

| About Shenyan Technology |

Shenyan technology was founded in 2018 year , It's Shenlan technology (DeepBlue) Its subsidiaries , With “ Artificial intelligence enables enterprises and industries ” For the mission , Help partners reduce costs 、 Improve efficiency and explore more business opportunities , Further develop the market , Serving the people's livelihood . The company launched four platform products —— Deep extension intelligent data annotation platform 、 Deep extension AI Development platform 、 Deep extension automatic machine learning platform 、 Deep extension AI Open platform , It covers data annotation and processing , To model building , And then to the whole process service of industry applications and solutions , One stop help enterprises “AI” turn .

边栏推荐

- [Transformer]MViTv2:Improved Multiscale Vision Transformers for Classification and Detection

- go MPG

- Relational database system

- Leetcode question brushing series - mode 2 (datastructure linked list) - 206:reverse linked list

- New product release: Lianrui launched a dual port 10 Gigabit bypass network card

- Sealem Finance打造Web3去中心化金融平台基础设施

- New library goes online | cnopendata immovable cultural relic data

- What is a smart network card? What is the function of the smart network card?

- Cartographer learning record: cartographer Map 3D visualization configuration (self recording dataset version)

- Parametric contractual learning: comparative learning in long tail problems

猜你喜欢

Redis master-slave replication, sentinel, cluster cluster principle + experiment (wait, it will be later, but it will be better)

Apply the intelligent OCR identification technology of Shenzhen Yanchang technology to break through the bottleneck of medical bill identification at one stroke. Efficient claim settlement is not a dr

![[Transformer]On the Integration of Self-Attention and Convolution](/img/64/59f611533ebb0cc130d08c596a8ab2.jpg)

[Transformer]On the Integration of Self-Attention and Convolution

免费数据 | 新库上线 | CnOpenData全国文物商店及拍卖企业数据

![[NIPS2021]MLP-Mixer: An all-MLP Architecture for Vision](/img/89/66c30ea8d7969fef76785da1627ce5.jpg)

[NIPS2021]MLP-Mixer: An all-MLP Architecture for Vision

Win10+manjaro dual system installation

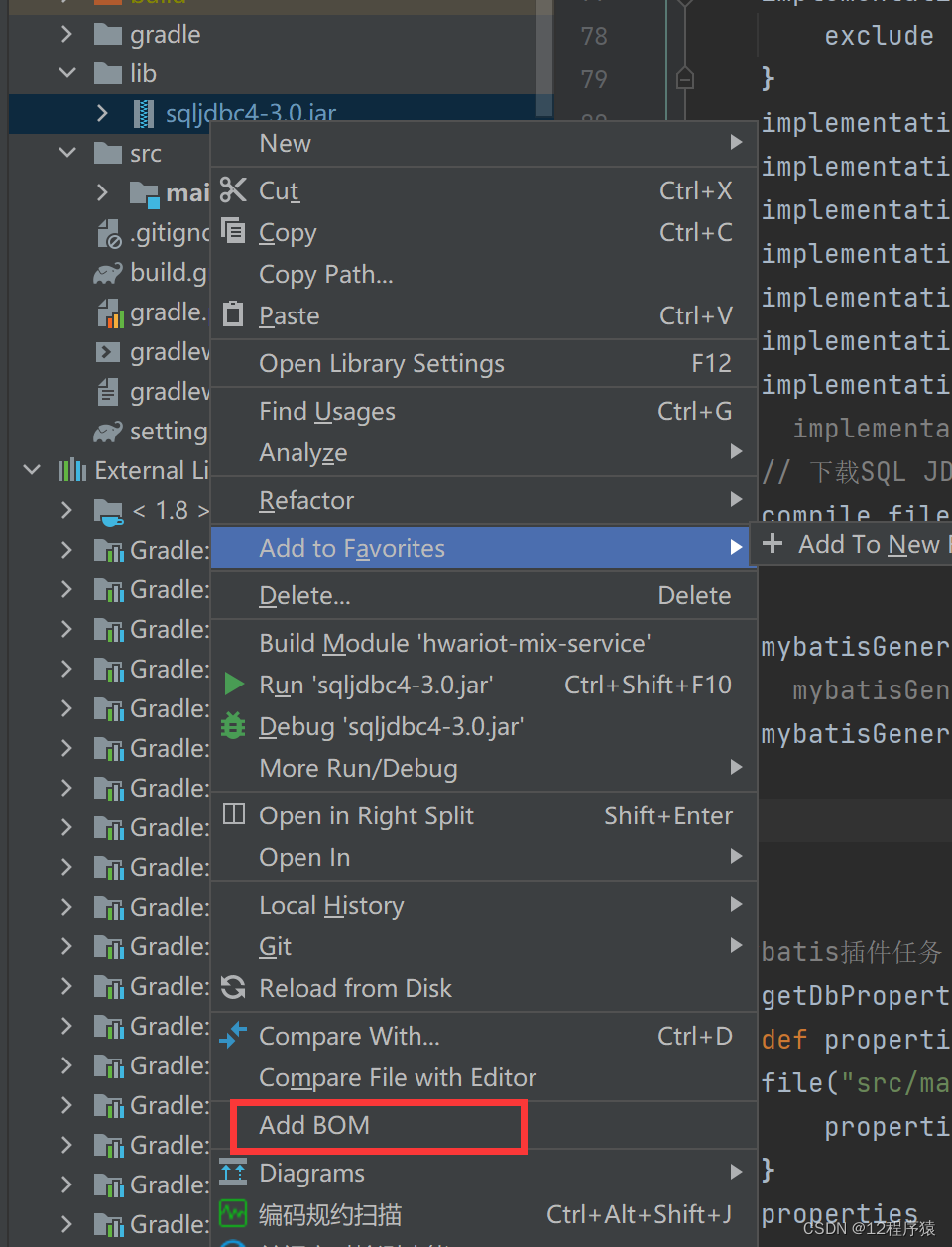

How the idea gradle project imports local jar packages

BP neural network derivation + Example

Huawei equipment is configured with bgp/mpls IP virtual private network

Technology | image motion drive interpretation of first order motion model

随机推荐

Mindmanager22 professional mind mapping tool

[Transformer]AutoFormerV2:Searching the Search Space of Vision Transformer

go MPG

Thesis 𞓜 jointly pre training transformers on unpaired images and text

USB to 232 to TTL overview

KD-Tree and LSH

Description of construction scheme of Meizhou P2 Laboratory

RGB image histogram equalization and visualization matlab code

Tianchi - student test score forecast

PostgreSQL database replication - background first-class citizen process walreceiver receiving and sending logic

Support vector machine -svm+ source code

New UI learning method subtraction professional version 34235 question bank learning method subtraction professional version applet source code

华为设备配置BGP/MPLS IP 虚拟专用网地址空间重叠

Real time update of excellent team papers

Lianrui electronics made an appointment with you with SIFA to see two network cards in the industry's leading industrial automation field first

The first master account of Chia Tai International till autumn

C language test question 3 (advanced program multiple choice questions _ including detailed explanation of knowledge points)

Leetcode question brushing series - mode 2 (datastructure linked list) - 24 (m): swap nodes in pairs exchange nodes in the linked list

[Transformer]MViTv2:Improved Multiscale Vision Transformers for Classification and Detection

go单元测试实例;文件读写;序列化