当前位置:网站首页>Hands on deep learning (44) -- seq2seq principle and Implementation

Hands on deep learning (44) -- seq2seq principle and Implementation

2022-07-04 09:41:00 【Stay a little star】

List of articles

One 、 What is? seq2seq( Sequence to sequence learning )

Use two RNN Design Encoder-Decoder structure , And apply it to machine translation Sutskever.Vinyals.Le.2014,Cho.Van Merrienboer.Gulcehre.ea.2014.RNN Of Encoder You can use a variable length sequence as input , Convert it into a fixed length hidden state .

In other words , Input sequence ( Source ) The information of is code To the hidden state of the cyclic neural network encoder . To generate the tags of the output sequence one by one , The independent cyclic neural network decoder is based on the encoded information of the input sequence and the generated tags of the output sequence ( For example, in the task of language model ) To predict the next marker . The following figure shows how to use two recurrent neural networks for sequence to sequence learning in machine translation .

In the diagram above , specific “<eos>” Express Sequence end tag . Once the output sequence generates this tag , The model can stop executing the prediction . In the initialization time step of the cyclic neural network decoder , There are two specific design decisions . First , specific “<bos>” Express Sequence start tag , It is the first mark of the input sequence of the decoder . secondly , The final hidden state of the cyclic neural network encoder is used to initialize the hidden state of the decoder . For example Sutskever.Vinyals.Le.2014 In the design of , It is based on this design that the encoded information of the input sequence is sent into the decoder to generate the output sequence ( The goal is ) Of . In others such as Cho.Van-Merrienboer.Gulcehre.ea.2014 In the design of , In each time step , The final hidden state of the encoder is part of the input sequence of the decoder , As shown in the figure above . Similar to the language model trained in the language model , Tags can be allowed to become the original output sequence , Based on one tag “<bos>”、“Ils”、“regardent”、“.” → \rightarrow → “Ils”、“regardent”、“.”、“<eos>” To move the predicted position .

details :

- The encoder is a RNN, Read the input sentence ( It can be two-way )

- The decoder uses another RNN To output

- The encoder has no output RNN

- The hidden state of the last time step of the encoder is used as the initial hidden state of the decoder

Two 、 Do it Seq2Seq

import collections

import math

import torch

from torch import nn

from d2l import torch as d2l

1. Encoder

Technically speaking , The encoder converts a variable length input sequence into a fixed shape Context variable c \mathbf{c} c, And encode the information of the input sequence in the context variable . Such as seq2seq As shown in the schematic diagram of , A cyclic neural network can be used to design an encoder .

Let's consider a sequence sample ( Batch size :1). Suppose the input sequence is x 1 , … , x T x_1, \ldots, x_T x1,…,xT, among x t x_t xt Is the... In the input text sequence t t t A sign . In time step t t t, The recurrent neural network will x t x_t xt( That is, input the eigenvector x t \mathbf{x}_t xt) and h t − 1 \mathbf{h} _{t-1} ht−1( That is, the hidden state of the previous time step ) Convert to h t \mathbf{h}_t ht( That is, the current hidden state ). Use a function f f f To describe the transformation made by the cyclic neural network layer :

h t = f ( x t , h t − 1 ) . \mathbf{h}_t = f(\mathbf{x}_t, \mathbf{h}_{t-1}). ht=f(xt,ht−1).

All in all , The encoder passes the selected function q q q Convert the hidden state of all time steps into context variables ( This is actually written to better understand the later attention mechanism ):

c = q ( h 1 , … , h T ) . \mathbf{c} = q(\mathbf{h}_1, \ldots, \mathbf{h}_T). c=q(h1,…,hT).

for example , When choosing q ( h 1 , … , h T ) = h T q(\mathbf{h}_1, \ldots, \mathbf{h}_T) = \mathbf{h}_T q(h1,…,hT)=hT when , The context variable is only the hidden state of the input sequence at the last time step h T \mathbf{h}_T hT.

up to now , We use a unidirectional recurrent neural network to design the encoder , The hidden state only depends on the input subsequence , This sub sequence is from the beginning of the input sequence to the position of the time step where the hidden state is located ( Including the time step of the hidden state ) form . We can also use bidirectional recurrent neural network to construct encoder , The hidden state depends on two input subsequences , The two subsequences are the sequence before and after the position of the time step where the hidden state is located ( Including the time step of the hidden state ), Therefore, the hidden state encodes the information of the whole sequence . Now realize the cyclic neural network encoder . Be careful , Use Embedded layer (embedding layer) To get the eigenvector of each tag in the input sequence . The weight of the embedded layer is a matrix , The number of lines is equal to the size of the input vocabulary (vocab_size), The number of columns is equal to the dimension of the eigenvector (embed_size). For the index of any input tag i i i, The embedding layer obtains the second part of the weight matrix i i i That's ok ( from 0 0 0 Start ) To return its eigenvector . in addition , In this paper, a multi-layer gating cycle unit is selected to realize the encoder .

class Seq2SeqEncoder(d2l.Encoder):

""" For sequence to sequence learning RNN Network encoder """

def __init__(self,vocab_size,embed_size,num_hiddens,num_layers,dropout=0,**kwargs):

super(Seq2SeqEncoder,self).__init__(**kwargs)

# Embedded layer

# embedding It is to segment word vectors for each word ,embed_size Is to abandon the words that are not commonly used

self.embedding = nn.Embedding(vocab_size,embed_size)

self.rnn = nn.GRU(embed_size,num_hiddens,num_layers,dropout=dropout)

def forward(self,X,*args):

# Output 'X' The shape of the :(`batch_size`, `num_steps`, `embed_size`)

X = self.embedding(X)

# In the cyclic neural network model , The first axis corresponds to the time step

X = X.permute(1, 0, 2)

# If the status is not mentioned , The default is 0

output, state = self.rnn(X)

# `output` The shape of the : (`num_steps`, `batch_size`, `num_hiddens`)

# `state[0]` The shape of the : (`num_layers`, `batch_size`, `num_hiddens`)

return output, state

# Build a hidden unit as 16 The two layers GRU Encoder . Given a small batch sequence input X( Batch size 4, Time step 7),

# The hidden state of the last layer outputs a tensor after completing all the time steps ( Time steps , Batch size , Number of hidden units )

# The multi-level hidden state shape of the last time step is ( Number of hidden layers , Batch size , Number of hidden cells )

encoder = Seq2SeqEncoder(vocab_size=10,embed_size=8,num_hiddens=16,num_layers=2)

encoder.eval() # dropout Don't take effect

X = torch.zeros((4,7),dtype=torch.long)

output,state = encoder(X)

output.shape,state.shape

(torch.Size([7, 4, 16]), torch.Size([2, 4, 16]))

2. decoder

As mentioned above , The context variable output by the encoder c \mathbf{c} c For the whole input sequence x 1 , … , x T x_1, \ldots, x_T x1,…,xT Encoding . The output sequence from the training data set y 1 , y 2 , … , y T ′ y_1, y_2, \ldots, y_{T'} y1,y2,…,yT′, For each time step t ′ t' t′( Time step with input sequence or encoder t t t Different ), Decoder output y t ′ y_{t'} yt′ The probability of depends on the previous output subsequence y 1 , … , y t ′ − 1 y_1, \ldots, y_{t'-1} y1,…,yt′−1 And context variables c \mathbf{c} c, namely P ( y t ′ ∣ y 1 , … , y t ′ − 1 , c ) P(y_{t'} \mid y_1, \ldots, y_{t'-1}, \mathbf{c}) P(yt′∣y1,…,yt′−1,c).

In order to model this conditional probability in the sequence , We can use another recurrent neural network as the decoder . Any time step on the output sequence t ′ t^\prime t′, The recurrent neural network will output from the previous time step y t ′ − 1 y_{t^\prime-1} yt′−1 And context variables c \mathbf{c} c As its input , They are then compared to the previous hidden state in the current time step s t ′ − 1 \mathbf{s}_{t^\prime-1} st′−1 Transition to hidden state s t ′ \mathbf{s}_{t^\prime} st′. therefore , You can use functions g g g To represent the transformation of the hidden layer of the decoder :

s t ′ = g ( y t ′ − 1 , c , s t ′ − 1 ) . \mathbf{s}_{t^\prime} = g(y_{t^\prime-1}, \mathbf{c}, \mathbf{s}_{t^\prime-1}). st′=g(yt′−1,c,st′−1).

After obtaining the hidden state of the decoder , We can use the output layer and softmax Operation to calculate the time step t ′ t^\prime t′ Conditional probability distribution of the output at P ( y t ′ ∣ y 1 , … , y t ′ − 1 , c ) P(y_{t^\prime} \mid y_1, \ldots, y_{t^\prime-1}, \mathbf{c}) P(yt′∣y1,…,yt′−1,c).

According to the formula, when implementing the decoder , We directly use the hidden state of the last time step of the encoder to initialize the hidden state of the decoder . This requires that the cyclic neural network encoder and the cyclic neural network decoder have the same number of layers and hidden units . In order to further contain the information of the encoded input sequence , The context variable is spliced with the input of the decoder in all time steps (concatenate). To predict the probability distribution of output markers , In the last layer of the recurrent neural network decoder, the full connection layer is used to transform the hidden state .

class Seq2SeqDecoder(d2l.Decoder):

""" Cyclic neural network decoder for sequence to sequence learning ."""

def __init__(self,vocab_size,embed_size,num_hiddens,num_layers,dropout=0,**kwargs):

super(Seq2SeqDecoder,self).__init__(**kwargs)

self.embedding = nn. Embedding(vocab_size,embed_size)

# Notice the assumption here encoder and decoder The size of the hidden layer is the same

self.rnn = nn.GRU(embed_size+num_hiddens,num_hiddens,num_layers,dropout=dropout)

self.dense = nn.Linear(num_hiddens,vocab_size)

def init_state(self,enc_outputs,*args):

return enc_outputs[1]

def forward(self,X,state):

# Output 'X' The shape of the :(`batch_size`, `num_steps`, `embed_size`)

X = self.embedding(X).permute(1, 0, 2)

# radio broadcast `context`, Make it have a connection with `X` same `num_steps`

context = state[-1].repeat(X.shape[0], 1, 1)

X_and_context = torch.cat((X, context), 2)

output, state = self.rnn(X_and_context, state)

output = self.dense(output).permute(1, 0, 2)

# `output` The shape of the : (`batch_size`, `num_steps`, `vocab_size`)

# `state[0]` The shape of the : (`num_layers`, `batch_size`, `num_hiddens`)

return output, state

# Illustrate with examples Decoder

decoder = Seq2SeqDecoder(vocab_size=10, embed_size=8, num_hiddens=16,

num_layers=2)

decoder.eval()

state = decoder.init_state(encoder(X))

output, state = decoder(X, state)

output.shape, state.shape

(torch.Size([4, 7, 10]), torch.Size([2, 4, 16]))

appeal encoder-decoder The process can be described as :

3. Loss function

Every time step , The decoder predicts the probability distribution of the output tag , have access to softmax Get distribution , Use the cross entropy loss function to optimize . In machine translation, we say , A specific padding tag is added to the end of the sequence , Therefore, sequences of different lengths can be loaded in small batches of the same shape . however , The prediction of filling marks should be excluded from the loss calculation .

So , We can mask irrelevant items with zero values , So that the product of any irrelevant prediction and zero is equal to zero . for example , If the effective length of two sequences ( Do not include padding marks ) Respectively 1 and 2, Then the remaining items after the first item and the first two items will be cleared to zero .

def sequence_mask(X, valid_len, value=0):

""" Mask irrelevant items in the sequence ."""

maxlen = X.size(1)

mask = torch.arange((maxlen), dtype=torch.float32,

device=X.device)[None, :] < valid_len[:, None]

X[~mask] = value

return X

# The default with 0 fill

X = torch.tensor([[1, 2, 3], [4, 5, 6]])

print(sequence_mask(X, torch.tensor([1, 2])))

# You can also specify what to fill

X = torch.ones(2, 3, 4)

print(sequence_mask(X, torch.tensor([1, 2]), value=-1))

tensor([[1, 0, 0],

[4, 5, 0]])

tensor([[[ 1., 1., 1., 1.],

[-1., -1., -1., -1.],

[-1., -1., -1., -1.]],

[[ 1., 1., 1., 1.],

[ 1., 1., 1., 1.],

[-1., -1., -1., -1.]]])

# By extending the softmax Cross entropy to mask irrelevant predictions . first , All predictive tag masks are 1, Once the effective length is given

# The mask corresponding to the fill mark is set to 0, Finally, the loss of all tags multiplied by the mask filters out irrelevant predictions

class MaskedSoftmaxCELoss(nn.CrossEntropyLoss):

""" Covered Softmax Cross entropy loss function """

# `pred` The shape of the :(`batch_size`, `num_steps`, `vocab_size`)

# `label` The shape of the :(`batch_size`, `num_steps`)

# `valid_len` The shape of the :(`batch_size`,)

def forward(self,pred,label,valid_len):

weights = torch.ones_like(label)

weights = sequence_mask(weights,valid_len)

self.reduction = 'none'

unweighted_loss = super(MaskedSoftmaxCELoss,self).forward(pred.permute(0,2,1),label)

weighted_loss = (unweighted_loss * weights).mean(dim=1)

return weighted_loss

loss = MaskedSoftmaxCELoss()

pred=torch.ones(3,4,10)

label = torch.ones(3,4,dtype=torch.long)

valid_len = torch.tensor([4,2,0])

label,pred,loss(pred,label,valid_len)

(tensor([[1, 1, 1, 1],

[1, 1, 1, 1],

[1, 1, 1, 1]]),

tensor([[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]]]),

tensor([2.3026, 1.1513, 0.0000]))

4. Training

def train_seq2seq(net,data_iter,lr,num_epochs,tgt_vocab,device):

""" Training sequence to network model """

def xavier_init_weights(m):

if type(m) == nn.Linear:

nn.init.xavier_uniform_(m.weight)

if type(m) == nn.GRU:

for param in m._flat_weights_names:

if "weight" in param:

nn.init.xavier_uniform_(m._parameters[param])

net.apply(xavier_init_weights)

net.to(device)

optimizer = torch.optim.Adam(net.parameters(),lr=lr)

loss = MaskedSoftmaxCELoss()

net.train()

animator = d2l.Animator(xlabel='epoch', ylabel='loss',

xlim=[10, num_epochs])

for epoch in range(num_epochs):

timer = d2l.Timer()

metric = d2l.Accumulator(2) # The sum of training losses , Number of tags

for batch in data_iter:

X,X_valid_len,Y,Y_valid_len = [x.to(device) for x in batch]

# Add a begin of sentense

bos = torch.tensor([tgt_vocab['<bos>']]*Y.shape[0],device=device).reshape(-1,1)

# take input Move all data back

dec_input = torch.cat([bos,Y[:,:-1]],1) # Teachers force ,teacher force

# Prediction is actually not used X_valid_len Of , The error will be used

Y_hat, _ = net(X, dec_input, X_valid_len)

l = loss(Y_hat, Y, Y_valid_len)

l.sum().backward() # Scalar of the loss function “ Back transmission ”

d2l.grad_clipping(net, 1)

num_tokens = Y_valid_len.sum()

optimizer.step()

with torch.no_grad():

metric.add(l.sum(), num_tokens)

if (epoch + 1) % 10 == 0:

animator.add(epoch + 1, (metric[0] / metric[1],))

print(f'loss {

metric[0] / metric[1]:.3f}, {

metric[1] / timer.stop():.1f} '

f'tokens/sec on {

str(device)}')

# Define super parameters for training

embed_size, num_hiddens, num_layers, dropout = 32, 32, 2, 0.1

batch_size, num_steps = 64, 10

lr, num_epochs, device = 0.005, 300, d2l.try_gpu()

train_iter, src_vocab, tgt_vocab = d2l.load_data_nmt(batch_size, num_steps)

encoder = Seq2SeqEncoder(len(src_vocab), embed_size, num_hiddens, num_layers,

dropout)

decoder = Seq2SeqDecoder(len(tgt_vocab), embed_size, num_hiddens, num_layers,

dropout)

net = d2l.EncoderDecoder(encoder, decoder)

train_seq2seq(net, train_iter, lr, num_epochs, tgt_vocab, device)

loss 0.019, 21070.0 tokens/sec on cuda:0

5. forecast

def predict_seq2seq(net, src_sentence, src_vocab, tgt_vocab, num_steps,

device, save_attention_weights=False):

""" Prediction from sequence to sequence model """

# When forecasting, it will `net` Set to evaluation mode

net.eval()

src_tokens = src_vocab[src_sentence.lower().split(' ')] + [src_vocab['<eos>']]

enc_valid_len = torch.tensor([len(src_tokens)], device=device)

src_tokens = d2l.truncate_pad(src_tokens, num_steps, src_vocab['<pad>'])

# Add batch axis

enc_X = torch.unsqueeze(torch.tensor(src_tokens, dtype=torch.long, device=device), dim=0)

enc_outputs = net.encoder(enc_X, enc_valid_len)

dec_state = net.decoder.init_state(enc_outputs, enc_valid_len)

# Add batch axis

dec_X = torch.unsqueeze(torch.tensor([tgt_vocab['<bos>']], dtype=torch.long, device=device),dim=0)

output_seq, attention_weight_seq = [], []

for _ in range(num_steps):

Y, dec_state = net.decoder(dec_X, dec_state)

# We use the marker with the highest probability of prediction , As the input of the decoder in the next time step

dec_X = Y.argmax(dim=2)

pred = dec_X.squeeze(dim=0).type(torch.int32).item()

# Save attention weight ( Discussed later )

if save_attention_weights:

attention_weight_seq.append(net.decoder.attention_weights)

# Once the sequence end tag is predicted , The generation of the output sequence is completed

if pred == tgt_vocab['<eos>']:

break

output_seq.append(pred)

return ' '.join(tgt_vocab.to_tokens(output_seq)), attention_weight_seq

6. Forecasting and evaluating

We can do this with the tag sequence ( Real label ) Compare to evaluate the prediction sequence . Although at first BLEU(Bilingual Evaluation Understudy) Is proposed to evaluate the results of machine translation Papineni.Roukos.Ward.ea.2002 , But now it has been widely used to measure the quality of output sequences for a variety of applications . For any in the prediction sequence n n n Metagrammar (n-grams),BLEU The evaluation principle of is this n n n Whether the meta syntax appears in the tag sequence .

use p n p_n pn Express n n n The precision of meta grammar , It is a match between the prediction sequence and the tag sequence n n n The number of meta grammars matches that in the prediction sequence n n n The ratio of the number of meta grammars . Explain in detail , That is, the given tag sequence A A A、 B B B、 C C C、 D D D、 E E E、 F F F And prediction sequence A A A、 B B B、 B B B、 C C C、 D D D, We have p 1 = 4 / 5 p_1 = 4/5 p1=4/5、 p 2 = 3 / 4 p_2 = 3/4 p2=3/4、 p 3 = 1 / 3 p_3 = 1/3 p3=1/3 and p 4 = 0 p_4 = 0 p4=0. in addition , l e n label \mathrm{len}_{\text{label}} lenlabel Represents the number of tags in the tag sequence and l e n pred \mathrm{len}_{\text{pred}} lenpred Represents the number of tags in the prediction sequence . that ,BLEU Is defined as :

exp ( min ( 0 , 1 − l e n label l e n pred ) ) ∏ n = 1 k p n 1 / 2 n , \exp\left(\min\left(0, 1 - \frac{\mathrm{len}_{\text{label}}}{\mathrm{len}_{\text{pred}}}\right)\right) \prod_{n=1}^k p_n^{1/2^n}, exp(min(0,1−lenpredlenlabel))n=1∏kpn1/2n,

among k k k It is the longest one that can be matched n n n Metagrammar . according to BLEU The definition of , When the prediction sequence is the same as the tag sequence ,BLEU by 1. and , Because of the match n n n The longer the metagrammar is, the more difficult it is ,BLEU For longer n n n The precision of meta grammar assigns greater weight . say concretely , When p n p_n pn When fixed , p n 1 / 2 n p_n^{1/2^n} pn1/2n Will follow n n n Increase with the increase of ( The original paper uses p n 1 / n p_n^{1/n} pn1/n). Besides , Because the shorter the predicted sequence, the p n p_n pn The higher the value , therefore BLEU The coefficient before the multiplication term in the formula punishes the short prediction sequence . for example , When k = 2 k=2 k=2 when , Given tag sequence A A A、 B B B、 C C C、 D D D、 E E E、 F F F And prediction sequence A A A、 B B B, Even though p 1 = p 2 = 1 p_1 = p_2 = 1 p1=p2=1, Punishment factor exp ( 1 − 6 / 2 ) ≈ 0.14 \exp(1-6/2) \approx 0.14 exp(1−6/2)≈0.14 It will reduce BLEU.

BLEU The implementation code of is as follows .

def bleu(pred_seq, label_seq, k): #@save

""" Calculation BLEU"""

pred_tokens, label_tokens = pred_seq.split(' '), label_seq.split(' ')

len_pred, len_label = len(pred_tokens), len(label_tokens)

score = math.exp(min(0, 1 - len_label / len_pred))

for n in range(1, k + 1):

num_matches, label_subs = 0, collections.defaultdict(int)

for i in range(len_label - n + 1):

label_subs[''.join(label_tokens[i:i + n])] += 1

for i in range(len_pred - n + 1):

if label_subs[''.join(pred_tokens[i:i + n])] > 0:

num_matches += 1

label_subs[''.join(pred_tokens[i:i + n])] -= 1

score *= math.pow(num_matches / (len_pred - n + 1), math.pow(0.5, n))

return score

7. With training Seq2Seq The model translates English into French

engs = ['go .', "i lost .", 'he\'s calm .', 'i\'m home .']

fras = ['va !', 'j\'ai perdu .', 'il est calme .', 'je suis chez moi .']

for eng, fra in zip(engs, fras):

translation, attention_weight_seq = predict_seq2seq(

net, eng, src_vocab, tgt_vocab, num_steps, device)

print(f'{

eng} => {

translation}, bleu {

bleu(translation, fra, k=2):.3f}')

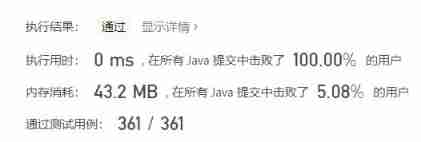

go . => va !, bleu 1.000

i lost . => j'ai perdu ., bleu 1.000

he's calm . => il est paresseux ., bleu 0.658

i'm home . => je suis chez moi de de de de de de, bleu 0.481

3、 ... and 、 summary

- Seq2Seq It's from one sentence to another

- Both encoder and decoder are RNN

- Hide the encoder's last time state to initialize the decoder state to complete information transmission

- Commonly used BLEU To measure the quality of the generated sequence .

边栏推荐

- Log cannot be recorded after log4net is deployed to the server

- QTreeView+自定义Model实现示例

- lolcat

- 智慧路灯杆水库区安全监测应用

- UML sequence diagram [easy to understand]

- Qtreeview+ custom model implementation example

- Web端自动化测试失败原因汇总

- How to write unit test cases

- At the age of 30, I changed to Hongmeng with a high salary because I did these three things

- ASP. Net to access directory files outside the project website

猜你喜欢

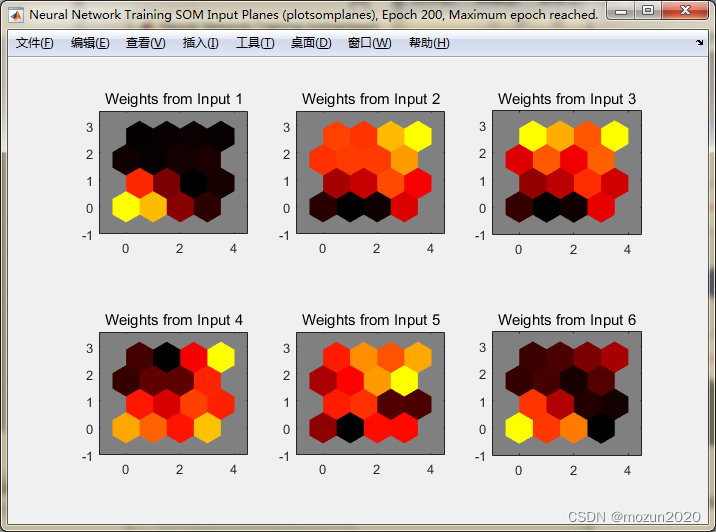

Matlab tips (25) competitive neural network and SOM neural network

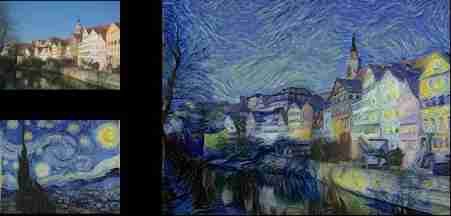

Hands on deep learning (33) -- style transfer

Mmclassification annotation file generation

26. Delete duplicates in the ordered array (fast and slow pointer de duplication)

自动化的优点有哪些?

C # use gdi+ to add text with center rotation (arbitrary angle)

JDBC and MySQL database

Hands on deep learning (36) -- language model and data set

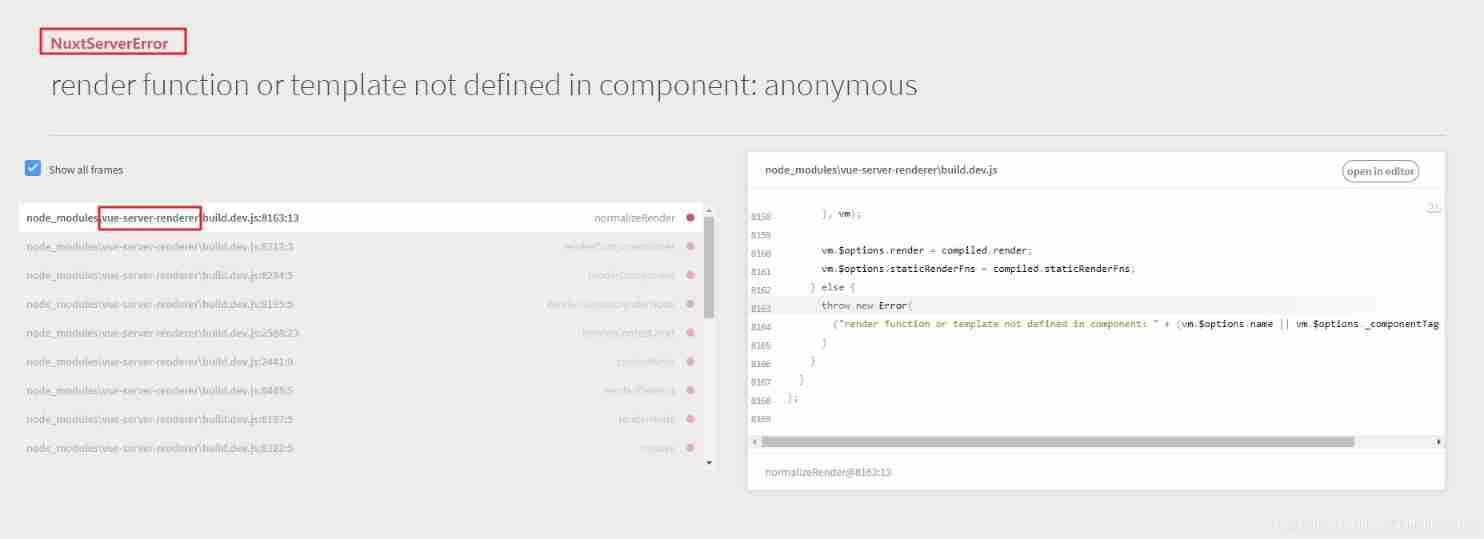

Nuxt reports an error: render function or template not defined in component: anonymous

MySQL foundation 02 - installing MySQL in non docker version

随机推荐

Sort out the power node, Mr. Wang he's SSM integration steps

PMP registration process and precautions

libmysqlclient.so.20: cannot open shared object file: No such file or directory

2022-2028 global tensile strain sensor industry research and trend analysis report

华为联机对战如何提升玩家匹配成功几率

Basic data types in golang

SQL replying to comments

26. Delete duplicates in the ordered array (fast and slow pointer de duplication)

Summary of the most comprehensive CTF web question ideas (updating)

Fatal error in golang: concurrent map writes

Global and Chinese markets of thrombography hemostasis analyzer (TEG) 2022-2028: Research Report on technology, participants, trends, market size and share

2022-2028 global small batch batch batch furnace industry research and trend analysis report

Rules for using init in golang

Golang Modules

自动化的优点有哪些?

Get the source code in the mask with the help of shims

C语言指针面试题——第二弹

Explanation of for loop in golang

Launpad | Basics

Hands on deep learning (36) -- language model and data set