RepVGG paper explanation and model reproduction using PyTorch

2022-07-28 15:11:00 【deephub】

RepVGG: Making VGG-style ConvNets Great Again is a CVPR 2021 paper. As its name suggests, it uses structural re-parameterization to let a VGG-style architecture regain top performance while running faster. This article first walks through the paper in detail and then reproduces the RepVGG model in PyTorch.

Detailed explanation of the paper

1、 Problems with multi-branch models

Speed:

The paper reports that 3×3 conv has roughly 4 times the theoretical computational density of the other operators it is compared with, which shows that theoretical total FLOPs are not a reliable proxy for actual speed across architectures. For example, VGG-16 has 8.4× the FLOPs of EfficientNet-B3 but runs 1.8× faster on a 1080Ti.

Multi-branch topologies are widely adopted in Inception and in automatically generated architectures, which use many small operators instead of a few large ones.

The fragmentation of NASNet-A is 13, which is unfriendly to devices with strong parallel computing power such as GPUs.

Memory:

Multi-branch topologies are memory-inefficient, because the result of every branch has to be kept until the addition or concatenation, which significantly raises peak memory usage. The input to a residual block, for instance, has to be kept until the addition. Assuming the block preserves the feature-map size, the peak memory occupied is 2× the size of the input.
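To make the 2× figure concrete, here is a small back-of-the-envelope sketch in plain Python. The helper name `feature_map_bytes` and the example shape are illustrative assumptions, not values from the paper, and the sketch only counts float32 activations (weights and framework overhead are ignored).

```python
def feature_map_bytes(n: int, c: int, h: int, w: int, bytes_per_value: int = 4) -> int:
    """Size in bytes of one N x C x H x W activation tensor (float32 by default)."""
    return n * c * h * w * bytes_per_value


# Hypothetical example: batch of 32, 64 channels, 56 x 56 feature map.
one_map = feature_map_bytes(32, 64, 56, 56)

# Single-path layer, following the paper's accounting: about one feature map
# has to be kept alive at a time.
plain_peak = one_map

# Residual block that keeps the feature-map size: the input must stay alive
# until the addition, next to the result of the other branch.
residual_peak = 2 * one_map

print(f"one feature map: {one_map / 2**20:.1f} MiB")
print(f"plain peak:      {plain_peak / 2**20:.1f} MiB")
print(f"residual peak:   {residual_peak / 2**20:.1f} MiB")
```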

2、RepVGG

(a) ResNet: multi-branch topology during both training and inference, so it is slow and memory-inefficient.

(b) RepVGG training: multi-branch topology during training only.

(c) RepVGG inference: single-path topology at inference time, so inference is fast.

One explanation for the success of multi-branch architectures such as ResNet is that the branches turn the model into an implicit ensemble of many shallower models. Specifically, with n blocks the model can be interpreted as an ensemble of 2^n models, because each block splits the flow into two paths. Multi-branch topologies have drawbacks at inference time but help training, so RepVGG uses multiple branches to ensemble many models at training time only.

RepVGG uses ResNet-like identity branches (only when the dimensions match; the input is passed through unchanged) and 1×1 convolution branches, so the training-time information flow of a building block is y = x + g(x) + f(x), as in (b) above. With n such blocks, the model becomes an ensemble of 3^n sub-models.
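The 2^n and 3^n counts can be checked by brute force: picking one branch per block and enumerating all combinations. The sketch below is only an illustration; the function name `count_paths` and the choice of n = 4 are assumptions made for the example.

```python
from itertools import product


def count_paths(n_blocks: int, branches_per_block: int) -> int:
    """Count the distinct paths obtained by picking one branch in every block."""
    choices = range(branches_per_block)
    return sum(1 for _ in product(choices, repeat=n_blocks))


n = 4  # illustrative number of blocks
resnet_paths = count_paths(n, 2)  # two paths per block
repvgg_paths = count_paths(n, 3)  # identity, 1x1 or 3x3 branch in each block

print(resnet_paths, repvgg_paths)
```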

Re-parameterization for the plain inference-time model:

BN is used in each branch before the addition.

Let W(3), of size C2×C1×3×3, denote the kernel of the 3×3 conv layer with C1 input channels and C2 output channels, and let W(1), of size C2×C1, denote the kernel of the 1×1 branch.

μ(3), σ(3), γ(3), β(3) are the accumulated mean, standard deviation, learned scale factor and bias of the BN layer that follows the 3×3 conv.

Similarly, μ(1), σ(1), γ(1), β(1) are the parameters of the BN after the 1×1 conv, and μ(0), σ(0), γ(0), β(0) are those of the BN in the identity branch.

Let M(1), of size N×C1×H1×W1, and M(2), of size N×C2×H2×W2, be the input and output respectively, and let * be the convolution operator.

If C1 = C2, H1 = H2 and W1 = W2, the output M(2) is the sum of the three branches, each passed through its own BN.

Here bn is the inference-time BN function: for each channel it subtracts the accumulated mean, multiplies by γ/σ and adds β.

Merging BN and conv: first, every BN and the conv layer before it are converted into a single conv with a bias vector. Let {W', b'} be the converted kernel and bias.

The inference-time bn of a convolution then equals a convolution with W' plus the bias b'.
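Written out in the notation defined above, these relations read as follows. This is a transcription sketch of the paper's equations as I understand them, with i indexing the output channel (1 ≤ i ≤ C2):

```latex
% Training-time output of one block (three branches, each followed by BN)
M^{(2)} = \mathrm{bn}(M^{(1)} * W^{(3)}, \mu^{(3)}, \sigma^{(3)}, \gamma^{(3)}, \beta^{(3)})
        + \mathrm{bn}(M^{(1)} * W^{(1)}, \mu^{(1)}, \sigma^{(1)}, \gamma^{(1)}, \beta^{(1)})
        + \mathrm{bn}(M^{(1)}, \mu^{(0)}, \sigma^{(0)}, \gamma^{(0)}, \beta^{(0)})

% Inference-time BN, applied per channel i
\mathrm{bn}(M, \mu, \sigma, \gamma, \beta)_{:,i,:,:}
    = (M_{:,i,:,:} - \mu_i)\,\frac{\gamma_i}{\sigma_i} + \beta_i

% Folding BN into the preceding conv
W'_{i,:,:,:} = \frac{\gamma_i}{\sigma_i}\, W_{i,:,:,:}, \qquad
b'_i = -\frac{\mu_i \gamma_i}{\sigma_i} + \beta_i

% so that
\mathrm{bn}(M * W, \mu, \sigma, \gamma, \beta)_{:,i,:,:} = (M * W')_{:,i,:,:} + b'_i
```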

Merging all branches: this transformation also applies to the identity branch, because an identity can be viewed as a 1×1 conv whose kernel is the identity matrix. After these transformations we have one 3×3 kernel, two 1×1 kernels and three bias vectors. The final bias is the sum of the three bias vectors. The final 3×3 kernel is obtained by adding the 1×1 kernels onto the central point of the 3×3 kernel, which is implemented by zero-padding the two 1×1 kernels to 3×3 and adding the three kernels up.
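The merge can be verified numerically even without PyTorch. The following is a minimal single-channel sketch in plain Python, assuming the BN of every branch has already been folded into a weight and a bias; all helper names and numeric values are made up for the illustration.

```python
from typing import List

Matrix = List[List[float]]


def conv3x3_same(x: Matrix, k: Matrix, bias: float) -> Matrix:
    """Single-channel 3x3 cross-correlation with zero padding of 1."""
    h, w = len(x), len(x[0])
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(3):
                for dj in range(3):
                    ii, jj = i + di - 1, j + dj - 1
                    if 0 <= ii < h and 0 <= jj < w:
                        out[i][j] += k[di][dj] * x[ii][jj]
    return out


def pad_1x1_to_3x3(weight: float) -> Matrix:
    """Place a 1x1 kernel at the centre of an all-zero 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, weight, 0.0], [0.0, 0.0, 0.0]]


# Illustrative values standing for branches whose BN is already folded in.
x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3, b3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]], 0.05
w1, b1 = 0.7, -0.02  # 1x1 branch
w0, b0 = 1.3, 0.01   # identity branch: gamma/sigma acts as a 1x1 kernel

# Three-branch output: 3x3 conv + 1x1 conv + scaled identity.
y3 = conv3x3_same(x, k3, b3)
branches = [
    [y3[i][j] + (w1 * x[i][j] + b1) + (w0 * x[i][j] + b0) for j in range(3)]
    for i in range(3)
]

# Single fused kernel: add the padded 1x1 kernels to the 3x3 kernel, sum the biases.
p1, p0 = pad_1x1_to_3x3(w1), pad_1x1_to_3x3(w0)
k_fused = [[k3[i][j] + p1[i][j] + p0[i][j] for j in range(3)] for i in range(3)]
fused = conv3x3_same(x, k_fused, b3 + b1 + b0)

max_diff = max(abs(branches[i][j] - fused[i][j]) for i in range(3) for j in range(3))
print(f"max abs difference: {max_diff:.2e}")
```

The difference is at floating-point rounding level, which is the same property the PyTorch implementation later in the article relies on.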

The RepVGG architecture is as follows:

The 3×3 layers are divided into 5 stages, and the first layer of each stage down-samples with stride = 2. For image classification, global average pooling followed by a fully connected layer is used as the classification head. For other tasks, task-specific heads can be used on the features produced by any layer (segmentation and detection, for example, need features from multiple levels).

With 1, 2, 4, 14 and 1 layers in the five stages, the resulting network is named RepVGG-A.

The deeper RepVGG-B has 2 more layers in each of stages 2, 3 and 4.

Different variants are produced with different multipliers a and b: a scales the first four stages and b the last stage, usually with b > a. To further reduce parameters and computation, groupwise 3×3 conv layers are interleaved with dense ones, trading some accuracy for efficiency. Specifically, the number of groups g is set for the 3rd, 5th, 7th, …, 21st layers of RepVGG-A and for the additional 23rd, 25th and 27th layers of RepVGG-B. For simplicity, g is set globally to 1, 2 or 4 for these layers, without layer-wise tuning.
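The layer layout can be written down as a short configuration sketch. It assumes, as in the paper, that the 1/2/4/14/1 layout is RepVGG-A and that RepVGG-B adds two layers to each of stages 2, 3 and 4; the names `STAGE_DEPTHS`, `grouped_layer_indices` and `groups_per_layer` are hypothetical helpers introduced only for this illustration.

```python
from typing import Dict, List

# Layers per stage (assumption stated above: "A" is the 1/2/4/14/1 layout).
STAGE_DEPTHS: Dict[str, List[int]] = {
    "A": [1, 2, 4, 14, 1],
    "B": [1, 4, 6, 16, 1],  # two more layers in stages 2, 3 and 4
}


def grouped_layer_indices(variant: str) -> List[int]:
    """1-based indices of the 3x3 layers that use groupwise convolution."""
    indices = list(range(3, 22, 2))  # 3, 5, ..., 21
    if variant == "B":
        indices += [23, 25, 27]      # extra layers that only RepVGG-B has
    return indices


def groups_per_layer(variant: str, g: int) -> List[int]:
    """Groups setting for every 3x3 layer: g on the interleaved layers, 1 elsewhere."""
    total = sum(STAGE_DEPTHS[variant])
    grouped = set(grouped_layer_indices(variant))
    return [g if layer in grouped else 1 for layer in range(1, total + 1)]


for variant in ("A", "B"):
    print(variant, sum(STAGE_DEPTHS[variant]), groups_per_layer(variant, g=2))
```

Note that the layer totals (22 for A, 28 for B) are consistent with the highest grouped layer indices mentioned in the text (21 and 27).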

3、 Experimental results

RepVGG-A0 is better than ResNet-18 by 1.25% in accuracy and 33% in speed, RepVGG-A1 is better than ResNet-34 by 0.29%/64%, and RepVGG-A2 is better than ResNet-50 by 0.17%/83%.

Interleaving groupwise layers (g2/g4) speeds the RepVGG models up further with a reasonable drop in accuracy: RepVGG-B1g4 is better than ResNet-101 by 0.37%/101%, and RepVGG-B1g2 is 2.66× as fast as ResNet-152 at the same accuracy.

Although the number of parameters is not the primary concern, all of the RepVGG models above use their parameters more efficiently than the ResNets.

Compared with the classic VGG-16, RepVGG-B2 has only 58% of the parameters, runs 10% faster and is 6.57% more accurate.

Trained for 200 epochs, the RepVGG models reach above 80% accuracy. RepVGG-A2 outperforms EfficientNet-B0 by 1.37%/59%, and RepVGG-B1 outperforms RegNetX-3.2GF by 0.39% while also running slightly faster.

4、 Ablation study

After removing both branches, the training-time model degrades into an ordinary plain model, and the accuracy is only 72.39%.

Keeping only the identity branch or only the 1×1 conv branch gives 74.79% and 73.15% respectively.

The full-featured RepVGG-B0 model reaches 75.14%, which is 2.75% higher than the plain model.

Segmentation:

The figure from the paper shows results with a modified PSPNet framework: with a RepVGG backbone, PSPNet runs much faster than with a ResNet-50/101 backbone, and the RepVGG backbones also outperform ResNet-50 and ResNet-101.

Now let's implement it in PyTorch.

Implementing RepVGG in PyTorch

1、 Single-branch and multi-branch models

To implement RepVGG we first need to understand multi-branch models. Multi-branch means that the input passes through different layers and the results are then combined in some way (usually by addition).

As the paper points out, a multi-branch model behaves like an implicit ensemble of many shallower models. More specifically, the model can be interpreted as an ensemble of 2^n models, because each block splits the flow into two paths.

Multi-branch models are slower and consume more memory than single-branch ones. Let's write a classic ResNet block to see why:

import torch
from torch import nn, Tensor
from torchvision.ops import Conv2dNormActivation
from typing import Dict, List

torch.manual_seed(0)


class ResNetBlock(nn.Module):
    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        # main path: two 3x3 conv-bn layers, ReLU only after the first one
        self.weight = nn.Sequential(
            Conv2dNormActivation(
                in_channels, out_channels, kernel_size=3, stride=stride
            ),
            Conv2dNormActivation(
                out_channels, out_channels, kernel_size=3, activation_layer=None
            ),
        )
        # a 1x1 projection is needed whenever the shape changes
        # (different channels or stride > 1), otherwise plain identity
        self.shortcut = (
            Conv2dNormActivation(
                in_channels,
                out_channels,
                kernel_size=1,
                stride=stride,
                activation_layer=None,
            )
            if in_channels != out_channels or stride != 1
            else nn.Identity()
        )
        self.act = nn.ReLU(inplace=True)

    def forward(self, x):
        res = self.shortcut(x)  # <- 2x memory
        x = self.weight(x)
        x += res
        x = self.act(x)  # <- 1x memory
        return x

Storing the residual doubles the memory consumption, as illustrated by the figure from the paper.

The multi-branch structure is only useful during training. So if we can remove it at inference time, we improve both the speed and the memory consumption of the model. Let's see how to do that in code:

2、 From multi-branch to single branch

Consider the following case: two branches, each made of a single 3x3 conv.

class TwoBranches(nn.Module):
    def __init__(self, in_channels: int, out_channels: int):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3)
        self.conv2 = nn.Conv2d(in_channels, out_channels, kernel_size=3)

    def forward(self, x):
        x1 = self.conv1(x)
        x2 = self.conv2(x)
        return x1 + x2

Let's look at the result:

two_branches = TwoBranches(8, 8)
x = torch.randn((1, 8, 7, 7))
two_branches(x).shape

torch.Size([1, 8, 5, 5])

Now let's create a single conv, which we call conv_fused, such that conv_fused(x) = conv1(x) + conv2(x). We can simply sum the weights and the biases of the two convs; this works because convolution is a linear operation.

conv1 = two_branches.conv1
conv2 = two_branches.conv2

conv_fused = nn.Conv2d(conv1.in_channels, conv1.out_channels, kernel_size=conv1.kernel_size)
conv_fused.weight = nn.Parameter(conv1.weight + conv2.weight)
conv_fused.bias = nn.Parameter(conv1.bias + conv2.bias)

# check they give the same output
assert torch.allclose(two_branches(x), conv_fused(x), atol=1e-5)

Let's measure its speed!

from time import perf_counter

two_branches.to("cuda")
conv_fused.to("cuda")

with torch.no_grad():
    x = torch.randn((4, 8, 7, 7), device=torch.device("cuda"))

    # CUDA kernels run asynchronously: synchronize before reading the clock
    torch.cuda.synchronize()
    start = perf_counter()
    two_branches(x)
    torch.cuda.synchronize()
    print(f"conv1(x) + conv2(x) tooks {perf_counter() - start:.6f}s")

    start = perf_counter()
    conv_fused(x)
    torch.cuda.synchronize()
    print(f"conv_fused(x) tooks {perf_counter() - start:.6f}s")

Almost twice as fast:

conv1(x) + conv2(x) tooks 0.000421s
conv_fused(x) tooks 0.000215s
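A single-run timing like the one above is noisy, so a more robust comparison uses a few warm-up runs and the median of several repeats. The helper below is a minimal, framework-agnostic sketch; `benchmark` is a hypothetical name, and the workload is a stand-in so that the example runs without a GPU.

```python
from statistics import median
from time import perf_counter
from typing import Callable, List


def benchmark(fn: Callable[[], object], warmup: int = 3, repeats: int = 20) -> float:
    """Return the median wall-clock time of fn() in seconds.

    For CUDA work, fn itself should end with torch.cuda.synchronize(),
    otherwise only the kernel launch is timed.
    """
    for _ in range(warmup):  # warm-up runs are not measured
        fn()
    timings: List[float] = []
    for _ in range(repeats):
        start = perf_counter()
        fn()
        timings.append(perf_counter() - start)
    return median(timings)


# Stand-in workload so the sketch runs anywhere.
elapsed = benchmark(lambda: sum(i * i for i in range(10_000)))
print(f"median: {elapsed:.6f}s")
```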

3、 Fusing Conv and BatchNorm

BatchNorm is used as the layer right after each conv block. The paper fuses the two, i.e. conv_fused(x) = batchnorm(conv(x)).

The two formulas from the paper that explain this are shown here as screenshots for easy viewing:

The code looks like this:

def get_fused_bn_to_conv_state_dict(
    conv: nn.Conv2d, bn: nn.BatchNorm2d
) -> Dict[str, Tensor]:
    # in the paper, weights is gamma and bias is beta
    bn_mean, bn_var, bn_gamma, bn_beta = (
        bn.running_mean,
        bn.running_var,
        bn.weight,
        bn.bias,
    )
    # we need the std!
    bn_std = (bn_var + bn.eps).sqrt()
    # eq (3)
    conv_weight = nn.Parameter((bn_gamma / bn_std).reshape(-1, 1, 1, 1) * conv.weight)
    # still eq (3)
    conv_bias = nn.Parameter(bn_beta - bn_mean * bn_gamma / bn_std)
    return {"weight": conv_weight, "bias": conv_bias}

Let's see how it works:

conv_bn = nn.Sequential(
    nn.Conv2d(8, 8, kernel_size=3, bias=False),
    nn.BatchNorm2d(8)
)

torch.nn.init.uniform_(conv_bn[1].weight)
torch.nn.init.uniform_(conv_bn[1].bias)

with torch.no_grad():
    # be sure to switch to eval mode!!
    conv_bn = conv_bn.eval()
    conv_fused = nn.Conv2d(conv_bn[0].in_channels,
                           conv_bn[0].out_channels,
                           kernel_size=conv_bn[0].kernel_size)

    conv_fused.load_state_dict(get_fused_bn_to_conv_state_dict(conv_bn[0], conv_bn[1]))

    x = torch.randn((1, 8, 7, 7))

    assert torch.allclose(conv_bn(x), conv_fused(x), atol=1e-5)

This is how the paper fuses the Conv2d and BatchNorm2d layers.
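The same identity can be checked by hand on a single channel with non-trivial running statistics. This is a minimal scalar sketch in plain Python, treating the conv as a single multiplication; all numeric values are arbitrary and chosen only for the illustration.

```python
import math

# Illustrative per-channel values; none of them come from the paper.
w, x = 0.8, 1.5                  # conv weight and input (one channel, 1x1 conv)
mean, var, eps = 0.3, 2.0, 1e-5  # BN running statistics
gamma, beta = 1.2, -0.4          # BN learned scale and bias

std = math.sqrt(var + eps)

# conv (no bias) followed by inference-time BN
bn_of_conv = (w * x - mean) * gamma / std + beta

# fused conv: rescaled weight plus a bias
w_fused = gamma / std * w
b_fused = beta - mean * gamma / std
fused = w_fused * x + b_fused

print(bn_of_conv, fused)
```

Both expressions expand to the same formula, so the two printed numbers agree up to floating-point rounding.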

The goal of the paper is now clear: fuse the whole model into a single data flow (with no branches) to make the network faster!

The authors propose a new RepVGG block. Like a ResNet block it has a shortcut (here a 1x1 conv-bn), and in addition it has an identity branch when the shapes match.

Following the figure from the paper, the PyTorch code is as follows:

class RepVGGBlock(nn.Module):
    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        # main branch: 3x3 conv-bn
        self.block = Conv2dNormActivation(
            in_channels,
            out_channels,
            kernel_size=3,
            padding=1,
            bias=False,
            stride=stride,
            activation_layer=None,
            # the original model may also have groups > 1
        )
        # shortcut branch: 1x1 conv-bn
        self.shortcut = Conv2dNormActivation(
            in_channels,
            out_channels,
            kernel_size=1,
            stride=stride,
            activation_layer=None,
        )
        # identity branch (bn only): valid only when input and output shapes match
        self.identity = (
            nn.BatchNorm2d(out_channels)
            if in_channels == out_channels and stride == 1
            else None
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        res = x  # <- 2x memory
        x = self.block(x)
        x += self.shortcut(res)
        if self.identity is not None:
            x += self.identity(res)
        x = self.relu(x)  # <- 1x memory
        return x

4、 Re-parameterization

We have one 3x3 conv-bn, one 1x1 conv-bn and (sometimes) one batchnorm (the identity branch). To fuse them, we create a single conv_fused such that conv_fused(x) = 3x3conv-bn(x) + 1x1conv-bn(x) + bn(x) or, if there is no identity branch, conv_fused(x) = 3x3conv-bn(x) + 1x1conv-bn(x).

To create this conv_fused, we need to:

- fuse 3x3conv-bn(x) into a single 3x3conv

- fuse 1x1conv-bn(x), then convert it to a 3x3conv

- convert the identity BN into a 3x3conv

- add all three 3x3convs together

The following figure from the paper summarizes this:

The first step is easy: we can use get_fused_bn_to_conv_state_dict on RepVGGBlock.block (the main 3x3 conv-bn).

The second step is similar: use get_fused_bn_to_conv_state_dict on RepVGGBlock.shortcut (the 1x1 conv-bn), then, as the paper describes, zero-pad the fused 1x1 kernel by 1 on each side of each spatial dimension to form a 3x3 kernel.

The identity BN is trickier. The trick from the paper is to create a 3x3 conv that acts as the identity function and then fuse it with the identity BN using get_fused_bn_to_conv_state_dict. Such a conv is obtained by setting the center weight of the kernel to 1 for the matching channel.

A conv weight has shape (out_channels, in_channels, kernel_h, kernel_w). To create an identity conv, i.e. conv(x) = x, we zero the whole weight and set the center element weight[i, i, 1, 1] to 1 for every channel i. The code is as follows:

with torch.no_grad():
    x = torch.randn((1,2,3,3))
    identity_conv = nn.Conv2d(2,2,kernel_size=3, padding=1, bias=False)
    identity_conv.weight.zero_()
    print(identity_conv.weight.shape)

    in_channels = identity_conv.in_channels
    for i in range(in_channels):
        identity_conv.weight[i, i % in_channels, 1, 1] = 1

    print(identity_conv.weight)

    out = identity_conv(x)
    assert torch.allclose(x, out)

Result:

torch.Size([2, 2, 3, 3])
Parameter containing:
tensor([[[[0., 0., 0.],
          [0., 1., 0.],
          [0., 0., 0.]],

         [[0., 0., 0.],
          [0., 0., 0.],
          [0., 0., 0.]]],


        [[[0., 0., 0.],
          [0., 0., 0.],
          [0., 0., 0.]],

         [[0., 0., 0.],
          [0., 1., 0.],
          [0., 0., 0.]]]], requires_grad=True)

We have created a conv that acts like an identity function. Putting everything together gives the re-parameterization described in the paper.

# no_grad is required: the in-place updates below act on leaf parameters
@torch.no_grad()
def get_fused_conv_state_dict_from_block(block: RepVGGBlock) -> Dict[str, Tensor]:
    fused_block_conv_state_dict = get_fused_bn_to_conv_state_dict(
        block.block[0], block.block[1]
    )

    if block.shortcut:
        # fuse the 1x1 shortcut
        conv_1x1_state_dict = get_fused_bn_to_conv_state_dict(
            block.shortcut[0], block.shortcut[1]
        )
        # we zero-pad the 1x1 to a 3x3
        conv_1x1_state_dict["weight"] = torch.nn.functional.pad(
            conv_1x1_state_dict["weight"], [1, 1, 1, 1]
        )
        fused_block_conv_state_dict["weight"] += conv_1x1_state_dict["weight"]
        fused_block_conv_state_dict["bias"] += conv_1x1_state_dict["bias"]

    if block.identity is not None:
        # create our identity 3x3 conv kernel
        identify_conv = nn.Conv2d(
            block.block[0].in_channels,
            block.block[0].in_channels,
            kernel_size=3,
            bias=True,
            padding=1,
        ).to(block.block[0].weight.device)
        # set them to zero!
        identify_conv.weight.zero_()
        # set the middle element to one for the right channel
        in_channels = identify_conv.in_channels
        for i in range(identify_conv.in_channels):
            identify_conv.weight[i, i % in_channels, 1, 1] = 1
        # fuse the 3x3 identity
        identity_state_dict = get_fused_bn_to_conv_state_dict(
            identify_conv, block.identity
        )
        fused_block_conv_state_dict["weight"] += identity_state_dict["weight"]
        fused_block_conv_state_dict["bias"] += identity_state_dict["bias"]

    fused_conv_state_dict = {
        k: nn.Parameter(v) for k, v in fused_block_conv_state_dict.items()
    }

    return fused_conv_state_dict

Finally, let's define a RepVGGFastBlock. It is composed of just a conv + relu:

class RepVGGFastBlock(nn.Sequential):
    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        self.conv = nn.Conv2d(
            in_channels, out_channels, kernel_size=3, stride=stride, padding=1
        )
        self.relu = nn.ReLU(inplace=True)

And add a to_fast method to RepVGGBlock to quickly create the corresponding RepVGGFastBlock:

class RepVGGBlock(nn.Module):
    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        # main branch: 3x3 conv-bn
        self.block = Conv2dNormActivation(
            in_channels,
            out_channels,
            kernel_size=3,
            padding=1,
            bias=False,
            stride=stride,
            activation_layer=None,
            # the original model may also have groups > 1
        )
        # shortcut branch: 1x1 conv-bn
        self.shortcut = Conv2dNormActivation(
            in_channels,
            out_channels,
            kernel_size=1,
            stride=stride,
            activation_layer=None,
        )
        # identity branch (bn only): valid only when input and output shapes match
        self.identity = (
            nn.BatchNorm2d(out_channels)
            if in_channels == out_channels and stride == 1
            else None
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        res = x  # <- 2x memory
        x = self.block(x)
        x += self.shortcut(res)
        if self.identity is not None:
            x += self.identity(res)
        x = self.relu(x)  # <- 1x memory
        return x

    def to_fast(self) -> RepVGGFastBlock:
        # fuse the three branches into the weights of a single 3x3 conv
        fused_conv_state_dict = get_fused_conv_state_dict_from_block(self)
        fast_block = RepVGGFastBlock(
            self.block[0].in_channels,
            self.block[0].out_channels,
            stride=self.block[0].stride,
        )
        fast_block.conv.load_state_dict(fused_conv_state_dict)
        return fast_block

5、RepVGG

Let's define RepVGGStage (a collection of blocks) and RepVGG, which gets a switch_to_fast method:

class RepVGGStage(nn.Sequential):
    def __init__(
        self,
        in_channels: int,
        out_channels: int,
        depth: int,
    ):
        super().__init__(
            RepVGGBlock(in_channels, out_channels, stride=2),
            *[RepVGGBlock(out_channels, out_channels) for _ in range(depth - 1)],
        )


class RepVGG(nn.Sequential):
    def __init__(self, widths: List[int], depths: List[int], in_channels: int = 3):
        super().__init__()
        in_out_channels = zip(widths, widths[1:])

        self.stages = nn.Sequential(
            RepVGGStage(in_channels, widths[0], depth=1),
            *[
                RepVGGStage(in_channels, out_channels, depth)
                for (in_channels, out_channels), depth in zip(in_out_channels, depths)
            ],
        )

        # omit classification head for simplicity

    def switch_to_fast(self):
        for stage in self.stages:
            for i, block in enumerate(stage):
                stage[i] = block.to_fast()
        return self
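To see which stages this constructor builds for a given configuration, the pairing logic can be replayed without PyTorch. The sketch below mirrors the two zip calls above; `stage_specs` is a hypothetical helper, and the depths are illustrative values rather than a configuration from the paper.

```python
from typing import List, Tuple


def stage_specs(
    widths: List[int], depths: List[int], in_channels: int = 3
) -> List[Tuple[int, int, int]]:
    """(in_channels, out_channels, depth) of every stage, following the constructor logic."""
    specs = [(in_channels, widths[0], 1)]  # first stage: a single block
    in_out_channels = zip(widths, widths[1:])
    for (c_in, c_out), depth in zip(in_out_channels, depths):
        specs.append((c_in, c_out, depth))
    return specs


specs = stage_specs(widths=[64, 128, 256, 512], depths=[2, 2, 2, 2])
for spec in specs:
    print(spec)
```

Because zip stops at the shorter sequence, four widths produce one single-block stage plus three stages that consume the first three depths; with equal-length lists the last depth is left unused.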

That's it. Now let's test it.

6、 Model test

A benchmark is implemented in benchmark.py. It runs the models on a GTX 1080 Ti with different batch sizes, and these are the results:

The model has four stages with two layers each, and the widths are 64, 128, 256, 512.

In the paper, these values are scaled by certain factors (called a and b) and grouped convolutions are used. Since I am more interested in the re-parameterization itself, I skip this here; it is a hyper-parameter tuning step that could be handled with a hyper-parameter search.

The re-parameterized model is on a different time scale from the plain multi-branch model.

For batch_size=128, the default model (multi-branch) takes 1.45 seconds, while the re-parameterized model (fast) takes only 0.0134 seconds: a 108× speed-up.

Summary

This article first introduced the RepVGG paper in detail, then walked step by step through building RepVGG, focusing on how the weights are re-parameterized, and reproduced the model in PyTorch. The re-parameterization technique is a bit like burning the bridge after crossing the river: the multi-branch structure is exploited during training and then discarded, so the single-path model gets the benefit of the branches for free at inference time. The same trick can also be transplanted to other architectures.

The paper is available here:

http://arxiv.org/abs/2101.03697

The code is here :

https://avoid.overfit.cn/post/f9263685607b40df80e5c4f949a28b42

Thank you for reading !

边栏推荐

- Mlx90640 infrared thermal imager sensor module development notes (VIII)

- VTK annotation class widget vtkborderwidget

- Multi merchant mall system function disassembly lecture 17 - platform side order list

- SQL learning

- 21、 TF coordinate transformation (I): coordinate MSG message

- MITK create module

- Wonderful frog -- how simple can it be to abandon the float and use the navigation bar set by the elastic box

- SystemVerilog

- Development status of security and privacy computing in China

- 10、 C enum enumeration

猜你喜欢

6、 C language circular statement

Introduction to MITK

Instant experience | further improve application device compatibility with cts-d

![UTF-8、UTF-16 和 UTF-32 字符编码之间的区别?[图文详解]](/img/a9/336390db64d871fa1655800c1e0efc.png)

UTF-8、UTF-16 和 UTF-32 字符编码之间的区别?[图文详解]

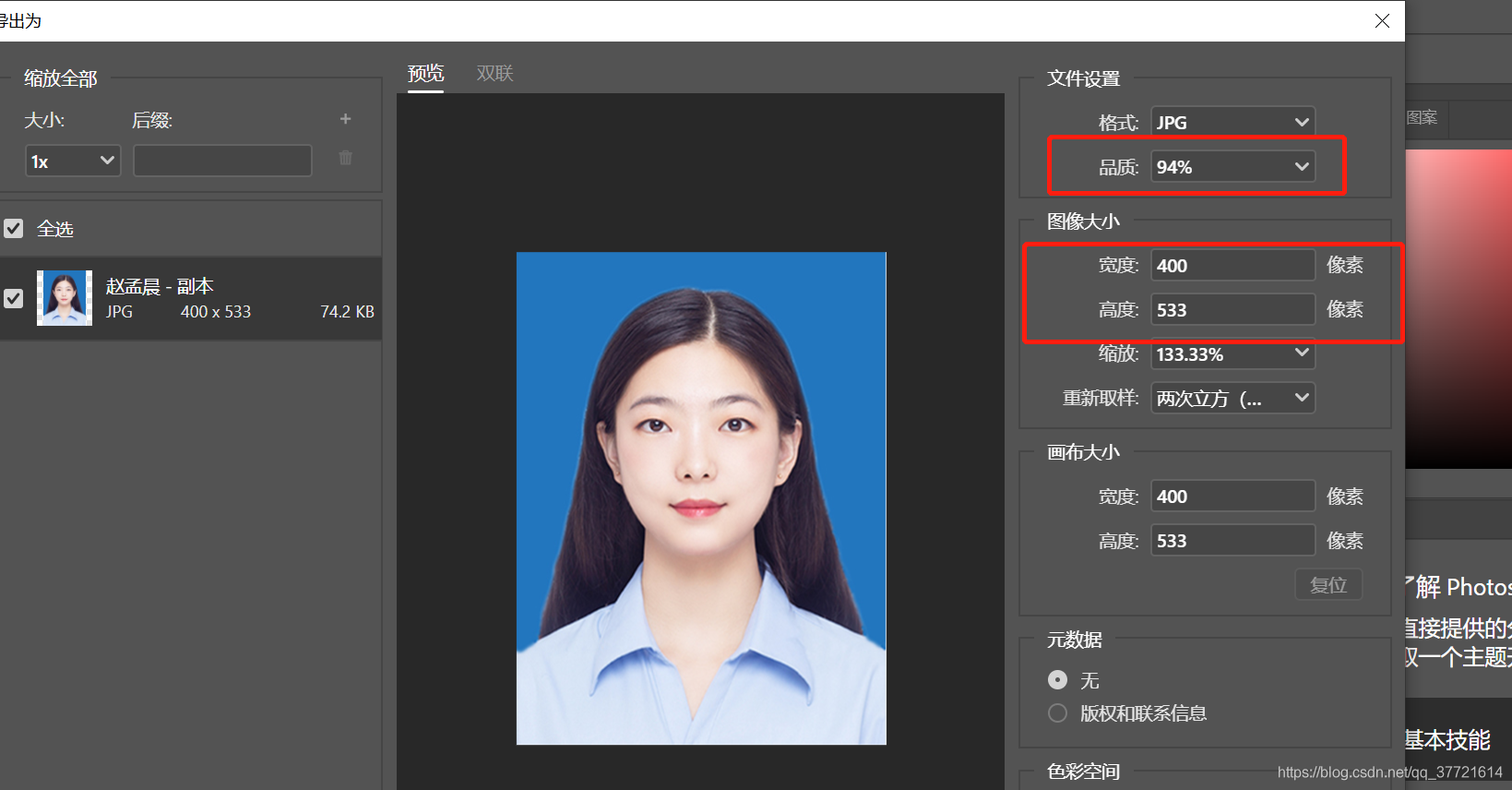

PS modify the length and width pixels and file size of photos

iPhone苹果手机上一些不想让他人看到的APP应用图标怎么设置手机桌面上的APP应用设置隐藏不让显示在手机桌面隐藏后自己可以正常使用的方法?

Application of edge technology and applet container in smart home

C language related programming exercises

![What is the difference between UTF-8, utf-16 and UTF-32 character encoding? [graphic explanation]](/img/a9/336390db64d871fa1655800c1e0efc.png)

What is the difference between UTF-8, utf-16 and UTF-32 character encoding? [graphic explanation]

VTK notes - picker picker summary

随机推荐

NCBI experience accumulation

Privacy computing summary

Machine learning related concepts

URP下使用GL进行绘制方法

Shell command

@DS('slave') 多数据源兼容事务问题解决方案

JS study notes 18-23

Mysql易错知识点整理(待更新)

16、 Launch file label of ROS (II)

经典Dijkstra与最长路

Reptile: from introduction to imprisonment (I) -- Concept

9、 C array explanation

19、 ROS parameter name setting

手摸手实现Canal如何接入MySQL实现数据写操作监听

云计算需要考虑的安全技术列举

Keras reported an error using tensorboard: cannot stop profiling

【游戏测试工程师】初识游戏测试—你了解它吗?

The second 1024, come on!

Examples of Pareto optimality and Nash equilibrium

Introduction to mqtt protocol