
CVPR2022 | Future Transformer for Long-term Action Anticipation

2022-06-29 13:09:00 [CV Technical Guide (WeChat official account)]

Preface: This paper proposes an end-to-end attention model for action anticipation, called Future Transformer (FUTR). The model applies global attention over all input frames and output tokens to predict a minutes-long sequence of future actions. Unlike previous autoregressive models, it learns to predict the whole sequence of future actions with parallel decoding, which makes inference for long-term anticipation both more accurate and faster.


Paper: Future Transformer for Long-term Action Anticipation

Paper link: http://arxiv.org/pdf/2205.14022

Code: not yet released

Background

Long-term action anticipation in video has recently become a fundamental task for advanced intelligent systems. It aims to predict a sequence of future actions from a limited observation of past actions in a video. Although research on action anticipation is growing, most recent work focuses on predicting a single action a few seconds ahead. In contrast, long-term action anticipation aims to predict a sequence of multiple actions over the next few minutes. The task is challenging because it requires understanding long-term dependencies between past and future actions.

Recent long-term anticipation methods encode the observed video frames into condensed vectors and decode them with a recurrent neural network (RNN), predicting the future action sequence autoregressively. Despite impressive performance on standard benchmarks, they have the following limitations:

1. The encoder over-compresses the input frame features and therefore cannot preserve the fine-grained temporal relationships between observed frames.

2. The RNN decoder is limited in modeling long-term dependencies in the input sequence and in capturing the global relationships between past and future actions.

3. Autoregressive sequence prediction can accumulate errors from earlier outputs and increases inference time.

To address these limitations, the authors introduce FUTR, an end-to-end attention neural network for long-term action anticipation. It effectively captures long-term relationships over the whole action sequence, covering not only the observed past actions but also the potential future actions. As shown in Figure 1, FUTR has an encoder-decoder structure: the encoder learns fine-grained, long-range temporal relationships among the observed past frames, and the decoder learns global relationships between the upcoming future actions and the observed features produced by the encoder.

Figure 1: FUTR

Contributions

1. An end-to-end attention neural network, called FUTR, is introduced; it effectively exploits fine-grained features and global interactions for long-term action anticipation.

2. A sequence of future actions is predicted with parallel decoding, enabling accurate and fast inference.

3. An integrated model is developed that learns distinctive feature representations by segmenting past actions in the encoder and anticipating future actions in the decoder.

4. The proposed approach sets a new state of the art on the standard long-term action anticipation benchmarks, Breakfast and 50 Salads.

Related work

1. Action anticipation

Action anticipation aims to predict future actions from a limited observation of a video. With the emergence of large-scale datasets, many methods have been proposed for next-action anticipation, which predicts a single future action a few seconds ahead. More recently, long-term action anticipation has been proposed, which predicts a sequence of actions far into the future from long-range video.

Farha et al. first introduced the long-term action anticipation task and proposed two models, an RNN and a CNN, to tackle it. Farha and Gall introduced a GRU network that models the uncertainty of future activities autoregressively; at test time it predicts multiple possible sequences of future actions. Ke et al. introduced a model that predicts the action at a specific future timestamp without predicting the intermediate actions, and showed that iteratively predicting intermediate actions leads to error accumulation. Earlier methods typically take the action labels of the observed frames as input, extracting them with an action segmentation model. In contrast, recent studies use visual features as input. Farha et al. proposed an end-to-end model for long-term action anticipation that applies an action segmentation model to the visual features during training. They also introduced a GRU model with cycle consistency between past and future actions. Sener et al. proposed a multi-scale temporal aggregation model that aggregates past visual features into condensed vectors and then iteratively predicts future actions with an LSTM network. Recent work thus typically relies on RNNs over compressed representations of past frames. In contrast, the authors propose an end-to-end attention model that uses fine-grained visual features of past frames to predict all future actions in parallel.

2. Self-attention

Self-attention was first introduced in neural machine translation to ease the difficulty RNNs have in learning long-term dependencies, and it has since been widely adopted across computer vision tasks. In the image domain, self-attention effectively learns global interactions between pixels or patches. In the video domain, several methods use attention to model temporal dynamics in both short and long videos. Related to action anticipation, Girdhar and Grauman recently introduced the Anticipative Video Transformer (AVT), which uses a self-attention decoder to predict the next action. Unlike AVT, which requires autoregressive prediction for long-term anticipation, the authors' encoder-decoder model predicts the action sequence of the next few minutes in parallel.

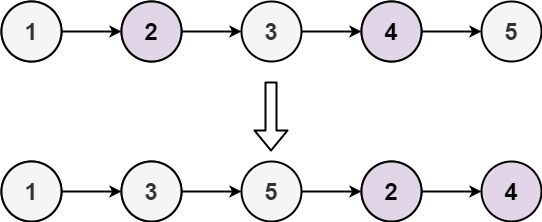

3. Parallel decoding

Transformers were originally designed to predict outputs sequentially, i.e., with autoregressive decoding. Because inference cost grows with the length of the output sequence, recent methods in natural language processing replace autoregressive decoding with parallel decoding. Transformer models with parallel decoding have also been applied to computer vision tasks such as object detection, camera calibration and dense video captioning. The authors apply parallel decoding to long-term action anticipation, predicting a sequence of future actions all at once. In this setting, parallel decoding not only enables faster inference but also captures bidirectional relationships among future actions.
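To make the cost argument concrete, here is a toy sketch (not FUTR code) that counts decoder passes. The functions `autoregressive_decode` and `parallel_decode` and the lambda "decoders" are hypothetical stand-ins: the autoregressive loop needs one pass per output, each conditioned on the earlier outputs, while the parallel version maps a fixed set of queries to all outputs in a single pass.

```python
from typing import Callable, List, Tuple


def autoregressive_decode(step_fn: Callable[[List[int]], int], num_outputs: int) -> Tuple[List[int], int]:
    """One decoder pass per output; each pass sees every previously generated output."""
    outputs: List[int] = []
    passes = 0
    for _ in range(num_outputs):
        outputs.append(step_fn(outputs))  # output t is conditioned on outputs 0..t-1
        passes += 1
    return outputs, passes


def parallel_decode(batch_fn: Callable[[List[int]], List[int]], num_outputs: int) -> Tuple[List[int], int]:
    """A single decoder pass maps a fixed set of queries to all outputs at once."""
    queries = list(range(num_outputs))  # stand-in for M learnable queries
    return batch_fn(queries), 1


# Toy "decoders": both simply predict the slot index, so their outputs agree.
ar_out, ar_passes = autoregressive_decode(lambda prev: len(prev), 8)
par_out, par_passes = parallel_decode(lambda qs: [q for q in qs], 8)
print(ar_out == par_out, ar_passes, par_passes)  # True 8 1
```

The number of sequential passes grows linearly with the output length in the autoregressive case and stays at one in the parallel case; a wrong early output in the loop would also feed into every later step, which is the error-accumulation issue mentioned in the background.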

Problem setting

Long-term action anticipation predicts the action sequence of future video frames from a given observed part of the video. Figure 2 illustrates the problem setting. Given a video with T frames, the first αT frames are observed and the action sequence of the following βT frames is anticipated; α is the observation ratio and β is the prediction ratio.
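A minimal sketch of this split, assuming frame counts are rounded to the nearest integer (the exact rounding convention is not stated in this summary). `split_observation` is a hypothetical helper name.

```python
def split_observation(num_frames: int, alpha: float, beta: float):
    """Split a T-frame video into the observed prefix and the frames to anticipate.

    Returns half-open frame-index ranges: observed = [0, alpha*T) and
    target = [alpha*T, alpha*T + beta*T), clipped to the video length.
    Frame counts are rounded to the nearest integer (an assumption).
    """
    n_obs = round(alpha * num_frames)
    n_pred = round(beta * num_frames)
    observed = range(0, n_obs)
    target = range(n_obs, min(n_obs + n_pred, num_frames))
    return observed, target


# Example: observe 30% of a 1000-frame video and anticipate the next 50%.
observed, target = split_observation(1000, alpha=0.3, beta=0.5)
print(len(observed), target.start, target.stop)  # 300 300 800
```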

Figure 2: The long-term action anticipation problem setting

Method

Future Transformer (FUTR)

In this paper, the authors propose a fully attention-based network, called FUTR, for long-term action anticipation. The overall architecture consists of a transformer encoder and decoder, as shown in Figure 3.

Figure 3: Overall architecture of FUTR

1. Encoder

The encoder takes visual features as input, segments the actions of the past frames, and learns distinctive feature representations through self-attention.

The authors sample the visual features extracted from the input frames with a temporal stride τ, feed the sampled frame features to a linear layer followed by a ReLU activation, and thereby obtain the input tokens E:
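The input embedding can be sketched in NumPy as follows. This is an illustration under assumptions: the feature dimension C, token dimension D, stride value and random weights are hypothetical, and any positional encoding FUTR may add is omitted.

```python
import numpy as np


def embed_frames(features: np.ndarray, tau: int, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Sample every tau-th frame feature, then apply a linear layer followed by ReLU."""
    sampled = features[::tau]                # (ceil(T_obs / tau), C) sampled frame features
    return np.maximum(sampled @ W + b, 0.0)  # (ceil(T_obs / tau), D) input tokens E


rng = np.random.default_rng(0)
T_obs, C, D, tau = 300, 2048, 128, 3  # hypothetical sizes and stride
feats = rng.standard_normal((T_obs, C))  # stand-in for pre-extracted visual features
W = rng.standard_normal((C, D)) / np.sqrt(C)
b = np.zeros(D)
tokens = embed_frames(feats, tau, W, b)
print(tokens.shape)  # (100, 128)
```

A larger stride τ shortens the token sequence (and hence the quadratic attention cost) at the price of temporal resolution.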

Each encoder layer consists of multi-head self-attention (MHSA), layer normalization (LN) and a feed-forward network (FFN), joined by residual connections. Multi-head attention (MHA) is defined on top of scaled dot-product attention:
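Below is a NumPy sketch of scaled dot-product attention, multi-head attention and one encoder layer. The post-LN ordering, the ReLU inside the FFN, the 4x FFN width and the LN without learnable scale and shift are assumptions made for brevity, not details confirmed by this summary; all sizes are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V with q: (Nq, d_k), k: (Nk, d_k), v: (Nk, d_v)."""
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (Nq, Nk), rows sum to 1
    return weights @ v


def multi_head_attention(x_q, x_kv, Wq, Wk, Wv, Wo, num_heads):
    """Project inputs, attend separately in each head, concatenate heads, project back."""
    d_head = Wq.shape[1] // num_heads
    q, k, v = x_q @ Wq, x_kv @ Wk, x_kv @ Wv
    heads = []
    for h in range(num_heads):
        s = slice(h * d_head, (h + 1) * d_head)  # columns belonging to head h
        heads.append(scaled_dot_product_attention(q[:, s], k[:, s], v[:, s]))
    return np.concatenate(heads, axis=-1) @ Wo


def layer_norm(x, eps=1e-5):
    """LN over the feature axis (learnable scale and shift omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def encoder_layer(x, p, num_heads):
    """MHSA and FFN sub-blocks, each wrapped as LN(x + sublayer(x)) (post-LN assumed)."""
    x = layer_norm(x + multi_head_attention(x, x, p["Wq"], p["Wk"], p["Wv"], p["Wo"], num_heads))
    ffn = np.maximum(x @ p["W1"], 0.0) @ p["W2"]  # two linear layers with ReLU in between
    return layer_norm(x + ffn)


rng = np.random.default_rng(0)
D, H, N = 64, 4, 100  # hypothetical: model width, number of heads, number of input tokens
shapes = {"Wq": (D, D), "Wk": (D, D), "Wv": (D, D), "Wo": (D, D), "W1": (D, 4 * D), "W2": (4 * D, D)}
params = {name: rng.standard_normal(shape) / np.sqrt(shape[0]) for name, shape in shapes.items()}
tokens = rng.standard_normal((N, D))
out = encoder_layer(tokens, params, num_heads=H)
print(out.shape)  # (100, 64)
```

Because every token attends to every other token, this is the global self-attention setting; a local variant would restrict each row of the score matrix to a window.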

The output of the last encoder layer is used to produce the action segmentation of the past frames by applying a fully connected (FC) layer followed by softmax:
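A sketch of the segmentation head, assuming K action classes (a hypothetical value below) and one class distribution per encoder token. The weights are random stand-ins.

```python
import numpy as np


def segmentation_head(enc_out, W_seg, b_seg):
    """FC layer followed by softmax: one class distribution per encoder token."""
    logits = enc_out @ W_seg + b_seg                      # (N, K)
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
N, D, K = 100, 64, 10  # hypothetical: tokens, model width, number of action classes
enc_out = rng.standard_normal((N, D))  # stand-in for the last encoder layer's output
probs = segmentation_head(enc_out, rng.standard_normal((D, K)) / np.sqrt(D), np.zeros(K))
past_labels = probs.argmax(axis=-1)    # predicted action label for each sampled past frame
print(probs.shape, past_labels.shape)  # (100, 10) (100,)
```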

2. Decoder

The decoder takes learnable tokens, called action queries, as input and predicts the future action labels and their corresponding durations in parallel, learning long-term relationships between past and future actions through self-attention and cross-attention.

The action queries are M learnable embeddings. The temporal order of the queries is fixed to match that of the future actions, i.e., the i-th query corresponds to the i-th future action.

Each decoder layer consists of MHSA, multi-head cross-attention (MHCA), LN and FFN. The decoder layer outputs the updated queries Q^{l+1}:
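The decoder layer can be sketched in the same style. Note that the self-attention over action queries uses no causal mask, so every query can attend to every other query; this is what lets parallel decoding model bidirectional relations among future actions. Sizes, weights, the post-LN ordering and the FFN form are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def mha(x_q, x_kv, Wq, Wk, Wv, Wo, h):
    """Multi-head scaled dot-product attention; queries from x_q, keys/values from x_kv."""
    nq, nk, d = x_q.shape[0], x_kv.shape[0], Wq.shape[1]
    q = (x_q @ Wq).reshape(nq, h, d // h).transpose(1, 0, 2)   # (h, nq, d/h)
    k = (x_kv @ Wk).reshape(nk, h, d // h).transpose(1, 0, 2)  # (h, nk, d/h)
    v = (x_kv @ Wv).reshape(nk, h, d // h).transpose(1, 0, 2)  # (h, nk, d/h)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d // h))  # (h, nq, nk), no mask
    return (attn @ v).transpose(1, 0, 2).reshape(nq, d) @ Wo


def layer_norm(x, eps=1e-5):
    mu, var = x.mean(axis=-1, keepdims=True), x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def decoder_layer(queries, memory, p, h):
    """MHSA over action queries, MHCA into the encoder output, then FFN (post-LN assumed)."""
    x = layer_norm(queries + mha(queries, queries, *p["self"], h))  # queries attend to each other
    x = layer_norm(x + mha(x, memory, *p["cross"], h))             # queries attend to past frames
    return layer_norm(x + np.maximum(x @ p["W1"], 0.0) @ p["W2"])


rng = np.random.default_rng(0)
D, H, M, N = 64, 4, 8, 100  # hypothetical: width, heads, action queries, encoder tokens


def init(*shape):
    return rng.standard_normal(shape) / np.sqrt(shape[0])


p = {"self": [init(D, D) for _ in range(4)], "cross": [init(D, D) for _ in range(4)],
     "W1": init(D, 4 * D), "W2": init(4 * D, D)}
action_queries = init(M, D)           # M learnable embeddings; query i <-> i-th future action
memory = rng.standard_normal((N, D))  # stand-in for the final encoder output
q_next = decoder_layer(action_queries, memory, p, H)  # Q^{l+1}
print(q_next.shape)  # (8, 64)
```

The cross-attention weights `attn` inside the MHCA call (one row per query, one column per past-frame token) are the kind of map visualized in Figure 4.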

The output of the final decoder layer is used to generate the future actions: an FC layer followed by softmax gives the action classes, and a duration vector gives their durations:
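A sketch of the two output heads. The class head follows the text (FC plus softmax); the duration head is assumed here to be a linear projection to one scalar per query. `to_frame_labels` is a hypothetical helper that spreads the predicted actions over the βT-frame horizon in proportion to their durations, one plausible way to turn segment-level predictions into per-frame labels.

```python
import numpy as np


def anticipation_heads(dec_out, W_cls, b_cls, w_dur, b_dur):
    """Class head (FC + softmax, per the text) and duration head (linear scalar per query, assumed)."""
    logits = dec_out @ W_cls + b_cls                      # (M, K)
    logits = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(logits)
    probs = e / e.sum(axis=-1, keepdims=True)             # (M, K) future-action class distributions
    durations = dec_out @ w_dur + b_dur                   # (M,) one duration value per query
    return probs, durations


def to_frame_labels(labels, durations, horizon):
    """Hypothetical helper: spread M predicted actions over `horizon` frames in proportion to durations."""
    d = np.clip(np.asarray(durations, dtype=float), 0.0, None)
    d = d / d.sum() if d.sum() > 0 else np.full(len(d), 1.0 / len(d))
    frames = np.repeat(labels, np.round(d * horizon).astype(int))
    if len(frames) < horizon:  # rounding left a gap: extend the last action
        frames = np.concatenate([frames, np.full(horizon - len(frames), labels[-1])])
    return frames[:horizon]  # rounding overshoot: truncate


rng = np.random.default_rng(0)
M, D, K = 8, 64, 10  # hypothetical sizes
dec_out = rng.standard_normal((M, D))  # stand-in for the final decoder output
probs, durations = anticipation_heads(dec_out, rng.standard_normal((D, K)), np.zeros(K),
                                      rng.standard_normal(D), 0.0)
print(probs.shape, durations.shape)  # (8, 10) (8,)
print(to_frame_labels(np.array([3, 1, 4]), np.array([1.0, 1.0, 2.0]), horizon=8))
# [3 3 1 1 4 4 4 4]
```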

3. Training objectives

The authors apply an action segmentation loss to the encoder as an auxiliary loss, so that it learns feature representations of past actions. The action segmentation loss L_seg is defined as:

The action anticipation loss is defined as:

The duration regression loss is defined as:

The final loss is:
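Since the loss equations are not reproduced in this summary, the following is only a sketch under stated assumptions: L_seg and the action anticipation loss are taken to be standard cross-entropy, the duration loss is taken to be mean squared error, and the final loss is assumed to be their unweighted sum. All function names are hypothetical.

```python
import numpy as np


def cross_entropy(probs, targets, eps=1e-12):
    """Mean negative log-probability of the integer targets (one row of probs per target)."""
    picked = probs[np.arange(len(targets)), targets]
    return float(-np.mean(np.log(picked + eps)))


def duration_loss(pred, target):
    """Mean squared error between predicted and ground-truth durations (L2 form assumed)."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))


def total_loss(seg_probs, seg_targets, act_probs, act_targets, dur_pred, dur_target):
    """L_seg (encoder) + anticipation loss (decoder classes) + duration loss, unweighted (assumed)."""
    l_seg = cross_entropy(seg_probs, seg_targets)
    l_act = cross_entropy(act_probs, act_targets)
    l_dur = duration_loss(dur_pred, dur_target)
    return l_seg + l_act + l_dur


# Uniform predictions over 4 classes and exact durations: each cross-entropy equals log(4).
seg_probs, act_probs = np.full((5, 4), 0.25), np.full((3, 4), 0.25)
loss = total_loss(seg_probs, np.array([0, 1, 2, 3, 0]), act_probs, np.array([2, 2, 1]),
                  np.array([0.2, 0.3, 0.5]), np.array([0.2, 0.3, 0.5]))
print(round(loss, 4))  # 2.7726
```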

Experiments

Table 1: Comparison with the state of the art

Table 2: Parallel decoding vs. autoregressive decoding

Table 3: Global self-attention vs. local self-attention

Table 5: Loss ablation

Figure 4: Visualization of cross-attention maps on Breakfast

Figure 5: Qualitative results on Breakfast

Conclusion

The authors introduce FUTR, an end-to-end attention neural network that exploits the global relationships between past and future actions for long-term action anticipation. The method takes fine-grained visual features as input and predicts future actions with parallel decoding, achieving accurate and fast inference. Extensive experiments on two benchmarks demonstrate the advantages of the method, which reaches state-of-the-art performance.
