Analysis of PANet, the YOLOX enhanced feature extraction network

2022-07-02 23:22:00 【Said the shepherdess】

The previous article covered YOLOX's CSPDarknet backbone network; see "YOLOX backbone: the implementation of CSPDarknet".

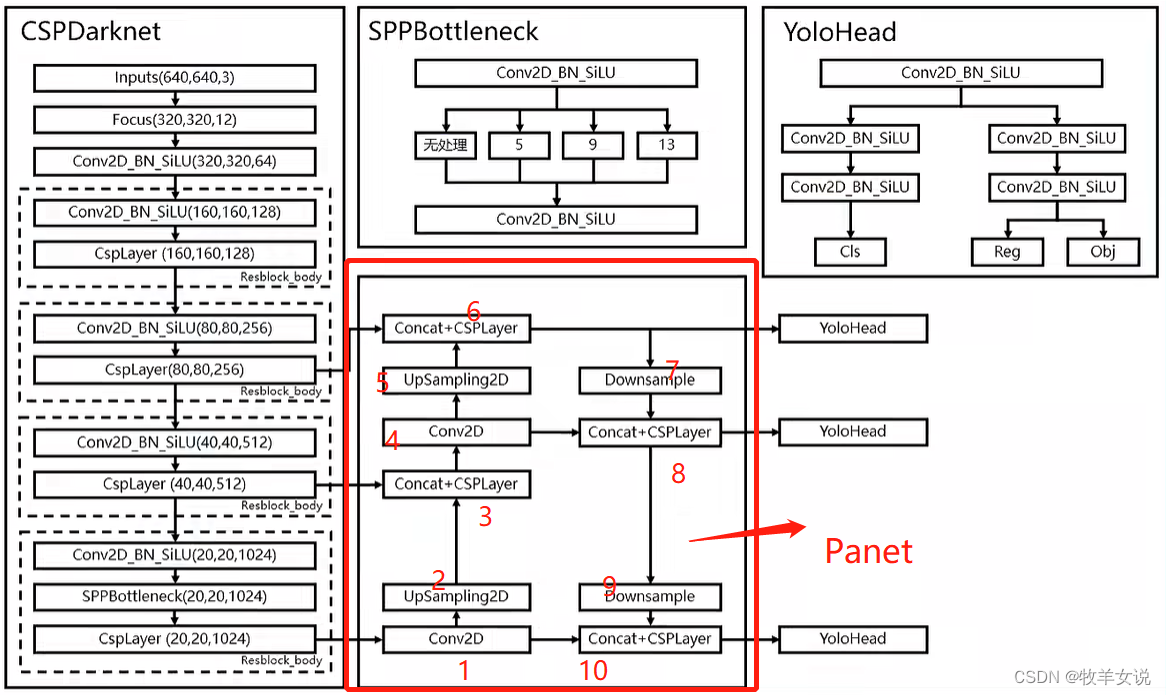

CSPDarknet produces outputs at three levels: dark5 (20x20x1024), dark4 (40x40x512), and dark3 (80x80x256). These three outputs are fed into PANet, the enhanced feature extraction network, for further feature extraction (the part marked by the red box in the figure).
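As a quick check on those shapes, the sketch below derives them from the input size. It assumes a 640x640 input and strides of 8, 16, and 32 for dark3, dark4, and dark5; both are inferred from the shapes quoted above rather than stated in the article, and the helper name `backbone_output_shapes` is hypothetical.

```python
# Assumption: 640x640 input; strides are inferred from the quoted shapes.
INPUT_SIZE = 640

# level name -> (stride, channels at width=1.0)
LEVELS = {"dark3": (8, 256), "dark4": (16, 512), "dark5": (32, 1024)}


def backbone_output_shapes(input_size=INPUT_SIZE):
    """Return {level: (height, width, channels)} for a square input."""
    return {
        name: (input_size // stride, input_size // stride, channels)
        for name, (stride, channels) in LEVELS.items()
    }


shapes = backbone_output_shapes()
for name, shape in shapes.items():
    print(name, shape)
```

Changing `input_size` to another multiple of 32 scales all three spatial sizes together, while the channel counts stay fixed.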

The basic idea of PANet is to upsample the deep features and fuse them with the shallow features (steps 1 to 6 in the figure), then downsample the fused shallow features and fuse them with the deep features again (steps 6 to 10 in the figure).
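To make the two passes concrete, here is a minimal sketch that tracks only tensor shapes through the ten steps; it does not run any real convolutions. The helper names (`conv`, `upsample2x`, `downsample2x`, `concat`, `pafpn_shapes`) are hypothetical and not part of YOLOX, and the shapes assume a 640x640 input at full width.

```python
# Shapes are (height, width, channels). Only shape bookkeeping is modelled.

def conv(shape, out_channels):
    """1x1 conv or CSP layer: spatial size unchanged, channels replaced."""
    h, w, _ = shape
    return (h, w, out_channels)


def upsample2x(shape):
    """Nearest-neighbour upsampling by a factor of 2."""
    h, w, c = shape
    return (h * 2, w * 2, c)


def downsample2x(shape):
    """3x3 conv with stride 2 and padding 1: channels unchanged."""
    h, w, c = shape
    return ((h - 1) // 2 + 1, (w - 1) // 2 + 1, c)


def concat(a, b):
    """Channel-wise concatenation; spatial sizes must match."""
    assert a[:2] == b[:2], "spatial sizes differ"
    return (a[0], a[1], a[2] + b[2])


def pafpn_shapes(dark3, dark4, dark5):
    c3, c4, c5 = dark3[2], dark4[2], dark5[2]
    # Top-down pass: deep features are upsampled and fused with shallow ones.
    fpn_out0 = conv(dark5, c4)                                      # step 1
    f_out0 = upsample2x(fpn_out0)                                   # step 2
    f_out0 = conv(concat(f_out0, dark4), c4)                        # step 3
    fpn_out1 = conv(f_out0, c3)                                     # step 4
    f_out1 = upsample2x(fpn_out1)                                   # step 5
    pan_out2 = conv(concat(f_out1, dark3), c3)                      # step 6
    # Bottom-up pass: fused shallow features are downsampled and fused again.
    p_out1 = downsample2x(pan_out2)                                 # step 7
    pan_out1 = conv(concat(p_out1, fpn_out1), c4)                   # step 8
    p_out0 = downsample2x(pan_out1)                                 # step 9
    pan_out0 = conv(concat(p_out0, fpn_out0), c5)                   # step 10
    return pan_out2, pan_out1, pan_out0


outputs = pafpn_shapes((80, 80, 256), (40, 40, 512), (20, 20, 1024))
print(outputs)
```

The three outputs have the same shapes as the three backbone inputs, so the network enriches each level with information from the others without changing its resolution or channel count.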

In the official YOLOX code, PANet is implemented in the file yolo_pafpn.py. The official code is shown below, with comments that match the step numbers in the figure:

```python
import torch
import torch.nn as nn

# Package-relative imports, as used inside the yolox/models package.
from .darknet import CSPDarknet
from .network_blocks import BaseConv, CSPLayer, DWConv


# Shape comments below assume a 640x640 input and width=1.0.
class YOLOPAFPN(nn.Module):
    """
    YOLOv3 model. Darknet 53 is the default backbone of this model.
    """

    def __init__(
        self,
        depth=1.0,
        width=1.0,
        in_features=("dark3", "dark4", "dark5"),
        in_channels=[256, 512, 1024],
        depthwise=False,
        act="silu",
    ):
        super().__init__()
        self.backbone = CSPDarknet(depth, width, depthwise=depthwise, act=act)
        self.in_features = in_features
        self.in_channels = in_channels
        Conv = DWConv if depthwise else BaseConv

        self.upsample = nn.Upsample(scale_factor=2, mode="nearest")

        # 20x20x1024 -> 20x20x512
        self.lateral_conv0 = BaseConv(
            int(in_channels[2] * width), int(in_channels[1] * width), 1, 1, act=act
        )
        # 40x40x1024 -> 40x40x512
        self.C3_p4 = CSPLayer(
            int(2 * in_channels[1] * width),
            int(in_channels[1] * width),
            round(3 * depth),
            False,
            depthwise=depthwise,
            act=act,
        )  # cat

        # 40x40x512 -> 40x40x256
        self.reduce_conv1 = BaseConv(
            int(in_channels[1] * width), int(in_channels[0] * width), 1, 1, act=act
        )
        # 80x80x512 -> 80x80x256
        self.C3_p3 = CSPLayer(
            int(2 * in_channels[0] * width),  # 2*256
            int(in_channels[0] * width),  # 256
            round(3 * depth),
            False,
            depthwise=depthwise,
            act=act,
        )

        # bottom-up conv
        # 80x80x256 -> 40x40x256
        self.bu_conv2 = Conv(
            int(in_channels[0] * width), int(in_channels[0] * width), 3, 2, act=act
        )
        # 40x40x512 -> 40x40x512
        self.C3_n3 = CSPLayer(
            int(2 * in_channels[0] * width),  # 2*256
            int(in_channels[1] * width),  # 512
            round(3 * depth),
            False,
            depthwise=depthwise,
            act=act,
        )

        # bottom-up conv
        # 40x40x512 -> 20x20x512
        self.bu_conv1 = Conv(
            int(in_channels[1] * width), int(in_channels[1] * width), 3, 2, act=act
        )
        # 20x20x1024 -> 20x20x1024
        self.C3_n4 = CSPLayer(
            int(2 * in_channels[1] * width),  # 2*512
            int(in_channels[2] * width),  # 1024
            round(3 * depth),
            False,
            depthwise=depthwise,
            act=act,
        )

    def forward(self, input):
        """
        Args:
            inputs: input images.

        Returns:
            Tuple[Tensor]: FPN feature.
        """

        # backbone
        out_features = self.backbone(input)
        features = [out_features[f] for f in self.in_features]
        [x2, x1, x0] = features  # dark3, dark4, dark5

        # Step 1: 1x1 convolution on the deepest feature map
        # 20x20x1024 -> 20x20x512
        fpn_out0 = self.lateral_conv0(x0)  # 1024->512/32

        # Step 2: upsample the output of step 1
        # 20x20x512 -> 40x40x512
        f_out0 = self.upsample(fpn_out0)  # 512/16

        # Step 3: concat + CSPLayer
        # 40x40x512 + 40x40x512 -> 40x40x1024
        f_out0 = torch.cat([f_out0, x1], 1)  # 512->1024/16
        # 40x40x1024 -> 40x40x512
        f_out0 = self.C3_p4(f_out0)  # 1024->512/16

        # Step 4: 1x1 convolution on the output of step 3
        # 40x40x512 -> 40x40x256
        fpn_out1 = self.reduce_conv1(f_out0)  # 512->256/16

        # Step 5: upsample again
        # 40x40x256 -> 80x80x256
        f_out1 = self.upsample(fpn_out1)  # 256/8

        # Step 6: concat + CSPLayer, output to the YOLO head
        # 80x80x256 + 80x80x256 -> 80x80x512
        f_out1 = torch.cat([f_out1, x2], 1)  # 256->512/8
        # 80x80x512 -> 80x80x256
        pan_out2 = self.C3_p3(f_out1)  # 512->256/8

        # Step 7: downsample
        # 80x80x256 -> 40x40x256
        p_out1 = self.bu_conv2(pan_out2)  # 256->256/16

        # Step 8: concat + CSPLayer, output to the YOLO head
        # 40x40x256 + 40x40x256 -> 40x40x512
        p_out1 = torch.cat([p_out1, fpn_out1], 1)  # 256->512/16
        # 40x40x512 -> 40x40x512
        pan_out1 = self.C3_n3(p_out1)  # 512->512/16

        # Step 9: downsample again
        # 40x40x512 -> 20x20x512
        p_out0 = self.bu_conv1(pan_out1)  # 512->512/32

        # Step 10: concat + CSPLayer, output to the YOLO head
        # 20x20x512 + 20x20x512 -> 20x20x1024
        p_out0 = torch.cat([p_out0, fpn_out0], 1)  # 512->1024/32
        # 20x20x1024 -> 20x20x1024
        pan_out0 = self.C3_n4(p_out0)  # 1024->1024/32

        outputs = (pan_out2, pan_out1, pan_out0)
        return outputs
```

Reference: "Pytorch: Build your own YoloX object detection platform" (Bubbliiiing deep learning course), bilibili
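The shape comments in the code hold for `depth=1.0` and `width=1.0`. As a rough illustration of how the constructor arguments scale the network, the sketch below applies the same `int(c * width)` and `round(3 * depth)` expressions used in `__init__`. The (depth, width) pairs for the named variants are assumptions based on commonly cited YOLOX configurations and should be checked against the experiment files you actually use; `scaled_config` is a hypothetical helper, not a YOLOX function.

```python
IN_CHANNELS = [256, 512, 1024]

# Assumed (depth, width) pairs; verify against your own YOLOX exp files.
VARIANTS = {
    "yolox-s": (0.33, 0.50),
    "yolox-m": (0.67, 0.75),
    "yolox-l": (1.00, 1.00),
    "yolox-x": (1.33, 1.25),
}


def scaled_config(depth, width, in_channels=IN_CHANNELS):
    """Mirror the scaling expressions used in YOLOPAFPN.__init__."""
    channels = [int(c * width) for c in in_channels]
    n_bottlenecks = round(3 * depth)  # bottleneck count passed to each CSPLayer
    return channels, n_bottlenecks


for name, (depth, width) in VARIANTS.items():
    channels, n = scaled_config(depth, width)
    print(f"{name}: channels={channels}, bottlenecks per CSPLayer={n}")
```

Under these assumed settings, the smallest variant halves every channel count and uses one bottleneck per CSPLayer, while the spatial sizes (80x80, 40x40, 20x20) are unaffected because they depend only on the input size and strides.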

边栏推荐

- 基于Pyqt5工具栏按钮可实现界面切换-2

- Antd component upload uploads xlsx files and reads the contents of the files

- 基于Pyqt5工具栏按钮可实现界面切换-1

- [favorite poems] OK, song

- 采用VNC Viewer方式远程连接树莓派

- Solution to boost library link error

- 抖音实战~点赞数量弹框

- Explain promise usage in detail

- Go project operation method

- 4 special cases! Schools in area a adopt the re examination score line in area B!

猜你喜欢

設置單擊右鍵可以選擇用VS Code打開文件

理想汽车×OceanBase:当造车新势力遇上数据库新势力

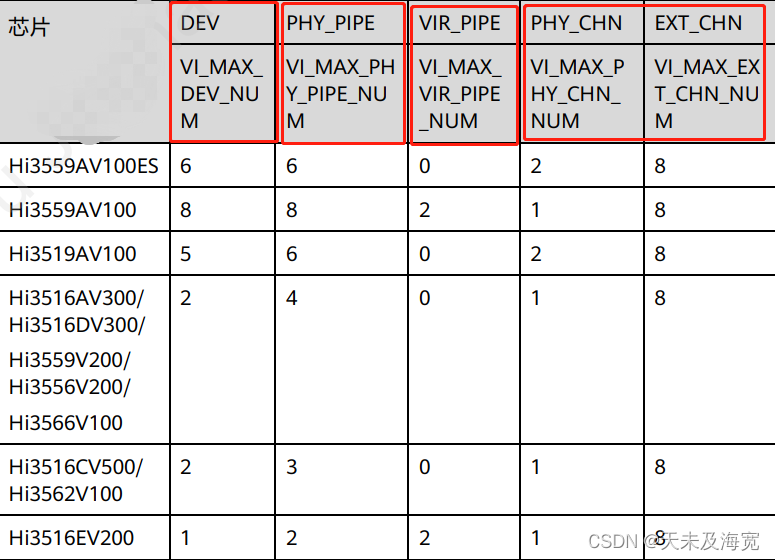

海思 VI接入视频流程

为什么RTOS系统要使用MPU?

Is 408 not fragrant? The number of universities taking the 408 examination this year has basically not increased!

“一个优秀程序员可抵五个普通程序员!”

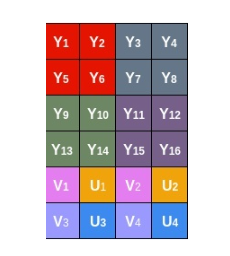

Construction of Hisilicon 3559 universal platform: rotation operation on the captured YUV image

Chow-Liu Tree

Go basic anonymous variable

![[npuctf2020]ezlogin XPath injection](/img/6e/dac4dfa0970829775084bada740542.png)

[npuctf2020]ezlogin XPath injection

随机推荐

数字图像处理实验目录

Introduction to the latest plan of horizon in April 2022

YOLOX加强特征提取网络Panet分析

Eight honors and eight disgraces of the programmer version~

Realize the linkage between bottomnavigationview and navigation

【STL源码剖析】仿函数(待补充)

BBR encounters cubic

Boost库链接错误解决方案

Arduino - character judgment function

What if win11 can't turn off the sticky key? The sticky key is cancelled but it doesn't work. How to solve it

ADC of stm32

Loss function~

实现BottomNavigationView和Navigation联动

golang入门:for...range修改切片中元素的值的另类方法

Looking at Ctrip's toughness and vision from the Q1 financial report in 2022

Solution: exceptiole 'xxxxx QRTZ_ Locks' doesn't exist and MySQL's my CNF file append lower_ case_ table_ Error message after names startup

Why does RTOS system use MPU?

ping域名报错unknown host,nslookup/systemd-resolve可以正常解析,ping公网地址通怎么解决?

@BindsInstance在Dagger2中怎么使用

20220527_ Database process_ Statement retention