当前位置:网站首页>Spark 3.0 测试与使用

Spark 3.0 测试与使用

2022-07-27 14:23:00 【wankunde】

Compatibility with hadoop and hive

Spark 3.0 官方默认支持的Hadoop最低版本为2.7, Hive最低版本为 1.2。我们平台使用的CDH 5.13,对应的版本分别为hadoop-2.6.0, hive-1.1.0。所以尝试自己去编译Spark 3.0 来使用。

编译环境: Maven 3.6.3, Java 8, Scala 2.12

Hive版本预先编译

因为Hive 1.1.0 实在是太久远了,很多依赖包和Spark3.0中不兼容,需要需要重新编译。

commons-lang3 包版本太旧了,缺少JAVA_9以上的支持

hive exec 模块编译: mvn clean package install -DskipTests -pl ql -am -Phadoop-2

代码改动:

diff --git a/pom.xml b/pom.xml

index 5d14dc4..889b960 100644

--- a/pom.xml

+++ b/pom.xml

@@ -248,6 +248,14 @@

<enabled>false</enabled>

</snapshots>

</repository>

+ <repository>

+ <id>spring</id>

+ <name>Spring repo</name>

+ <url>https://repo.spring.io/plugins-release/</url>

+ <releases>

+ <enabled>true</enabled>

+ </releases>

+ </repository>

</repositories>

<!-- Hadoop dependency management is done at the bottom under profiles -->

@@ -982,6 +990,9 @@

<profiles>

<profile>

<id>thriftif</id>

+ <properties>

+ <thrift.home>/usr/local/opt/[email protected]</thrift.home>

+ </properties>

<build>

<plugins>

<plugin>

diff --git a/ql/pom.xml b/ql/pom.xml

index 0c5e91f..101ef11 100644

--- a/ql/pom.xml

+++ b/ql/pom.xml

@@ -736,10 +736,7 @@

<include>org.apache.hive:hive-exec</include>

<include>org.apache.hive:hive-serde</include>

<include>com.esotericsoftware.kryo:kryo</include>

- <include>com.twitter:parquet-hadoop-bundle</include>

- <include>org.apache.thrift:libthrift</include>

<include>commons-lang:commons-lang</include>

- <include>org.apache.commons:commons-lang3</include>

<include>org.jodd:jodd-core</include>

<include>org.json:json</include>

<include>org.apache.avro:avro</include>

Spark 编译

# Apache

git clone [email protected]:apache/spark.git

git checkout v3.0.0

# Leyan Version 主要设计spark hive的兼容性改造

git clone [email protected]:HDP/spark.git

git checkout -b v3.0.0_cloudera origin/v3.0.0_cloudera

./dev/make-distribution.sh --name cloudera --tgz -DskipTests -Phive -Phive-thriftserver -Pyarn -Pcdhhive

--本地仓库更新

mvn clean install -DskipTests=true -Phive -Phive-thriftserver -Pyarn -Pcdhhive

# deploy

rm -rf /opt/spark-3.0.0-bin-cloudera

tar -zxvf spark-3.0.0-bin-cloudera.tgz

rm -rf /opt/spark-3.0.0-bin-cloudera/conf

ln -s /etc/spark3/conf /opt/spark-3.0.0-bin-cloudera/conf

cd /opt/spark/jars

zip spark-3.0.0-jars.zip ./*

HADOOP_USER_NAME=hdfs hdfs dfs -put -f spark-3.0.0-jars.zip hdfs:///deploy/config/spark-3.0.0-jars.zip

rm spark-3.0.0-jars.zip

# add config : spark.yarn.archive=hdfs:///deploy/config/spark-3.0.0-jars.zip

Configuration

# 开启AE模式

spark.sql.adaptive.enabled=true

# spark生成parquet文件使用legacy模式,否则生成的文件无法被Hive或其他组件读取

spark.sql.parquet.writeLegacyFormat=true

# 兼容使用Spark2的 external shuffle service

spark.shuffle.useOldFetchProtocol=true

spark.sql.storeAssignmentPolicy=LEGACY

# 现在默认datasource v2不支持数据源表和目标表是同一个表,通过下面参数跳过校验

#spark.sql.hive.convertInsertingPartitionedTable=false

spark.sql.sources.partitionOverwriteVerifyPath=false

Tips

- 在使用Maven编译的时候,以前版本支持多CPU并发编译,现在不可以了,否则编译的时候会导致死锁

- 在使用maven命令进行编译的使用不能同时指定

package和install,否则编译时会有冲突 - 模版编译命令

mvn clean install -DskipTests -Phive -Phive-thriftserver -Pyarn -DskipTests -Pcdhhive,可以自定义编译模块和编译target - 想要使用Spark3.0,还是需要进行魔改的。

yarn模块要稍微改动。mvn clean install -DskipTests=true -pl resource-managers/yarn -am -Phive -Phive-thriftserver -Pyarn -Pcdhhive - 所有Spark3.0 包在本地全部安装完毕后,可以继续编译above-board项目

- 删除Spark3.0 中对高版本hive的支持

- 当切换到CDH的hive版本时发现,该hive版本shade的commons jar太旧了,进行重新打包

TroubleShooting

需要更新本地Hive-exec包的依赖,打包的时候减少shade thrift包的代码

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 1.0 failed 4 times, most recent failure: Lost task 0.3 in stage 1.0 (TID 5, prd-zboffline-044.prd.leyantech.com, executor 1): java.lang.NoSuchMethodError: shaded.parquet.org.apache.thrift.EncodingUtils.setBit(BIZ)B

at org.apache.parquet.format.FileMetaData.setVersionIsSet(FileMetaData.java:349)

at org.apache.parquet.format.FileMetaData.setVersion(FileMetaData.java:335)

at org.apache.parquet.format.Util$DefaultFileMetaDataConsumer.setVersion(Util.java:122)

at org.apache.parquet.format.Util$5.consume(Util.java:161)

at org.apache.parquet.format.event.TypedConsumer$I32Consumer.read(TypedConsumer.java:78)

边栏推荐

- Spark Bucket Table Join

- 反射

- cap理论和base理论

- Reading notes of lifelong growth (I)

- Network equipment hard core technology insider router Chapter 4 Jia Baoyu sleepwalking in Taixu Fantasy (Part 2)

- 魔塔项目中的问题解决

- Network equipment hard core technology insider router Chapter 13 from deer by device to router (Part 1)

- Inside router of network equipment hard core technology (10) disassembly of Cisco asr9900 (4)

- STM32F10x_硬件I2C读写EEPROM(标准外设库版本)

- Watermelon book machine learning reading notes Chapter 1 Introduction

猜你喜欢

JUC(JMM、Volatile)

Unity mouse controls the first person camera perspective

reflex

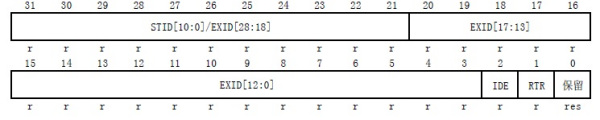

STM32 can communication filter setting problem

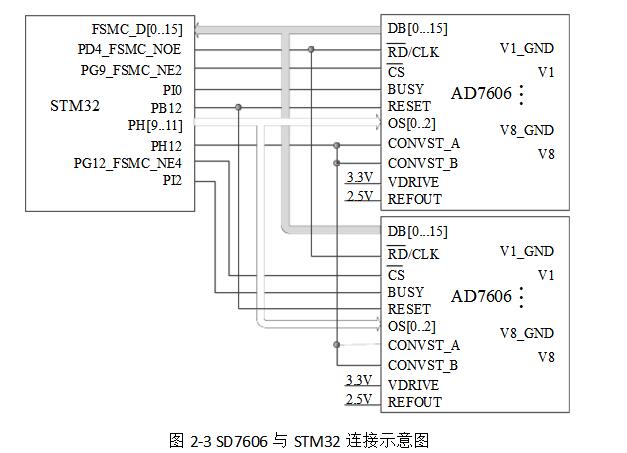

Introduction of the connecting circuit between ad7606 and stm32

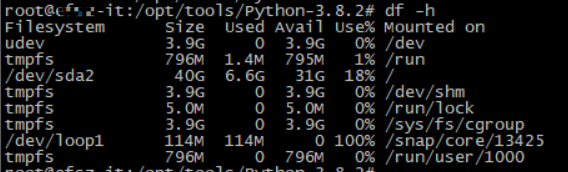

/dev/loop1占用100%问题

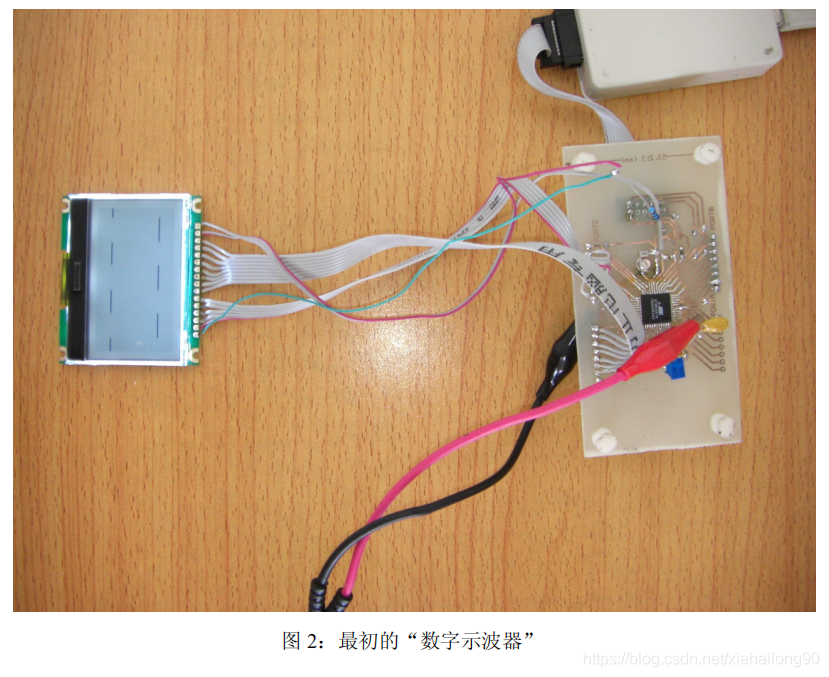

Design scheme of digital oscilloscope based on stm32

泛型

DIY制作示波器的超详细教程:(一)我不是为了做一个示波器

ADB command (install APK package format: ADB install APK address package name on the computer)

随机推荐

Basic usage of kotlin

Multi table query_ Exercise 1 & Exercise 2 & Exercise 3

Data warehouse project is never a technical project

Lua study notes

基于FIFO IDT7202-12的数字存储示波器

Notice of Shenzhen Municipal Bureau of human resources and social security on the issuance of employment related subsidies for people out of poverty

How to package AssetBundle

Watermelon book machine learning reading notes Chapter 1 Introduction

npm install错误 unable to access

HJ8 合并表记录

Network equipment hard core technology insider router Chapter 21 reconfigurable router

Network equipment hard core technology insider router Chapter 16 dpdk and its prequel (I)

Adaptation verification new occupation is coming! Huayun data participated in the preparation of the national vocational skill standard for information system adaptation verifiers

Singles cup, web:web check in

Unity performance optimization ----- drawcall

华云数据打造完善的信创人才培养体系 助力信创产业高质量发展

After configuring corswebfilter in grain mall, an error is reported: resource sharing error:multiplealloworiginvalues

STM32 can -- can ID filter analysis

DIY制作示波器的超详细教程:(一)我不是为了做一个示波器

Two stage submission and three stage submission