Research on Multi-Model Architectures for ADS Compute Chips

2022-07-01 16:00:00 【Advanced engineering intelligent vehicle】

Author: Nathan J, chief architect of the Furui Microelectronics UK R&D Center, based in Cambridge, England. He previously spent more than ten years at ARM headquarters working on high-performance CPU architecture and AI architecture research.

Over the past decade, deep neural networks (DNNs) have been widely adopted in areas such as mobile phones, AR/VR, IoT, and autonomous driving. Complex use cases have led to applications that combine many DNN models. A VR application, for example, contains many subtasks: avoiding collisions with nearby obstacles through object detection, predicting input through hand or gesture tracking, and performing foveated rendering through eye tracking. Each of these subtasks can be handled by a different DNN model. Autonomous vehicles likewise use a series of DNN algorithms to implement perception, with each DNN handling a specific task. However, the network layers and operators of different DNN models vary widely, and even a single DNN model may use heterogeneous operators and layer types.

In addition, mainstream deep learning frameworks such as Torch, TensorFlow, and Caffe still handle inference tasks sequentially, with one process per model. As a result, current NPU architectures still focus on accelerating and optimizing a single DNN task, which falls far short of the performance requirements of multi-DNN applications. New NPU computing architectures are urgently needed at the hardware level to accelerate and optimize multi-model workloads. Reconfigurable NPUs can adapt to the diversity of neural network layers, but they need additional hardware resources to do so (for example switching units, interconnect, and control modules), and reconfiguring for each network layer also costs extra power.

Developing an NPU that supports multi-model workloads faces many challenges: the diversity of DNN workloads increases the complexity of NPU design; dependencies between multiple DNNs make scheduling among them difficult; and balancing reconfigurability against customization becomes harder. This kind of NPU design also introduces additional performance criteria: the latency caused by data sharing between multiple DNN models, and how effectively resources are allocated among them.

Current design research can be roughly divided into the following directions: parallel execution across multiple DNN models, redesigning the NPU architecture to effectively support the diversity of DNN models, and optimizing scheduling strategies.

DNN parallelism and scheduling strategies:

Parallelism can be achieved with strategies such as time-division multiplexing and spatial co-location. Scheduling algorithms can be roughly classified along three axes: static versus dynamic scheduling, temporal versus spatial scheduling, and software-based versus hardware-based scheduling.

Time-division multiplexing is an upgraded version of the traditional priority-preemption strategy. It allows inter-DNN pipelining in order to improve the utilization of system resources (PEs, memory, etc.). This strategy focuses on optimizing the scheduling algorithm, and its advantage is that it requires few changes to the NPU hardware.
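As a rough illustration of time-division multiplexing, the sketch below shares one NPU among several DNNs and lets a higher-priority DNN take over at layer boundaries. It is a minimal sketch under stated assumptions, not a scheduler from the cited work: the `DnnJob` type, the integer per-layer costs, and the rule that a smaller priority value is more urgent are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DnnJob:
    name: str
    priority: int            # assumption: smaller value = more urgent
    arrival: int             # time at which the job becomes ready
    layer_costs: list[int]   # hypothetical per-layer latency, in time units
    next_layer: int = 0

    def done(self) -> bool:
        return self.next_layer >= len(self.layer_costs)


def time_multiplex(jobs: list[DnnJob]) -> list[tuple[int, int, str, int]]:
    """Run jobs on one shared NPU, switching only at layer boundaries.

    Returns a timeline of (start, end, job_name, layer_index) tuples.
    """
    timeline = []
    now = 0
    while any(not j.done() for j in jobs):
        ready = [j for j in jobs if not j.done() and j.arrival <= now]
        if not ready:
            # Nothing is runnable: idle until the next job arrives.
            now = min(j.arrival for j in jobs if not j.done())
            continue
        # Pick the most urgent ready job; ties go to the earlier arrival.
        job = min(ready, key=lambda j: (j.priority, j.arrival))
        cost = job.layer_costs[job.next_layer]
        timeline.append((now, now + cost, job.name, job.next_layer))
        now += cost
        job.next_layer += 1
    return timeline
```

With a low-priority "tracker" that starts first and a high-priority "detector" that arrives mid-run, the tracker is paused at its next layer boundary and resumes once the detector finishes. Only the scheduling policy changes here, which matches the point that this approach needs few hardware changes.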

Spatial co-location focuses on executing multiple DNN models in parallel, meaning that different DNN models can occupy different parts of the NPU's hardware resources. It requires that the characteristics and priorities of each DNN be anticipated at the NPU design stage, so that predefined portions of the NPU hardware units can be assigned to specific DNNs. The allocation can be made dynamically while the DNNs are running, or statically. Static allocation depends on the hardware scheduler and needs less software intervention. The advantage of spatial co-location is that it can deliver a larger improvement in system performance, but it requires relatively large hardware changes.
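A static spatial partition can be sketched as splitting a PE array among DNNs in proportion to their estimated compute demand. This is only an illustration under assumptions: the function name `partition_pes`, the demand figures, and the proportional rule with a one-PE minimum per DNN are hypothetical and not taken from any specific NPU design.

```python
def partition_pes(total_pes: int, demand: dict[str, float]) -> dict[str, int]:
    """Split a PE array among DNNs in proportion to their estimated demand.

    Assumes every demand value is positive.
    """
    if total_pes < len(demand):
        raise ValueError("need at least one PE per DNN")
    total_demand = sum(demand.values())
    # Reserve one PE per DNN, then share the remainder proportionally.
    spare = total_pes - len(demand)
    exact = {name: spare * d / total_demand for name, d in demand.items()}
    shares = {name: 1 + int(x) for name, x in exact.items()}
    # Hand out the PEs lost to rounding, largest fractional part first.
    leftover = total_pes - sum(shares.values())
    by_fraction = sorted(exact, key=lambda n: exact[n] - int(exact[n]), reverse=True)
    for name in by_fraction[:leftover]:
        shares[name] += 1
    return shares
```

For example, `partition_pes(16, {"detection": 6.0, "segmentation": 3.0, "tracking": 1.0})` gives each DNN its own slice of a 16-PE array, and the slices always sum to the full array. In a static scheme this split would be fixed ahead of time; a dynamic scheme would recompute it while the DNNs run.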

Dynamic and static scheduling: the choice between dynamic and static scheduling depends on the specific goals of the use case.

Dynamic scheduling is more flexible: it reallocates resources according to the actual needs of the DNN tasks. It mainly relies on time-division multiplexing, or on dynamically composable engines (which require adding a dynamic scheduler to the hardware). Most algorithms use a preemptive strategy or the early-eviction algorithm of AI-MT.
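The idea of reallocating resources at run time can be sketched with a small bookkeeping class that grants PEs from a free pool and takes them back when a DNN finishes. This is a hypothetical software model for illustration only; `PePool` and its methods are assumptions, and it implements neither AI-MT's early eviction nor any particular composable engine.

```python
class PePool:
    """Tracks how many processing elements (PEs) each DNN currently holds."""

    def __init__(self, total_pes: int) -> None:
        self.free = total_pes
        self.owned: dict[str, int] = {}

    def acquire(self, dnn: str, wanted: int) -> int:
        """Grant up to `wanted` PEs from the free pool; may grant fewer."""
        granted = min(wanted, self.free)
        self.free -= granted
        self.owned[dnn] = self.owned.get(dnn, 0) + granted
        return granted

    def release(self, dnn: str) -> None:
        """Return every PE held by `dnn` to the free pool."""
        self.free += self.owned.pop(dnn, 0)
```

A DNN that asks for more PEs than are free gets a partial grant, and can ask again once another DNN releases its share. A static scheme would instead fix the split before execution.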

A customized static scheduling strategy can deliver better NPU performance. Under this strategy, specific hardware modules are customized at the NPU design stage to handle specific neural network layers or specific operations. It offers high performance, but requires relatively large hardware changes.

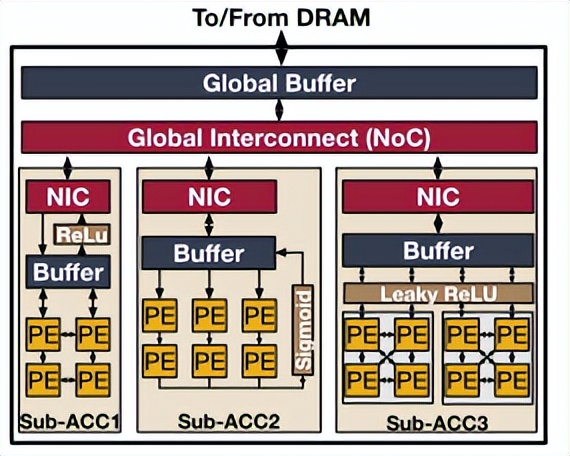

Heterogeneous NPU architecture:

A heterogeneous NPU combines dynamic reconfiguration with the customized static scheduling strategy by integrating multiple sub-accelerators, each targeting a specific type of neural network layer or a specific network operation. The scheduler can then run the layers of multiple DNN models on the sub-accelerators that suit them, and can also schedule layers from different DNN models to run concurrently on several sub-accelerators. This avoids the extra hardware resource cost of a reconfigurable architecture while improving flexibility in handling different network layers.

Research and design of a heterogeneous NPU architecture can be approached from three main aspects:

1) How to design multiple kinds of sub-accelerators according to the characteristics of different network layers;

2) How to distribute resources among the different sub-accelerators;

3) How to schedule a given network layer onto a suitable sub-accelerator while satisfying memory limits.
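The third question can be illustrated with a simple greedy scheduler: each layer goes to the sub-accelerator that has enough memory and would finish it earliest, while layers of the same DNN stay in order. This is a minimal sketch under assumptions, not the scheduling method of the referenced papers; the `Layer` and `SubAccel` types, the latency tables, and the memory figures are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Layer:
    dnn: str      # which DNN model the layer belongs to
    kind: str     # layer type, e.g. "conv" or "fc"
    mem_kb: int   # hypothetical on-chip memory footprint


@dataclass
class SubAccel:
    name: str
    mem_kb: int                # on-chip memory available to this sub-accelerator
    latency: dict[str, int]    # hypothetical latency per layer kind
    busy_until: int = 0


def schedule(layers: list[Layer], accels: list[SubAccel]) -> list[tuple[str, str, str, int, int]]:
    """Greedily place layers; returns (dnn, kind, accel_name, start, end) tuples."""
    dnn_ready: dict[str, int] = {}  # finish time of each DNN's previous layer
    plan = []
    for layer in layers:
        # Keep only sub-accelerators that fit the layer and support its type.
        candidates = [
            a for a in accels
            if a.mem_kb >= layer.mem_kb and layer.kind in a.latency
        ]
        if not candidates:
            raise ValueError(f"no sub-accelerator can run {layer}")

        def finish(a: SubAccel) -> int:
            start = max(a.busy_until, dnn_ready.get(layer.dnn, 0))
            return start + a.latency[layer.kind]

        best = min(candidates, key=finish)
        end = finish(best)
        start = end - best.latency[layer.kind]
        best.busy_until = end
        dnn_ready[layer.dnn] = end
        plan.append((layer.dnn, layer.kind, best.name, start, end))
    return plan
```

With one sub-accelerator tuned for convolutions and another for fully connected layers, this sketch lets a convolution layer of one DNN overlap in time with a fully connected layer of another, which is the concurrency a heterogeneous NPU is meant to exploit. A real scheduler would also have to account for data movement between sub-accelerators, which is ignored here.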

References:

[1] Stylianos I. Venieris et al., "Multi-DNN Accelerators for Next-Generation AI Systems"

https://arxiv.org/pdf/2205.09376.pdf

[2] Hyoukjun K., Liangzhen L., et al., "Heterogeneous Dataflow Accelerators for Multi-DNN Workloads"

https://arxiv.org/abs/1909.07437
