
Transformer Tracking

2022-06-11 06:50:00 【A Xuan is going to graduate~】

Xin Chen, Bin Yan, Jiawen Zhu, Dong Wang, Xiaoyun Yang and Huchuan Lu

1 School of Information and Communication Engineering, Dalian University of Technology, China

2 Peng Cheng Laboratory; 3 Remark AI

CVPR 2021

Abstract:

Correlation plays a key role in the tracking field, especially in the recently popular Siamese-based trackers. The correlation operation is a simple way of fusing features that considers the similarity between the template and the search region. However, the correlation operation is itself a local linear matching process: it loses semantic information and easily falls into local optima, which may be the bottleneck in designing high-accuracy tracking algorithms. Is there a better feature fusion method than correlation? To address this question, and inspired by the Transformer, the paper proposes a new attention-based feature fusion network that combines the template and search region features using attention only. Specifically, the proposed method includes an ego-context augment module based on self-attention and a cross-feature augment module based on cross-attention. Finally, the paper presents a Transformer tracking method (named TransT) built from a Siamese-like feature extraction backbone, the designed attention-based fusion mechanism, and a classification and regression head.

1. Introduction

(1) For most popular trackers (such as SiamFC, SiamRPN and ATOM), correlation plays a key role in integrating the template or target information into the regions of interest (ROI). However, the correlation operation itself is a linear matching process. It loses semantic information, which limits the tracker's ability to capture complex nonlinear interactions between the template and the ROI. Previous models therefore had to improve their nonlinear representation ability by introducing elaborate structures, adding extra modules, or designing effective online updaters. This naturally raises a question: is there a better feature fusion method than correlation?

Inspired by the core idea of the Transformer, the paper answers this question by designing an attention-based feature fusion network and proposing a novel Transformer tracking algorithm (TransT). The proposed feature fusion network includes an ego-context augment module based on self-attention and a cross-feature augment module based on cross-attention. This fusion mechanism effectively integrates the template and ROI features and produces feature maps that are more semantic than those produced by correlation.

2. Contributions:

(1) A novel Transformer tracking framework consisting of feature extraction, Transformer-like fusion, and head prediction modules. The Transformer-like fusion module combines the template and search region features using attention only, without correlation.

(2) A feature fusion network built from an ego-context augment module based on self-attention and a cross-feature augment module based on cross-attention. Compared with correlation-based feature fusion, the proposed attention-based method adaptively focuses on useful information, such as edges and similar targets, and establishes associations between distant features, so that the tracker obtains better classification and regression results.

3. Related work

(1) Siamese-network-based methods have been very popular in the tracking field in recent years. The mainstream tracking architecture can be divided into two parts:

- One part is the backbone network used to extract image features.

- The other part is a correlation-based network that computes the similarity between the template and the search region.

At present, many popular trackers rely on the correlation operation, but two problems are overlooked:

- A correlation-based network does not make full use of the global context, so it easily falls into a local optimum.

- Semantic information is lost to some extent through correlation, which may lead to inaccurate prediction of the target boundary.

The paper therefore designs an attention-based Transformer variant that replaces the correlation-based network for feature fusion.

(2) Transformer and Attention:

The Transformer was first proposed for machine translation. In short, it is an architecture that transforms one sequence into another with the help of attention-based encoders and decoders. The attention mechanism looks at the input sequence and decides, at each step, which other parts of the sequence are important, which helps capture global information from the input sequence. The Transformer has already replaced recurrent neural networks (RNNs) in many sequential tasks (natural language processing, speech processing and computer vision), and is gradually being extended to non-sequential tasks.

The paper introduces the Transformer structure into the tracking domain. It does not directly adopt the Transformer's encoder-decoder structure. Instead, it takes the Transformer's core idea, the attention mechanism, and uses it to design the ego-context augment (ECA) module and the cross-feature augment (CFA) module. ECA and CFA together focus on fusing features between the template and the search region, rather than extracting information from a single image. This design philosophy is better suited to visual object tracking. (Note: this could also be tried for RGB-T tracking, extracting information from the two modalities.)

3. Method

The paper proposes a Transformer tracking method, TransT. TransT consists of three parts: a backbone network, a feature fusion network and a prediction head. The backbone network extracts the features of the template and of the search region separately. The proposed feature fusion network then enhances and fuses these features. Finally, the prediction head performs binary classification and bounding box regression on the enhanced features to generate the tracking results.

3.1 The overall structure

3.1.1 Feature extraction

The backbone is a Siamese network whose inputs are the template image patch z and the search region image patch x. The template patch is cropped around the target center in the first frame with twice the target's side length, so it contains the target's appearance and its local surroundings. The search region is cropped around the target center of the previous frame with four times the target's side length, which usually covers the possible range of target motion. The search region and the template are reshaped to squares and then fed to the backbone. A modified ResNet50 is used for feature extraction: the last stage of ResNet50 is removed and the output of the fourth stage is taken as the final output. The stride of the down-sampling convolution in the fourth stage is changed from 2 to 1 to obtain a higher feature resolution, and the 3x3 convolutions in the fourth stage are changed to dilated convolutions (with a rate of 2) to enlarge the receptive field. The backbone processes the search region and the template to obtain their feature maps.
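As a rough illustration of the cropping rule described above, the sketch below computes a square region around the target center. The function name `square_crop` and the choice of the geometric mean of width and height as the target's "side length" are assumptions made for illustration; the text only states the factors 2 and 4.

```python
import math


def square_crop(cx, cy, w, h, scale):
    """Return (x0, y0, x1, y1) of a square region centred on the target.

    Assumption: the "side length" of a w x h target is sqrt(w * h).
    The square side is `scale` times that value.
    """
    side = scale * math.sqrt(w * h)
    half = side / 2.0
    return (cx - half, cy - half, cx + half, cy + half)


# Template patch: factor 2 around the first-frame target.
template_box = square_crop(100.0, 80.0, 40.0, 10.0, scale=2.0)
# Search region: factor 4 around the previous-frame target.
search_box = square_crop(100.0, 80.0, 40.0, 10.0, scale=4.0)
```

Both regions would then be resized to fixed square inputs before being passed to the backbone.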


3.1.2 Feature fusion network

A feature fusion network is designed to effectively fuse the features $f_z$ and $f_x$. First, a 1x1 convolution reduces the channel dimension of $f_z$ and $f_x$, giving two lower-dimensional feature maps $f_{z0} \in \mathbb{R}^{d \times H_z \times W_z}$ and $f_{x0} \in \mathbb{R}^{d \times H_x \times W_x}$; the experiments use d = 256. Because the attention-based feature fusion network takes a set of feature vectors as input, $f_{z0}$ and $f_{x0}$ are flattened along the spatial dimensions to obtain $f_{z1} \in \mathbb{R}^{d \times H_z W_z}$ and $f_{x1} \in \mathbb{R}^{d \times H_x W_x}$, each of which can be regarded as a set of feature vectors of length d. As shown in the figure, the feature fusion network takes $f_{z1}$ and $f_{x1}$ as the inputs of the template branch and the search region branch, respectively.

First, two ego-context augment (ECA) modules use multi-head self-attention to focus adaptively on useful semantic context and enhance the feature representation. Then, two cross-feature augment (CFA) modules each receive the feature maps of their own branch and of the other branch, and fuse the two feature maps through multi-head cross-attention. Two ECAs and two CFAs thus form one fusion layer. The fusion layer is repeated N times, after which one additional CFA fuses the feature maps of the two branches and decodes a feature map $f \in \mathbb{R}^{d \times H_x W_x}$. N is set to 4.
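The module ordering described above can be written down as a small schedule. This is only a sketch: the function name `fusion_schedule` and the tuple layout are hypothetical, and the final CFA is assumed to use the search branch as the query, which is consistent with the output having one vector per search region location.

```python
def fusion_schedule(n_layers=4):
    """List the attention modules as (module, query_branch, key_value_branch).

    Within one fusion layer the two ECAs run on their own branch and the
    two CFAs then read both ECA outputs; the order inside the list is
    notational, not a strict sequential dependency.
    """
    steps = []
    for _ in range(n_layers):
        steps.append(("ECA", "template", "template"))
        steps.append(("ECA", "search", "search"))
        steps.append(("CFA", "template", "search"))
        steps.append(("CFA", "search", "template"))
    # Additional CFA that decodes the final feature map (assumed query: search).
    steps.append(("CFA", "search", "template"))
    return steps


schedule = fusion_schedule()
```

With N = 4 this yields 8 ECA modules and 9 CFA modules in total.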

3.1.3 Prediction head network

The prediction head consists of a classification branch and a regression branch, each of which is a three-layer perceptron with hidden dimension d and a ReLU activation function. For the feature map $f \in \mathbb{R}^{d \times H_x W_x}$ produced by the feature fusion network, the head makes a prediction for each vector, giving $H_x W_x$ foreground/background classification results and $H_x W_x$ sets of normalized coordinates relative to the size of the search region. The tracker predicts the normalized coordinates directly.

3.2 Ego-Context Augment and Cross-Feature Augment Modules

3.2.1 Multi-head Attention

Attention is the basic building block of the feature fusion network.

The Transformer paper extends the attention mechanism to multiple heads, so that the mechanism can consider different attention distributions and the model can focus on different aspects of the information. Each head applies scaled dot-product attention to its own linear projections of the queries, keys and values, and the outputs of all heads are concatenated and projected once more.
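In symbols, and assuming the standard formulation from the original Transformer paper (the notation $n_h$ for the number of heads and $d_k$ for the key dimension is chosen here for illustration), multi-head attention can be written as:

```latex
\begin{aligned}
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V \\
H_i &= \mathrm{Attention}\!\left(QW_i^{Q},\; KW_i^{K},\; VW_i^{V}\right) \\
\mathrm{MultiHead}(Q, K, V) &= \mathrm{Concat}(H_1, \dots, H_{n_h})\, W^{O}
\end{aligned}
```

Here $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$ and $W^{O}$ are learned projection matrices.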

3.2.2 Ego-Context Augment (ECA)

ECA adaptively integrates information from different positions of the feature map by using multi-head self-attention in residual form. The attention mechanism cannot by itself distinguish the positions of the elements in the input feature sequence. Therefore, a spatial positional encoding is introduced for the input $X \in \mathbb{R}^{d \times N_x}$, generated with a sine function. In summary, ECA adds the output of multi-head self-attention back to its input X, with the positional encoding added to the queries and keys.
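Written as an equation, and assuming the formulation of the original paper with $P_x$ denoting the spatial positional encoding of $X$, the ECA mechanism reads:

```latex
X_{EC} = X + \mathrm{MultiHead}(X + P_x,\; X + P_x,\; X)
```

The positional encoding enters only the query and key arguments, so the values that get aggregated are the unmodified features.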

3.2.3 Cross-Feature Augment (CFA)

CFA uses multi-head cross-attention, in residual form, to fuse the feature vectors of its two inputs. Like ECA, CFA also uses spatial positional encoding. In addition, an FFN module is used to enhance the fitting ability of the model. The FFN is a fully connected feed-forward network consisting of two linear transformations with a ReLU in between, that is:

$$\mathrm{FFN}(x) = \max(0,\; xW_1 + b_1)\,W_2 + b_2$$

W and b denote the weight matrices and bias vectors, and the subscripts denote different layers. CFA therefore applies multi-head cross-attention with a residual connection, followed by the FFN with a second residual connection.
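Assuming the formulation of the original paper (its Eqs. (6) and (7)), the CFA mechanism can be written as:

```latex
\begin{aligned}
\tilde{X}_{CF} &= X_q + \mathrm{MultiHead}(X_q + P_q,\; X_{kv} + P_{kv},\; X_{kv}) \\
X_{CF} &= \tilde{X}_{CF} + \mathrm{FFN}(\tilde{X}_{CF})
\end{aligned}
```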

In this formulation, $X_q \in \mathbb{R}^{d \times N_q}$ is the input of the branch the module belongs to, and $P_q \in \mathbb{R}^{d \times N_q}$ is the spatial positional encoding of $X_q$. $X_{kv} \in \mathbb{R}^{d \times N_{kv}}$ is the input from the other branch, and $P_{kv} \in \mathbb{R}^{d \times N_{kv}}$ is the spatial encoding of the coordinates of $X_{kv}$. $X_{CF} \in \mathbb{R}^{d \times N_q}$ is the output of CFA. According to Eq. (6) of the paper, CFA computes the attention map from multiple scaled products between $X_{kv}$ and $X_q$, then reweights $X_{kv}$ according to the attention map and adds the result to $X_q$, in order to enhance the representation ability of the feature map.
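The following dependency-free sketch illustrates the ECA and CFA data flow with a single attention head and no learned projections, which is a simplification of the multi-head modules described above. All function names are hypothetical, and features are stored as lists of row vectors (N x d) rather than the d x N layout used in the text.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def attention(q, k, v):
    """Single-head scaled dot-product attention over row vectors."""
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]
    weights = []
    for row in matmul(q, k_t):
        scaled = [s / scale for s in row]
        m = max(scaled)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scaled]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return matmul(weights, v)


def eca(x, p_x):
    """Residual self-attention: X + Attention(X + P_x, X + P_x, X)."""
    qk = add(x, p_x)
    return add(x, attention(qk, qk, x))


def ffn(x, w1, b1, w2, b2):
    """Two linear maps with a ReLU in between."""
    hidden = [[max(0.0, h + b) for h, b in zip(row, b1)] for row in matmul(x, w1)]
    return [[o + b for o, b in zip(row, b2)] for row in matmul(hidden, w2)]


def cfa(x_q, p_q, x_kv, p_kv, w1, b1, w2, b2):
    """Residual cross-attention followed by a residual FFN."""
    fused = add(x_q, attention(add(x_q, p_q), add(x_kv, p_kv), x_kv))
    return add(fused, ffn(fused, w1, b1, w2, b2))


# Toy usage: two query vectors attend to a single key/value vector.
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
out = cfa(
    x_q=[[0.0, 0.0], [1.0, 1.0]], p_q=zeros,
    x_kv=[[1.0, 2.0]], p_kv=[[0.0, 0.0]],
    w1=identity, b1=[0.0, 0.0], w2=zeros, b2=[0.0, 0.0],
)
enhanced = eca([[1.0, 2.0]], [[0.0, 0.0]])
```

In the toy call the second FFN weight matrix is zero, so the output equals the residual cross-attention result; the output always has one row per query vector, matching the statement that the CFA output has the same size as $X_q$.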

3.2.4 What does attention want to see?

To explore how the attention modules work within this framework, the paper visualizes the attention maps of all attention modules, as shown in the corresponding figure of the paper.

3.3 Training loss

The prediction head receives $H_x \times W_x$ feature vectors and outputs $H_x \times W_x$ binary classification and regression results. The feature vectors whose corresponding pixels lie inside the ground-truth bounding box are taken as positive samples, and the rest are negative samples. All samples contribute to the classification loss, while only positive samples contribute to the regression loss. To reduce the imbalance between positive and negative samples, the loss from negative samples is down-weighted by a factor of 16. Classification uses the standard binary cross-entropy loss.

For regression, a linear combination of the $\ell_1$-norm loss $L_1(\cdot,\cdot)$ and the generalized IoU loss $L_{GIoU}(\cdot,\cdot)$ is used.

The regression loss sums $\lambda_G L_{GIoU}(b_j, \hat{b}) + \lambda_1 L_1(b_j, \hat{b})$ over the positive samples, where $y_j = 1$ denotes a positive sample, $b_j$ is the j-th predicted bounding box and $\hat{b}$ is the normalized ground-truth bounding box. $\lambda_G = 2$ and $\lambda_1 = 5$ are the regularization parameters used in the experiments.
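A minimal sketch of the two loss terms is given below. It assumes boxes in corner format (x0, y0, x1, y1) with normalized coordinates, un-normalized sums over samples, and hypothetical function names; the weights 2, 5 and 1/16 are the ones quoted above.

```python
import math


def giou(a, b):
    """Generalized IoU of two boxes given as (x0, y0, x1, y1)."""
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area_a + area_b - inter
    # Smallest axis-aligned box enclosing both inputs.
    hull = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (hull - union) / hull


def classification_loss(probs, labels, neg_weight=1.0 / 16.0):
    """Binary cross-entropy with negative samples down-weighted by 16."""
    total = 0.0
    for p, y in zip(probs, labels):
        if y == 1:
            total -= math.log(p)
        else:
            total -= neg_weight * math.log(1.0 - p)
    return total


def regression_loss(pred_boxes, labels, gt_box, lambda_g=2.0, lambda_1=5.0):
    """Weighted GIoU + L1 loss, accumulated over positive samples only."""
    total = 0.0
    for box, y in zip(pred_boxes, labels):
        if y != 1:
            continue  # negative samples do not contribute to regression
        l_giou = 1.0 - giou(box, gt_box)
        l_1 = sum(abs(p - g) for p, g in zip(box, gt_box))
        total += lambda_g * l_giou + lambda_1 * l_1
    return total
```

For example, a positive sample whose predicted box coincides with the ground truth contributes zero regression loss, while any box predicted at a negative location is ignored by `regression_loss` entirely.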

边栏推荐

- Reconstruction and preheating of linked list information management system (2) how to write the basic logic using linear discontinuous structure?

- Redux learning (I) -- the process of using Redux

- fatal: refusing to merge unrelated histories

- Learn a trick to use MySQL functions to realize data desensitization

- Required reading 1: the larger the pattern, the better they know how to speak

- 538.把二叉搜索树转换成累加树

- 搜狐员工遭遇工资补助诈骗 黑产与灰产有何区别 又要如何溯源?

- [probability theory and mathematical statistics] Dr. monkey's notes p41-44 statistics related topics, determination of three distributions, properties, statistics subject to normal distribution in gen

- Scripy web crawler series tutorials (I) | construction of scripy crawler framework development environment

- 核查医药代表备案信息是否正确

猜你喜欢

Redux learning (I) -- the process of using Redux

![Illustration of JS implementation from insertion sort to binary insertion sort [with source code]](/img/e5/1956af15712ac3e89302d7dd73f403.jpg)

Illustration of JS implementation from insertion sort to binary insertion sort [with source code]

【迅为干货】龙芯2k1000开发板opencv 测试

What are the differences and usages of break and continue?

搜狐员工遭遇工资补助诈骗 黑产与灰产有何区别 又要如何溯源?

Moment time plug-in tips -js (super detailed)

617. 合并二叉树

563. 二叉树的坡度

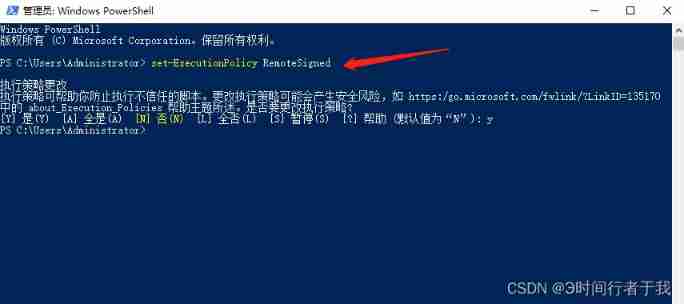

NPM upgrade: unable to load file c:\users\administrator\appdata\roaming\npm\npm-upgrade ps1

Luogu p1091 chorus formation (longest ascending subsequence)

随机推荐

[turn] flying clouds_ Qt development experience

VTK-vtkPlane和vtkCutter使用

021 mongodb database from getting started to giving up

3.1 naming rules of test functions

SQL language - query statement

Count the time-consuming duration of an operation (function)

About the designer of qtcreator the solution to the problem that qtdesigner can't pull and hold controls normally

Learn a trick to use MySQL functions to realize data desensitization

Unity 全景漫游过程中使用AWSD控制镜头移动,EQ控制镜头升降,鼠标右键控制镜头旋转。

Summary and review

What are the differences and usages of break and continue?

双周投融报:资本抢滩元宇宙游戏

The nearest common ancestor of 235 binary search tree

关于 QtCreator的设计器QtDesigner完全无法正常拽托控件 的解决方法

六月集训(第11天) —— 矩阵

[matlab WSN communication] a_ Star improved leach multi hop transmission protocol [including source code phase 487]

Post exam summary

Wan Zichang's article shows you promise

必读1:格局越大的人,越懂得说话

【Matlab图像加密解密】混沌序列图像加密解密(含相关性检验)【含GUI源码 1862期】