Facebook AI | Learning inverse folding from millions of predicted structures

2022-06-10 17:07:00 【DrugAI】

Compiled by | Liumingquan  Reviewed by | Xia Xinyan

This post introduces recent work from the Facebook AI lab, "Learning inverse folding from millions of predicted structures". The model's task is to predict a protein sequence from the coordinates of its backbone. In addition to experimentally determined protein structures, the authors use structures predicted by AlphaFold2 as extra training data and train a seq2seq Transformer with geometrically invariant processing layers. The model achieves 51% native sequence recovery, with 72% recovery for buried residues, an overall improvement of almost 10 percentage points over existing methods.

1

Introduction

Experimentally determined structures cover less than 0.1% of the known protein sequence space, which limits the use of deep learning methods. The authors use AlphaFold2 to predict structures for 12M sequences in UniRef50, increasing the training data by nearly three orders of magnitude, to explore whether predicted structures can overcome the limitations of experimental data.

In addition, the authors frame inverse folding as a sequence-to-sequence problem and model it with an autoregressive encoder-decoder architecture. The model's task is to predict the protein sequence from the protein backbone coordinates.

2

Model

Problem definition
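
Given the autoregressive sequence-to-sequence framing, the objective can be sketched as a conditional sequence model. The notation here is an assumption for illustration: X denotes the backbone coordinates and Y = (y_1, ..., y_n) the amino acid sequence of length n.

```latex
p(Y \mid X) = \prod_{i=1}^{n} p(y_i \mid y_{i-1}, \ldots, y_1; X)
```

Under this factorization, training amounts to maximizing the log-likelihood of the native sequence given its backbone, and sampling proceeds one residue at a time.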

Architecture

Geometric Vector Perceptron (GVP) layers are used to learn equivariant transformations of vector features and invariant transformations of scalar features. Specifically, three architectures are considered: (1) GVP-GNN; (2) GVP-GNN-large, a wider and deeper GVP-GNN; (3) GVP-Transformer, a hybrid model consisting of a GVP-GNN structure encoder followed by a generic Transformer. To ensure that the predicted sequence is independent of the reference frame of the structure coordinates, both GVP-GNN and GVP-Transformer satisfy the following property: for any rotation and translation T of the input coordinates X, the output distribution over the sequence Y is invariant, that is, p(Y | X) = p(Y | TX). GVP and GVP-GNN come from an earlier paper, summarized below.

The GVP architecture aims to improve geometric reasoning over biomolecular structures by combining the strengths of CNN-based and GNN-based approaches. GNNs usually encode the 3D geometry of a protein by expressing vector features (such as node orientations and edge directions) as rotation-invariant scalars, typically by defining a local coordinate system at each node. In contrast, the GVP authors propose representing these features directly as geometric vectors in R^3 at every step of graph propagation, so that they transform appropriately under a change of spatial coordinates. This brings two benefits. First, the input representation is more efficient: instead of encoding the orientation of a node through its relative orientation to all of its neighbors, only one absolute orientation per node is needed. Second, it standardizes a global coordinate system across the whole structure, so geometric features can be propagated directly without converting between local coordinate systems. For example, the representation of any position in space, including points that are not themselves nodes, can be propagated across the graph by Euclidean vector addition. The key challenge with this representation is to perform graph propagation in a way that preserves the full expressive power of the original GNN while maintaining the rotation invariance provided by the scalar representation. To this end, the authors introduce a new module, the geometric vector perceptron (GVP), to replace the linear (dense) layers in a GNN.

The structure of the GVP is as follows:

(A) Given a tuple of scalar and vector input features (s, V), the perceptron computes an updated tuple (s', V'); s' is a function of both s and V. (B) Illustration of the structure-based prediction tasks. In computational protein design (top), the goal is to predict an amino acid sequence that would fold into a given protein backbone. Individual atoms are represented as colored spheres. In model quality assessment (bottom), the goal is to predict the quality score of a candidate structure, which measures the similarity between the candidate structure and the experimentally determined structure (gray). The algorithm is described as follows:

At its core, the GVP consists of two separate linear transformations, W_m and W_h, applied to the scalar and vector features respectively, followed by the nonlinearities σ and σ+. Before the scalar features are transformed, they are concatenated with the norms of the transformed vector features, which lets the model extract rotation-invariant information from the input vectors. An additional linear transformation, W_μ, is used only to control the dimensionality of the output vectors.
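
The layer described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the weight names `W_h`, `W_mu`, `W_m`, the bias `b`, and the choice of ReLU and sigmoid as the two nonlinearities are assumptions, and plain Python lists are used only to keep the example dependency-free (a real model would use a tensor library).

```python
import math


def matmul(A, B):
    """Multiply matrix A (p x q) by matrix B (q x r), both given as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def row_norms(V):
    """L2 norm of each row vector of V."""
    return [math.sqrt(sum(x * x for x in row)) for row in V]


def gvp(s, V, W_h, W_mu, W_m, b):
    """One geometric vector perceptron layer (illustrative sketch).

    s    : list of n scalar features
    V    : nu x 3 matrix, one 3-D vector feature per row
    W_h  : h x nu matrix applied to the vector features
    W_mu : mu x h matrix that sets the number of output vectors
    W_m  : m x (h + n) matrix applied to the concatenated scalars
    b    : list of m biases
    Returns (s_out, V_out) with m scalars and mu 3-D vectors.
    """
    V_h = matmul(W_h, V)        # h x 3: linearly mixed vector features
    V_mu = matmul(W_mu, V_h)    # mu x 3: output-sized vector features
    s_h = row_norms(V_h)        # rotation-invariant norms of V_h
    v_mu = row_norms(V_mu)      # norms used to gate the output vectors
    s_cat = s_h + list(s)       # concatenate norms with the input scalars
    s_m = [sum(w * x for w, x in zip(row, s_cat)) + bias
           for row, bias in zip(W_m, b)]
    s_out = [max(0.0, x) for x in s_m]  # scalar nonlinearity (ReLU, assumed)
    # Vector nonlinearity (sigmoid gate, assumed): rescale each vector by a
    # function of its own norm, so its direction is preserved.
    V_out = [[x / (1.0 + math.exp(-g)) for x in row]
             for g, row in zip(v_mu, V_mu)]
    return s_out, V_out
```

Because the vector features are only ever mixed linearly across channels and rescaled by functions of their norms, rotating the input vectors rotates the output vectors and leaves the output scalars unchanged.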

Although conceptually simple, the GVP can be shown to have the desired equivariance/invariance and expressiveness properties. First, the vector and scalar outputs of the GVP are equivariant and invariant, respectively, under an arbitrary composition R of rotations and reflections. That is, if GVP(s, V) = (s', V'), then GVP(s, R(V)) = (s', R(V')). In addition, the GVP architecture can approximate any continuous rotation- and reflection-invariant scalar-valued function of V.
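
The invariance claim follows from a short argument, sketched here under assumed notation: each row of V is a 3-D vector, R is an orthogonal 3x3 matrix acting on rows as V R^T, W_h is the linear map applied to the vector channels, and V_h = W_h V.

```latex
\begin{aligned}
W_h (V R^\top) &= (W_h V) R^\top = V_h R^\top, \\
\lVert (V_h R^\top)_i \rVert_2^2 &= (V_h)_i \, R^\top R \, (V_h)_i^\top = \lVert (V_h)_i \rVert_2^2, \\
s'(V R^\top) &= s'(V), \qquad V'(V R^\top) = V'(V)\, R^\top .
\end{aligned}
```

The first line holds because channel mixing acts on the left and the rotation on the right. The second uses R^T R = I, so every norm fed into the scalar path is unchanged. The third follows because the output vectors are linear in V and rescaled only by functions of those norms.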

3

Experimental results

The model is evaluated in two general settings: fixed-backbone sequence design and zero-shot prediction of mutation effects.

3.1 Fixed-backbone protein design

Perplexity and sequence recovery are the two metrics commonly used for this task. Perplexity measures the inverse likelihood of the native sequence under the predicted sequence distribution (low perplexity means high likelihood). Sequence recovery (accuracy) measures how often a sampled sequence matches the native sequence at each position. The results are shown below:

Fixed-backbone sequence design, evaluated on the CATH 4.3 topology-split test set. Models are compared on per-residue perplexity (lower is better; lowest in bold) and sequence recovery (higher is better; highest in bold). Larger models make better use of the predicted UniRef50 structures. The best model trained with predicted structures (GVP-Transformer) improves sequence recovery by 8.9 percentage points over the best model trained on CATH alone (GVP-GNN).
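
The two metrics reported here, per-residue perplexity and sequence recovery, can be sketched as follows. This is a minimal illustration assuming natural-log probabilities and sequences given as strings of one-letter amino acid codes; the function names are hypothetical.

```python
import math


def perplexity(log_probs):
    """Per-residue perplexity: exp of the mean negative log-likelihood.

    log_probs holds the natural-log probability the model assigns to the
    native residue at each position.
    """
    if not log_probs:
        raise ValueError("log_probs must not be empty")
    return math.exp(-sum(log_probs) / len(log_probs))


def sequence_recovery(predicted, native):
    """Fraction of positions where the predicted residue equals the native one."""
    if len(predicted) != len(native) or not native:
        raise ValueError("sequences must be non-empty and of equal length")
    matches = sum(p == q for p, q in zip(predicted, native))
    return matches / len(native)
```

As a sanity check, a model that assigns uniform probability 1/20 to every residue has a perplexity of 20, the size of the amino acid alphabet.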

Partially masked backbones: masking during training enables the model to predict the sequence of masked regions in the test set.

Perplexity on masked coordinate regions of different lengths. For masked regions longer than a few tokens, the GVP-GNN architectures degrade to the perplexity of the background distribution, whereas GVP-Transformer maintains moderate accuracy over long masked spans, especially when trained with span masking.
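
Span masking of backbone coordinates can be illustrated with a small helper. This is only a sketch of the general idea: the function name, the use of `None` as the mask value, and the single-span policy are assumptions, not the masking scheme used in the paper.

```python
import random


def mask_random_span(coords, span_length, rng):
    """Return a copy of coords with one contiguous span replaced by None.

    coords      : list of per-residue coordinates (any objects)
    span_length : number of consecutive residues to mask
    rng         : a random.Random instance, for reproducibility
    Also returns the start index of the masked span.
    """
    if not 0 < span_length <= len(coords):
        raise ValueError("span_length must be between 1 and len(coords)")
    start = rng.randrange(len(coords) - span_length + 1)
    masked = list(coords)  # shallow copy; the input list is left untouched
    masked[start:start + span_length] = [None] * span_length
    return masked, start
```

The model still has to emit a residue at each masked position, so training on such inputs teaches it to infer the sequence of a missing stretch from the surrounding structure.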

Protein complexes: the model generalizes well to multi-chain protein complexes. The results show that both GVP-GNN and GVP-Transformer can effectively use inter-chain information to improve the accuracy of the predicted sequence for each chain.

Sequence design performance on complexes in the CATH topology-split test set, when given the backbone coordinates of only one chain ("Chain" column) and when given all backbone coordinates of the complex ("Complex" column). For both columns, perplexity is evaluated on the same chain in the complex.

Multiple conformations: given two states A and B of the same protein, the task is to predict its sequence. The geometric mean of the two conditional likelihoods is used as a proxy for the desired distribution, which ensures that the sequence is compatible with both states. Multi-state design yields lower sequence perplexity than single-state design, as shown below.

Two-state design. On the PDBFlex dataset, GVP-Transformer achieves lower sequence perplexity at locally flexible residues when conditioned on two conformations than when conditioned on a single conformation.
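
The geometric-mean proxy used for two-state design can be sketched for a single position. This is an illustration under assumptions: each conditional distribution is given as a dict from residue to probability, and the result is renormalized to sum to one (the renormalization step is an assumption made here, not something stated in the text).

```python
import math


def geometric_mean_distribution(p_a, p_b):
    """Combine two distributions over the same residues by a normalized geometric mean.

    p_a, p_b : dicts mapping residue -> probability, conditioned on
               conformation A and conformation B respectively.
    """
    if p_a.keys() != p_b.keys():
        raise ValueError("both distributions must cover the same residues")
    unnormalized = {aa: math.sqrt(p_a[aa] * p_b[aa]) for aa in p_a}
    total = sum(unnormalized.values())
    return {aa: value / total for aa, value in unnormalized.items()}
```

A residue keeps a high combined probability only if it is likely under both conformations, which is why the geometric mean favors sequences compatible with both states.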

3.2 Zero-shot prediction

Next, the authors show that the inverse folding model is an effective zero-shot predictor of mutation effects in practical design applications, including the prediction of complex stability, binding affinity, and insertion effects. For example, the zero-shot performance for predicting the binding energy of the SARS-CoV-2 Spike receptor-binding domain (RBD) is as follows:

The zero-shot prediction is based on the log-likelihood of the receptor-binding motif (RBM) sequence; the RBM is the part of the RBD in direct contact with ACE2 (Lan et al., 2020). Four settings are evaluated:

1) Given sequence data only ("No coords");

2) Given the backbone coordinates of ACE2 and the RBD, excluding the RBM, and no sequence ("No RBM coords");

3) Given the full backbone of the RBD, but no information about ACE2 ("No ACE2 coords");

4) Given all coordinates of the RBD and ACE2 ("All coords").
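
Scoring a variant by the log-likelihood of a sequence region can be sketched as below. This is a simplified illustration: the per-position table `toy_log_probs` is a made-up stand-in for model output, the region indices are hypothetical, and a real autoregressive model would produce log-probabilities that depend on the preceding residues rather than a fixed table.

```python
import math


def region_log_likelihood(sequence, position_log_probs, region):
    """Sum of log-probabilities of the residues of `sequence` at the `region` positions.

    position_log_probs[i] maps residue -> log-probability at position i.
    """
    return sum(position_log_probs[i][sequence[i]] for i in region)


# Toy stand-in for model output over a two-letter alphabet (values invented).
toy_log_probs = [
    {"A": math.log(0.7), "G": math.log(0.3)},
    {"A": math.log(0.6), "G": math.log(0.4)},
    {"A": math.log(0.2), "G": math.log(0.8)},
]
toy_region = [1, 2]  # hypothetical motif positions

wild_type_score = region_log_likelihood("AAG", toy_log_probs, toy_region)
mutant_score = region_log_likelihood("AGA", toy_log_probs, toy_region)
```

Variants are then ranked by score, with a higher log-likelihood read as a more favorable prediction. Changing which coordinates the model is conditioned on, as in the four settings above, changes the log-probabilities and therefore the ranking.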

4

Summary

The authors explored whether protein structures predicted by deep learning methods can be used together with experimental structures to train protein design models. To this end, they generated predicted structures for 12M UniRef50 sequences with AlphaFold2 and trained on them, improving both perplexity and sequence recovery, and they demonstrated generalization to longer protein complexes, proteins with multiple conformations, zero-shot prediction of mutation effects on binding energy, and AAV packaging prediction. These results suggest that, beyond the geometric inductive biases that have been the main focus of inverse folding work, finding ways to use more sources of training data to improve model capacity is equally important. By integrating backbone span masking into the inverse folding task and using a sequence-to-sequence Transformer, reasonable sequence predictions can be achieved for short masked spans.

References

https://doi.org/10.1101/2022.04.10.487779

https://github.com/facebookresearch/esm

边栏推荐

- Take you to play completablefuture asynchronous programming

- Desai wisdom number - words (text wall): 25 kinds of popular toys for the post-80s children

- 【BSP视频教程】BSP视频教程第17期:单片机bootloader专题,启动,跳转配置和调试下载的各种用法(2022-06-10)

- AHK common functions

- Fiddler过滤会话

- Postman switching topics

- AIChE | 集成数学规划方法和深度学习模型的从头药物设计框架

- AlphaFold 和 NMR 测定溶液中蛋白质结构的准确性

- 智慧景区视频监控 5G智慧灯杆网关组网综合杆

- MFC basic knowledge and course design ideas

猜你喜欢

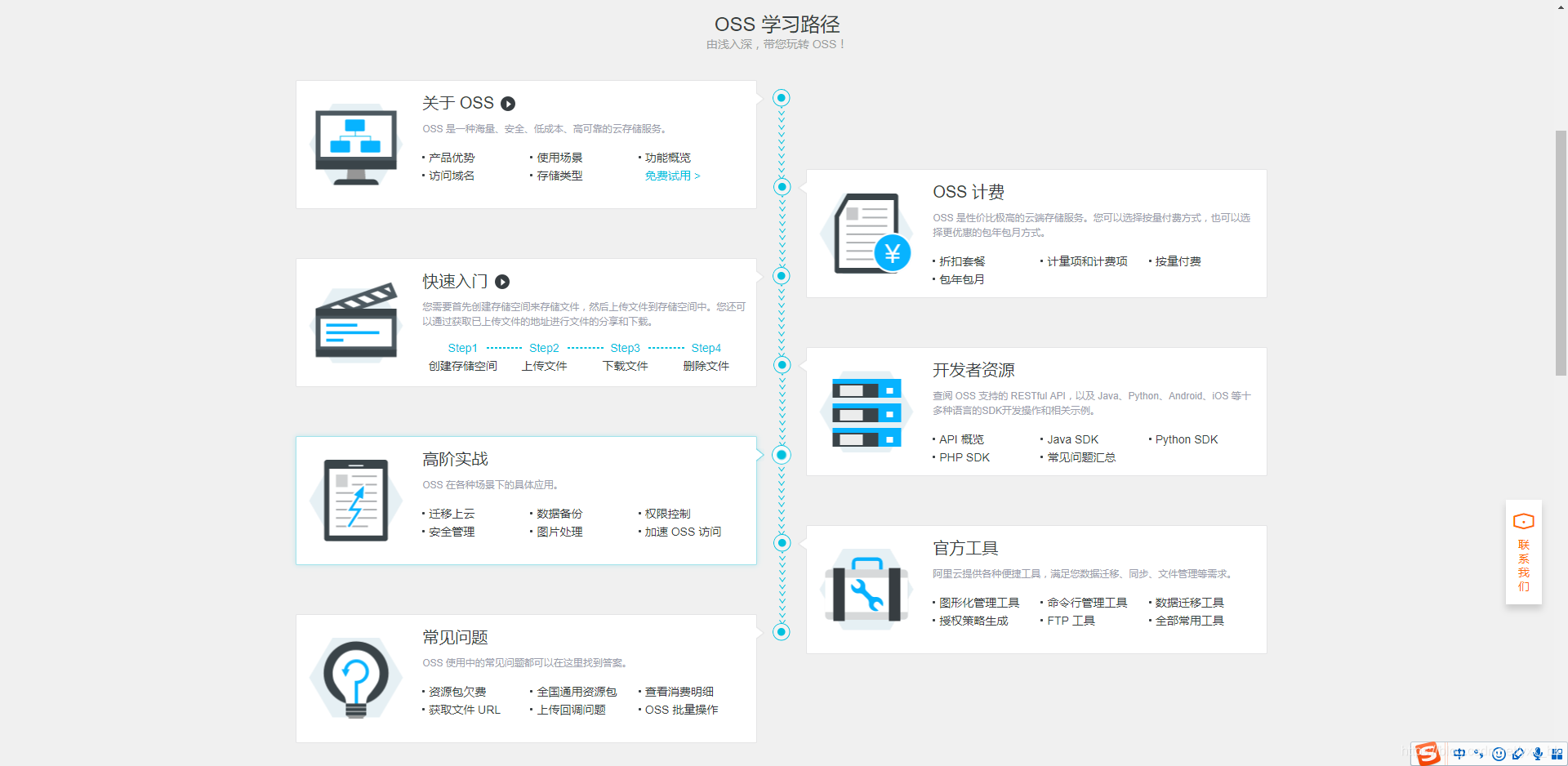

oss存储引出的相关内容

Thread interview related questions

![[web security self-study] section 1 building of basic Web Environment](/img/f8/f2d13c2879cdbc03ad261c6569bc1d.jpg)

[web security self-study] section 1 building of basic Web Environment

Do you know the five GoLand shortcuts to improve efficiency?

Docker安装Redis镜像详细步骤(简单易懂,适合新手快速上手)

智慧景区视频监控 5G智慧灯杆网关组网综合杆

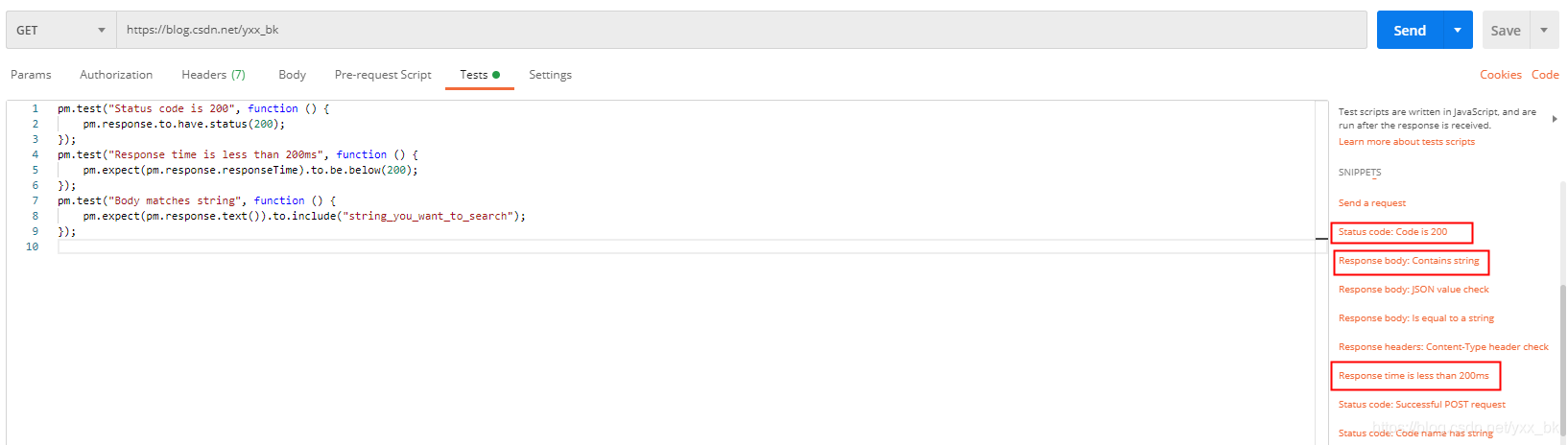

postman常用断言

![Download and install pycharm integrated development environment [picture]](/img/a1/a978f215517436c608f29a7d99c30c.jpg)

Download and install pycharm integrated development environment [picture]

IDEA的Swing可视化插件JFormDesigner

Shit, jialichuang's price is reduced again

随机推荐

Hidden Markov model and its training (1)

Fiddler set breakpoint

postman常用断言

adb不是内部或外部命令,也不是可运行的程序或批处理文件

Fiddler为测试做什么

线程面试相关问题

How does Dao achieve decentralized governance?

[quick code] define the new speed of intelligent transportation with low code

Fiddler设置断点

象形动态图图像化表意数据

提高效率的 5 个 GoLand 快捷键,你都知道吗?

Why do I need a thread pool? What is pooling technology?

卷起來,突破35歲焦慮,動畫演示CPU記錄函數調用過程,進互聯大廠如此簡單

Who is using my server? what are you doing? when?

从零开始,如何拥有自己的博客网站【华为云至简致远】

Fiddler配置

Print linked list from end to end (6)

Fabric.js 缩放画布

How to build an enterprise middle office? You need a low code platform!

script 标签自带的属性