[Deep Learning] An Overview of Video Classification Techniques

2022-07-27 20:36:00 【Demeanor 78】

I have recently been working on multimodal video classification, so this post summarizes the main techniques for video classification to share with you.

In a traditional image classification task, the input is generally an H×W×C two-dimensional image; after convolution and related operations, the network outputs a probability for each class.

For video tasks, the input is a temporally ordered sequence of consecutive two-dimensional frames, which can also be viewed as a T×H×W×C four-dimensional tensor.

This kind of data has the following characteristics:

1. The changes between frames often reflect the content of the video;

2. Adjacent frames usually differ only slightly, so there is a lot of redundancy.

The most intuitive and simplest approach is to reuse static image classification: treat each video frame as an independent 2D image, use a neural network to extract a feature vector from each frame, and average the feature vectors of all frames to obtain a feature vector for the whole video, which is then classified. Alternatively, make a prediction for every frame directly and reach a consensus over all the frame-level results.
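A minimal sketch of the two aggregation options in plain Python. The per-frame features are made-up numbers standing in for CNN outputs, the function names are hypothetical, and majority voting is just one simple form of consensus:

```python
from collections import Counter


def average_pool(frame_features):
    """Average T per-frame feature vectors (each of length D) into one video-level vector."""
    t = len(frame_features)
    d = len(frame_features[0])
    return [sum(f[j] for f in frame_features) / t for j in range(d)]


def majority_consensus(frame_predictions):
    """Return the class predicted by the most frames (ties go to the class seen first)."""
    return Counter(frame_predictions).most_common(1)[0][0]


# Three frames with 4-dimensional features (illustrative values only).
features = [[1.0, 0.0, 2.0, 4.0],
            [3.0, 0.0, 2.0, 0.0],
            [2.0, 3.0, 2.0, 2.0]]
video_feature = average_pool(features)                    # [2.0, 1.0, 2.0, 2.0]
video_label = majority_consensus(["run", "walk", "run"])  # "run"
```

Both functions ignore frame order entirely: shuffling the frames gives the same result, which is exactly the limitation discussed next.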

The advantage of this approach is that its computation is small, similar to that of ordinary 2D image classification, and it is very simple to implement. However, it ignores the relationships between frames, and average pooling or consensus is a crude way to aggregate, so a lot of information is lost. It is a good choice when videos are short and frames change little.

Another scheme also treats each video frame as an independent 2D image, but fuses the frame features with VLAD. VLAD (Vector of Locally Aggregated Descriptors) is an algorithm proposed in 2010 for image retrieval in large-scale image databases; it converts an N×D feature matrix into a K×D matrix, where K is the number of cluster centers (usually fewer than N). Applied to video, we cluster the frame features of a video to obtain several cluster centers, assign every feature to a cluster center, compute an aggregated feature vector for each cluster region, and finally concatenate (or take a weighted sum of) the vectors of all cluster regions to form the feature vector of the whole video.
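The following plain-Python sketch shows hard-assignment VLAD. It assumes the cluster centers are already given (in practice they would come from k-means on training features) and follows the original VLAD formulation, in which each cluster region aggregates the residuals x − c_k and the K residual vectors are concatenated and L2-normalized. The function names are hypothetical:

```python
import math


def nearest_center(x, centers):
    """Index of the cluster center with the smallest squared Euclidean distance to x."""
    dists = [sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in centers]
    return dists.index(min(dists))


def vlad(features, centers):
    """Hard-assignment VLAD: N x D features -> K x D residual sums -> flat K*D vector."""
    k, d = len(centers), len(centers[0])
    v = [[0.0] * d for _ in range(k)]
    for x in features:
        a = nearest_center(x, centers)        # a_k(x) is 1 only for the nearest center
        for j in range(d):
            v[a][j] += x[j] - centers[a][j]   # accumulate the residual x - c_k
    flat = [val for row in v for val in row]  # concatenate the K cluster descriptors
    norm = math.sqrt(sum(val * val for val in flat)) or 1.0  # avoid dividing by zero
    return [val / norm for val in flat]       # L2-normalize the K*D vector


features = [[0.0, 1.0], [1.0, 0.0], [9.0, 10.0]]  # N=3 frame features, D=2
centers = [[0.0, 0.0], [10.0, 10.0]]              # K=2 centers (assumed, e.g. from k-means)
descriptor = vlad(features, centers)              # length K*D = 4
```

The argmin inside `nearest_center` is what makes this version non-differentiable.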

In the original VLAD, the assignment term a_k (1 if a feature belongs to cluster k, 0 otherwise) is non-differentiable, and the clustering step itself is also non-differentiable. We therefore use the NetVLAD improvement: a softmax over the distances between each feature vector and all cluster centers gives the probability that the feature belongs to each center, and the cluster centers are learnable parameters.
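The soft assignment can be sketched as below. The softmax over −α‖x − c_k‖² follows the NetVLAD formulation; here α and the centers are fixed for illustration, whereas in a real model they would be learned. Every step is smooth, so an autodiff framework could backpropagate through it. Function names are hypothetical:

```python
import math


def soft_assign(x, centers, alpha):
    """Softmax over -alpha * squared distance: probability that x belongs to each center."""
    logits = [-alpha * sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in centers]
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    s = sum(exps)
    return [e / s for e in exps]


def netvlad(features, centers, alpha=1.0):
    """Soft-assignment VLAD: every feature adds its residual to every center, weighted by a_k(x)."""
    k, d = len(centers), len(centers[0])
    v = [[0.0] * d for _ in range(k)]
    for x in features:
        a = soft_assign(x, centers, alpha)
        for ki in range(k):
            for j in range(d):
                v[ki][j] += a[ki] * (x[j] - centers[ki][j])
    return v  # K x D; normalization and flattening would follow as in VLAD


features = [[0.0, 1.0], [1.0, 0.0], [9.0, 10.0]]
centers = [[0.0, 0.0], [10.0, 10.0]]
v = netvlad(features, centers, alpha=50.0)  # large alpha: close to hard VLAD residuals
```

As α grows, the soft weights approach the hard 0/1 assignment, so `v` here is essentially the unnormalized hard-VLAD result [[1, 1], [-1, 0]].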

Compared with average pooling, NetVLAD uses the cluster centers to turn a sequence of frame features into several video "shot" features, and then combines these shots into a global feature vector with learnable weights. However, the feature vector of each frame is still computed independently, so the temporal order and the changes between frames are not taken into account.

For sequences with temporal relations, a common practice is to use an RNN. Concretely, a network (usually a CNN) extracts a feature from each video frame to form a feature sequence; the sequence is fed in temporal order into an RNN such as an LSTM, and the RNN's final output is used for classification.
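To illustrate why a recurrent model can use frame order while average pooling cannot, here is a minimal vanilla tanh RNN in plain Python, used in place of an LSTM for brevity. The weights are arbitrary illustrative values, not trained parameters, and the function name is hypothetical:

```python
import math


def rnn_last_state(sequence, w_in, w_h, b):
    """Run h_t = tanh(W_in x_t + W_h h_{t-1} + b) over per-frame features; return the final h.

    An LSTM adds gates, but it consumes the sequence in the same order-dependent way.
    """
    hidden = len(b)
    h = [0.0] * hidden
    for x in sequence:
        h = [math.tanh(sum(w_in[i][j] * x[j] for j in range(len(x)))
                       + sum(w_h[i][j] * h[j] for j in range(hidden))
                       + b[i])
             for i in range(hidden)]
    return h


w_in = [[1.0, -1.0], [0.5, 0.5]]  # hidden size 2, input size D=2 (illustrative)
w_h = [[0.5, 0.0], [0.0, 0.5]]
b = [0.0, 0.0]
clip = [[1.0, 0.0], [0.0, 1.0]]   # two frame features
forward = rnn_last_state(clip, w_in, w_h, b)
backward = rnn_last_state(clip[::-1], w_in, w_h, b)
```

Averaging the two frames gives the same vector in either order, but here the first hidden unit of the final state changes sign when the clip is reversed; a classifier on top of the RNN output can therefore tell the two orders apart.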

This approach can model the temporal relationship between frames and should in theory work better. In our actual experiments, however, it showed no obvious advantage over the first approach. One possible reason is that RNNs tend to forget information over long sequences, while for short videos the simple static method is already good enough.

The two-stream method works better. It uses two network branches: an image branch that extracts feature vectors from the video frames, and an optical flow branch that extracts motion features from optical flow maps computed across multiple frames. The feature vectors of the two branches are fused for classification.
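A sketch of two common fusion options, assuming each branch has already produced a feature vector or class probabilities (computing optical flow itself is out of scope here). The equal weight `w_rgb=0.5`, the probabilities, and the function names are illustrative assumptions:

```python
def fuse_features(rgb_feat, flow_feat):
    """Feature-level fusion: concatenate the two branch vectors for a shared classifier."""
    return rgb_feat + flow_feat


def fuse_scores(rgb_probs, flow_probs, w_rgb=0.5):
    """Score-level (late) fusion: weighted average of per-class probabilities."""
    return [w_rgb * r + (1.0 - w_rgb) * f for r, f in zip(rgb_probs, flow_probs)]


rgb_probs = [0.6, 0.4]   # appearance branch slightly prefers class 0
flow_probs = [0.1, 0.9]  # motion branch strongly prefers class 1
fused = fuse_scores(rgb_probs, flow_probs)                  # about [0.35, 0.65]
prediction = max(range(len(fused)), key=fused.__getitem__)  # class 1
```

When appearance alone is ambiguous, the motion branch can tip the fused decision, which is the intuition behind adding optical flow.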

Each branch still works on static 2D inputs, so the computation stays relatively small, and the changes between frames are captured through optical flow. However, computing optical flow introduces extra overhead.

We can also extend the traditional 2D convolution kernel directly into a 3D kernel and treat the input frame sequence as a 4D tensor, so that the kernel slides along the time axis as well as the two spatial axes.
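A naive single-channel 3D convolution in plain Python shows how the kernel also slides along the time axis. It uses "valid" padding and stride 1 and, like deep learning frameworks, computes cross-correlation. The example kernel is a hypothetical temporal-difference filter that responds only to change between consecutive frames:

```python
def conv3d_single_channel(video, kernel):
    """Naive 'valid' 3D convolution (cross-correlation), stride 1.

    video:  T x H x W nested lists (one channel of the T x H x W x C tensor)
    kernel: kt x kh x kw nested lists; it slides over time as well as space.
    """
    t, h, w = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for z in range(t - kt + 1):
        plane = []
        for y in range(h - kh + 1):
            row = []
            for x in range(w - kw + 1):
                row.append(sum(kernel[a][b][c] * video[z + a][y + b][x + c]
                               for a in range(kt) for b in range(kh) for c in range(kw)))
            plane.append(row)
        out.append(plane)
    return out


video = [[[float(z)] * 2 for _ in range(2)] for z in range(3)]  # frame z filled with value z
temporal_diff = [[[-1.0]], [[1.0]]]                            # kt=2, kh=kw=1
out = conv3d_single_channel(video, temporal_diff)              # frame[z+1] - frame[z]
```

A 2D kernel applied to each frame separately could not produce this response, because it never sees two frames at once.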

Representative methods include C3D, I3D, and SlowFast.

This approach performs better in experiments, but 3D convolution is much more expensive, often by an order of magnitude compared with 2D models: a k×k×k kernel has k times the weights of a k×k kernel and is applied along the time axis as well. It usually needs a large amount of data to work well; with insufficient data, results can be poor or training may even fail.

To address this, a series of methods have been proposed to simplify the computation of 3D convolution, such as P3D, which approximates a 3D convolution with a low-rank factorization into separate spatial and temporal convolutions.
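A quick parameter count makes the cost difference concrete. The sketch assumes 64 input and output channels, no bias, and a P3D-style factorization of a 3×3×3 kernel into a 1×3×3 spatial convolution followed by a 3×1×1 temporal convolution with the same number of intermediate channels; the helper names are hypothetical:

```python
def conv3d_params(c_in, c_out, kt, kh, kw):
    """Number of weights in a 3D convolution layer (bias omitted)."""
    return c_in * c_out * kt * kh * kw


def p3d_style_params(c_in, c_out, k=3, c_mid=None):
    """Spatial 1 x k x k conv followed by temporal k x 1 x 1 conv (bias omitted).

    c_mid is the channel count between the two convs; by default it equals c_out.
    """
    c_mid = c_out if c_mid is None else c_mid
    return conv3d_params(c_in, c_mid, 1, k, k) + conv3d_params(c_mid, c_out, k, 1, 1)


c = 64
print(conv3d_params(c, c, 1, 3, 3))  # 2D 3x3 conv applied per frame: 36864
print(conv3d_params(c, c, 3, 3, 3))  # full 3x3x3 conv: 110592
print(p3d_style_params(c, c))        # 1x3x3 + 3x1x1: 49152
```

The multiply-adds per output position scale the same way, so the factorized form cuts the cost of each layer from 27C² to 12C² weights while still mixing information across frames.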

Building on ViT, TimeSformer proposes five different ways of computing attention, trading off computational complexity against the attention's field of view:

1. Space attention (S): self-attention is computed only among the patches within the same frame;

2. Joint space-time attention (ST): attention is computed over all patches in all frames;

3. Divided space-time attention (T+S): temporal attention is first computed among the patches at the same spatial position in different frames, and then spatial self-attention is computed among the patches within the same frame;

4. Sparse local global attention (L+G): local attention is first computed over the neighboring H/2 × W/2 patches across all frames, and then sparse global self-attention is computed over the whole clip using a stride of 2 patches; this can be seen as a faster approximation of full space-time attention;

5. Axial attention (T+W+H): self-attention is computed first along the time dimension, then among patches with the same vertical coordinate (the same row), and finally among patches with the same horizontal coordinate (the same column).
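To compare the schemes, the sketch below counts how many patches each query patch attends to, assuming t frames split into an h×w patch grid and ignoring the class token. L+G is omitted because its count depends on neighborhood and stride details. The example sizes (8 frames, a 14×14 grid such as 224×224 frames with 16×16 patches) and the function name are illustrative:

```python
def keys_per_query(scheme, t, h, w):
    """Number of patches each query patch attends to (class token ignored).

    t frames, each split into an h x w grid of patches.
    """
    n = h * w
    if scheme == "S":      # space only: patches in the same frame
        return n
    if scheme == "ST":     # joint space-time: every patch in every frame
        return t * n
    if scheme == "T+S":    # divided: same location across frames, plus the same frame
        return t + n
    if scheme == "T+W+H":  # axial: time axis, then the row, then the column
        return t + w + h
    raise ValueError(f"unsupported scheme: {scheme}")


for scheme in ("S", "ST", "T+S", "T+W+H"):
    print(scheme, keys_per_query(scheme, t=8, h=14, w=14))
# S 196
# ST 1568
# T+S 204
# T+W+H 36
```

With 8 × 196 = 1568 query patches, joint ST attention compares about 2.46 million query-key pairs per layer, while divided T+S needs about 0.32 million, yet T+S still lets every patch reach every frame across the two steps.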

In their experiments, the authors found that divided T+S attention works best: it greatly reduces the attention computation and even outperforms joint ST attention computed over all patches.

Video Swin Transformer is a 3D extension of Swin Transformer. It simply extends the windows in which attention is computed from 2D to 3D, and it achieves quite good results in experiments.
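The 3D window idea can be sketched as a partition of the token grid into non-overlapping windows, with attention restricted to the tokens inside each window. The grid and window sizes below are small illustrative values, the function name is hypothetical, and the shifted-window step that Swin applies between layers is omitted:

```python
def window_partition_3d(t, h, w, pt, ph, pw):
    """Group token coordinates (z, y, x) of a t x h x w grid into pt x ph x pw windows.

    Self-attention would then be computed only among tokens in the same window.
    Assumes each dimension is divisible by its window size (real models pad instead).
    """
    assert t % pt == 0 and h % ph == 0 and w % pw == 0, "grid must divide into windows"
    windows = {}
    for z in range(t):
        for y in range(h):
            for x in range(w):
                key = (z // pt, y // ph, x // pw)  # which window this token falls in
                windows.setdefault(key, []).append((z, y, x))
    return list(windows.values())


windows = window_partition_3d(4, 8, 8, 2, 4, 4)
print(len(windows), len(windows[0]))                    # 8 32
global_pairs = (4 * 8 * 8) ** 2                         # 65536 query-key pairs
windowed_pairs = sum(len(win) ** 2 for win in windows)  # 8 * 32**2 = 8192
```

Cost grows linearly with the number of windows instead of quadratically with the total number of tokens, which is what keeps 3D windowed attention affordable for long clips.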

In our experiments, Video Swin Transformer was one of the better video-understanding backbones available at the time, with results clearly better than the methods above.

The full version of the source file can be clicked Read the original obtain .

Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download machine learning and deep learning notes and other information printing 《 Statistical learning method 》 Code reproduction album machine learning communication qq Group 955171419, Please scan the code to join wechat group

边栏推荐

- 康佳半导体首款存储主控芯片量产出货,首批10万颗

- Office automation solution - docuware cloud is a complete solution to migrate applications and processes to the cloud

- Koin simple to use

- access control

- C语言pow函数(c语言中指数函数怎么打)

- Idea: solve the problem of code without prompt

- Common methods of object learning [clone and equals]

- My approval of OA project (Query & meeting signature)

- Knowledge dry goods: basic storage service novice Experience Camp

- 多点双向重发布及路由策略的简单应用

猜你喜欢

图解LeetCode——592. 分数加减运算(难度:中等)

Pyqt5 rapid development and practice 4.3 qlabel and 4.4 text box controls

Pyqt5 rapid development and practice 4.5 button controls and 4.6 qcombobox (drop-down list box)

I'm also drunk. Eureka delayed registration and this pit

Simple application of multipoint bidirectional republication and routing strategy

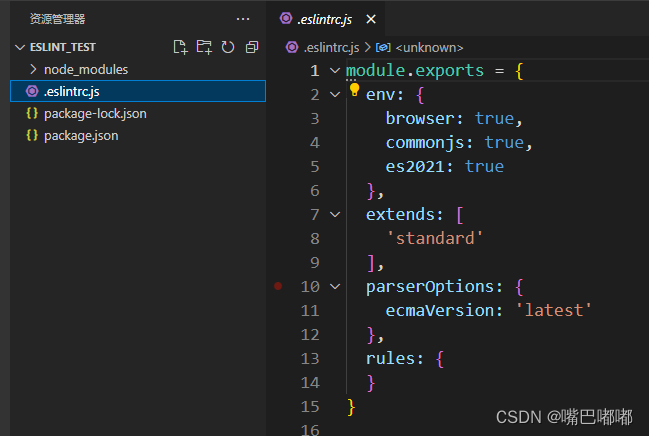

一看就懂的ESLint

PyQt5快速开发与实战 4.5 按钮类控件 and 4.6 QComboBox(下拉列表框)

Check the internship salary of Internet companies: with it, you can also enter the factory

JS realizes video recording - Take cesium as an example

Session attack

随机推荐

【深度学习】视频分类技术整理

图解LeetCode——592. 分数加减运算(难度:中等)

ES6 -- Deconstruction assignment

Mlx90640 infrared thermal imager temperature sensor module development notes (VII)

Idea: solve the problem of code without prompt

[rctf2015]easysql-1 | SQL injection

IE11 下载doc pdf等文件的方法

Koin simple to use

用户和权限创建普通用户

JVM overview and memory management (to be continued)

Why do we need third-party payment?

C语言--数组

What are the apps of regular futures trading platforms in 2022, and are they safe?

[RCTF2015]EasySQL-1|SQL注入

传英特尔将停掉台积电16nm代工的Nervana芯片

Two years after its release, the price increased by $100, and the reverse growth of meta Quest 2

Introduction to zepto

Simple application of multipoint bidirectional republication and routing strategy

JD: search product API by keyword

Understand the wonderful use of dowanward API, and easily grasp kubernetes environment variables