
Understand the autograd package in PyTorch

2022-07-07 22:27:00 【Eva215665】

This article mainly draws on the official PyTorch website and on Chapter 2 of the PyTorch edition of the book Dive into Deep Learning.

torch.Tensor is the core class of the autograd package. If a tensor x has its .requires_grad attribute set to True, autograd starts tracking all operations on it, so that gradients can later be propagated with the chain rule. After x has gone through a series of computations (producing y), calling y.backward() computes the gradients automatically, and the gradient with respect to x is accumulated into x.grad.
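The word "accumulated" matters in practice. The following is a minimal sketch, assuming a recent PyTorch install and arbitrary example values: a second backward pass adds its gradient to the existing x.grad instead of overwriting it, which is why the gradient has to be cleared between independent computations.

```python
import torch

# A leaf tensor: requires_grad=True tells autograd to record operations on it.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# First pass: y = sum(x^2), so dy/dx = 2x = [2, 4, 6].
y = (x ** 2).sum()
y.backward()
after_one = x.grad.clone()

# Second pass on a freshly built graph: the new gradient is ADDED to x.grad.
y = (x ** 2).sum()
y.backward()
after_two = x.grad.clone()  # now 2x + 2x = [4, 8, 12]

# Clear the accumulated gradient in place before an unrelated computation.
x.grad.zero_()

print(after_one, after_two, x.grad)
```

This accumulation is also why training loops clear gradients once per step (for example with an optimizer's zero_grad()).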

Note:

When calling y.backward(), if y is a scalar, no argument needs to be passed to backward(); otherwise, you must pass in a Tensor with the same shape as y. The reason is explained at the end of this article.
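A small sketch of this rule, assuming a recent PyTorch version: calling backward() with no argument on a vector output raises a RuntimeError, while passing a tensor of the same shape as y (here all ones, an arbitrary choice) works.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = x * 2  # y is a vector of shape [3], not a scalar

# backward() with no argument is only allowed for scalar outputs.
try:
    y.backward()
    raised = False
except RuntimeError as err:
    raised = True
    print("RuntimeError:", err)

# Passing a tensor with the same shape as y is accepted.
y = x * 2
y.backward(torch.ones_like(y))
print(x.grad)  # each element is dy_i/dx_i = 2
```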

If you don't want a tensor to be tracked, you can call .detach() to separate it from the computation history. Subsequent operations on the detached tensor are not tracked, so gradients cannot flow back through it. You can also wrap code that should not be tracked in with torch.no_grad():. This is very useful when evaluating a model, because during evaluation we do not need the gradients of the trainable parameters.

Function is another very important class. Tensor and Function are connected to each other and form an acyclic graph that encodes the complete history of the computation. Every tensor has a .grad_fn attribute that refers to the Function that created the tensor (except for tensors created by the user, whose grad_fn is None).
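A minimal sketch of .detach() and torch.no_grad(), assuming a recent PyTorch version; w is a hypothetical stand-in for a trainable parameter. The last step shows that a detached tensor is treated as a constant: only the tracked path contributes to w.grad.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)  # stands in for a trainable parameter

# Normal computation: the result is tracked and has a grad_fn.
y = w * 3
print(y.requires_grad, y.grad_fn)  # True and a backward node object

# detach(): same values, but cut off from the recorded history.
y_detached = y.detach()
print(y_detached.requires_grad, y_detached.grad_fn)  # False None

# torch.no_grad(): nothing computed inside the block is recorded.
with torch.no_grad():
    z = w * 3
print(z.requires_grad, z.grad_fn)  # False None

# Gradients flow only through tracked paths; y_detached acts as a constant,
# so d/dw sum(y_detached * w) = y_detached = [3, 6].
loss = (y_detached * w).sum()
loss.backward()
print(w.grad)
```

Without the detach() call, the gradient would also flow through y, and the result would be 6w = [6, 12] instead.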

Each tensor created by an operation has a grad_fn attribute that points to the Function that created it; printing it shows that object together with its memory address. Note that tensors created directly by the user have no such creator, so their grad_fn is None.
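To see the graph that grad_fn encodes, one can follow each node's next_functions links back toward the leaves. This is a sketch assuming a recent PyTorch version, reusing the computation from the example below; the walk helper is hypothetical, and exact node type names may differ between versions. Leaf tensors that require gradients show up as AccumulateGrad nodes.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)  # created by the user: a leaf
y = x + 2                                  # created by an operation
z = y * y * 3

print(x.grad_fn)  # None: user-created tensors have no creating Function
print(y.grad_fn)  # a backward node object, printed with its memory address

def walk(fn, depth=0, names=None):
    """Print the backward graph reachable from fn and return the node type names.

    Nodes shared by several paths are printed once per path.
    """
    if names is None:
        names = []
    if fn is None:  # inputs that need no gradient (e.g. constants) appear as None
        return names
    names.append(type(fn).__name__)
    print("  " * depth + type(fn).__name__)
    for next_fn, _ in fn.next_functions:
        walk(next_fn, depth + 1, names)
    return names

names = walk(z.grad_fn)
```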

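Here are some examples to illustrate these concepts.

Create a tensor x, set requires_grad=True, and run a few operations on it:

```python
import torch

# 2x2 tensor of ones; autograd will track the operations applied to it
x = torch.ones(2, 2, requires_grad=True)

z = (x + 2) * (x + 2) * 3
out = z.mean()  # reduce to a scalar, so backward() needs no argument
```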

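Now compute the gradient of out with respect to x, i.e. d(out)/dx:

```python
out.backward()  # back-propagate from the scalar out
print(x.grad)   # d(out)/dx, same shape as x
```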

Here out.backward() back-propagates the gradient from out, and x.grad holds the result. x.grad is a new tensor, distinct from x, that stores the gradient of a scalar (here out) with respect to x. The output is:

```
tensor([[4.5000, 4.5000],
        [4.5000, 4.5000]])
```
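The value 4.5 can be checked by hand. Writing x_i for the four entries of x (all equal to 1), out is the mean of the four entries of z, so each partial derivative picks up a factor of 1/4:

$$
\text{out} = \frac{1}{4}\sum_{i=1}^{4} z_i = \frac{1}{4}\sum_{i=1}^{4} 3\,(x_i+2)^2,
\qquad
\frac{\partial\,\text{out}}{\partial x_i} = \frac{1}{4}\cdot 6\,(x_i+2) = \frac{3}{2}\,(x_i+2)\Big|_{x_i=1} = \frac{3}{2}\cdot 3 = 4.5
$$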

Now for the question left open earlier: why does y.backward() need no argument when y is a scalar, but require a Tensor with the same shape as y otherwise? Simply put, this avoids differentiating a tensor with respect to a tensor by first turning the output into a scalar. For example, suppose an m x n matrix X is transformed into a p x q matrix Y, and Y is in turn transformed into an s x t matrix Z. Following the rules above, dZ/dY would be an s x t x p x q four-dimensional tensor, and dY/dX a p x q x m x n four-dimensional tensor. Now the trouble starts: how do we back-propagate? How do we multiply two four-dimensional tensors? Even if we could, how would we multiply a four-dimensional tensor by a three-dimensional one, or take derivatives of derivatives? This series of questions quickly becomes maddening.

To avoid this problem, autograd does not differentiate a tensor with respect to a tensor. It only differentiates a scalar with respect to a tensor, and the result is a tensor with the same shape as the independent variable. So when necessary, we turn a tensor into a scalar by taking a weighted sum of all its elements. For instance, suppose y is computed from the independent variable x, and w is a tensor with the same shape as y. Then y.backward(w) means: compute l = torch.sum(y * w), which is a scalar, and then take the derivative of l with respect to x. In other words, backward(w) computes a vector-Jacobian product: flattening x and y into vectors, x.grad receives J^T w, where J is the Jacobian of y with respect to x, and the full Jacobian never has to be built.
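The equivalence between y.backward(w) and differentiating l = torch.sum(y * w) can be checked numerically. This is a sketch assuming a recent PyTorch version; the elementwise function f, the 2 x 3 shape, and the random seed are arbitrary choices for illustration. For this f, the analytic gradient of l is w * (6x + 1).

```python
import torch

torch.manual_seed(0)
x_data = torch.randn(2, 3)
w = torch.randn(2, 3)  # weight tensor with the same shape as y

def f(x):
    # Hypothetical elementwise function; y = f(x) has the same shape as x.
    return 3 * x ** 2 + x

# Option 1: pass the weights directly to backward().
x1 = x_data.clone().requires_grad_(True)
y1 = f(x1)
y1.backward(w)

# Option 2: build the scalar l = sum(y * w) explicitly and differentiate it.
x2 = x_data.clone().requires_grad_(True)
l = torch.sum(f(x2) * w)
l.backward()

# Both should match each other and the analytic dl/dx = w * (6x + 1).
print(torch.allclose(x1.grad, x2.grad))
print(torch.allclose(x1.grad, w * (6 * x_data + 1)))
```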

Formulas are hard to type here, so the rest is to be continued...

边栏推荐

- 新版代挂网站PHP源码+去除授权/支持燃鹅代抽

- Vs custom template - take the custom class template as an example

- Paint basic graphics with custompaint

- Kirin Xin'an operating system derivative solution | storage multipath management system, effectively improving the reliability of data transmission

- Pdf document signature Guide

- How does win11 time display the day of the week? How does win11 display the day of the week today?

- Revit secondary development - modify wall thickness

- [JDBC Part 1] overview, get connection, CRUD

- MIT6.S081-Lab9 FS [2021Fall]

- Failed to initialize rosdep after installing ROS

猜你喜欢

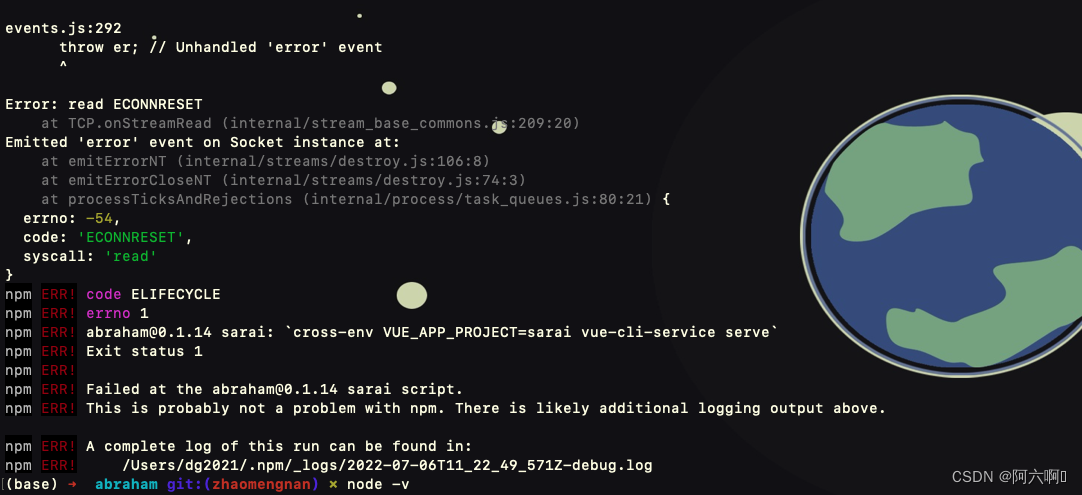

Node:504 error reporting

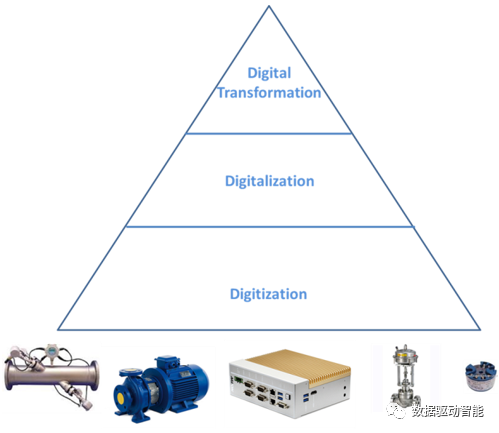

How to make agile digital transformation strategy for manufacturing enterprises

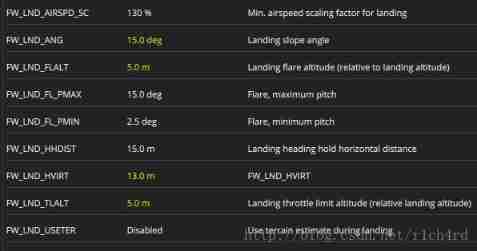

Px4 autonomous flight

反爬通杀神器

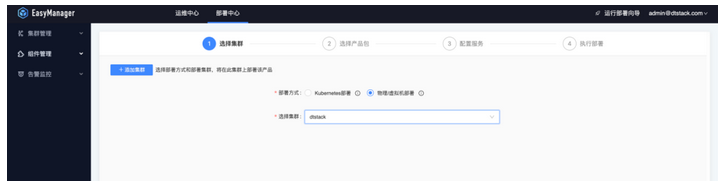

大数据开源项目,一站式全自动化全生命周期运维管家ChengYing(承影)走向何方?

Antd date component appears in English

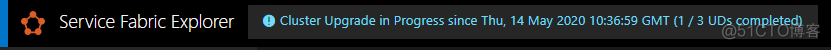

【Azure微服务 Service Fabric 】如何转移Service Fabric集群中的种子节点(Seed Node)

![[JDBC Part 1] overview, get connection, CRUD](/img/53/d79f29f102c81c9b0b7b439c78603b.png)

[JDBC Part 1] overview, get connection, CRUD

Embedded development: how to choose the right RTOS for the project?

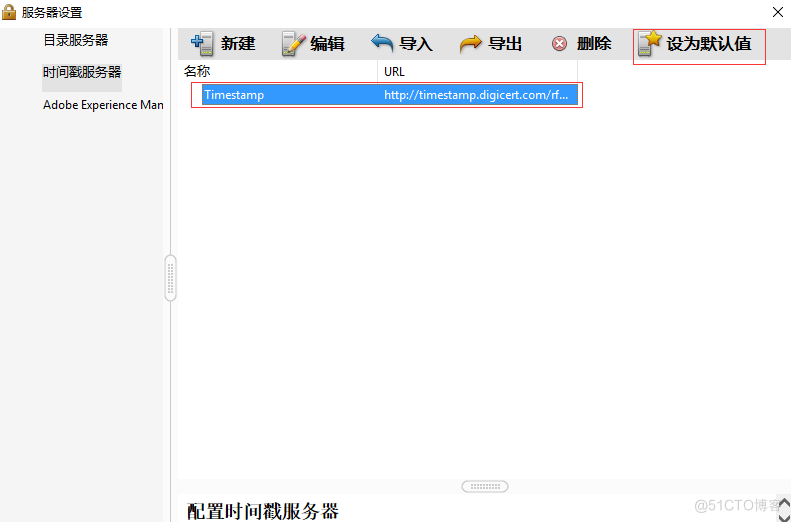

PDF文档签名指南

随机推荐

Two kinds of updates lost and Solutions

php 记录完整对接腾讯云直播以及im直播群聊 所遇到的坑

DNS series (I): why does the updated DNS record not take effect?

Ternary expressions, generative expressions, anonymous functions

Jerry's manual matching method [chapter]

OpenGL jobs - shaders

What does it mean to prefix a string with F?

Px4 autonomous flight

Unity development --- the mouse controls the camera to move, rotate and zoom

100million single men and women "online dating", supporting 13billion IPOs

Two methods of calling WCF service by C #

OpenGL homework - Hello, triangle

Use json Stringify() to realize deep copy, be careful, there may be a huge hole

Xcode modifies the default background image of launchscreen and still displays the original image

#DAYU200体验官#MPPT光伏发电项目 DAYU200、Hi3861、华为云IotDA

Jerry's configuration of TWS cross pairing [article]

Revit secondary development - shielding warning prompt window

null == undefined

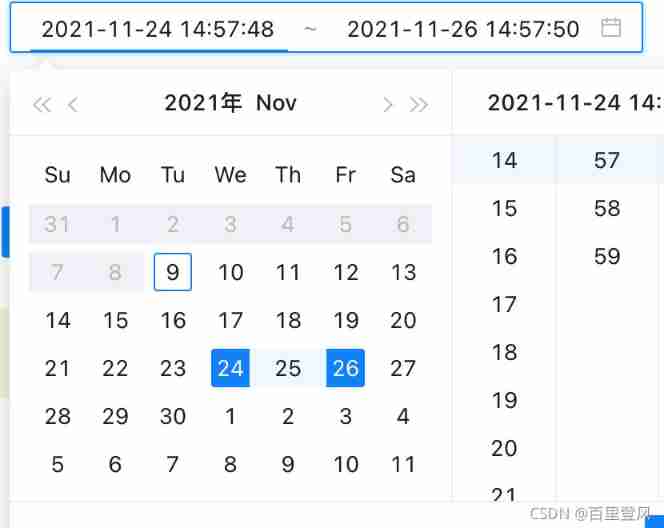

Get the week start time and week end time of the current date

MIT6.S081-Lab9 FS [2021Fall]