
Label smoothing

2022-07-24 04:23:00 【Billie studies hard】

Contents

1. What problem does label smoothing mainly solve?

2. How does label smoothing work?

3. Label smoothing formula

4. Code implementation

Label smoothing was introduced with GoogLeNet v3 (Inception v3).

For details on one-hot encoding, see: One-hot encoding

1. What problem does label smoothing mainly solve?

Conventional one-hot encoding brings a problem: it does not guarantee that the model generalizes well, and it makes the network overconfident, which can lead to overfitting.

Target probabilities of exactly 1 and 0 encourage the gap between the true class and the other classes to grow as large as possible. Because the gradient is bounded, the model has difficulty adapting, and it ends up trusting its predicted class too much. Label smoothing alleviates this problem.

2. How does label smoothing work?

Label smoothing attenuates the entry with probability 1 in the one-hot label to avoid overconfidence, and distributes the removed confidence equally across all classes (including the true one).

For example:

Take a 4-class task with label = (0, 1, 0, 0) and a smoothing factor of 0.001:

$$\text{smoothed label} = \left(\frac{0.001}{4},\ 1-0.001+\frac{0.001}{4},\ \frac{0.001}{4},\ \frac{0.001}{4}\right) = (0.00025,\ 0.99925,\ 0.00025,\ 0.00025)$$

The probabilities still add up to 1.
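
The example above can be reproduced in a few lines of Python. This is only a minimal sketch; the helper name `smooth_labels` is illustrative and does not come from the original post.

```python
def smooth_labels(one_hot, eps):
    """Return (1 - eps) * one_hot + eps / K for a K-class one-hot label."""
    num_classes = len(one_hot)
    uniform_share = eps / num_classes  # mass every class receives from the uniform part
    return [(1.0 - eps) * q + uniform_share for q in one_hot]


label = [0, 1, 0, 0]
smoothed = smooth_labels(label, eps=0.001)
print([round(v, 5) for v in smoothed])  # [0.00025, 0.99925, 0.00025, 0.00025]
print(round(sum(smoothed), 10))         # 1.0
```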

3. Label smoothing formula

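Cross entropy:

$$H(q, p) = -\sum_{k=1}^{K} q(k|x)\,\log(p_k)$$

where q is the label distribution, p_k = p(k|x) is the predicted probability of class k, k indexes the classes, and K is the number of classes. Here q is the one-hot encoded label.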

Label smoothing: smooth the label q into q', and train the model so that its output distribution p approximates q'.

$$q'(k|x) = (1-\varepsilon)\,\delta_{k,y} + \varepsilon\,u(k)$$

where u(k) is a probability distribution over the classes. Taking the uniform distribution u(k) = 1/K, we get

$$q'(k|x) = (1-\varepsilon)\,\delta_{k,y} + \frac{\varepsilon}{K}$$

where δ_{k,y} is the original distribution q (1 when k equals the true class y, 0 otherwise) and ε ∈ (0,1) is a hyperparameter.

As the formula above shows, the smoothed label takes probability mass ε from the uniform distribution and 1−ε from the original distribution. This is equivalent to adding noise to the original label, so that the model's prediction does not concentrate entirely on the highest-probability class and leaves some probability for the lower-probability classes.
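
To make this effect concrete, here is a small pure-Python sketch. It assumes a 4-class problem with ε = 0.1 and logits of the form (0, gap, 0, 0); these values and the helper name `cross_entropy` are chosen for illustration only. With a one-hot target the loss keeps shrinking as the gap grows, while with the smoothed target the loss is smallest at a finite gap.

```python
import math


def cross_entropy(target, logits):
    """Cross entropy between a target distribution and softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(q * (z - log_z) for q, z in zip(target, logits))


K, eps, y = 4, 0.1, 1
one_hot = [1.0 if k == y else 0.0 for k in range(K)]
smoothed = [(1 - eps) * q + eps / K for q in one_hot]

# Raise only the true-class logit and compare both losses.
gaps = [0.0, 2.0, 4.0, 8.0, 16.0]
for gap in gaps:
    logits = [gap if k == y else 0.0 for k in range(K)]
    print(gap,
          round(cross_entropy(one_hot, logits), 4),
          round(cross_entropy(smoothed, logits), 4))

# With smoothed targets the minimum sits where softmax(logits) equals q',
# i.e. at gap = log(q'_y / q'_other), not at infinity.
best_gap = math.log(smoothed[y] / smoothed[0])
print(round(best_gap, 3))  # 3.611
```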

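Therefore, the cross-entropy loss after label smoothing is:

$$H(q', p) = (1-\varepsilon)\left[-\sum_{k=1}^{K}\log(p_k)\,\delta_{k,y}\right] + \varepsilon\left[-\sum_{k=1}^{K}\log(p_k)\,\frac{1}{K}\right]$$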

How is this formula obtained?

Substitute q'(k|x) into the cross-entropy loss:

$$
\begin{aligned}
H(q', p) &= -\sum_{k=1}^{K}\log(p_k)\,q'(k|x) \\
&= -\sum_{k=1}^{K}\log(p_k)\left[(1-\varepsilon)\,\delta_{k,y}+\frac{\varepsilon}{K}\right] \\
&= -\sum_{k=1}^{K}\log(p_k)\,(1-\varepsilon)\,\delta_{k,y}+\left[-\sum_{k=1}^{K}\log(p_k)\,\frac{\varepsilon}{K}\right] \\
&= (1-\varepsilon)\left[-\sum_{k=1}^{K}\log(p_k)\,\delta_{k,y}\right]+\varepsilon\left[-\sum_{k=1}^{K}\log(p_k)\,\frac{1}{K}\right]
\end{aligned}
$$

This gives the label smoothing formula: a weighted sum of the usual cross entropy against the one-hot label and the cross entropy against the uniform distribution.
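
The decomposition can also be checked numerically. The sketch below uses only the standard library; the logits, the true class and ε are arbitrary illustrative values, and the helper names are not from the original post.

```python
import math


def log_softmax(logits):
    """Numerically stable log of softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def cross_entropy(target, log_p):
    """-sum_k target_k * log(p_k)."""
    return -sum(q * lp for q, lp in zip(target, log_p))


logits = [1.5, -0.3, 0.8, 2.1]  # arbitrary scores for K = 4 classes
y, eps = 2, 0.1
K = len(logits)

log_p = log_softmax(logits)
one_hot = [1.0 if k == y else 0.0 for k in range(K)]
uniform = [1.0 / K] * K
smoothed = [(1 - eps) * q + eps * u for q, u in zip(one_hot, uniform)]

# Left-hand side: cross entropy against the smoothed label q'.
direct = cross_entropy(smoothed, log_p)
# Right-hand side: (1 - eps) * H(q, p) + eps * H(u, p).
decomposed = (1 - eps) * cross_entropy(one_hot, log_p) + eps * cross_entropy(uniform, log_p)
print(abs(direct - decomposed) < 1e-12)  # True
```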

4. Code implementation

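    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class LabelSmoothingCrossEntropy(nn.Module):
        """Cross entropy with label smoothing: (1 - eps) * NLL + eps * uniform term."""

        def __init__(self, eps=0.1, reduction='mean', ignore_index=-100):
            super().__init__()
            self.eps = eps
            self.reduction = reduction
            self.ignore_index = ignore_index

        def forward(self, output, target):
            c = output.size()[-1]  # number of classes K
            log_pred = torch.log_softmax(output, dim=-1)
            # Uniform term: -sum_k log(p_k), scaled by eps / K in the return below.
            # Note: this term is computed over all rows and does not apply ignore_index.
            if self.reduction == 'sum':
                loss = -log_pred.sum()
            else:
                loss = -log_pred.sum(dim=-1)
                if self.reduction == 'mean':
                    loss = loss.mean()
            # One-hot term: standard negative log-likelihood of the true class.
            nll = F.nll_loss(log_pred, target, reduction=self.reduction,
                             ignore_index=self.ignore_index)
            return loss * self.eps / c + (1 - self.eps) * nll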
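
As a sanity check, PyTorch 1.10 and later also accept a `label_smoothing` argument in `torch.nn.functional.cross_entropy`. The sketch below compares it with a compact functional version of the same formula. It assumes mean reduction, no ignored targets and random made-up data; the function name `smoothed_cross_entropy` is illustrative.

```python
import torch
import torch.nn.functional as F


def smoothed_cross_entropy(logits, target, eps=0.1):
    """Mean label-smoothing loss: (1 - eps) * NLL + eps * (1/K) * sum_k(-log p_k)."""
    log_pred = torch.log_softmax(logits, dim=-1)
    uniform_term = -log_pred.mean(dim=-1)  # per-sample (1/K) * sum_k -log p_k
    nll_term = F.nll_loss(log_pred, target, reduction='none')
    return ((1 - eps) * nll_term + eps * uniform_term).mean()


torch.manual_seed(0)
logits = torch.randn(8, 5)          # 8 samples, 5 classes (made-up data)
target = torch.randint(0, 5, (8,))  # random class indices

manual = smoothed_cross_entropy(logits, target, eps=0.1)
builtin = F.cross_entropy(logits, target, label_smoothing=0.1)
print(manual.item(), builtin.item())
```

If the two printed values agree, the hand-written loss and the built-in option implement the same smoothing. When some targets use `ignore_index`, the two may differ, because the class above still counts ignored rows in its uniform term.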

边栏推荐

- Successfully solved: error: SRC refspec master doors not match any

- Exploration of new mode of code free production

- 致-.-- -..- -

- Alibaba Taobao Department interview question: how does redis realize inventory deduction and prevent oversold?

- Codeforces Round #807 (Div. 2) A - D

- [untitled]

- Shell syntax (1)

- Post it notes --46{hbuildx connect to night God simulator}

- 归并排序(Merge sort)

- What if Adobe pr2022 doesn't have open subtitles?

猜你喜欢

短视频本地生活版块,有哪些新的机会存在?

buu web

The second anniversary of open source, opengauss Developer Day 2022 full highlights review!

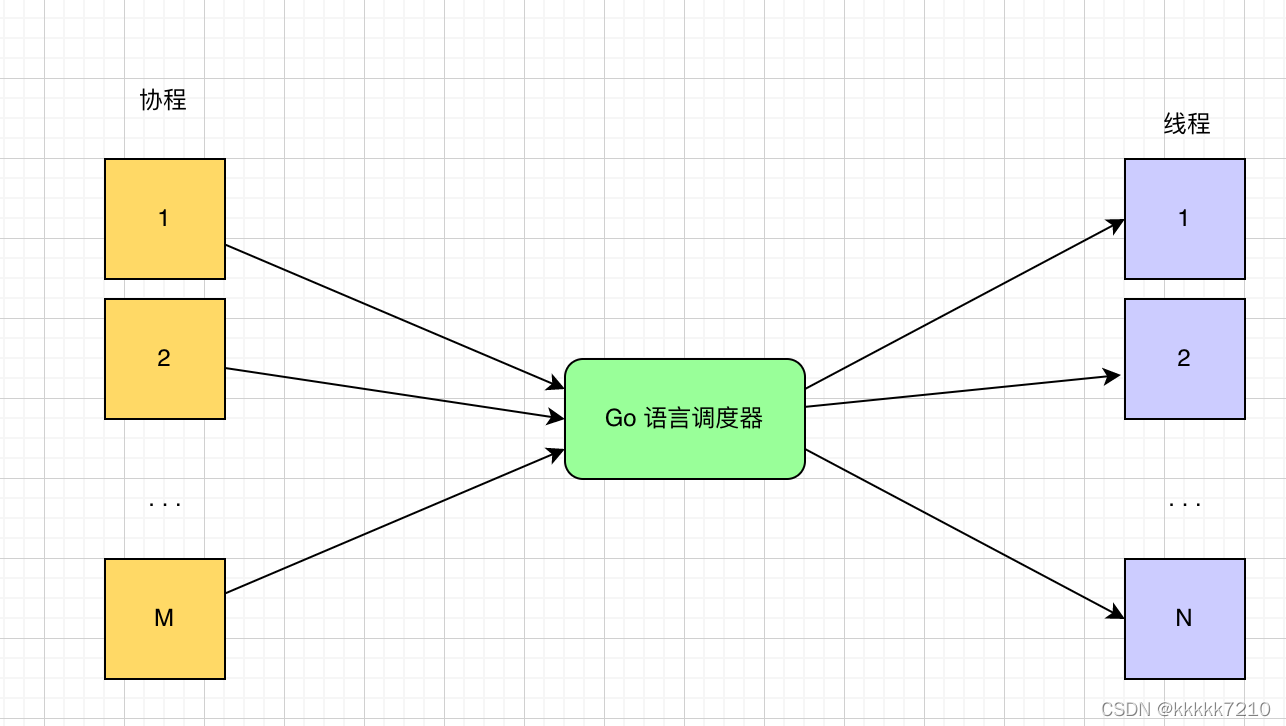

Go language series - synergy GMP introduction - with ByteDance interpolation

The pit trodden by real people tells you to avoid the 10 mistakes often made in automated testing

buu web

002_ Kubernetes installation configuration

Remember an online sql deadlock accident: how to avoid deadlock?

Could NOT find Doxygen (missing: DOXYGEN_EXECUTABLE)

Iqoo 10 series attacks originos original system to enhance mobile phone experience

随机推荐

LAN SDN technology hard core insider 10 cloud converged matchmaker evpn

How does the trend chart of spot silver change?

Mongo from start to installation and problems encountered

How did I get four offers in a week?

Upgrade POI TL version 1.12.0 and resolve the dependency conflict between the previous version of POI (4.1.2) and easyexcel

Graduation thesis on enterprise production line improvement [Flexsim simulation example]

Codeforces Round #807 (Div. 2) A - D

Ship test / IMO a.799 (19) incombustibility test of marine structural materials

CONDA common commands

postgresql源码学习(32)—— 检查点④-核心函数CreateCheckPoint

Combinatorial number (number of prime factors of factorials, calculation of combinatorial number)

Embedded system transplantation [6] - uboot source code structure

How to prevent SQL injection in PHP applications

Privacy protection federal learning framework supporting most irregular users

eCB接口,其实质也 MDSemodet

Live classroom system 04 create service module

What if Adobe pr2022 doesn't have open subtitles?

Vscode configuration user code snippet

Conversational technology related

Particle Designer: particle effect maker, which generates plist files and can be used normally in projects