当前位置:网站首页>Revisiting Self-Training for Few-Shot Learning of Language Model,EMNLP2021

Revisiting Self-Training for Few-Shot Learning of Language Model,EMNLP2021

2022-06-11 09:34:00 【Echo in May】

Method

This paper proposes a self supervised language model based framework for text learning with few samples SFLM. Given text samples , Learning methods by shielding the prompts of the language model , Generate weak enhancement and strong enhancement views for the same sample ,SFLM Generate a pseudo tag on the weakly enhanced version . then , When fine tuning with the enhanced version , The model predicts the same pseudo tag .

The overall model is as follows :

First , Given a labeled dataset X \mathcal{X} X, The number of each category is N N N, The unlabeled training data set is U \mathcal{U} U, The number of each class is μ N \mu N μN, μ \mu μ Is a greater than 1 The integer of , Used to ensure that the number of labeled samples is always less than that of unlabeled samples . As shown in the figure , The loss function of the whole model can be divided into three categories :

L S \mathcal{L}_S LS Indicates a supervised loss , The latter two represent the loss of self supervision . There are two kinds of self supervision , One is based on MLM To predict the sentence being mask Missing words , That is to say λ 2 L s s l \lambda_2\mathcal{L}_{ssl} λ2Lssl; The second is the pseudo tag generated by weak enhancement , Let the prediction results on the strongly enhanced version of the same sample be consistent with the pseudo tags . ad locum ,Prompt Learn how to predict labels , So let's talk about that Prompt Method .

Prompt-based supervised loss

Prompt learning (Prompt learning) Build a template with the predicted tag and the text body , This article USES “It is [Mask]” Type suffix template , That is to say :

So the model can predict [Mask], Get the word corresponding to the emotional tendency . This word is mapped M ′ \mathcal{M}' M′ Related to the corresponding classification , So by forecasting [Mask] Then you can get the specific categories . The formal description is :

after , The predicted words can be adjusted by cross entropy :

Self-training loss

For the same unlabeled sample u i u_i ui, Weak enhancement and strong enhancement are respectively expressed as α ( u i ) \alpha(u_i) α(ui) as well as A ( u i ) \mathcal{A}(u_i) A(ui). For strong enhancement , According to and Bert The same probability is 15% Go to masktoken. after , Both enhanced views go through a layer 0.1 Of dropout Reprocessing . The calculation of pseudo tags is the same as prompt Learn to predict specific words : And in order to distribute q i q_i qi Get the corresponding label , Need to carry out max:

And in order to distribute q i q_i qi Get the corresponding label , Need to carry out max:

after , The whole loss of self-monitoring can be expressed as :

among τ \tau τ It's a threshold , Only when the maximum probability is higher than this value can it be predicted as 1. And then the surface H H H It is indicated under the pseudo tag LM Predicted results .

Experiment

The method introduction is very simple , Then there are a lot of experiments , This paper chooses two different experiments of text classification and text pair matching , And set up N = 6 , μ = 4 N=6,\mu=4 N=6,μ=4. The overall results are as follows :

About parameters N , μ N,\mu N,μ The experiment of :

Comparison of different data augmentation strategies :

In another language model DistilRoBERTa Effect on :

Cross dataset Zero-shot Study :

边栏推荐

- 2161. divide the array according to the given number

- [FAQ for novices on the road] about data visualization

- 企业需要考虑的远程办公相关问题

- openstack详解(二十二)——Neutron插件配置

- 基于SIC32F911RET6设计的腕式血压计方案

- 1400. 构造 K 个回文字符串

- CUMT learning diary - theoretical analysis of uCOSII - Textbook of Renzhe Edition

- [TiO websocket] IV. the TiO websocket server implements the custom cluster mode

- Some learning records I=

- 移动端页面使用rem来做适配

猜你喜欢

PD chip ga670-10 for OTG while charging

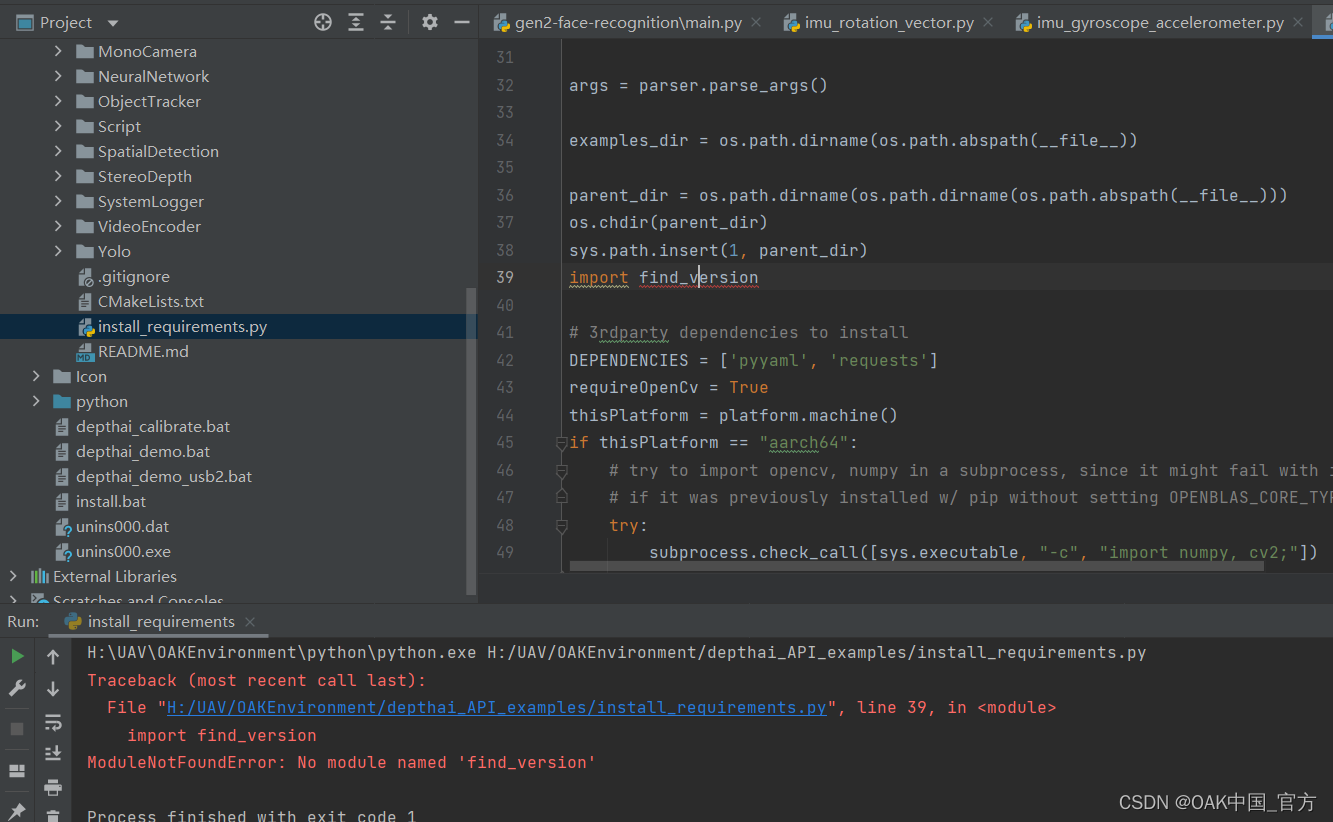

Modularnotfounderror: no module named 'find_ version’

Shandong University project training (IV) -- wechat applet scans web QR code to realize web login

Pytorch installation for getting started with deep learning

DOS command virtual environment

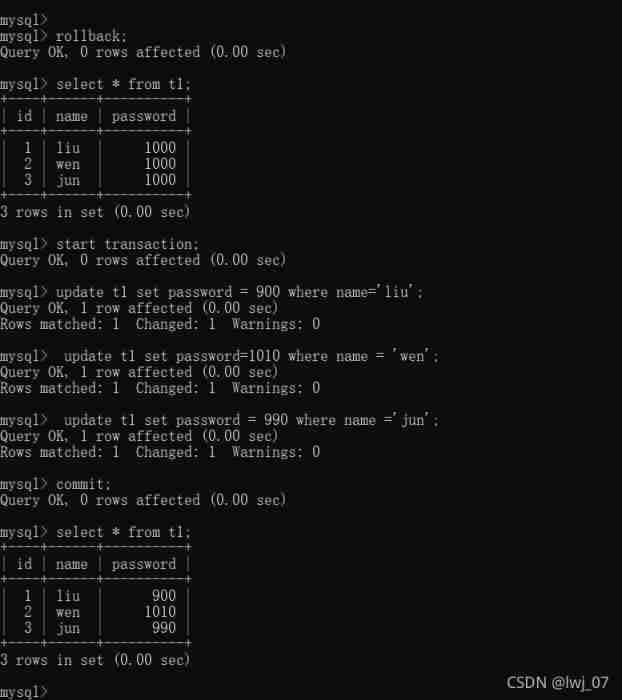

affair

Identifier keyword literal data type base conversion character encoding variable data type explanation operator

CUMT learning diary - theoretical analysis of uCOSII - Textbook of Renzhe Edition

OpenCV CEO教你用OAK(五):基于OAK-D和DepthAI的反欺骗人脸识别系统

Type-C docking station adaptive power supply patent protection case

随机推荐

【智能开发】血压计方案设计与硬件开发

【方案开发】血压计方案压力传感器SIC160

Résumé de la méthode d'examen des mathématiques

市场上的服装ERP体系到底是哪些类型?

Opencv CEO teaches you to use oak (IV): create complex pipelines

山东大学项目实训(四)—— 微信小程序扫描web端二维码实现web端登录

Identifier keyword literal data type base conversion character encoding variable data type explanation operator

【软件】ERP体系价值最大化的十点技巧

1493. the longest subarray with all 1 after deleting an element

关于原型及原型链

Error [error] input tesnor exceeded available data range [neuralnetwork (3)] [error] input tensor '0' (0)

【软件】大企业ERP选型的方法

Output image is bigger (1228800b) than maximum frame size specified in properties (1048576b)

MySQL:Got a packet bigger than ‘max_ allowed_ packet‘ bytes

Remote office related issues to be considered by enterprises

Machine learning notes - the story of master kaggle Janio Martinez Bachmann

Opencv CEO teaches you to use oak (V): anti deception face recognition system based on oak-d and depthai

MySQL啟動報錯“Bind on TCP/IP port: Address already in use”

Blinn Phong reflection model

Design of wrist sphygmomanometer based on sic32f911ret6