
Machine learning notes - recurrent neural network cheatsheet

2022-06-12 08:59:00 【Sit and watch the clouds rise】

One 、RNN summary

1、 Architecture of a traditional RNN

Recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while having hidden states.

For each timestep $t$, the activation $a^{<t>}$ and the output $y^{<t>}$ are expressed as follows:

$$a^{<t>} = g_1\left(W_{aa}a^{<t-1>} + W_{ax}x^{<t>} + b_a\right) \quad \textrm{and} \quad y^{<t>} = g_2\left(W_{ya}a^{<t>} + b_y\right)$$

where $W_{ax}$, $W_{aa}$, $W_{ya}$, $b_a$, $b_y$ are coefficients shared across time and $g_1$, $g_2$ are activation functions.
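A minimal sketch of the recurrence $a^{<t>} = g_1(W_{aa}a^{<t-1>} + W_{ax}x^{<t>} + b_a)$, $y^{<t>} = g_2(W_{ya}a^{<t>} + b_y)$ in plain Python, assuming $g_1 = \tanh$ and $g_2$ = identity (the text leaves both open) and storing matrices as lists of rows. The function name `rnn_forward` is hypothetical; the point is that the same weights are reused at every timestep.

```python
import math


def matvec(M, v):
    """Multiply a matrix M (list of rows) by a vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def vec_add(*vectors):
    """Element-wise sum of several vectors of the same length."""
    return [sum(parts) for parts in zip(*vectors)]


def rnn_forward(xs, W_aa, W_ax, W_ya, b_a, b_y, a0):
    """Run a vanilla RNN over the sequence xs, reusing the same weights at every step."""
    a = a0
    activations, outputs = [], []
    for x in xs:
        # a<t> = g1(W_aa a<t-1> + W_ax x<t> + b_a), with g1 = tanh (assumption)
        a = [math.tanh(z) for z in vec_add(matvec(W_aa, a), matvec(W_ax, x), b_a)]
        # y<t> = g2(W_ya a<t> + b_y), with g2 = identity (assumption)
        y = vec_add(matvec(W_ya, a), b_y)
        activations.append(a)
        outputs.append(y)
    return activations, outputs


# Hidden size 2, input size 1, output size 1; the sequence has 3 timesteps.
acts, outs = rnn_forward(
    xs=[[1.0], [0.0], [2.0]],
    W_aa=[[0.5, 0.0], [0.0, 0.5]],
    W_ax=[[1.0], [-1.0]],
    W_ya=[[1.0, 1.0]],
    b_a=[0.0, 0.0],
    b_y=[0.0],
    a0=[0.0, 0.0],
)
```

The model size is fixed by the weight shapes, so the same function handles a sequence of any length.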

The advantages and drawbacks of a typical RNN architecture are summarized below:

Advantages: can process inputs of any length; model size does not grow with the input size; the computation takes historical information into account; weights are shared across time.

Drawbacks: computation is slow; information from many steps back is hard to access; future inputs cannot be taken into account for the current state.

2、 Applications of RNNs

RNN models are mainly used in natural language processing and speech recognition. The table below summarizes the different applications:

| Type of RNN | Input/output lengths | Example |
|---|---|---|
| One-to-one | $T_x = T_y = 1$ | Traditional neural network |
| One-to-many | $T_x = 1$, $T_y > 1$ | Music generation |
| Many-to-one | $T_x > 1$, $T_y = 1$ | Sentiment classification |
| Many-to-many | $T_x = T_y$ | Named entity recognition |
| Many-to-many | $T_x \neq T_y$ | Machine translation |

3、 Loss function

For a recurrent neural network, the loss function $\mathcal{L}$ over all time steps is defined from the loss at each time step as follows:

$$\mathcal{L}(\hat{y}, y) = \sum_{t=1}^{T_y} \mathcal{L}\left(\hat{y}^{<t>}, y^{<t>}\right)$$
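As a sketch, assuming each per-step loss is the cross-entropy between a predicted distribution $\hat{y}^{<t>}$ and a one-hot target $y^{<t>}$ (the text does not fix the per-step loss), the total loss is a plain sum over timesteps:

```python
import math


def step_loss(y_hat, y):
    """Cross-entropy between a predicted distribution y_hat and a one-hot target y."""
    return -sum(t * math.log(p) for p, t in zip(y_hat, y) if t > 0)


def sequence_loss(y_hats, ys):
    """Total loss: sum of the per-timestep losses for t = 1..T_y."""
    return sum(step_loss(y_hat, y) for y_hat, y in zip(y_hats, ys))


# Two timesteps: the correct class gets probability 0.5, then 0.75.
total = sequence_loss([[0.5, 0.5], [0.25, 0.75]], [[1, 0], [0, 1]])
```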

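4、 Backpropagation through time

Backpropagation is carried out at each point in time. At timestep $T$, the derivative of the loss $\mathcal{L}$ with respect to the weight matrix $W$ is expressed as follows:

$$\frac{\partial \mathcal{L}^{(T)}}{\partial W} = \sum_{t=1}^{T} \left.\frac{\partial \mathcal{L}^{(T)}}{\partial W}\right|_{(t)}$$

where each term of the sum is the contribution of the use of $W$ at timestep $t$.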
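The sum over timesteps can be checked on a toy case. The sketch below assumes a scalar linear RNN $a^{<t>} = w\,a^{<t-1>} + x^{<t>}$ with $a^{<0>} = 0$ and loss $\mathcal{L}^{(T)} = a^{<T>}$ (both hypothetical simplifications). Each loop iteration adds the contribution of the use of $w$ at step $t$, and the result is compared with a finite-difference estimate.

```python
def forward(w, xs):
    """Scalar linear RNN a<t> = w * a<t-1> + x<t>, a<0> = 0; returns [a<0>, ..., a<T>]."""
    a = [0.0]
    for x in xs:
        a.append(w * a[-1] + x)
    return a


def bptt_grad(w, xs):
    """dL/dw for L = a<T>, accumulated as a sum of per-timestep contributions."""
    a = forward(w, xs)
    grad = 0.0
    upstream = 1.0  # dL/da<t>, starting at t = T
    for t in range(len(xs), 0, -1):
        grad += upstream * a[t - 1]  # contribution of w at step t: dL/da<t> * a<t-1>
        upstream *= w                # dL/da<t-1> = dL/da<t> * w
    return grad


w, xs, h = 0.8, [1.0, -0.5, 2.0, 0.3], 1e-6
numeric = (forward(w + h, xs)[-1] - forward(w - h, xs)[-1]) / (2 * h)
analytic = bptt_grad(w, xs)
```

Note that `upstream` picks up one factor of $w$ per step it flows back, which is where the vanishing and exploding behavior comes from.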

Two 、 Dealing with long-term dependencies

1、 Common activation functions

The most commonly used activation functions in RNN modules are:

Sigmoid: $g(z) = \dfrac{1}{1+e^{-z}}$

Tanh: $g(z) = \dfrac{e^{z}-e^{-z}}{e^{z}+e^{-z}}$

ReLU: $g(z) = \max(0, z)$

2、 Vanishing / exploding gradient

The vanishing and exploding gradient phenomena are often encountered with RNNs. They occur because long-term dependencies are hard to capture: the gradient is a product of many factors, so it can decrease or increase exponentially with the number of layers.
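To see the exponential behavior, consider a hypothetical scalar RNN $a^{<t>} = \tanh(w\,a^{<t-1>} + u\,x^{<t>})$: flowing back one step multiplies the gradient by $w\,(1 - \tanh^2(z^{<t>}))$, where $z^{<t>}$ is the pre-activation. The sketch multiplies these per-step factors over many steps.

```python
import math


def backprop_factor(w, pre_activations):
    """Product of the per-step derivatives d a<t> / d a<t-1> = w * (1 - tanh(z<t>)^2)."""
    factor = 1.0
    for z in pre_activations:
        factor *= w * (1.0 - math.tanh(z) ** 2)
    return factor


# With z = 0 (tanh' = 1) the factor is w ** T: it vanishes for |w| < 1 and explodes for |w| > 1.
for w in (0.5, 1.0, 2.0):
    print(w, backprop_factor(w, [0.0] * 50))

# A saturated tanh (large |z|) shrinks the gradient even when w = 1.
print(backprop_factor(1.0, [3.0] * 10))
```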

3、Gradient clipping

It is a technique used to cope with the exploding gradient problem sometimes encountered when performing backpropagation. By capping the maximum value of the gradient, this phenomenon is controlled in practice.
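Two common ways of capping the gradient are sketched below: clipping each component to a maximum absolute value (the variant described here) and, as an alternative not mentioned in the text, rescaling the whole gradient vector when its L2 norm exceeds a threshold. The thresholds are illustrative.

```python
import math


def clip_by_value(grads, max_value):
    """Cap each gradient component to the range [-max_value, max_value]."""
    return [max(-max_value, min(max_value, g)) for g in grads]


def clip_by_norm(grads, max_norm):
    """Rescale the gradient vector so that its L2 norm is at most max_norm (direction kept)."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * max_norm / norm for g in grads]


print(clip_by_value([10.0, -0.5, -7.0], 1.0))
print(clip_by_norm([3.0, 4.0], 1.0))
```

Clipping by value can change the gradient's direction, whereas clipping by norm only shrinks its length.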

4、Types of gates

To remedy the vanishing gradient problem, specific gates are used in some types of RNNs, each usually with a well-defined purpose. They are usually noted $\Gamma$ and are equal to:

$$\Gamma = \sigma\left(Wx^{<t>} + Ua^{<t-1>} + b\right)$$

where $W$, $U$, $b$ are coefficients specific to the gate and $\sigma$ is the sigmoid function. The main gates are summarized below:

| Type of gate | Role | Used in |
|---|---|---|
| Update gate $\Gamma_u$ | How much should the past matter now? | GRU, LSTM |
| Relevance gate $\Gamma_r$ | Drop previous information? | GRU, LSTM |
| Forget gate $\Gamma_f$ | Erase a cell or not? | LSTM |
| Output gate $\Gamma_o$ | How much of a cell to reveal? | LSTM |

5、GRU/LSTM

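Gated Recurrent Units (GRU) and Long Short-Term Memory units (LSTM) deal with the vanishing gradient problem encountered by traditional RNNs, LSTM being a generalization of GRU. The GRU is characterized by the following equations:

$$\tilde{c}^{<t>} = \tanh\left(W_c\left[\Gamma_r \star a^{<t-1>}, x^{<t>}\right] + b_c\right)$$

$$c^{<t>} = \Gamma_u \star \tilde{c}^{<t>} + (1 - \Gamma_u) \star c^{<t-1>}, \qquad a^{<t>} = c^{<t>}$$

The LSTM generalizes this by replacing $(1 - \Gamma_u)$ with a separate forget gate $\Gamma_f$ and by using the output gate $\Gamma_o$ to control how much of the cell $c^{<t>}$ is revealed in $a^{<t>}$.

Note: the sign $\star$ denotes element-wise multiplication between two vectors.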
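A minimal sketch of one GRU step, assuming the standard GRU formulation: gates $\Gamma_u, \Gamma_r = \sigma(Wx^{<t>} + Ua^{<t-1>} + b)$, candidate $\tilde{c}^{<t>} = \tanh(W_c[\Gamma_r \star a^{<t-1>}, x^{<t>}] + b_c)$, update $c^{<t>} = \Gamma_u \star \tilde{c}^{<t>} + (1 - \Gamma_u) \star c^{<t-1>}$ and $a^{<t>} = c^{<t>}$. The parameter keys such as `W_u` or `b_c` are hypothetical names, and $[\cdot, \cdot]$ is vector concatenation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    """Multiply a matrix M (list of rows) by a vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def gate(W, U, b, x, a_prev):
    """Gamma = sigmoid(W x<t> + U a<t-1> + b), element-wise."""
    return [sigmoid(p + q + r) for p, q, r in zip(matvec(W, x), matvec(U, a_prev), b)]


def gru_step(x, c_prev, params):
    """One GRU step; `params` uses hypothetical keys W_u, U_u, b_u, W_r, U_r, b_r, W_c, b_c."""
    a_prev = c_prev  # in a GRU, a<t-1> = c<t-1>
    g_u = gate(params["W_u"], params["U_u"], params["b_u"], x, a_prev)  # update gate
    g_r = gate(params["W_r"], params["U_r"], params["b_r"], x, a_prev)  # relevance gate
    # Candidate memory: tanh(W_c [Gamma_r * a<t-1>, x<t>] + b_c)
    concat = [r * a for r, a in zip(g_r, a_prev)] + list(x)
    c_tilde = [math.tanh(z + b) for z, b in zip(matvec(params["W_c"], concat), params["b_c"])]
    # c<t> = Gamma_u * c~<t> + (1 - Gamma_u) * c<t-1>, element-wise
    c = [u * ct + (1.0 - u) * cp for u, ct, cp in zip(g_u, c_tilde, c_prev)]
    return c  # a<t> = c<t>


# Hidden size 1, input size 1. A very negative update-gate bias keeps the old memory.
params = {
    "W_u": [[0.0]], "U_u": [[0.0]], "b_u": [-50.0],
    "W_r": [[0.0]], "U_r": [[0.0]], "b_r": [0.0],
    "W_c": [[1.0, 1.0]], "b_c": [0.0],
}
kept = gru_step([1.0], [0.3], params)
overwritten = gru_step([1.0], [0.3], dict(params, b_u=[50.0]))
```

When $\Gamma_u \approx 0$ the memory passes through unchanged, which is what lets gradients survive over long spans.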

6、 Variants of RNNs

Other commonly used RNN architectures include bidirectional RNNs (BRNN) and deep RNNs (DRNN).

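Three 、 Learning word representations

1、 Motivation and notation

Representation techniques: the two main ways of representing words are the 1-hot representation, noted $o_w$, a naive approach that carries no similarity information, and the word embedding, noted $e_w$, which takes word similarity into account.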

2、 Embedding matrix

For a given word $w$, the embedding matrix $E$ maps its 1-hot representation $o_w$ to its embedding $e_w$ as follows:

$$e_w = Eo_w$$

Note: the embedding matrix can be learned using target/context likelihood models.
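The product $e_w = Eo_w$ simply selects one column of $E$, which is why implementations typically use a lookup instead of a matrix product. A small sketch with a made-up $2 \times 3$ matrix (embedding dimension 2, vocabulary of 3 words); the helper names are hypothetical.

```python
def one_hot(index, vocab_size):
    """1-hot representation o_w of the word at position `index` in the vocabulary."""
    return [1.0 if i == index else 0.0 for i in range(vocab_size)]


def embed(E, o_w):
    """e_w = E o_w, where E has shape (embedding_dim, vocab_size) as a list of rows."""
    return [sum(e_ij * o_j for e_ij, o_j in zip(row, o_w)) for row in E]


def lookup(E, index):
    """Equivalent shortcut: the product just selects column `index` of E."""
    return [row[index] for row in E]


E = [[0.1, 0.2, 0.3],
     [1.0, 2.0, 3.0]]
print(embed(E, one_hot(1, 3)), lookup(E, 1))
```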

Four 、Word embeddings

1、Word2vec

Word2vec is a framework aimed at learning word embeddings by estimating the likelihood that a given word is surrounded by other words. Popular models include skip-gram, negative sampling and CBOW.

2、Skip-gram

The skip-gram word2vec model is a supervised learning task that learns word embeddings by assessing the likelihood of any given target word $t$ occurring with a context word $c$. By noting $\theta_t$ a parameter associated with $t$, the probability $P(t|c)$ is given by:

$$P(t|c) = \frac{\exp\left(\theta_t^T e_c\right)}{\sum_{j=1}^{|V|} \exp\left(\theta_j^T e_c\right)}$$

Note: summing over the whole vocabulary in the denominator of the softmax makes this model computationally expensive. CBOW is another word2vec model, which uses the surrounding words to predict a given word.
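A sketch of $P(t|c)$ for a toy vocabulary, assuming `theta` holds one parameter vector $\theta_j$ per vocabulary word (a hypothetical layout). The list comprehension over `theta` is the sum over $|V|$ words that makes the model expensive; subtracting the maximum score is a standard numerical-stability trick that does not change the result.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def skipgram_prob(t, e_c, theta):
    """P(t | c) = exp(theta_t . e_c) / sum_j exp(theta_j . e_c) over the whole vocabulary."""
    scores = [dot(theta_j, e_c) for theta_j in theta]  # one score per vocabulary word
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    return exps[t] / sum(exps)


theta = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # |V| = 3 made-up parameter vectors
e_c = [math.log(2.0), 0.0]                     # made-up context embedding
print([skipgram_prob(t, e_c, theta) for t in range(3)])
```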

3、Negative sampling

It is a set of binary classifiers using logistic regression that assess how likely a given context word and a given target word are to appear together, the models being trained on sets of $k$ negative examples and 1 positive example. Given a context word $c$ and a target word $t$, the prediction is expressed by:

$$P(y=1|c,t) = \sigma\left(\theta_t^T e_c\right)$$

Note: this method is less computationally expensive than the skip-gram model.
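A sketch of the training loss for one context word: one positive pair (label 1) plus $k$ sampled negative pairs (label 0), each scored with $\sigma(\theta_t^T e_c)$. Only $k + 1$ dot products are needed instead of $|V|$. Negatives are drawn uniformly here for simplicity; the original word2vec paper samples them from a unigram distribution raised to the 3/4 power. The function name is hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def negative_sampling_loss(e_c, theta, target, k, rng):
    """Logistic loss on 1 positive pair (c, target) and k uniformly sampled negative pairs."""
    loss = -math.log(sigmoid(dot(theta[target], e_c)))  # positive example, y = 1
    candidates = [j for j in range(len(theta)) if j != target]
    for j in rng.sample(candidates, k):
        loss -= math.log(1.0 - sigmoid(dot(theta[j], e_c)))  # negative example, y = 0
    return loss


# With all-zero parameters every prediction is 0.5, so the loss is (k + 1) * log 2.
theta = [[0.0, 0.0] for _ in range(5)]
loss = negative_sampling_loss([1.0, 2.0], theta, target=0, k=3, rng=random.Random(0))
```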

4、GloVe

The GloVe model, short for global vectors for word representation, is a word embedding technique that uses a co-occurrence matrix $X$, where each $X_{i,j}$ denotes the number of times that a target $i$ occurred with a context $j$. Its cost function $J$ is as follows:

$$J(\theta) = \frac{1}{2}\sum_{i,j=1}^{|V|} f(X_{ij})\left(\theta_i^T e_j + b_i + b_j' - \log(X_{ij})\right)^2$$

where $f$ is a weighting function such that $X_{i,j} = 0 \Longrightarrow f(X_{i,j}) = 0$.

Given the symmetric roles that $e$ and $\theta$ play in this model, the final word embedding $e_w^{(\textrm{final})}$ is given by:

$$e_w^{(\textrm{final})} = \frac{e_w + \theta_w}{2}$$
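A sketch of the GloVe cost and final embedding. The weighting $f(x) = (x / x_{\max})^{3/4}$ for $x < x_{\max}$ and $1$ otherwise, with $x_{\max} = 100$, follows the original GloVe paper; any $f$ with $f(0) = 0$ fits the definition. Skipping zero entries both applies $f(0) = 0$ and avoids $\log 0$. All parameter values below are made up.

```python
import math


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f with f(0) = 0, capped at 1 for x >= x_max."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_cost(X, theta, e, b, b_prime):
    """J = 1/2 * sum_{i,j} f(X_ij) * (theta_i . e_j + b_i + b'_j - log X_ij)^2."""
    J = 0.0
    for i, row in enumerate(X):
        for j, x_ij in enumerate(row):
            if x_ij == 0:
                continue  # f(0) = 0: the term vanishes (and log(0) is avoided)
            pred = sum(p * q for p, q in zip(theta[i], e[j])) + b[i] + b_prime[j]
            J += 0.5 * glove_weight(x_ij) * (pred - math.log(x_ij)) ** 2
    return J


def final_embedding(e_w, theta_w):
    """e_w^(final) = (e_w + theta_w) / 2."""
    return [(p + q) / 2 for p, q in zip(e_w, theta_w)]


# Parameters chosen so that every prediction equals log(100): the cost is (numerically) zero.
X = [[0, 100], [100, 0]]
theta, e = [[1.0], [1.0]], [[1.0], [1.0]]
b, b_prime = [0.0, 0.0], [math.log(100) - 1.0, math.log(100) - 1.0]
```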

5、Comparing words

(1)Cosine similarity

The cosine similarity between words $w_1$ and $w_2$ is expressed as follows:

$$\textrm{similarity} = \frac{w_1 \cdot w_2}{\|w_1\|\,\|w_2\|} = \cos(\theta)$$

Note: $\theta$ is the angle between words $w_1$ and $w_2$.
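A direct sketch of the formula on word vectors stored as plain lists (made-up 2D vectors for illustration):

```python
import math


def cosine_similarity(w1, w2):
    """(w1 . w2) / (||w1|| * ||w2||), i.e. cos of the angle between the two vectors."""
    dot = sum(a * b for a, b in zip(w1, w2))
    norm1 = math.sqrt(sum(a * a for a in w1))
    norm2 = math.sqrt(sum(b * b for b in w2))
    return dot / (norm1 * norm2)


print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))   # orthogonal vectors
print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))   # same direction
```

The result ranges from -1 (opposite directions) to 1 (same direction) and ignores vector length.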

(2)t-SNE

t-SNE (t-distributed Stochastic Neighbor Embedding) is a technique aimed at reducing high-dimensional embeddings into a lower-dimensional space. In practice, it is commonly used to visualize word vectors in 2D space.

Five 、 Language model

A language model aims at estimating the probability $P(y)$ of a sentence $y$.

1、n-gram model

This model is a naive approach that quantifies the probability that an expression appears in a corpus by counting its number of occurrences in the training data.
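A sketch of the counting idea for $n = 2$ (a bigram model): $P(w_2 | w_1)$ is estimated as the count of the pair $w_1 w_2$ divided by the count of $w_1$ followed by any word. No smoothing or sentence-boundary tokens are used, and the corpus is made up.

```python
from collections import Counter


def bigram_model(corpus):
    """Return P(w2 | w1) = count(w1 w2) / count(w1 followed by any word), estimated by counting."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in corpus:
        unigrams.update(sentence[:-1])                    # words that have a successor
        bigrams.update(zip(sentence[:-1], sentence[1:]))  # adjacent word pairs

    def prob(w1, w2):
        if unigrams[w1] == 0:
            return 0.0  # w1 never seen with a successor: no estimate without smoothing
        return bigrams[(w1, w2)] / unigrams[w1]

    return prob


corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
p = bigram_model(corpus)
print(p("the", "cat"), p("cat", "sat"))
```

Any pair never seen in training gets probability 0, which is the main weakness of this naive approach.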

2、Perplexity

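Language models are commonly assessed using the perplexity metric, also known as PP, which can be interpreted as the inverse probability of the dataset normalized by the number of words $T$. The lower the perplexity, the better. It is defined as follows:

$$\textrm{PP} = \prod_{t=1}^{T}\left(\frac{1}{\sum_{j=1}^{|V|} y_j^{(t)} \cdot \hat{y}_j^{(t)}}\right)^{\frac{1}{T}}$$

Note: perplexity also appears in t-SNE, where it is a hyperparameter.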
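A sketch of the perplexity formula, assuming one-hot targets $y^{(t)}$ so that the inner sum is the probability the model gave to the correct word. The product is computed in log space to avoid underflow on long texts (an implementation choice, not part of the definition).

```python
import math


def perplexity(y_true, y_pred):
    """PP = prod_t (1 / sum_j y_j^(t) * y_hat_j^(t)) ** (1 / T), computed in log space."""
    T = len(y_true)
    log_sum = 0.0
    for y, y_hat in zip(y_true, y_pred):
        p_correct = sum(a * b for a, b in zip(y, y_hat))  # probability of the correct word
        log_sum -= math.log(p_correct)
    return math.exp(log_sum / T)


# A model that is uniform over 4 words has perplexity 4.
print(perplexity([[1, 0, 0, 0], [0, 1, 0, 0]], [[0.25] * 4, [0.25] * 4]))
```

A perplexity of $k$ can be read as the model being, on average, as uncertain as a uniform choice among $k$ words.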

Six 、 Machine translation

A machine translation model is similar to a language model, except that an encoder network is placed before it. For this reason, it is sometimes referred to as a conditional language model.

The goal is to find a sentence $y$ such that:

$$y = \underset{y^{<1>}, ..., y^{<T_y>}}{\textrm{arg max}}\ P\left(y^{<1>}, ..., y^{<T_y>}|x\right)$$

1、Beam search

It is a heuristic search algorithm used in machine translation and speech recognition to find the likeliest sentence $y$ given an input $x$.

Step 1: Find the top $B$ likely words $y^{<1>}$.

Step 2: Compute the conditional probabilities $y^{<k>}|x, y^{<1>}, ..., y^{<k-1>}$.

Step 3: Keep the top $B$ combinations $x, y^{<1>}, ..., y^{<k>}$, then repeat Steps 2 and 3 for the next word.

Note: if the beam width is set to 1, this is equivalent to a naive greedy search.
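A sketch of beam search over a toy model. The decoder is replaced by a hypothetical function `next_probs(prefix)` returning $P(\textrm{token} | x, \textrm{prefix})$ as a dictionary, and sentences end with an assumed `</s>` token. Scores are sums of log-probabilities; finished sentences leave the beam, which then shrinks (one common simplification).

```python
import math


def beam_search(next_probs, beam_width, max_len, end="</s>"):
    """Return the (sentence, log-probability) pair found by beam search."""
    beams = [((), 0.0)]  # (prefix y<1..k>, log P(prefix | x))
    finished = []
    for _ in range(max_len):
        # Step 2: extend each kept prefix with every possible next word
        candidates = [
            (prefix + (token,), score + math.log(p))
            for prefix, score in beams
            for token, p in next_probs(prefix).items()
        ]
        # Steps 1 and 3: keep the top-B combinations (the sort is stable for ties)
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_width]:
            if prefix[-1] == end:
                finished.append((prefix, score))
            else:
                beams.append((prefix, score))
        if not beams:
            break
    finished.extend(beams)  # sentences cut off at max_len
    return max(finished, key=lambda c: c[1])


def toy_next_probs(prefix):
    """Hypothetical conditional distribution on which greedy decoding is suboptimal."""
    table = {
        (): {"a": 0.6, "b": 0.4},
        ("a",): {"c": 0.5, "d": 0.5},
        ("b",): {"e": 0.9, "f": 0.1},
    }
    return table.get(prefix, {"</s>": 1.0})


greedy = beam_search(toy_next_probs, beam_width=1, max_len=5)
beam = beam_search(toy_next_probs, beam_width=2, max_len=5)
```

With $B = 1$ the search commits to "a" and ends with probability 0.3, while $B = 2$ keeps "b" alive and finds "b e" with probability $0.4 \times 0.9 = 0.36$.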

2、Beam width

The beam width $B$ is a parameter of beam search. Larger values of $B$ yield better results but with slower performance and higher memory use. Smaller values of $B$ lead to worse results but are less computationally intensive. A standard value for $B$ is around 10.

3、Length normalization

To improve numerical stability, beam search is usually applied to the following normalized objective, often called the normalized log-likelihood objective, defined as:

$$\textrm{Objective} = \frac{1}{T_y^\alpha}\sum_{t=1}^{T_y}\log\Big[p\left(y^{<t>}|x, y^{<1>}, ..., y^{<t-1>}\right)\Big]$$

Note: the parameter $\alpha$ can be seen as a softener, and its value is usually between 0.5 and 1.
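Summing logs instead of multiplying probabilities avoids underflow, and without normalization ($\alpha = 0$) the objective favors short outputs, since every extra word adds a negative term. A sketch with made-up per-word probabilities; the default $\alpha = 0.7$ is only an illustrative value in the usual range.

```python
import math


def normalized_objective(step_probs, alpha=0.7):
    """(1 / T_y**alpha) * sum_t log p(y<t> | x, y<1>, ..., y<t-1>)."""
    T_y = len(step_probs)
    return sum(math.log(p) for p in step_probs) / T_y ** alpha


short_output = [0.5, 0.5]            # hypothetical per-word probabilities, 2 words
long_output = [0.6, 0.6, 0.6, 0.6]   # hypothetical per-word probabilities, 4 words

for alpha in (0.0, 0.7, 1.0):
    print(alpha, normalized_objective(short_output, alpha), normalized_objective(long_output, alpha))
```

With $\alpha = 0$ the short output wins; with $\alpha = 1$ (an average log-probability per word) the long output, whose words are individually more likely, wins.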

4、Error analysis

When a predicted translation $\hat{y}$ is bad, one can find out why a good translation $y^*$ was not obtained by performing the following error analysis:

Case $P(y^*|x) > P(\hat{y}|x)$: the beam search is at fault; remedy: increase the beam width.

Case $P(y^*|x) \leq P(\hat{y}|x)$: the RNN is at fault; remedies: try a different architecture, regularize, get more data.
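The decision rule fits in a tiny helper. Here `p_star` and `p_hat` are assumed to be the probabilities that the trained RNN assigns to the reference translation $y^*$ and to the output $\hat{y}$; the names are hypothetical.

```python
REMEDIES = {
    "beam search": ["increase the beam width"],
    "RNN": ["try a different architecture", "regularize", "get more data"],
}


def attribute_error(p_star, p_hat):
    """Blame beam search or the RNN by comparing P(y*|x) and P(y_hat|x) under the RNN."""
    if p_star > p_hat:
        return "beam search"  # the RNN prefers y*, but the search did not find it
    return "RNN"              # the RNN itself scores the bad translation at least as high


cause = attribute_error(p_star=0.3, p_hat=0.1)
print(cause, REMEDIES[cause])
```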

5、Bleu score

The bilingual evaluation understudy (BLEU) score quantifies the quality of a machine translation by computing a similarity score based on n-gram precision. It is defined as follows:

$$\textrm{bleu score} = \exp\left(\frac{1}{n}\sum_{k=1}^{n}\log p_k\right)$$

where $p_n$ is the BLEU score on n-grams only, defined as:

$$p_n = \frac{\sum_{\textrm{n-gram} \in \hat{y}} \textrm{count}_{\textrm{clip}}(\textrm{n-gram})}{\sum_{\textrm{n-gram} \in \hat{y}} \textrm{count}(\textrm{n-gram})}$$

Note: a brevity penalty may be applied to short predicted translations to prevent an artificially inflated BLEU score.
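A sketch of the score on tokenized sentences, assuming the standard BLEU setup: clipped n-gram precisions $p_1, \dots, p_4$, their geometric mean, and the brevity penalty $\textrm{BP} = 1$ if $c > r$, else $e^{1 - r/c}$, where $c$ is the candidate length and $r$ the closest reference length. No smoothing is applied, so any zero precision gives a score of 0.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """p_n: clipped n-gram counts of the candidate over its total n-gram count."""
    cand = ngrams(candidate, n)
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return clipped / max(1, sum(cand.values()))


def bleu(candidate, references, max_n=4):
    """BP * exp((1/N) * sum_n log p_n) with the usual brevity penalty BP."""
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 in this case
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(math.log(p) for p in precisions) / max_n)


reference = "the cat is on the mat".split()
p1 = modified_precision("the the the the the the the".split(), [reference], 1)  # clipped: 2/7
short = bleu("the cat is on".split(), [reference])  # all precisions are 1, but BP = e^(-0.5)
```

Clipping is what stops a degenerate output such as "the the the ..." from scoring a perfect unigram precision.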

Seven 、 Attention

1、 Attention model

This model allows an RNN to pay attention to specific parts of the input that are considered important, which improves the performance of the resulting model in practice. By noting $\alpha^{<t, t'>}$ the amount of attention that the output $y^{<t>}$ should pay to the activation $a^{<t'>}$ and $c^{<t>}$ the context at time $t$, we have:

$$c^{<t>} = \sum_{t'} \alpha^{<t, t'>} a^{<t'>} \quad \textrm{with} \quad \sum_{t'} \alpha^{<t, t'>} = 1$$

Note: attention scores are commonly used in image captioning and machine translation.

2、 Attention weight

The amount of attention that the output $y^{<t>}$ should pay to the activation $a^{<t'>}$ is given by $\alpha^{<t,t'>}$, computed as follows:

$$\alpha^{<t,t'>} = \frac{\exp\left(e^{<t,t'>}\right)}{\sum_{t''=1}^{T_x} \exp\left(e^{<t,t''>}\right)}$$

where $e^{<t,t'>}$ is an unnormalized attention score.

Note: the computational complexity is quadratic with respect to $T_x$.
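A sketch of one output step $t$: the scores $e^{<t,t'>}$ are taken as given (in practice they are usually produced by a small network, which is outside this sketch), turned into weights by a softmax over $t'$, and used to average the activations into the context $c^{<t>}$. Repeating this for every output step over all $T_x$ inputs is what makes the cost quadratic. Function names are hypothetical.

```python
import math


def attention_weights(scores):
    """alpha<t,t'> = exp(e<t,t'>) / sum_{t''} exp(e<t,t''>) for one output step t."""
    m = max(scores)  # subtracting the max does not change the softmax result
    exps = [math.exp(e - m) for e in scores]
    total = sum(exps)
    return [x / total for x in exps]


def context_vector(alphas, activations):
    """c<t> = sum_{t'} alpha<t,t'> a<t'>."""
    dim = len(activations[0])
    return [sum(al * a[k] for al, a in zip(alphas, activations)) for k in range(dim)]


alphas = attention_weights([math.log(3.0), 0.0])         # made-up scores for T_x = 2
context = context_vector(alphas, [[1.0, 0.0], [0.0, 1.0]])
print(alphas, context)
```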

边栏推荐

- Set up redis sentinel cluster (instance):

- List < string > sort

- 2024. 考试的最大困扰度-滑动窗口

- Occupied occupied occupied occupied occupied

- Encapsulate the amount input box component.

- 剑指 Offer II 016. 不含重复字符的最长子字符串-滑动窗口

- RuntimeError:Input and parameter tensors are not at the same device, found input tensor at cuda:0 an

- Engineers learn music theory (II) scale and tendency

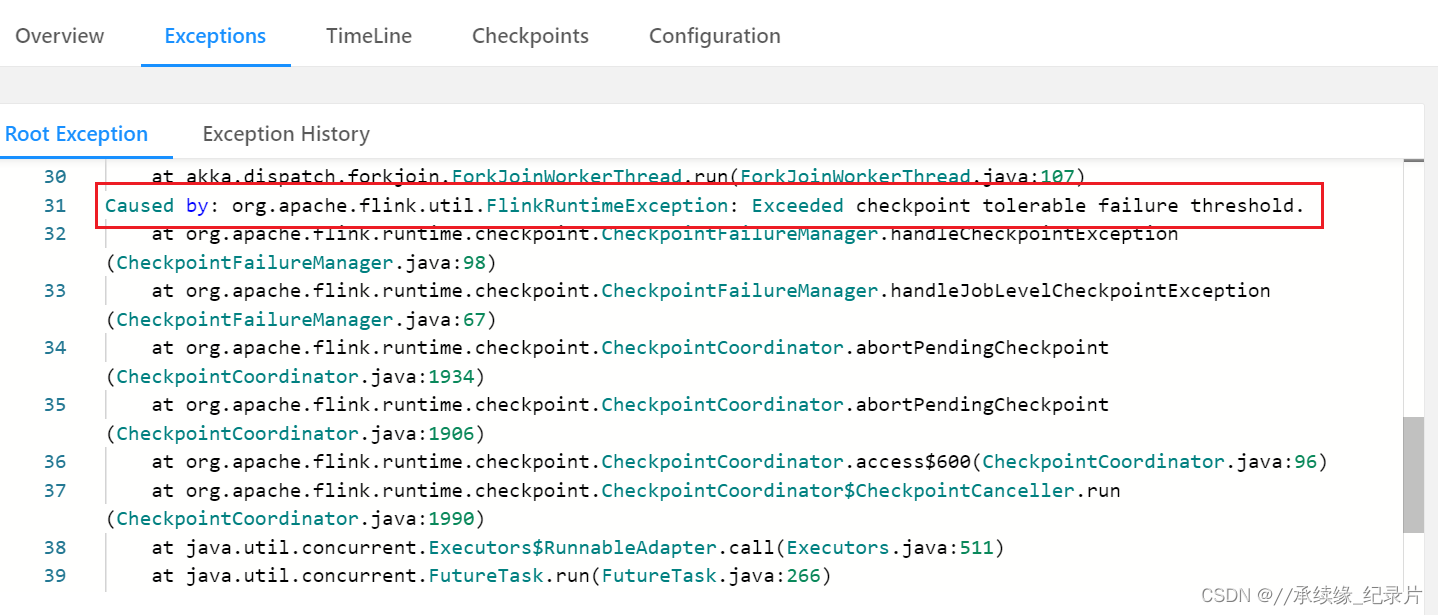

- Flink CheckPoint : Exceeded checkpoint tolerable failure threshold

- Implementing architecture caching in MySQL under redis server environment

猜你喜欢

Application method of new version UI of idea + use method of non test qualification and related introduction

![(node:22344) [DEP0123] DeprecationWarning: Setting the TLS ServerName to an IP address is not permit](/img/c1/d56ec09663857afa52f20848aeadac.png)

(node:22344) [DEP0123] DeprecationWarning: Setting the TLS ServerName to an IP address is not permit

数据库不知道哪里出问题

![[computer use] how to change a computer disk into a mobile disk?](/img/ff/843f4220fcaefc00980a6edc29aebf.jpg)

[computer use] how to change a computer disk into a mobile disk?

Loading font component loading effect

Binlog in mysql:

Summary of common character sets

Flink CheckPoint : Exceeded checkpoint tolerable failure threshold

Jupyter notebook sets the default browser to open with an error syntaxerror: (Unicode error) 'UTF-8' codec can't decode byte 0xd4

![[character set 7] what are the wide character codes and multi byte codes of Chinese characters](/img/8c/6d375d90234e6094b6930c2cefefa1.png)

[character set 7] what are the wide character codes and multi byte codes of Chinese characters

随机推荐

Notes used by mqtt (combined with source code)

Use NVM to dynamically adjust the nodejs version to solve the problem that the project cannot be run and packaged because the node version is too high or too low

Occupied occupied occupied occupied occupied

Inheritance of row height

Loading circling effect during loading

Bash tutorial

Can you migrate backwards before the first migration in the south- Can you migrate backwards to before the first migration in South?

MySQL - Import / export operation

(node:22344) [DEP0123] DeprecationWarning: Setting the TLS ServerName to an IP address is not permit

Error: ER_ NOT_ SUPPORTED_ AUTH_ MODE: Client does not support authentication protocol requested ... ...

Wechat applet image saving function

动态线段树leetcode.699

2024. 考试的最大困扰度-滑动窗口

Analysis of 43 cases of MATLAB neural network: Chapter 8 prediction of GRNN Network - Freight Volume Prediction Based on generalized regression neural network

Loading font component loading effect

第六章-包含多个段的程序

Knowledge points of 2022 system integration project management engineer examination: project cost management

Graphic analysis of viewbox in SVG

Chapter 8 - two basic problems of data processing

[character set 9] will GBK be garbled when copied to unicode?