当前位置:网站首页>RuntimeError:Input and parameter tensors are not at the same device, found input tensor at cuda:0 an

RuntimeError:Input and parameter tensors are not at the same device, found input tensor at cuda:0 an

2022-06-12 08:51:00 【Hey, it's me】

The reason for the error : Input x And the output y( Or model parameters ) Different storage locations

This error is mainly due to input x And the output y( Or model parameters ) Different storage locations

If you are error 1: Input x stay cuda(gpu) in , The model parameters are in cpu in

Want to input x Put in gpu in , Generally, it is to find the input parameters x, Then call the use parameter x Add a line of code before x.to(device)( among device=“cuda”)

If you are error 2: Input x stay cpu in , Model parameters cuda(gpu) In the middle

Find the definition model Code for , Add a line of code after the definition model.to(device)

The specific operation is as follows :

error 1:RuntimeError: Input and parameter tensors are not at the same device, found input tensor at cuda:0 and parameter tensor at cpu

1.1 Input x stay cuda(gpu) in , The model parameters are in cpu in

Test code demo: :

When the input x stay gpu, however model Store in cpu in So running the following code will report an error 1

import torch

import torch.nn as nn

from torch.nn import LSTM

device = "cuda" if torch.cuda.is_available() else "cpu" # Yes gpu use gpu, It doesn't work cpu

x = torch.Tensor([[1,2,3], [2,3,4]]) # x shape (2,3) (seq_len, Word vector dimension )

class Testmodel(nn.Module):

def __init__(self, input_dim, lstm_layer, lstm_hidden_dim, dropout):

super(Testmodel, self).__init__()

self.lstm_encoding = LSTM(input_dim, num_layers=lstm_layer, hidden_size=lstm_hidden_dim,

dropout=0.5) #

def forward(self, x: torch.Tensor):

output, (hn, cn) = self.lstm_encoding(x)

return output

model = Testmodel(

input_dim=3,

lstm_layer=2,

lstm_hidden_dim=4,

dropout=0.5,

)

# When the input x stay gpu, however model Store in cpu in So there's an error

x = x.to(device) # take x Put in gpu In the memory

output = model(x) # call forward Method x (2,3) lstm Input dimensions 3, Output dimension 4,

print(output) # output shape (2,4)

1.2 resolvent

Method 1: Comment the following code directly , Enter x Put in cpu Memory and output are consistent

x = x.to(device) # take x Put in gpu In the memory

Method 2( recommend ): Add a line of code model.to(device), Put the parameters of the model into gpu in , And the input x Consistent position , The case code after modification is as follows

import torch

import torch.nn as nn

from torch.nn import LSTM

device = "cuda" if torch.cuda.is_available() else "cpu" # Yes gpu use gpu, It doesn't work cpu

x = torch.Tensor([[1,2,3], [2,3,4]]) # x shape (2,3) (seq_len, Word vector dimension )

class Testmodel(nn.Module):

def __init__(self, input_dim, lstm_layer, lstm_hidden_dim, dropout):

super(Testmodel, self).__init__()

self.lstm_encoding = LSTM(input_dim, num_layers=lstm_layer, hidden_size=lstm_hidden_dim,

dropout=0.5) #

def forward(self, x: torch.Tensor):

output, (hn, cn) = self.lstm_encoding(x)

return output

model = Testmodel(

input_dim=3,

lstm_layer=2,

lstm_hidden_dim=4,

dropout=0.5,

)

model.to(device) # !!!!!!!!!!!! The newly added code is here

# When the input x stay gpu, however model stay cpu in So there's an error

x = x.to(device) # take x Put in gpu In the memory

output = model(x) # call forward Method x (2,3) lstm Input dimensions 3, Output dimension 4,

print(output) # output shape (2,4)

error 2.RuntimeError: Input and parameter tensors are not at the same device, found input tensor at cpu and parameter tensor at cuda:0

2.1 Enter in cpu in , Output ( Model parameters ) stay cuda(gpu) in

Test code demo:

When the input x stay cpu, however model Store in gpu in So running the following code will report an error 2

import torch

import torch.nn as nn

from torch.nn import LSTM

device = "cuda" if torch.cuda.is_available() else "cpu" # Yes gpu use gpu, It doesn't work cpu

x = torch.Tensor([[1,2,3], [2,3,4]]) # x shape (2,3) (seq_len, Word vector dimension )

class Testmodel(nn.Module):

def __init__(self, input_dim, lstm_layer, lstm_hidden_dim, dropout):

super(Testmodel, self).__init__()

self.lstm_encoding = LSTM(input_dim, num_layers=lstm_layer, hidden_size=lstm_hidden_dim,

dropout=0.5) #

def forward(self, x: torch.Tensor):

output, (hn, cn) = self.lstm_encoding(x)

return output

model = Testmodel(

input_dim=3,

lstm_layer=2,

lstm_hidden_dim=4,

dropout=0.5,

)

model.to(device) # Put the model parameters in gpu in

# When the input x stay gpu, however model stay cpu in So there's an error

output = model(x) # call forward Method x (2,3) lstm Input dimensions 3, Output dimension 4,

print(output) # output shape (2,4)

2.2 resolvent

Method 1: Find the code model.to(device) Direct comments , Put model parameters into cpu In memory and input x Consistent position

model.to(device) # Put the model parameters in gpu in

Method 2( recommend ): Add a line of code x = x.to(device) , Put the parameters of the model into gpu in , And the input x Consistent position , The case code after modification is as follows

import torch

import torch.nn as nn

from torch.nn import LSTM

device = "cuda" if torch.cuda.is_available() else "cpu" # Yes gpu use gpu, It doesn't work cpu

x = torch.Tensor([[1,2,3], [2,3,4]]) # x shape (2,3) (seq_len, Word vector dimension )

class Testmodel(nn.Module):

def __init__(self, input_dim, lstm_layer, lstm_hidden_dim, dropout):

super(Testmodel, self).__init__()

self.lstm_encoding = LSTM(input_dim, num_layers=lstm_layer, hidden_size=lstm_hidden_dim,

dropout=0.5) #

def forward(self, x: torch.Tensor):

output, (hn, cn) = self.lstm_encoding(x)

return output

model = Testmodel(

input_dim=3,

lstm_layer=2,

lstm_hidden_dim=4,

dropout=0.5,

)

model.to(device) # Put model parameters into gpu In the memory

# When the input x stay gpu, however model stay cpu in So there's an error

x = x.to(device) # !!!!!!!!!!!! The newly added code is here

output = model(x) # call forward Method x (2,3) lstm Input dimensions 3, Output dimension 4,

print(output) # output shape (2,4)

My level is limited , If you have any mistakes, please correct them

边栏推荐

- Install iptables services and open ports

- Background location case 1

- JVM learning notes: garbage collection mechanism

- Background color translucent

- Building a cluster: and replacing with error

- 【sklearn学习】LightGBM

- 2022.6.9-----leetcode. four hundred and ninety-seven

- At present, MES is widely used. Why are there few APS scheduling systems? Why?

- 【字符集八】char8_t、char16_t、char32_t、wchar、char

- [GUI development] browsing function implementation model of image processing software

猜你喜欢

报错:清除网站内搜索框中的历史记录?

【指針進階三】實現C語言快排函數qsort&回調函數

ERROR 1630 (42000): FUNCTION a.avg does not exist. Check the ‘Function Name Parsing and Resolution‘

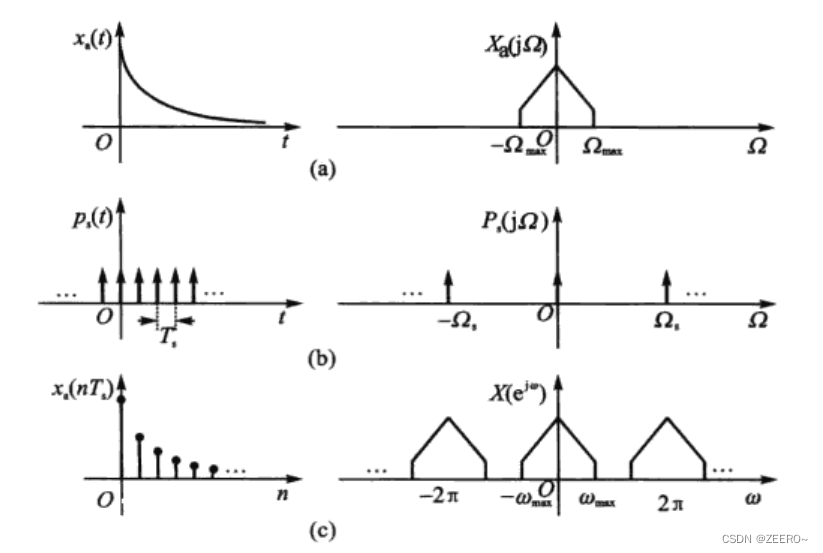

通俗理解时域采样与频域延拓

The difference between deep copy and shallow copy

JVM learning notes: three local method interfaces and execution engines

Webrtc series - mobile terminal hardware coding supports simulcast

Construction of memcached cache service under Linux:

Error: what if the folder cannot be deleted when it is opened in another program

【进阶指针二】数组传参&指针传参&函数指针&函数指针数组&回调函数

随机推荐

Redis installation test

第八章-数据处理的两个基本问题

JVM学习笔记:三 本地方法接口、执行引擎

What should be paid attention to when establishing MES system? What benefits can it bring to the enterprise?

第四章-第一个程序

Notes used by mqtt (combined with source code)

Engineers learn music theory (III) interval mode and chord

JS to refresh the page after loading

About weights exercise

第六章-包含多个段的程序

Application method of new version UI of idea + use method of non test qualification and related introduction

分库分表会带来读扩散问题?怎么解决?

Construction of memcached cache service under Linux:

正则校验用户名

Engineers learn music theory (II) scale and tendency

43 cas d'analyse du réseau neuronal MATLAB: chapitre 7 régression du réseau RBF - - réalisation de la régression fonctionnelle non linéaire

(p15-p16) optimization of the right angle bracket of the template and the default template parameters of the function template

Shell基本语法--算数运算

[advanced pointer I] character array & array pointer & pointer array

JVM learning notes: three local method interfaces and execution engines