Deep Learning Course 2, Week 1: Practical aspects of Deep Learning quiz notes

2022-08-01 21:59:00 【l8947943】

Practical aspects of Deep Learning

- If you have 10,000,000 examples, how would you split the train/dev/test set?

- 33% train. 33% dev. 33% test

- 60% train. 20% dev. 20% test

- 98% train. 1% dev. 1% test
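To make the options above concrete, the following minimal sketch converts percentage splits into absolute example counts. The helper name `split_sizes` is hypothetical, and integer percentages are assumed so the arithmetic stays exact.

```python
def split_sizes(n_examples, train_pct, dev_pct):
    """Return (train, dev, test) counts; the test set takes whatever remains."""
    n_train = n_examples * train_pct // 100
    n_dev = n_examples * dev_pct // 100
    n_test = n_examples - n_train - n_dev
    return n_train, n_dev, n_test


# With 10,000,000 examples, even 1% is still 100,000 examples.
print(split_sizes(10_000_000, 98, 1))   # (9800000, 100000, 100000)
print(split_sizes(10_000_000, 60, 20))  # (6000000, 2000000, 2000000)
```

With a dataset this large, a 1% dev or test set already holds 100,000 examples, which is usually enough to compare models reliably.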

- When designing a neural network to detect if a house cat is present in the picture, 500,000 pictures of cats were taken by their owners. These are used to make the training, dev and test sets. It is decided that to increase the size of the test set, 10,000 new images of cats taken from security cameras are going to be used in the test set. Which of the following is true?

- This will increase the bias of the model so the new images shouldn’t be used.

- This will be harmful to the project since now dev and test sets have different distributions.

- This will reduce the bias of the model and help improve it.

- If your Neural Network model seems to have high variance, which of the following would be promising things to try?

- Make the Neural Network deeper

- Get more training data

- Add regularization

- Get more test data

- Increase the number of units in each hidden layer

- You are working on an automated check-out kiosk for a supermarket, and are building a classifier for apples, bananas and oranges. Suppose your classifier obtains a training set error of 0.5%, and a dev set error of 7%. Which of the following are promising things to try to improve your classifier? (Check all that apply.)

- Increase the regularization parameter lambda

- Decrease the regularization parameter lambda

- Get more training data

- Use a bigger neural network
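The numbers in the kiosk question can be read with a simple rule of thumb: a large gap between training error and the best achievable error suggests high bias, and a large gap between dev error and training error suggests high variance. The sketch below is only an illustration. The function name `diagnose`, the 2% tolerance, and the assumption that the best achievable error is close to 0% are all hypothetical choices, not part of the quiz.

```python
def diagnose(train_err, dev_err, bayes_err=0.0, tol=0.02):
    """Rough bias/variance reading from error rates given as fractions.

    bayes_err and tol are illustrative assumptions, not fixed rules.
    """
    issues = []
    if train_err - bayes_err > tol:   # training error far from the best achievable
        issues.append("high bias")
    if dev_err - train_err > tol:     # dev error far above training error
        issues.append("high variance")
    return issues or ["no obvious problem"]


# Kiosk classifier: 0.5% training error, 7% dev error.
print(diagnose(0.005, 0.07))  # ['high variance']
```

Under these assumptions the classifier fits the training set well but generalizes poorly, which is the situation that regularization and more training data are meant to address.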

- In every case it is a good practice to use dropout when training a deep neural network because it can help to prevent overfitting. True/False?

- True

- False

- The regularization hyperparameter must be set to zero during testing to avoid getting random results. True/False?

- True

- False

- With the inverted dropout technique, at test time:

- You apply dropout (randomly eliminating units) but keep the 1/keep_prob factor in the calculations used in training.

- You do not apply dropout (do not randomly eliminate units), but keep the 1/keep_prob factor in the calculations used in training.

- You apply dropout (randomly eliminating units) and do not keep the 1/keep_prob factor in the calculations used in training

- You do not apply dropout (do not randomly eliminate units) and do not keep the 1/keep_prob factor in the calculations used in training
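As a minimal sketch of inverted dropout on a single layer's activations, the pure-Python function below drops units and rescales the survivors by 1/keep_prob during training, and returns the activations unchanged at test time. The function name `dropout_forward` and its `training` flag are hypothetical; a real implementation would typically operate on arrays rather than lists.

```python
import random


def dropout_forward(activations, keep_prob, training, rng=random):
    """Inverted dropout over a flat list of activations."""
    if not training:
        # Test time: no units are dropped and no 1/keep_prob scaling is applied.
        return list(activations)
    out = []
    for a in activations:
        if rng.random() < keep_prob:
            out.append(a / keep_prob)  # keep the unit, scale up to preserve the expected value
        else:
            out.append(0.0)            # drop the unit
    return out


rng = random.Random(0)
train_out = dropout_forward([1.0] * 10_000, keep_prob=0.8, training=True, rng=rng)
test_out = dropout_forward([1.0, 2.0, 3.0], keep_prob=0.8, training=False)
print(sum(train_out) / len(train_out))  # close to 1.0 on average
print(test_out)                         # [1.0, 2.0, 3.0]
```

Because the scaling happens during training, the expected activation already matches what the network sees at test time, so the test-time path needs no correction.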

- Increasing the parameter keep_prob from (say) 0.5 to 0.6 will likely cause the following: (Check the two that apply)

- Increasing the regularization effect

- Reducing the regularization effect

- Causing the neural network to end up with a higher training set error

- Causing the neural network to end up with a lower training set error

- Which of the following actions increase the regularization of a model? (Check all that apply)

- Decrease the value of the hyperparameter lambda.

- Decrease the value of keep_prob in dropout.

Correct. When decreasing the keep_prob value, the probability that a node gets discarded during training is higher, thus increasing the regularization effect.

- Increase the value of the hyperparameter lambda.

Correct. When increasing the hyperparameter lambda, we increase the effect of the $L_2$ penalization.

- Increase the value of keep_prob in dropout.

- Use Xavier initialization.
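To illustrate how lambda controls the strength of L2 regularization, the sketch below computes the penalty term that is added to the cost, assuming the common form lambda / (2m) times the sum of squared weights over all layers. The function name `l2_penalty` and the nested-list weight layout are hypothetical.

```python
def l2_penalty(weights, lambd, m):
    """L2 penalty: lambd / (2 * m) * sum of squared weights.

    weights: list of layers, each a list of rows of floats.
    m: number of training examples.
    """
    squared_sum = sum(w * w for layer in weights for row in layer for w in row)
    return lambd / (2 * m) * squared_sum


weights = [[[1.0, 2.0], [3.0, 4.0]]]  # one toy 2x2 layer
small = l2_penalty(weights, lambd=0.1, m=10)
large = l2_penalty(weights, lambd=1.0, m=10)
print(small < large)  # True: a larger lambda penalizes the same weights more
```

A larger lambda makes large weights more expensive, which pushes the optimizer toward smaller weights and a simpler fit.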

- Which of the following is the correct expression to normalize the input $\mathbf{x}$?

- $x = \frac{x-\mu}{\sigma}$
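The normalization expression can be sketched for a single feature as follows. The helper names `fit_normalizer` and `normalize` are hypothetical, and the sketch assumes that the mean and standard deviation are estimated on the training data and then reused for any other data, so that all sets go through the same transformation.

```python
from statistics import fmean, pstdev


def fit_normalizer(train_values):
    """Estimate mu and sigma from the training values of one feature."""
    return fmean(train_values), pstdev(train_values)


def normalize(values, mu, sigma):
    """Apply x = (x - mu) / sigma element-wise."""
    return [(v - mu) / sigma for v in values]


train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mu, sigma = fit_normalizer(train)
train_norm = normalize(train, mu, sigma)
test_norm = normalize([5.0, 9.0], mu, sigma)  # reuse the training mu and sigma
```

After this step the training feature has zero mean and unit variance, which tends to make the cost surface easier to optimize.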

边栏推荐

猜你喜欢

AI应用第一课:支付宝刷脸登录

AIDL communication

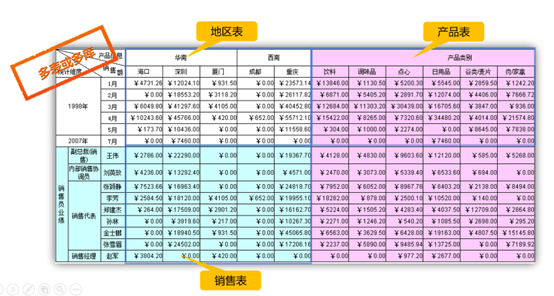

还在纠结报表工具的选型么?来看看这个

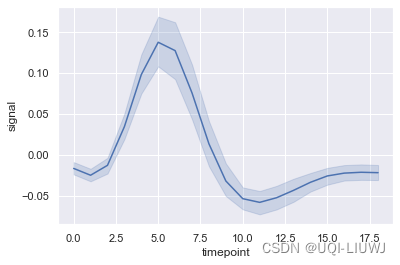

seaborn笔记:可视化统计关系(散点图、折线图)

Flutter基础学习(一)Dart语言入门

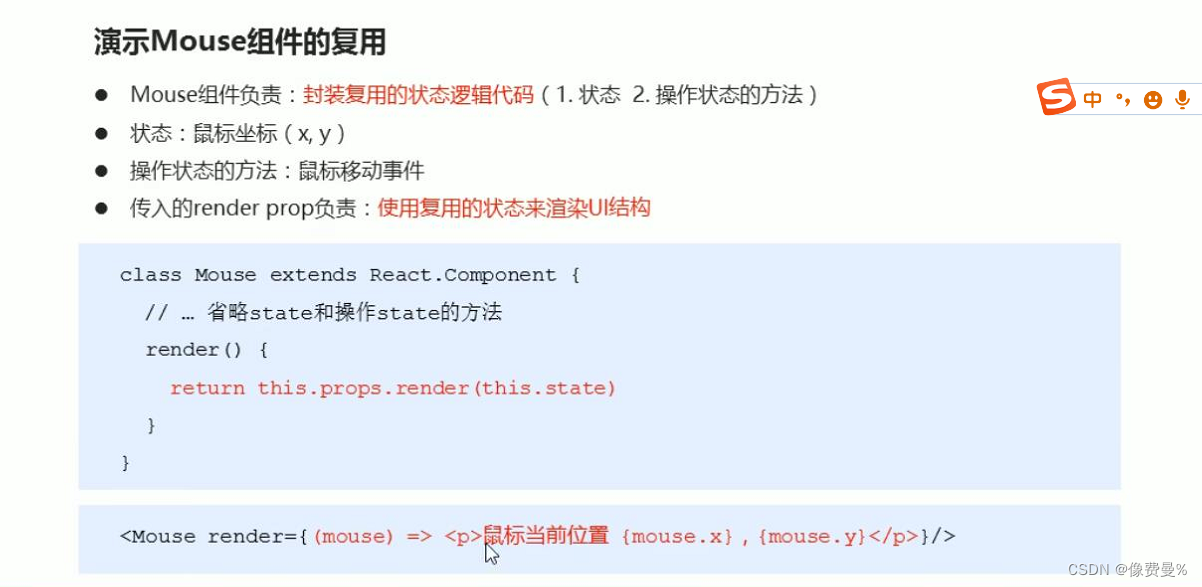

render-props and higher order components

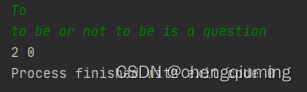

统计单词数

Advanced Algebra_Proof_The algebraic multiplicity of any eigenvalue of a matrix is greater than or equal to its geometric multiplicity

恒星的正方形问题

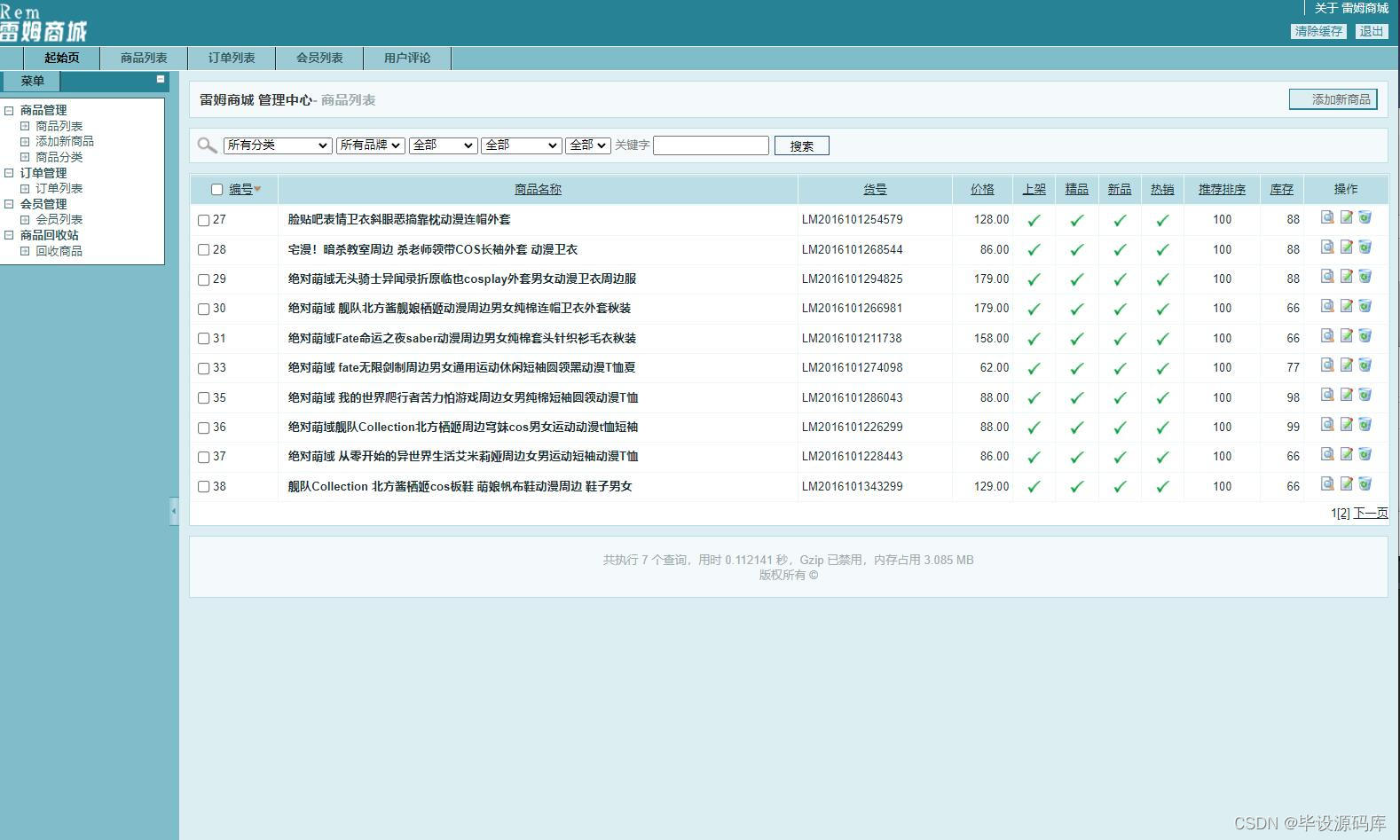

基于php动漫周边商城管理系统(php毕业设计)

随机推荐

[Mobile Web] Mobile terminal adaptation

leetcode 204. Count Primes 计数质数 (Easy)

小程序--分包

小程序--独立分包&分包预下载

迁移学习——Discriminative Transfer Subspace Learning via Low-Rank and Sparse Representation

程序员必备的 “ 摸鱼神器 ” 来了 !

【C语言实现】整数排序-四种方法,你都会了吗、

HCIP---Multiple Spanning Tree Protocol related knowledge points

Based on php online learning platform management system acquisition (php graduation design)

scikit-learn no moudule named six

365天挑战LeetCode1000题——Day 046 生成每种字符都是奇数个的字符串 + 两数相加 + 有效的括号

漫长的投资生涯

安全第五次课后练习

网络水军第一课:手写自动弹幕

Raspberry Pi information display small screen, display time, IP address, CPU information, memory information (C language), four-wire i2c communication, 0.96-inch oled screen

小程序容器+自定义插件,可实现混合App快速开发

selenium无头,防检测

游戏元宇宙发展趋势展望分析

毕业十年,财富自由:那些比拼命努力更重要的事,从来没人会教你

威纶通触摸屏如何打开并升级EB8000旧版本项目并更换触摸屏型号?