
Deep learning: Non-local Neural Networks

2022-06-25 05:24:00 【HheeFish】

Non-local Neural Networks

- 0. Summary

- 1. Related work

- 1.1. Non-local image processing

- 1.2. Graphical models

- 1.3. Feedforward modeling for sequences

- 1.4. Self-attention

- 1.5. Interaction networks

- 1.6. Video classification architectures

- 2. Non-local neural networks

0. Summary

Capturing long-range dependencies is of central importance in deep neural networks. For sequential data (e.g., speech and language), recurrent operations are the dominant solution for modeling long-range dependencies. For image data, long-distance dependencies are modeled by the large receptive fields formed by deep stacks of convolutional operations. Both convolutional and recurrent operations process a local neighborhood, either in space or in time; thus long-range dependencies can only be captured when these operations are applied repeatedly, propagating signals progressively through the data. Repeating local operations has several limitations:

- It is computationally inefficient.

- It causes optimization difficulties that need to be carefully addressed.

- These challenges make multi-hop dependency modeling more difficult, for example when messages need to be delivered back and forth between distant positions.

In this paper, we present non-local operations as an efficient, simple, and generic component for capturing long-range dependencies with deep neural networks. Our non-local operation is a generalization of the classical non-local means operation in computer vision. Intuitively, a non-local operation computes the response at a position as a weighted sum of the features at all positions in the input feature maps. The set of positions can be in space, time, or spacetime, which means our operations are applicable to image, sequence, and video problems.

Using non-local operations has several advantages:

- In contrast to the progressive behavior of recurrent and convolutional operations, non-local operations capture long-range dependencies directly by computing interactions between any two positions, regardless of their positional distance.

- As we show in experiments, non-local operations are efficient and achieve their best results even with only a few layers (e.g., 5).

- Finally, our non-local operations maintain variable input sizes and can be easily combined with other operations (e.g., the convolutions we use).

We showcase the effectiveness of non-local operations in the application of video classification. **In videos, long-range interactions occur between distant pixels in space as well as in time. A single non-local block, our basic unit, can directly capture these spacetime dependencies in a feedforward fashion.** With a few non-local blocks, our architectures, called non-local neural networks, are more accurate for video classification than 2D and 3D convolutional networks (including the inflated variant). In addition, non-local neural networks are more computationally economical than their 3D convolutional counterparts. Comprehensive ablation studies are presented on the Kinetics and Charades datasets. Using RGB only and without any bells and whistles (e.g., optical flow, multi-scale testing), our method achieves results on par with or better than the latest competition winners on both datasets.

To demonstrate the generality of non-local operations, we further present object detection/segmentation and pose estimation experiments on the COCO dataset. On top of the strong Mask R-CNN baseline, our non-local blocks increase accuracy on all three tasks at a small extra computational cost. Together with the evidence on videos, these image experiments show that non-local operations are generally useful and can become a basic building block in designing deep neural networks.

1. Related work

1.1. Non-local image processing

Non-local means is a classical filtering algorithm that computes a weighted mean of all pixels in an image. It allows distant pixels to contribute to the filtered response at a location based on patch appearance similarity. This non-local filtering idea was later developed into BM3D (block-matching 3D), which performs filtering on a group of similar, but non-local, patches. BM3D is a solid image denoising baseline even compared with deep neural networks. Non-local matching is also the essence of successful texture synthesis, super-resolution, and inpainting algorithms.
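To make the idea concrete, here is a minimal sketch of non-local means on a 1-D signal in plain Python. It is a simplified illustration, not the reference algorithm: the names `nonlocal_means_1d`, `patch_radius`, and `h` are hypothetical, patch distances are plain sums of squared differences (classical implementations often weight them with a Gaussian kernel), and the search covers the whole signal, so the cost is quadratic in its length.

```python
import math
from typing import List


def nonlocal_means_1d(signal: List[float], patch_radius: int = 1,
                      h: float = 0.5) -> List[float]:
    """Minimal 1-D non-local means filter (hypothetical helper).

    Each output sample is a weighted average of *all* input samples; the
    weight of sample j depends on how similar the patch around j is to the
    patch around i, not on how close j is to i.
    """
    n = len(signal)

    def patch(k: int) -> List[float]:
        # Clamp indices at the borders so every patch has the same length.
        return [signal[min(max(k + d, 0), n - 1)]
                for d in range(-patch_radius, patch_radius + 1)]

    patches = [patch(k) for k in range(n)]
    out = []
    for i in range(n):
        weights = [math.exp(-sum((a - b) ** 2
                                 for a, b in zip(patches[i], patches[j])) / (h * h))
                   for j in range(n)]
        total = sum(weights)  # normalization; >= 1 because j == i has weight 1
        out.append(sum(w * s for w, s in zip(weights, signal)) / total)
    return out
```

On a step signal, samples deep inside each flat region average almost exclusively with other samples whose neighborhoods look the same, wherever they are, so the edge is preserved.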

1.2. Graphical models

Long-range dependencies can be modeled by graphical models such as conditional random fields (CRFs). In the context of deep neural networks, a CRF can be used to post-process the semantic segmentation predictions of a network. The iterative mean-field inference of a CRF can be turned into a recurrent network and trained. In contrast, our method is a simpler feedforward block for computing non-local filtering. Unlike these methods, which were developed for segmentation, our general-purpose component is applied to classification and detection. These methods and ours are also related to a more abstract model called graph neural networks.

1.3. Feedforward modeling for sequences

Recently there has been a trend of using feedforward (i.e., non-recurrent) networks to model sequences in speech and language. In these methods, long-term dependencies are captured by the large receptive fields contributed by very deep 1-D convolutions. These feedforward models are amenable to parallelized implementations and can be more efficient than the widely used recurrent models.
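The receptive-field arithmetic behind this claim can be sketched as follows. The helper `receptive_field` is hypothetical, it assumes stride-1 layers, and the doubling dilation schedule in the second usage line is an assumption (a common choice in dilated 1-D convolutional models), not something stated above.

```python
from typing import Sequence


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field of a stack of stride-1 1-D convolutions.

    A layer with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    return 1 + sum((kernel_size - 1) * d for d in dilations)


plain = receptive_field(3, [1] * 10)                        # 10 layers, no dilation
dilated = receptive_field(3, [2 ** l for l in range(10)])   # dilations 1, 2, ..., 512
```

Ten plain layers with kernel size 3 see only 21 positions, while ten layers with dilations doubling from 1 to 512 see 2047, which is why such models need great depth (or dilation) to reach long-range context.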

1.4. Self-attention

A self-attention module computes the response at a position in a sequence (e.g., a sentence) by attending to all positions and taking their weighted average in an embedding space. As we will discuss next, self-attention can be viewed as a form of non-local mean, and in this sense our work bridges self-attention for machine translation to the more general class of non-local filtering operations applicable to image and video problems in computer vision.

1.5. Interaction networks

Interaction Networks (IN) were recently proposed for modeling physical systems. They operate on graphs of objects involved in pairwise interactions. Hoshen [23] presented the more efficient Vertex Attention IN (VAIN) in the context of multi-agent predictive modeling. Another variant, named Relation Networks, computes a function on the feature embeddings at all pairs of positions in its input. Our method also processes all pairs, as we will explain with $f(x_i, x_j)$ in Eq. (1). While our non-local networks are connected to these approaches, our experiments indicate that the non-locality of the model, which is orthogonal to the ideas of attention/interaction/relation (e.g., a network can attend to a local region), is the key to its success. Non-local modeling, a long-time crucial element of image processing, has been largely overlooked in recent neural networks for computer vision.

1.6. Video classification architectures

A natural solution to video classification is to combine the success of CNNs for images with that of RNNs for sequences. In contrast, feedforward models are achieved by 3D convolutions (C3D) in spacetime, and the 3D filters can be formed by "inflating" pre-trained 2D filters. In addition to end-to-end modeling on raw video inputs, optical flow and trajectories have been found helpful. Both flow and trajectories are off-the-shelf modules that may capture long-range, non-local dependencies.
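The "inflation" step can be sketched as below. Following the I3D convention (an assumption here, since the text above only says "inflating"), the 2D weights are repeated $T$ times along time and divided by $T$, so a video made of one repeated frame yields the same response as the original 2D filter on that frame. The helper names `inflate` and `response_3d` are hypothetical, and kernels are plain nested lists.

```python
from typing import List

Kernel2D = List[List[float]]
Kernel3D = List[Kernel2D]  # T x kH x kW


def inflate(kernel2d: Kernel2D, t: int) -> Kernel3D:
    """Repeat a pre-trained 2-D filter t times along time, rescaled by 1/t."""
    return [[[v / t for v in row] for row in kernel2d] for _ in range(t)]


def response_3d(kernel3d: Kernel3D, clip: List[Kernel2D]) -> float:
    """Response of a 3-D filter on a clip patch of exactly the filter's size."""
    return sum(kernel3d[s][r][c] * clip[s][r][c]
               for s in range(len(kernel3d))
               for r in range(len(kernel3d[0]))
               for c in range(len(kernel3d[0][0])))
```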

2. Non-local neural networks

We first give a general definition of non-local operations and then provide several specific instantiations of it.

2.1. Formulation

Following the non-local mean operation, we define a generic non-local operation in deep neural networks as:

$$
y_i = \frac{1}{\mathcal{C}(x)} \sum_{\forall j} f(x_i, x_j)\, g(x_j) \qquad (1)
$$

- $x$ is the input signal (in computer vision, typically a feature map), and $y$ is the output signal of the same size as $x$.

- $i$ is the index of an output position (in space, time, or spacetime) whose response is being computed, and $j$ is the index that enumerates all possible positions.

- The pairwise function $f$ computes a scalar representing the relationship (e.g., affinity) between $i$ and $j$.

- The unary function $g$ computes a representation of the input signal at position $j$.

- The response is normalized by a factor $\mathcal{C}(x)$.

In short, $i$ is the current position, $j$ ranges over all positions in the input (the global context), and the non-local response at $i$ is a normalized weighted sum over all $j$.

$f(x_i, x_j)$ computes the pairwise relationship between $i$ and every possible position $j$. For example, the relationship could be such that the less similar $x_i$ and $x_j$ are, the smaller $f$ becomes, meaning position $j$ has less influence on $i$. $g(x_j)$ computes a representation of the input signal at position $j$, and $\mathcal{C}(x)$ is a normalization factor.
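A direct, unoptimized transcription of Eq. (1) into plain Python looks like this. Positions are assumed to be already flattened into a list of feature vectors, and `non_local` and its `normalize` argument are hypothetical names chosen for illustration: passing `sum` gives $\mathcal{C}(x) = \sum_j f(x_i, x_j)$, and passing `len` gives $\mathcal{C}(x) = N$.

```python
import math
from typing import Callable, List

Vector = List[float]


def non_local(x: List[Vector],
              f: Callable[[Vector, Vector], float],
              g: Callable[[Vector], Vector],
              normalize: Callable[[List[float]], float]) -> List[Vector]:
    """y_i = (1 / C) * sum_j f(x_i, x_j) * g(x_j) for every position i.

    `normalize` receives the row [f(x_i, x_j) for all j] and returns C.
    """
    gx = [g(xj) for xj in x]  # unary term, computed once per position j
    dim = len(gx[0])
    y = []
    for xi in x:
        row = [f(xi, xj) for xj in x]  # pairwise term against every j
        c = normalize(row)
        y.append([sum(w * gj[d] for w, gj in zip(row, gx)) / c
                  for d in range(dim)])
    return y


def dot(a: Vector, b: Vector) -> float:
    return sum(p * q for p, q in zip(a, b))


# Gaussian instantiation: f = exp(x_i . x_j), g = identity, C = sum_j f.
x = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y = non_local(x, lambda a, b: math.exp(dot(a, b)), lambda v: list(v), sum)
```

Nothing in `non_local` depends on the number of positions, so the same code runs unchanged on 3 or 5 positions and returns one output per input position.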

In a fully connected (fc) layer, the relationship between $x_i$ and $x_j$ is not a function of the input data but is given directly by learned weights, whereas a non-local operation computes responses based on relationships between different locations. Furthermore, our formulation in Eq. (1) supports inputs of variable sizes and maintains the corresponding size in the output. In contrast, an fc layer requires a fixed-size input/output and loses positional correspondence. A non-local operation is a flexible building block and can easily be used together with convolutional/recurrent layers. It can be added into the earlier part of deep neural networks, unlike fc layers, which are usually used at the end. This allows us to build a richer hierarchy that combines both non-local and local information.

2.2. Instantiations

Next we describe several versions of $f$ and $g$. Interestingly, we will show by experiments (Table 2a) that our non-local models are not sensitive to these choices, indicating that the generic non-local behavior is the main reason for the observed improvements. For simplicity, we only consider $g$ in the form of a linear embedding, $g(x_j) = W_g x_j$, where $W_g$ is a weight matrix to be learned. This is implemented as, e.g., a 1×1 convolution in space or a 1×1×1 convolution in spacetime. Next we discuss choices for the pairwise function $f$.
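Because a 1×1 kernel sees only one position, a 1×1 convolution is the same linear map applied independently at every position. A minimal sketch, assuming an H×W×C feature map stored as nested lists and no bias term (`conv1x1` is a hypothetical helper):

```python
from typing import List


def conv1x1(feature_map: List[List[List[float]]],
            weight: List[List[float]]) -> List[List[List[float]]]:
    """Apply a bias-free 1x1 convolution to an H x W x C_in feature map.

    weight has shape C_out x C_in. The output at (h, w) is weight @ x[h][w],
    i.e. the linear embedding g(x_j) = W_g x_j applied at every position j.
    """
    return [[[sum(w_in * v for w_in, v in zip(row, pixel)) for row in weight]
             for pixel in line]
            for line in feature_map]
```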

2.2.1. Gaussian

Following the non-local mean and bilateral filters, a natural choice of $f$ is the Gaussian function. In this paper we consider:

$$
f(x_i, x_j) = e^{x_i^\top x_j} \qquad (2)
$$

Here $x_i^\top x_j$ is dot-product similarity, and the normalization factor is set as $\mathcal{C}(x) = \sum_{\forall j} f(x_i, x_j)$.
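With $\mathcal{C}(x) = \sum_{\forall j} f(x_i, x_j)$, the Gaussian choice amounts to a row-wise softmax over dot products. A minimal sketch (`gaussian_weights` is a hypothetical name); subtracting the row maximum before exponentiating is a standard numerical-stability step that cancels in the ratio $f / \mathcal{C}$, not part of the formulation itself.

```python
import math
from typing import List


def gaussian_weights(x: List[List[float]]) -> List[List[float]]:
    """Normalized weights f(x_i, x_j) / C(x) with f = exp(x_i . x_j)."""
    weights = []
    for xi in x:
        logits = [sum(a * b for a, b in zip(xi, xj)) for xj in x]
        m = max(logits)  # stability shift; cancels out in the ratio
        exps = [math.exp(s - m) for s in logits]
        total = sum(exps)
        weights.append([e / total for e in exps])  # each row sums to 1
    return weights
```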

2.2.2. Embedded Gaussian

A simple extension of the Gaussian function is to compute similarity in an embedding space. In this paper we consider:

$$
f(x_i, x_j) = e^{\theta(x_i)^\top \phi(x_j)} \qquad (3)
$$

Here $\theta(x_i) = W_\theta x_i$ and $\phi(x_j) = W_\phi x_j$ are two embeddings, and the normalization factor is set as $\mathcal{C}(x) = \sum_{\forall j} f(x_i, x_j)$.

The self-attention module is in fact a special case of non-local operations in the embedded Gaussian version. For a given $i$, $\frac{1}{\mathcal{C}(x)} f(x_i, x_j)$ becomes the softmax computation along the dimension $j$, so $y = \mathrm{softmax}(x^\top W_\theta^\top W_\phi x)\, g(x)$, which is exactly the self-attention form. We thus relate self-attention models to the classical non-local means, and extend sequential self-attention networks to generic space/spacetime non-local networks that can be used for image and video recognition tasks.
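The self-attention form can be sketched with small matrix helpers. This assumes one row per position ($N \times C$) and right-multiplied weight matrices of shape $C \times C'$, which is the transpose of the $W_\theta x_i$ convention in the text; all helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)  # stability shift, cancels in the normalization
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def embedded_gaussian(x: Matrix, w_theta: Matrix, w_phi: Matrix,
                      w_g: Matrix) -> Matrix:
    """softmax(theta @ phi^T) @ g, one output row per position."""
    theta = matmul(x, w_theta)
    phi = matmul(x, w_phi)
    g = matmul(x, w_g)
    attn = softmax_rows(matmul(theta, transpose(phi)))  # N x N, rows sum to 1
    return matmul(attn, g)
```

If $\theta$ is all zeros, every attention row is uniform and each output is simply the mean of $g$ over all positions.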

2.2.3. Dot product

$f$ can also be defined as a dot-product similarity:

$$
f(x_i, x_j) = \theta(x_i)^\top \phi(x_j) \qquad (4)
$$

Here we adopt the embedded version. In this case, we set the normalization factor as $\mathcal{C}(x) = N$, where $N$ is the number of positions in $x$. This normalization is necessary because the input can have variable size. The main difference between the dot-product and embedded Gaussian versions is the presence of softmax, which plays the role of an activation function.
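A sketch of the dot-product version, again assuming one row per position and right-multiplied $C \times C'$ weights, with hypothetical helper names. Compared with the embedded Gaussian version, the affinity matrix is scaled by $1/N$ instead of going through softmax, so the weights need not be positive or sum to 1.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def dot_product_non_local(x: Matrix, w_theta: Matrix, w_phi: Matrix,
                          w_g: Matrix) -> Matrix:
    n = len(x)  # C(x) = N, the number of positions
    theta, phi, g = (matmul(x, w) for w in (w_theta, w_phi, w_g))
    affinity = [[sum(a * b for a, b in zip(ti, pj)) / n for pj in phi]
                for ti in theta]  # f(x_i, x_j) / N, no softmax
    return matmul(affinity, g)
```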

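2.2.4. Concatenation

Concatenation is used by the pairwise function in Relation Networks. We also evaluate a concatenation form of $f$:

$$
f(x_i, x_j) = \mathrm{ReLU}\left(w_f^\top [\theta(x_i), \phi(x_j)]\right) \qquad (5)
$$

Here $[\cdot, \cdot]$ denotes concatenation and $w_f$ is a weight vector that projects the concatenated vector to a scalar. As above, we set $\mathcal{C}(x) = N$.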
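A sketch of the concatenation form, assuming one row per position, right-multiplied weights, and hypothetical helper names. It assumes the projected scalar is passed through a ReLU, as in the paper's concatenation variant; $w_f$ is a plain list of length $2C'$.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def embed(v: Vector, w: Matrix) -> Vector:
    """Row-vector linear embedding: v (length C) @ w (C x C')."""
    return [sum(v[c] * w[c][k] for c in range(len(v))) for k in range(len(w[0]))]


def concat_non_local(x: Matrix, w_theta: Matrix, w_phi: Matrix,
                     w_g: Matrix, w_f: Vector) -> Matrix:
    n = len(x)  # C(x) = N
    theta = [embed(v, w_theta) for v in x]
    phi = [embed(v, w_phi) for v in x]
    g = [embed(v, w_g) for v in x]
    y = []
    for ti in theta:
        # f(x_i, x_j) = ReLU(w_f . [theta(x_i), phi(x_j)]) for every j
        f_row = [max(0.0, sum(a * b for a, b in zip(w_f, ti + pj))) for pj in phi]
        y.append([sum(f * gj[k] for f, gj in zip(f_row, g)) / n
                  for k in range(len(g[0]))])
    return y
```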

The variants above demonstrate the flexibility of our generic non-local operation. We believe alternative versions are possible and may improve results.

2.3. Non-local block

We wrap the non-local operation of Eq. (1) into a non-local block that can be incorporated into many existing architectures.

We define a non-local block as:

$$
z_i = W_z y_i + x_i \qquad (6)
$$

where $y_i$ is given in Eq. (1) and "$+x_i$" denotes a residual connection. The residual connection allows us to insert a new non-local block into any pre-trained model without breaking its initial behavior (e.g., if $W_z$ is initialized as zero).
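The residual wrapping can be sketched as follows, with the non-local output $y$ passed in (it could come from any of the instantiations above). The layout (one row per position, $W_z$ of shape $C' \times C$ right-multiplied) and the helper name are assumptions. With $W_z$ set to zero the block returns its input unchanged, which is what makes inserting it into a pre-trained model safe.

```python
from typing import List

Matrix = List[List[float]]


def non_local_block(x: Matrix, y: Matrix, w_z: Matrix) -> Matrix:
    """z_i = W_z y_i + x_i.

    x is N x C (block input), y is N x C' (non-local output), and w_z
    (C' x C) projects each y_i back to C channels before the residual add.
    """
    return [[sum(yi[k] * w_z[k][c] for k in range(len(yi))) + xi[c]
             for c in range(len(xi))]
            for xi, yi in zip(x, y)]
```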

Figure 2

A spacetime non-local block. The feature maps are shown as the shape of their tensors, e.g., T×H×W×1024 for 1024 channels (proper reshaping is performed where noted). "⊗" denotes matrix multiplication and "⊕" denotes element-wise sum. The softmax operation is performed on each row. The blue boxes denote 1×1×1 convolutions. Here we show the embedded Gaussian version, with a bottleneck of 512 channels. The vanilla Gaussian version is obtained by removing θ and φ, and the dot-product version by replacing softmax with scaling by 1/N.

Figure 2 illustrates a non-local block. The pairwise computation in Eq. (2), (3), or (4) can be done simply by matrix multiplication, as shown in Figure 2; the concatenation version in (5) is straightforward. The pairwise computation of a non-local block is lightweight when it is used on high-level, sub-sampled feature maps. For example, typical values in Figure 2 are T = 4 and H = W = 14 or 7. The pairwise computation done by matrix multiplication is comparable to a typical convolutional layer in standard networks. We further adopt the following implementations to make it more efficient.

We set the number of channels represented by $W_g$, $W_\theta$, and $W_\phi$ to half the number of channels in $x$. This follows the bottleneck design and reduces the computation of a block by about half. The weight matrix $W_z$ in Eq. (6) computes a position-wise embedding on $y_i$, matching its number of channels to that of $x$ (see Figure 2). A subsampling trick can further reduce computation. We modify Eq. (1) as

$$
y_i = \frac{1}{\mathcal{C}(\hat{x})} \sum_{\forall j} f(x_i, \hat{x}_j)\, g(\hat{x}_j)
$$

where $\hat{x}$ is a subsampled version of $x$ (e.g., by pooling). We perform this in the spatial domain, which reduces the amount of pairwise computation to one quarter. This trick does not alter the non-local behavior; it only makes the computation sparser. It can be implemented by adding a max pooling layer after $\phi$ and $g$ in Figure 2. We use these efficient modifications for all non-local blocks studied in this paper.
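The savings from the bottleneck and from subsampling can be checked with a little arithmetic. This sketch counts only the multiply-adds of the two matrix products in Figure 2 (ignoring the 1×1×1 convolutions and the softmax); `pairwise_madds` is a hypothetical helper, and `pool=2` assumes 2×2 spatial max pooling.

```python
def pairwise_madds(t: int, h: int, w: int, inner_channels: int,
                   pool: int = 1) -> int:
    """Multiply-adds of the two matrix products in a non-local block.

    theta @ phi^T costs N * N' * C' and (N x N') @ g costs another
    N * N' * C', where N = T*H*W query positions, C' = inner_channels, and
    N' = T*(H/pool)*(W/pool) key positions after spatial max pooling of
    phi and g (pooling is spatial only, so T is unchanged).
    """
    n = t * h * w
    n_keys = t * (h // pool) * (w // pool)
    return 2 * n * n_keys * inner_channels


full = pairwise_madds(4, 14, 14, 512)            # no subsampling
pooled = pairwise_madds(4, 14, 14, 512, pool=2)  # 2x2 max pooling on phi and g
```

With T = 4, H = W = 14 and a 512-channel bottleneck this gives 629,407,744 multiply-adds without pooling and 157,351,936 with pooling, exactly one quarter; going from 1024 to 512 inner channels halves the count, matching the bottleneck argument.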

2.4. Understanding the efficient implementation of Non-local with the diagram

Taking the embedded Gaussian version as an example:

- $x$ represents the feature map; $x_i$ is the information at the current position of interest, and $x_j$ represents global information.

- θ stands for $\theta(x_i) = W_\theta x_i$, implemented in practice as a learned 1×1 convolution.

- φ stands for $\phi(x_j) = W_\phi x_j$, also implemented as a learned 1×1 convolution.

- $g$ is implemented the same way.

- $\mathcal{C}(x)$ is the normalization; in the embedded Gaussian version it is realized by a softmax over $j$.

The pairwise computation of a non-local block can be very lightweight when the block is used at high levels, on smaller feature maps. For example, typical values in Figure 2 are T = 4 and H = W = 14 or 7. Computing the pairwise function with matrix operations then costs about as much as a conv layer. The following tricks make it even more efficient.

We set the channel count of $W_g$, $W_\theta$, and $W_\phi$ to half that of $x$. This forms a bottleneck that reduces the data in the channel domain and roughly halves the computation. $W_z$ then restores the channel count of $x$, so the input and output dimensions match.

A subsampling trick can be used as well, changing Eq. (1) to $y_i = \frac{1}{\mathcal{C}(\hat{x})} \sum_{\forall j} f(x_i, \hat{x}_j)\, g(\hat{x}_j)$, where $\hat{x}$ is obtained by downsampling $x$ (e.g., by pooling). Applied in the spatial domain, it reduces the pairwise computation to one quarter. The trick does not change the non-local behavior; it only makes the computation sparser. It can be implemented by adding a max pooling layer after φ and g in Figure 2.
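The subsampling trick can be sketched on a small 2D grid. Every query position still receives an output, but $\phi$ and $g$ are max-pooled first, so each query attends to a quarter as many keys. The sketch assumes the embeddings $\theta$, $\phi$, $g$ are already computed, even H and W, 2×2 pooling with stride 2, and the embedded Gaussian (softmax) normalization; all helper names are hypothetical.

```python
import math
from typing import List

Grid = List[List[List[float]]]  # H x W x C


def max_pool_2x2(grid: Grid) -> Grid:
    """2x2 max pooling with stride 2 (assumes even H and W)."""
    return [[[max(grid[2 * r][2 * c][k], grid[2 * r][2 * c + 1][k],
                  grid[2 * r + 1][2 * c][k], grid[2 * r + 1][2 * c + 1][k])
              for k in range(len(grid[0][0]))]
             for c in range(len(grid[0]) // 2)]
            for r in range(len(grid) // 2)]


def flatten(grid: Grid) -> List[List[float]]:
    return [pixel for row in grid for pixel in row]


def subsampled_non_local(theta: Grid, phi: Grid, g: Grid) -> List[List[float]]:
    """Embedded Gaussian non-local op with phi and g max-pooled (x-hat)."""
    queries = flatten(theta)             # all H*W positions
    keys = flatten(max_pool_2x2(phi))    # H*W/4 pooled positions
    values = flatten(max_pool_2x2(g))
    out = []
    for q in queries:
        logits = [sum(a * b for a, b in zip(q, k)) for k in keys]
        m = max(logits)
        exps = [math.exp(s - m) for s in logits]
        total = sum(exps)  # softmax normalization over the pooled keys
        out.append([sum(e * v[d] for e, v in zip(exps, values)) / total
                    for d in range(len(values[0]))])
    return out
```

With $\theta$ set to zero the attention is uniform, so each of the 16 outputs on a 4×4 grid is the mean of the 4 pooled $g$ values.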

All non-local blocks in the paper use the efficiency strategies above.

(Section 2.4 partially references: [Paper notes] Non-local Neural Networks)

边栏推荐

- Deep analysis of epoll reactor code

- Dynamic programming Backpack - 01 Backpack

- Electric store stores data

- Creation and use of MySQL index

- Example of dynamic programming 3 leetcode 55

- C language - minesweeping

- How micro engine uploads remote attachments

- Ranorex Studio 10.1 Crack

- How to choose the years of investment in financial products?

- Implementation of websocket long connection by workman under laravel

猜你喜欢

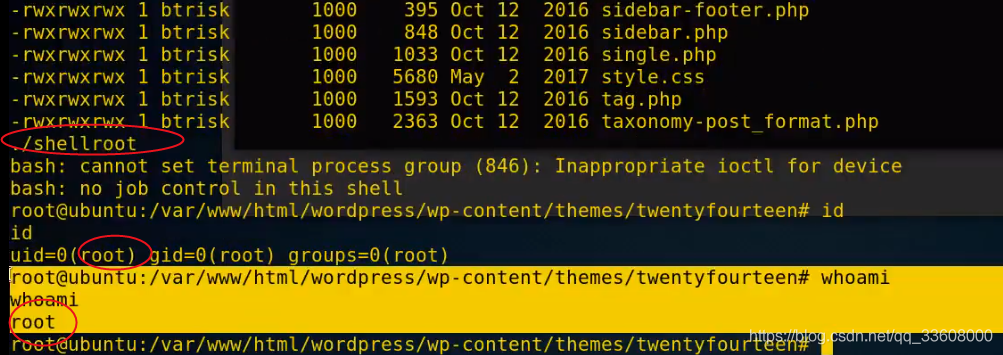

File upload vulnerability (III)

Double recursion in deep analysis merge sort

Professional things use professional people

Personalized Federated Learning with Moreau Envelopes

PHP calls map API

TX Text Control 30.0 ActiveX

Ranorex Studio 10.1 Crack

Essais de pénétration - sujets d'autorisation

Go deep into the working principle of browser and JS engine (V8 engine as an example)

CTFHUB SSRF

随机推荐

[Huawei machine test] hj16 shopping list

Abuse unlimited authorization -- is your address safe?

Enhanced paste quill editor

Array: force deduction dichotomy

Laravel's little knowledge

UVA816 Abbott’s Revenge

How to use the Magic pig system reinstallation master

电子协会 C语言 1级 28 、字符菱形

Basic knowledge of web pages (URL related)

JSON Library Tutorial from scratch (III): parsing strings, learning and sorting notes

Tanhaoqiang C language practice

TX Text Control 30.0 ActiveX

HR took the initiative to raise the salary of the test lady. How did she do it?

Go Concurrency

Dynamic programming full backpack

Svg code snippet of loading animation

Compatible with Internet Explorer

3.2.3 use tcpdump to observe TCP header information (supplement common knowledge of TCP protocol)

Characteristics of ES6 arrow function

Jason learning