
PyTorch Study Notes (2): Using Neural Networks

2022-07-26 13:29:00 【小胡今天有变强吗】

The basic skeleton of a neural network: using nn.Module

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()

    def forward(self, input):
        # The forward pass simply adds 1 to the input
        output = input + 1
        return output


tudui = Tudui()
x = torch.tensor(1)
output = tudui(x)  # calling the module runs forward()
print(output)
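
Calling `tudui(x)` instead of `tudui.forward(x)` works because `nn.Module` defines `__call__`, which runs any registered hooks and then dispatches to `forward`. The dependency-free sketch below imitates only that dispatch step; `MiniModule` and `AddOne` are hypothetical names used for illustration and are not part of PyTorch.

```python
class MiniModule:
    """Hypothetical stand-in for nn.Module: only the __call__ -> forward dispatch."""

    def __call__(self, *args, **kwargs):
        # The real nn.Module also runs hooks here; this sketch skips them.
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must implement forward()")


class AddOne(MiniModule):
    def forward(self, value):
        return value + 1


add_one = AddOne()
result = add_one(1)  # dispatches to AddOne.forward
print(result)
```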

Convolution

import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

# F.conv2d expects 4-D tensors: (batch, channels, height, width) for the input
# and (out_channels, in_channels, height, width) for the kernel
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))

print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)
print(output)

output2 = F.conv2d(input, kernel, stride=2)
print(output2)

output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)

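Here, input is the input image, kernel is the convolution kernel itself (the weight tensor, not just its size), stride is the step by which the kernel moves across the input, and padding is the number of zeros added around each edge of the input.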

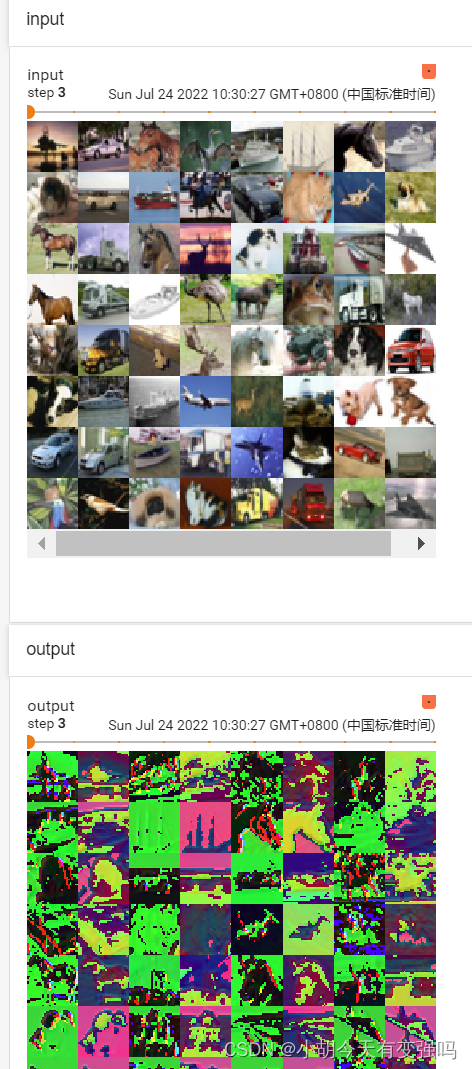
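
To make the roles of stride and padding concrete, here is a minimal pure-Python sketch of the sliding-window computation for a single channel, applied to the same 5x5 input and 3x3 kernel. The function name `conv2d` and the nested-list representation are assumptions made for illustration; this is a reference loop, not how PyTorch implements the operation.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Naive single-channel 2-D cross-correlation over nested lists."""
    height, width = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])

    # Surround the image with `padding` rows/columns of zeros
    padded = [[0] * (width + 2 * padding) for _ in range(height + 2 * padding)]
    for r in range(height):
        for c in range(width):
            padded[r + padding][c + padding] = image[r][c]

    out_h = (height + 2 * padding - k_h) // stride + 1
    out_w = (width + 2 * padding - k_w) // stride + 1

    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Multiply the kernel with the window element-wise and sum
            row.append(sum(kernel[a][b] * padded[top + a][left + b]
                           for a in range(k_h) for b in range(k_w)))
        output.append(row)
    return output


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d(image, kernel, stride=1))             # 3x3 result
print(conv2d(image, kernel, stride=2))             # 2x2 result
print(conv2d(image, kernel, stride=1, padding=1))  # 5x5 result
```

With stride=1 the window visits every position, with stride=2 it skips every other one, and padding=1 keeps the output the same size as the input.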

Neural network: convolutional layer

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data_conv2d", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()

writer = SummaryWriter("../logs")
step = 0
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(imgs.shape)
    print(output.shape)
    # imgs: torch.Size([64, 3, 32, 32])
    writer.add_images("input", imgs, step)
    # output: torch.Size([64, 6, 30, 30]) -> [xxx, 3, 30, 30],
    # since a 6-channel tensor cannot be displayed as an image
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step += 1

writer.close()

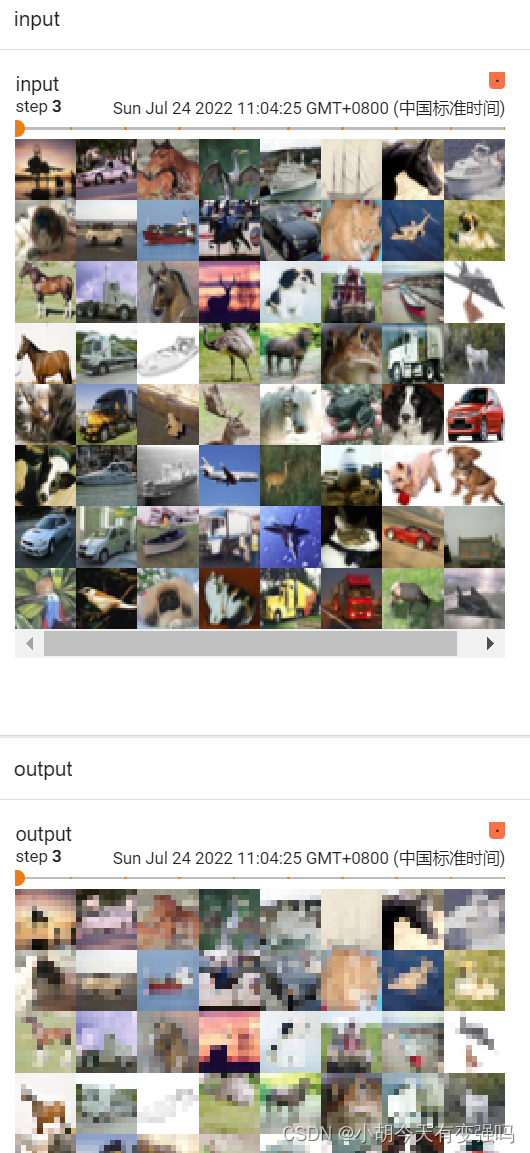
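
The `xxx` in the reshape comment is whatever batch size `-1` resolves to. A short arithmetic sketch follows; the helper name `inferred_batch` is hypothetical, and the logic only mirrors how a `-1` dimension is inferred from the total element count.

```python
def inferred_batch(shape, channels, height, width):
    """Return the size that -1 resolves to when reshaping to (-1, C, H, W)."""
    total = 1
    for dim in shape:
        total *= dim
    per_image = channels * height * width
    if total % per_image != 0:
        raise ValueError("shape is not compatible with the requested reshape")
    return total // per_image


# A full batch: 64 six-channel maps become 128 three-channel images
full_batch = inferred_batch((64, 6, 30, 30), 3, 30, 30)
# The last CIFAR10 test batch holds 10000 % 64 = 16 images
last_batch = inferred_batch((16, 6, 30, 30), 3, 30, 30)
print(full_batch, last_batch)
```

Each 6-channel feature map is split into two 3-channel images, so TensorBoard shows twice as many output tiles as input tiles.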

Neural network: using max pooling

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

# input = torch.tensor([[1, 2, 0, 3, 1],
#                       [0, 1, 2, 3, 1],
#                       [1, 2, 1, 0, 0],
#                       [5, 2, 3, 1, 1],
#                       [2, 1, 0, 1, 1]], dtype=torch.float32)
# input = torch.reshape(input, (-1, 1, 5, 5))
# print(input.shape)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # ceil_mode=True keeps windows that only partly overlap the input
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
# output = tudui(input)
# print(output)

writer = SummaryWriter("../logs_maxpool")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step += 1

writer.close()
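
The effect of `ceil_mode` is easiest to see on the small 5x5 matrix that is commented out above. The following pure-Python sketch is an illustrative reference loop (the function `max_pool2d` and its nested-list input are assumptions, not PyTorch code); it uses the default stride, which equals the kernel size.

```python
import math


def max_pool2d(image, kernel_size, stride=None, ceil_mode=False):
    """Naive single-channel max pooling over nested lists."""
    stride = stride or kernel_size
    height, width = len(image), len(image[0])

    def out_size(n):
        steps = (n - kernel_size) / stride
        size = (math.ceil(steps) if ceil_mode else math.floor(steps)) + 1
        # In ceil mode the last window must still start inside the input
        if ceil_mode and (size - 1) * stride >= n:
            size -= 1
        return size

    output = []
    for i in range(out_size(height)):
        row = []
        for j in range(out_size(width)):
            top, left = i * stride, j * stride
            # Windows that run past the edge are simply truncated
            window = [image[r][c]
                      for r in range(top, min(top + kernel_size, height))
                      for c in range(left, min(left + kernel_size, width))]
            row.append(max(window))
        output.append(row)
    return output


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]

pooled_ceil = max_pool2d(image, kernel_size=3, ceil_mode=True)
pooled_floor = max_pool2d(image, kernel_size=3, ceil_mode=False)
print(pooled_ceil)
print(pooled_floor)
```

With `ceil_mode=False` only the single complete 3x3 window is kept; with `ceil_mode=True` the partial windows along the right and bottom edges also contribute a value.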

Neural network: non-linear activation

import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()

    def forward(self, input):
        output = self.relu1(input)
        return output


tudui = Tudui()
output = tudui(input)
print(output)
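
ReLU acts on each element independently: negative values become 0 and non-negative values pass through unchanged. A tiny plain-Python sketch on the same four numbers (the nested-list representation is only for illustration):

```python
values = [[1, -0.5],
          [-1, 3]]

# ReLU(x) = max(0, x), applied element by element
activated = [[max(0, v) for v in row] for row in values]
print(activated)
```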

Linear layer

import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True discards the final incomplete batch, so every batch
# flattens to exactly 64 * 3 * 32 * 32 = 196608 values
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


tudui = Tudui()

for data in dataloader:
    imgs, target = data
    print(imgs.shape)
    # torch.flatten collapses all dimensions, including the batch dimension
    output = torch.flatten(imgs)
    print(output.shape)
    output = tudui(output)
    print(output.shape)

![image](/img/8c/abef5abaf51b0a39020df10b290f99.png)

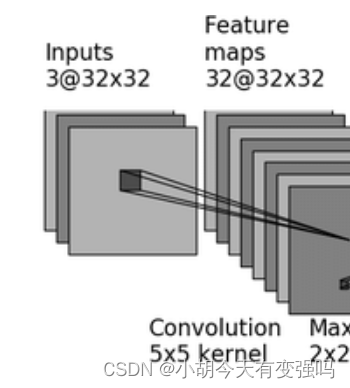
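
The input size 196608 comes from flattening an entire batch into one vector, because `torch.flatten(imgs)` merges the batch dimension along with the others. The sketch below checks that arithmetic and shows what a linear layer computes, y = Wx + b, on a toy example; the helper `linear` and the toy weights are hypothetical.

```python
batch_size, channels, height, width = 64, 3, 32, 32
flattened_length = batch_size * channels * height * width
print(flattened_length)


def linear(x, weight, bias):
    """y = W x + b for a single input vector, using plain lists."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weight, bias)]


x = [1.0, 2.0, 3.0]              # 3 input features
weight = [[1.0, 0.0, -1.0],      # 2 output features, so W is 2x3
          [0.5, 0.5, 0.5]]
bias = [0.0, 1.0]
y = linear(x, weight, bias)
print(y)
```

To keep one row per image instead, `nn.Flatten()` or `torch.flatten(imgs, start_dim=1)` leaves the batch dimension in place, which is what the next section relies on.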

Building a network in practice and using Sequential

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


tudui = Tudui()
print(tudui)

# A dummy batch is enough to check that the layer shapes fit together
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

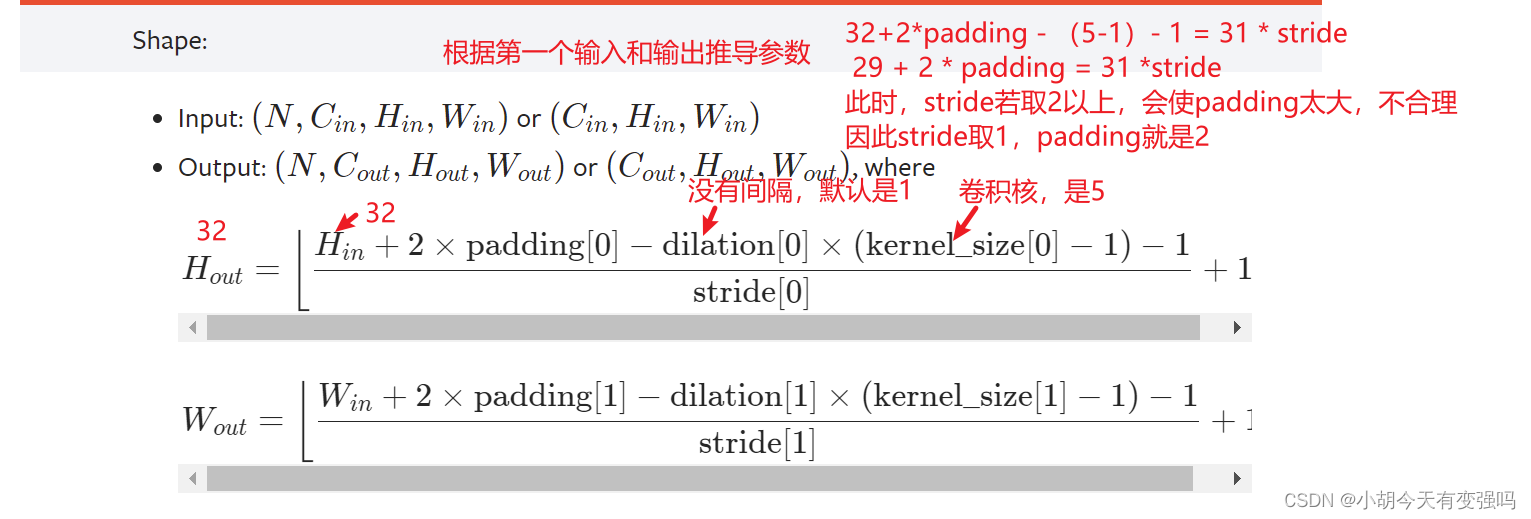

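Note that padding=2 is not arbitrary. It follows from the Conv2d output-size formula (shown here for the height; the width is analogous):

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} \right\rfloor + 1$$

With H_in = H_out = 32, kernel_size = 5, stride = 1 and dilation = 1, this gives 32 = 32 + 2 × padding - 4, so padding = 2.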
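
The padding value and the `Linear(1024, 64)` input size can both be checked numerically with the standard Conv2d output-size formula. This is a small sketch; the helper names `conv_out` and `pool_out` are hypothetical, and `pool_out` assumes the `MaxPool2d` defaults (stride equal to the kernel size, no padding, floor mode).

```python
def conv_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of a Conv2d layer."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def pool_out(size, kernel_size):
    """Output height/width of MaxPool2d(kernel_size) with default settings."""
    return (size - kernel_size) // kernel_size + 1


# Smallest padding that keeps a 32x32 input at 32x32 with a 5x5 kernel
padding = next(p for p in range(10) if conv_out(32, 5, padding=p) == 32)
print(padding)

# Trace the spatial size through three conv + pool stages
size = 32
for _ in range(3):
    size = conv_out(size, 5, padding=2)  # convolution keeps the size
    size = pool_out(size, 2)             # pooling halves it
flattened = 64 * size * size             # 64 channels after the last conv
print(size, flattened)
```

The spatial size goes 32, 16, 8, 4, so the flattened feature vector has 64 x 4 x 4 = 1024 entries per image.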

Using Sequential:

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # self.conv1 = Conv2d(3, 32, 5, padding=2)
        # self.maxpool1 = MaxPool2d(2)
        # self.conv2 = Conv2d(32, 32, 5, padding=2)
        # self.maxpool2 = MaxPool2d(2)
        # self.conv3 = Conv2d(32, 64, 5, padding=2)
        # self.maxpool3 = MaxPool2d(2)
        # self.flatten = Flatten()
        # self.linear1 = Linear(1024, 64)
        # self.linear2 = Linear(64, 10)
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.maxpool1(x)
        # x = self.conv2(x)
        # x = self.maxpool2(x)
        # x = self.conv3(x)
        # x = self.maxpool3(x)
        # x = self.flatten(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.model1(x)
        return x


tudui = Tudui()
print(tudui)

input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

# Write the model graph so it can be inspected in TensorBoard
writer = SummaryWriter("../logs_seq")
writer.add_graph(tudui, input)
writer.close()

In TensorBoard's graph view, double-clicking a module in the model expands it to show its details.
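
Conceptually, Sequential just feeds the output of each layer into the next one in the order given. A stripped-down sketch of that idea, where `MiniSequential` is a hypothetical class and plain functions stand in for layers:

```python
class MiniSequential:
    """Hypothetical stand-in for nn.Sequential: applies callables in order."""

    def __init__(self, *layers):
        self.layers = layers

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)  # each layer consumes the previous layer's output
        return x


pipeline = MiniSequential(
    lambda v: v + 1,
    lambda v: v * 2,
    lambda v: v - 3,
)
result = pipeline(5)  # ((5 + 1) * 2) - 3
print(result)
```

This is why the nine explicit calls in `forward` collapse into the single call `self.model1(x)`.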

Loss functions and backpropagation

import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# reduction='sum' adds up the absolute errors instead of averaging them
loss = L1Loss(reduction='sum')
result = loss(inputs, targets)
print(result)
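
The L1 loss is the absolute difference between prediction and target, either summed or averaged over all elements. A plain-Python sketch on the same numbers (the function `l1_loss` is a hypothetical helper written for illustration):

```python
def l1_loss(inputs, targets, reduction="mean"):
    """Sum or mean of the element-wise absolute errors."""
    errors = [abs(a - b) for a, b in zip(inputs, targets)]
    if reduction == "sum":
        return sum(errors)
    if reduction == "mean":
        return sum(errors) / len(errors)
    raise ValueError(f"unsupported reduction: {reduction}")


inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

loss_sum = l1_loss(inputs, targets, reduction="sum")    # |0| + |0| + |-2|
loss_mean = l1_loss(inputs, targets, reduction="mean")  # the same total divided by 3
print(loss_sum, loss_mean)
```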

# Computing the cross-entropy loss of the CIFAR10 network
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    outputs = tudui(imgs)
    # outputs holds 10 raw class scores; targets holds the true class index
    result_loss = loss(outputs, targets)
    print(result_loss)

![image](/img/67/cb2dbfb22f0c2d9e7694464f3ccfe1.png)
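
For a single sample, cross-entropy on raw scores is the log of the summed exponentials minus the score of the true class. The sketch below computes it by hand; the function `cross_entropy` and the example scores are assumptions chosen for illustration, not values from the network above.

```python
import math


def cross_entropy(logits, target):
    """loss = -logits[target] + log(sum(exp(logit) for each class))."""
    log_sum_exp = math.log(sum(math.exp(v) for v in logits))
    return log_sum_exp - logits[target]


# Three classes, true class index 1
loss_value = cross_entropy([0.1, 0.2, 0.3], 1)
print(round(loss_value, 4))

# Equal scores for 10 classes give log(10), the loss of a uniform guess
uniform_loss = cross_entropy([0.0] * 10, 3)
print(round(uniform_loss, 4))
```

An untrained 10-class network typically starts near the uniform-guess value, which is a useful sanity check on the first printed losses.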

Optimizer

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()       # clear gradients left over from the previous step
        result_loss.backward()  # backpropagate to compute new gradients
        optim.step()            # update the parameters
        # .item() extracts a Python float so the computation graph is not kept alive
        running_loss = running_loss + result_loss.item()
    # Total loss over the epoch; it should decrease as training progresses
    print(running_loss)

![image](/img/59/2305bafef741feb1542b196c7d7bd5.png)
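
The zero_grad / backward / step cycle boils down to repeatedly moving each parameter against its gradient. Below is a minimal sketch of plain gradient descent on a one-parameter toy loss, (w - 3)^2; the loss function, starting point, and learning rate are assumptions chosen for illustration.

```python
def loss_gradient(w):
    """Gradient of the toy loss (w - 3)^2 with respect to w."""
    return 2.0 * (w - 3.0)


w = 0.0    # initial parameter value
lr = 0.1   # learning rate
for _ in range(50):
    grad = loss_gradient(w)  # plays the role of backward()
    w = w - lr * grad        # plays the role of optim.step()
print(w)
```

Here the gradient is recomputed from scratch on every pass, so nothing needs resetting. PyTorch instead accumulates gradients into each parameter's `.grad`, which is why `optim.zero_grad()` is called before every `backward()`.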


边栏推荐

- 算法--连续数列(Kotlin)

- Huawei computer test ~ offset realizes string encryption

- Solution 5g technology helps build smart Parks

- Hcip day 11 comparison (BGP configuration and release)

- Unity中序列化类为json格式

- Square root of leetcode 69. x

- Learn about Pinia state getters actions plugins

- MySQL data directory (2) -- table data structure (XXV)

- JUC总结

- Flutter multi-channel packaging operation

猜你喜欢

Algorithm -- continuous sequence (kotlin)

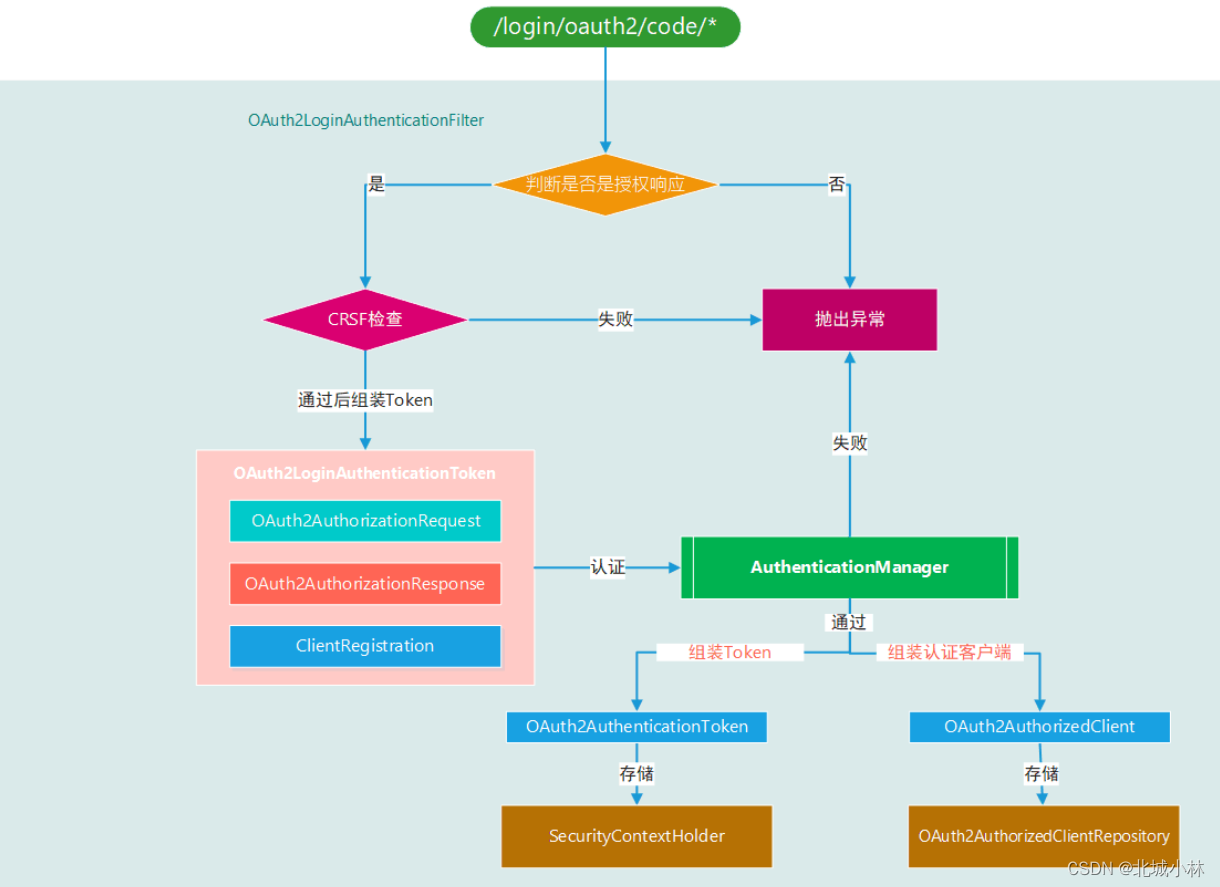

【Oauth2】五、OAuth2LoginAuthenticationFilter

Uncover the secret of white hat: 100 billion black products on the Internet scare musk away

【花雕动手做】有趣好玩的音乐可视化系列小项目(13)---有机棒立柱灯

File upload and download performance test based on the locust framework

Photoshop (cc2020) unfinished

Unity中序列化类为json格式

El table implements editable table

云智技术论坛工业专场 明天见!

Chat system based on webrtc and websocket

随机推荐

Huawei computer test ~ offset realizes string encryption

Leetcode 2119. number reversed twice

异步线程池在开发中的使用

HCIP第十一天比较(BGP的配置、发布)

从其他文件触发pytest.main()注意事项

Chat system based on webrtc and websocket

Algorithm -- continuous sequence (kotlin)

Concept and handling of exceptions

Photoshop(CC2020)未完

【开源之美】nanomsg(2) :req/rep 模式

JUC总结

【黑马早报】字节旗下多款APP下架;三只松鼠脱氧剂泄露致孕妇误食;CBA公司向B站索赔4.06亿;马斯克否认与谷歌创始人妻子有婚外情...

深度学习3D人体姿态估计国内外研究现状及痛点

AI-理论-知识图谱1-基础

从标注好的xml文件中截取坐标点(人脸框四个点坐标)人脸图像并保存在指定文件夹

Multi objective optimization series 1 --- explanation of non dominated sorting function of NSGA2

B+ tree index use (9) grouping, back to table, overlay index (21)

估值15亿美元的独角兽被爆裁员,又一赛道遇冷?

Tianjin emergency response Bureau and central enterprises in Tianjin signed an agreement to deepen the construction of emergency linkage mechanism

图扑 3D 可视化国风设计 | 科技与文化碰撞炫酷”火花“