[Deep learning] Beaten by PyTorch! Google abandons TensorFlow and bets on JAX

2022-06-21 15:45:00 【Demeanor 78】

Reprinted from | Xinzhiyuan (新智元)

I really like what one netizen said:

「If this kid really isn't working out, let's have another one.」

Google did just that.

After seven years, TensorFlow has finally been beaten by Meta's PyTorch, at least to some extent.

Seeing that things were going wrong, Google quickly set about raising another one: JAX, a new machine learning framework.

You have probably heard of DALL·E Mini, which has been wildly popular lately. Its model is written in JAX so that it can take full advantage of Google's TPUs.

1

『The twilight of TensorFlow and the rise of PyTorch』

In 2015, Google released TensorFlow, its machine learning framework.

At the time, TensorFlow was just a small project at Google Brain.

Nobody expected that TensorFlow would become hugely popular almost as soon as it came out.

Big companies such as Uber and Airbnb were using it, as were government agencies such as NASA, and they were using it on their most complex projects.

As of November 2020, TensorFlow had been downloaded 160 million times.

However, Google did not seem to care much about how all those users felt.

An awkward interface and frequent updates made TensorFlow increasingly unfriendly to users and increasingly hard to work with.

Even inside Google, people felt that the framework was going downhill.

In fairness, Google had little choice but to update so often. It was the only way to keep up with the rapid pace of iteration in machine learning.

As a result, more and more people joined the project, and the team as a whole slowly lost its focus.

The strengths that had originally made TensorFlow the tool of choice were buried under a mass of features and no longer received attention.

Insider describes this as a 「cat-and-mouse game」. The company is the cat, and the new requirements that emerge with each iteration are the mice. The cat has to stay alert and be ready to pounce at any moment.

This dilemma is hard to avoid for a company that is the first to break into a market.

Take search engines: Google was not the first, so it could learn from the failures of predecessors such as AltaVista and Yahoo and apply those lessons to its own development.

Unfortunately, with TensorFlow, Google was the one caught in the trap.

For these reasons, developers who had once worked for Google slowly lost confidence in their old employer.

The once-ubiquitous TensorFlow gradually declined and lost ground to Meta's rising star, PyTorch.

In 2017, a beta version of PyTorch was open-sourced.

In 2018, Facebook's artificial intelligence research lab released the full version of PyTorch.

It is worth noting that both PyTorch and TensorFlow are built on Python, but Meta puts more emphasis on maintaining the open source community and invests substantial resources in it.

Meta also paid attention to Google's problems and resolved not to repeat them. It focused on a small set of features and made those features as good as possible.

Meta did not follow in Google's footsteps. The framework, first developed at Facebook, has gradually become an industry benchmark.

A research engineer at a machine learning startup said, 「We basically use PyTorch. Its community and open source support are the best. Questions actually get answered, and the examples given are very practical.」

Faced with this situation, Google developers, hardware experts, cloud providers, and others close to Google's machine learning work all said the same thing in interviews: TensorFlow has lost the hearts of developers.

After a series of open and covert struggles, Meta finally gained the upper hand.

Some experts say that Google's chance to keep leading machine learning is slowly slipping away.

PyTorch has gradually become the tool of choice for ordinary developers and researchers.

Engagement data from Stack Overflow shows that questions about PyTorch on the developer forum keep growing, while questions about TensorFlow have been flat in recent years.

Even Uber and the other companies mentioned earlier in this article have switched to PyTorch.

Every subsequent PyTorch update even seems like a slap in TensorFlow's face.

2

『The future of Google machine learning: JAX』

Just as TensorFlow and PyTorch were fighting it out, a small 「dark horse」 research team inside Google began working on a new framework that would make TPUs easier to use.

In 2018, a paper titled "Compiling machine learning programs via high-level tracing" brought the JAX project to the surface. Its authors are Roy Frostig, Matthew James Johnson, and Chris Leary.

(Pictured, from left to right: the three authors.)

Later, Adam Paszke, one of the original authors of PyTorch, also joined the JAX team in early 2020.

JAX offers a more direct way to handle one of the hardest problems in machine learning: scheduling work across multiple processor cores.

Depending on the application, JAX automatically groups several chips together to work as a team, rather than leaving a single chip to work alone.

The benefit is that as many TPUs as possible can be brought to bear within moments, fueling our 「alchemy」 (community slang for model training).

In the end, compared with the bloated TensorFlow, JAX solved a major problem inside Google: how to get quick access to TPUs.

Here is a brief introduction to Autograd and XLA, the two components that make up JAX.

Autograd is mainly used for gradient-based optimization and can automatically differentiate Python and NumPy code.

It handles a subset of Python that includes loops, recursion, and closures, and it can also take derivatives of derivatives.

In addition, Autograd supports reverse-mode differentiation (backpropagation), which means it can efficiently compute the gradient of a scalar-valued function with respect to array-valued arguments. It also supports forward-mode differentiation, and the two modes can be composed arbitrarily.
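To make these properties concrete, here is a minimal sketch using JAX's own differentiation API rather than the standalone Autograd package. The functions `f` and `loss` and the input values are made up for illustration and do not come from the original article.

```python
import jax
import jax.numpy as jnp

def f(x):
    # A scalar function written with an ordinary Python loop.
    y = x
    for _ in range(3):
        y = jnp.sin(y)
    return y

df = jax.grad(f)              # first derivative (reverse mode)
d2f = jax.grad(jax.grad(f))   # derivative of the derivative

def loss(w):
    # Scalar-valued function of an array-valued argument.
    return jnp.sum(w ** 2)

w = jnp.array([1.0, 2.0, 3.0])
g = jax.grad(loss)(w)         # gradient has the same shape as w; here it equals 2 * w

# Forward mode (jacfwd) composed over reverse mode (grad) yields the Hessian.
hessian = jax.jacfwd(jax.grad(loss))(w)
```

Calling `df(0.0)` or `d2f(0.0)` evaluates the derivatives at a point. Forward-over-reverse is only one of the possible combinations; `jax.jacrev` and `jax.jvp` can be mixed in the same way.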

XLA (Accelerated Linear Algebra) can accelerate TensorFlow models without changes to the source code.

When a TensorFlow program runs, every operation is executed individually by the executor. Each operation has a precompiled GPU kernel implementation, and the executor dispatches to that kernel.

For example:

    import tensorflow as tf

    def model_fn(x, y, z):
        return tf.reduce_sum(x + y * z)  # multiply, add, then reduce to a scalar

Without XLA, this snippet launches three kernels: one for the multiplication, one for the addition, and one for the reduction.

XLA can optimize this by 「fusing」 the addition, multiplication, and reduction into a single GPU kernel.

The fused operation does not write the intermediate values produced by y*z and x+y*z out to memory. Instead, it 「streams」 the results of these intermediate computations directly to their consumers and keeps them entirely on the GPU.
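The same `model_fn` computation can be sketched in JAX, where `jax.jit` hands the traced function to XLA for compilation. This is an illustration only: the input values are made up, and whether the three operations actually end up in a single fused kernel depends on the backend and the compiler version.

```python
import jax
import jax.numpy as jnp

def model_fn(x, y, z):
    # Same computation as the TensorFlow snippet: multiply, add, reduce.
    return jnp.sum(x + y * z)

# jax.jit traces model_fn once and compiles the whole function with XLA,
# which gives the compiler the chance to fuse the element-wise ops and the reduction.
model_fn_xla = jax.jit(model_fn)

x = jnp.arange(4.0)          # [0., 1., 2., 3.]
y = jnp.ones((4,))
z = jnp.full((4,), 2.0)

eager = model_fn(x, y, z)         # op-by-op execution
compiled = model_fn_xla(x, y, z)  # XLA-compiled execution, same result
```

Both calls return the same value; the difference is that the compiled version is optimized as one unit instead of being dispatched operation by operation.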

In practice, XLA can deliver roughly a 7x performance improvement and about a 5x improvement in batch size.

In addition, XLA and Autograd can be composed arbitrarily, and the pmap method even lets you program multiple GPUs or TPU cores at once.
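As a rough sketch of what pmap looks like in practice, the snippet below runs one function on every locally available device and combines the per-device results with a collective sum. The function name `per_device`, the axis name `"devices"`, and the data are illustrative assumptions. On a machine with a single device, the code still runs with one shard.

```python
import jax
import jax.numpy as jnp

n = jax.local_device_count()  # number of devices visible to this process

# One row of data per device: the leading axis must match the device count.
xs = jnp.arange(n * 4, dtype=jnp.float32).reshape(n, 4)

def per_device(x):
    # Each device computes a partial sum of squares on its own shard...
    local = jnp.sum(x ** 2)
    # ...and psum adds the partial results across all devices.
    return jax.lax.psum(local, axis_name="devices")

totals = jax.pmap(per_device, axis_name="devices")(xs)
# totals has shape (n,): every device holds the same global sum.
```

Because pmap compiles the function with XLA, it composes with `jax.grad`, for example by differentiating inside `per_device` and summing gradients with `psum`.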

Combining JAX with Autograd and NumPy gives you an easy-to-program, high-performance machine learning system that targets CPUs, GPUs, and TPUs.

Clearly, Google has learned its lesson this time. Besides rolling JAX out fully in-house, it has also been unusually active in building an open source ecosystem.

In 2020, DeepMind officially embraced JAX, which also signaled that Google itself was stepping into the ring. Since then, a steady stream of open source libraries has appeared.

Looking back over this whole 「open and covert struggle」, Jia Yangqing commented that, amid the criticism of TensorFlow, the AI systems community came to believe that Pythonic research was all that was needed.

But on the one hand, pure Python cannot achieve efficient hardware-software co-design, and on the other hand, upper-level distributed systems still need efficient abstractions.

JAX is looking for a better balance, and Google's pragmatism in being willing to disrupt itself is worth learning from.

The author of the causact R package and a related Bayesian analysis textbook said it was good to see Google moving from TF to JAX, a cleaner solution.

3

『Google's challenge』

As a newcomer, JAX can learn from the strengths of its two predecessors, PyTorch and TensorFlow, but starting late can also bring disadvantages.

First, JAX is still too 「young」. As an experimental framework, it is far from meeting the standard of a mature Google product.

Apart from various hidden bugs, JAX still depends on other frameworks for some tasks.

Take data loading and preprocessing: you need TensorFlow or PyTorch to handle most of the setup.

Clearly, this is still a long way from the ideal of a 「one-stop」 framework.

Second, JAX is highly optimized mainly for TPUs, and it fares much worse on GPUs and CPUs.

On the one hand, Google's organizational and strategic chaos from 2018 to 2021 left GPU support underfunded and gave GPU-related issues a lower priority.

At the same time, perhaps because Google was too focused on using its own TPUs to win a bigger slice of the AI cake, it cooperated very little with NVIDIA, let alone polished details such as GPU support.

On the other hand, Google's own internal research is, unsurprisingly, concentrated on TPUs, which has cost Google a good feedback loop on GPU usage.

In addition, longer debugging times, the lack of Windows compatibility, and the risk of untracked side effects all raise the barrier to entry for JAX and make it less user-friendly.

Today PyTorch is almost six years old, yet it shows none of the decline that TensorFlow showed at the same age.

So it seems that JAX still has a long way to go if it wants to come from behind.

Reference:

https://www.businessinsider.com/facebook-pytorch-beat-google-tensorflow-jax-meta-ai-2022-6

Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download Chinese University Courses 《 machine learning 》( Huang haiguang keynote speaker ) Print materials such as machine learning and in-depth learning notes 《 Statistical learning method 》 Code reproduction album machine learning communication qq Group 955171419, Please scan the code to join wechat group

边栏推荐

- [Yugong series] February 2022 wechat applet -app Networktimeout of JSON configuration attribute

- Taishan Office Technology Lecture: storage structure of domain in model

- Someone is storing credit card data - how do they do it- Somebody is storing credit card data - how are they doing it?

- Comprehensive learning notes for intermediate network engineer in soft test (nearly 40000 words)

- Practice of geospatial data in Nepal graph

- 2020-11-12 meter skipping

- 去中心化游戏如何吸引传统玩家?

- [cicadaplayer] read and write of HLS stream

- Somme factorielle

- Gold, silver and four interviews are necessary. The "brand new" assault on the real topic collection has stabilized Alibaba Tencent bytes

猜你喜欢

MNIST model training (with code)

对Integer进行等值比较时踩到的一个坑

Perfect partner of ebpf: cilium connected to cloud native network

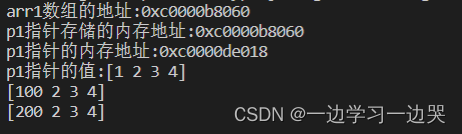

Go language - pointer

Apple was fined by Dutch regulators, totaling about RMB 180million

【数论】leetcode1006. Clumsy Factorial

Go language - Interface

Description of new features and changes in ABP Framework version 5.3.0

“我这个白痴,招到了一堆只会‘谷歌’的程序员!”

Score-Based Generative Modeling through Stochastic Differential Equations

随机推荐

鹅厂一面,有关 ThreadLocal 的一切

Retrieve the compressed package password

R语言使用fs包的file_access函数、file_exists函数、dir_exists函数、link_exists函数分别查看文件是否可以访问、文件是否存在、目录是否存在、超链接是否存在

微服务架构带来的分布式单体

The select drop-down box prohibits drop-down and does not affect presentation submission

My debug Path 1.0

Phantom star VR product details 32: Infinite War

“我这个白痴,招到了一堆只会‘谷歌’的程序员!”

Kubernetes deployment language

[go] time package

Write static multi data source code and do scheduled tasks to realize database data synchronization

A horse stopped a pawn

Xiao Lan does experiments (count the number of primes)

2022awe opened in March, and Hisense conference tablet was shortlisted for the review of EPLAN Award

I don't really want to open an account online. Is it safe to open an account online

我不太想在网上开户,网上股票开户安全吗

Algorithm question: interview question 32 - I. print binary tree from top to bottom (title + idea + code + comments) sequence traversal time and space 1ms to beat 97.84% of users once AC

Elastic stack Best Practices Series: a filebeat memory leak analysis and tuning

What is a good product for children's serious illness insurance? Please recommend it to a 3-year-old child

Not only products, FAW Toyota can give you "all-round" peace of mind