Multi-gate Mixture-of-Experts (MMoE) and code implementation

2022-06-24 11:03:00 【Goose】

Background

In online recommendation, it is often necessary to predict several user behaviors at once, such as follows, likes, and dwell time, so that the ranking strategy can trade them off against each other. This is a multi-task learning problem. This article walks through some commonly used models, such as MMoE, ESMM, and SNR, for easy reference and study.

MMoE

Background and motivation

In industry, neural-network-based multi-task learning is widely used in recommendation and similar scenarios. For example, a recommender system should not only recommend items the user is interested in but also drive conversion and purchase as much as possible, so both user ratings and purchases need to be modeled. Alibaba's earlier ESMM model models click-through rate and conversion rate jointly, and it uses a typical shared-bottom structure. A known problem in multi-task learning is that when the subtasks differ greatly, the multi-task model often performs poorly. The paper introduced here, from a Google content recommendation team, proposes the MMoE model, which accounts for the differences between tasks and achieves good results.

By learning the connections and differences between tasks, a multi-task model can improve the learning efficiency and quality of each task. The most widely used framework is the shared-bottom structure, in which different tasks share the bottom hidden layers. This structure inherently reduces the risk of overfitting, but its effectiveness can suffer from task differences and data distribution. Other structures exist as well: for example, two tasks keep separate parameters and an L2-norm constraint is added between the parameters of the tasks; or each task learns its own set of hidden layers and the model then learns a combination of all of them. Compared with shared-bottom, these models add task-specific parameters, which improves the final result when task differences would otherwise hurt the shared parameters. The drawback is a larger parameter count, which requires more training data, and a more complex model that is harder to deploy in a real production environment.

The paper therefore proposes the Multi-gate Mixture-of-Experts (MMoE) multi-task learning structure. MMoE models the relationships between tasks and learns task-specific functions on top of shared representations, while avoiding a significant increase in parameters.

Model introduction

The MMoE structure (panel c of the figure below) builds on the widely used Shared-Bottom structure (panel a) and the MoE structure; panel (b) is a special case of (c). Each is introduced in turn.

Shared-Bottom Multi-task Model

As shown in panel (a), the shared-bottom network (denoted by the function f) sits at the bottom and is shared by all tasks. On top of it, each of the K subtasks has its own tower network (denoted h^k), and the output of subtask k is y_k=h^k(f(x)).

Mixture-of-Experts(MoE)

The MoE model can be formalized as y=\sum^n_{i=1}g_i(x)f_i(x), where \sum_{i=1}^ng_i(x)=1 and f_i, i=1,...,n, are the n expert networks (each expert network can be regarded as a neural network).

g is the gating network that combines the experts' results. Specifically, g produces a probability distribution over the n experts, and the final output is the weighted sum of all expert outputs. MoE can therefore be seen as an ensemble method over multiple independent models. Note that the MoE described here does not correspond to panel (b).
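The weighted sum above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the paper's implementation: each expert and the gate are assumed to be a single linear map with hand-picked weights, and `softmax`, `linear`, and `moe_forward` are hypothetical helper names introduced only for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def moe_forward(x, expert_weights, gate_weights):
    """Compute y = sum_i g_i(x) * f_i(x) with linear experts f_i."""
    gate = softmax(linear(gate_weights, x))               # g(x): one weight per expert
    expert_outs = [linear(w, x) for w in expert_weights]  # f_i(x) for every expert
    out_dim = len(expert_outs[0])
    y = [sum(g * out[d] for g, out in zip(gate, expert_outs))
         for d in range(out_dim)]
    return y, gate


# Illustrative weights (assumed): two experts, 2-d input, 2-d output.
experts = [
    [[1.0, 0.0], [0.0, 1.0]],  # expert 1: identity
    [[0.0, 1.0], [1.0, 0.0]],  # expert 2: swaps the two coordinates
]
gate_w = [[1.0, 0.0], [0.0, 1.0]]  # gate scores equal the input itself

y, gate = moe_forward([2.0, 0.0], experts, gate_w)
```

Because the gate weights sum to 1, the output is always a convex combination of the expert outputs; here the first expert receives the larger weight because the first input coordinate is larger.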

Some later work uses MoE as a basic building block and stacks multiple MoE layers in a larger network: an MoE layer takes the output of the previous MoE layer as its input, and its own output becomes the input of the next layer.

Multi-gate Mixture-of-Experts(MMoE)

The goal of MMoE is to capture the differences between tasks without requiring significantly more model parameters than the shared-bottom structure. Its core idea is to replace the function f in the shared-bottom network with an MoE layer, as shown in panel (c). Formally:

y_k=h^k(f^k(x)), f^k(x)=\sum^n_{i=1}g^k(x)_if_i(x)

where g^k(x)=softmax(W_{gk}x). The input of the gate is the input features, and its output is the weights over all experts.

On the one hand, because the gating networks are usually lightweight and the expert networks are shared by all tasks, MMoE has advantages in computation and parameter count over some of the baseline methods discussed in the paper.

On the other hand, compared with using a single gating network shared by all tasks (the One-gate MoE model, OMoE, panel b), MMoE (panel c) uses a separate gating network for each task. The weights output by each task's gating network select among the experts differently, so the gating networks of different tasks can learn different patterns of combining experts. In this way the model captures both the relatedness and the differences between tasks.
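To make the difference from a single gate concrete, here is a small sketch in plain Python. It assumes linear experts and linear gates with hand-picked weights and omits the tower networks h^k; the helper names (`softmax`, `linear`, `mmoe_forward`) are hypothetical and exist only for this illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def mmoe_forward(x, expert_weights, gate_weights_per_task):
    """Return one mixed representation f^k(x) per task.

    The experts are evaluated once and shared; only the gates differ per task.
    """
    expert_outs = [linear(w, x) for w in expert_weights]
    out_dim = len(expert_outs[0])
    mixed = []
    for gate_w in gate_weights_per_task:
        gate = softmax(linear(gate_w, x))  # g^k(x) = softmax(W_gk x)
        mixed.append([sum(g * out[d] for g, out in zip(gate, expert_outs))
                      for d in range(out_dim)])
    return mixed


experts = [
    [[1.0, 0.0], [0.0, 1.0]],  # expert 1: identity
    [[0.0, 1.0], [1.0, 0.0]],  # expert 2: swaps the two coordinates
]
gates = [
    [[5.0, 0.0], [0.0, 0.0]],  # task A favors expert 1 when x[0] > 0
    [[0.0, 0.0], [5.0, 0.0]],  # task B favors expert 2 when x[0] > 0
]
task_a, task_b = mmoe_forward([1.0, 0.0], experts, gates)
```

With the same input and the same experts, the two tasks end up with nearly opposite mixtures. A single shared gate (OMoE) would be forced to give both tasks the same mixture.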

Model training

Trainability concerns whether a model is robust to hyperparameters and initialization. The authors ran experiments on synthetic datasets and observed how different random seeds and initialization methods affect the loss. Two phenomena stand out:

First, the variance of the results of Shared-Bottom models is significantly larger than that of the MoE-based methods, which suggests that the Shared-Bottom model has many local minima.

Second, when task correlation is very high, OMoE and MMoE perform similarly; when task correlation is low, OMoE degrades noticeably relative to MMoE. This shows that the multi-gate structure in MMoE can alleviate the conflicts caused by task differences.

Overall, the paper extends multi-task learning by balancing multiple tasks through a gating mechanism, and it is a useful reference for real business scenarios.

Model summary and application practice

What MoE and MMoE have in common is that they split the bottom fully connected layers of the original hard parameter sharing network into several sub-networks, the experts. This is close to the idea of ensemble learning: at the same scale, a single network cannot effectively learn a representation common to all tasks, but once it is split into several sub-networks, each sub-network can learn some representation that is relevant to and distinctive for a particular task. The gate output (softmax) then weights each expert's output, and the result is fed into each task's own fully connected layers so that each task is learned better.

MoE has only one gate output, whereas MMoE has several. The difference is that MMoE sets up a separate gate for each task. The benefit is that, without adding a large number of new parameters, the model learns task-specific functions that balance the shared representation and so models the relationships between tasks more explicitly.

Applying MMoE to YouTube shows that different experts matter to different degrees in different tasks (inspecting each gate's output reveals which experts are more important for which task). So if you want an expert to be tied more strongly to a particular task, you can add preset task-expert weights to the gate, or customize the softmax function so that experts with larger weights produce larger outputs. The YouTube paper builds on Wide & Deep and combines it with the strengths of MMoE: the wide part introduces a shallow network to mitigate selection bias, which proved to be a very effective solution.

In practice, Zhihu replaced hard parameter sharing with MMoE in 2019 and reported a 100% improvement in user interaction rate, a metric that directly reflects user experience.

Zhihu's later efforts focus on using various strategy optimizations to get the most value out of the model, that is, to further improve user experience. A good multi-task learning method should balance and fuse its objectives in the most reasonable way, so as to maximize the benefit to both users and the platform. This is what Zhihu is working toward: users are grouped, the weight of each objective is adjusted dynamically according to how satisfied users are with different content, and the objectives are fused into a final ranking.

Code implementation

import tensorflow as tf
from deepctr.feature_column import build_input_features, input_from_feature_columns
from deepctr.layers.utils import combined_dnn_input
from deepctr.layers.core import PredictionLayer, DNN
from tensorflow.python.keras.initializers import glorot_normal
from tensorflow.python.keras.layers import Layer


class MMOELayer(Layer):
    """Shared linear experts combined by one softmax gate per task."""

    def __init__(self, num_tasks, num_experts, output_dim, seed=1024, **kwargs):
        self.num_experts = num_experts
        self.num_tasks = num_tasks
        self.output_dim = output_dim
        self.seed = seed
        super(MMOELayer, self).__init__(**kwargs)

    def build(self, input_shape):
        input_dim = int(input_shape[-1])
        # All experts live in a single kernel and are separated again in call().
        self.expert_kernel = self.add_weight(
            name='expert_kernel',
            shape=(input_dim, self.num_experts * self.output_dim),
            dtype=tf.float32,
            initializer=glorot_normal(seed=self.seed))
        # One gate kernel per task; the index keeps the weight names unique.
        self.gate_kernels = []
        for i in range(self.num_tasks):
            self.gate_kernels.append(self.add_weight(
                name='gate_weight_{}'.format(i),
                shape=(input_dim, self.num_experts),
                dtype=tf.float32,
                initializer=glorot_normal(seed=self.seed)))
        super(MMOELayer, self).build(input_shape)

    def call(self, inputs, **kwargs):
        outputs = []
        expert_out = tf.tensordot(inputs, self.expert_kernel, axes=(-1, 0))
        # Shape: (batch, output_dim, num_experts)
        expert_out = tf.reshape(expert_out, [-1, self.output_dim, self.num_experts])
        for i in range(self.num_tasks):
            gate_out = tf.tensordot(inputs, self.gate_kernels[i], axes=(-1, 0))
            gate_out = tf.nn.softmax(gate_out)  # (batch, num_experts)
            # Repeat the gate weights along the output dimension.
            gate_out = tf.tile(tf.expand_dims(gate_out, axis=1), [1, self.output_dim, 1])
            # Weighted sum over the expert axis gives (batch, output_dim).
            output = tf.reduce_sum(tf.multiply(expert_out, gate_out), axis=2)
            outputs.append(output)
        return outputs

    def get_config(self):
        config = {'num_tasks': self.num_tasks,
                  'num_experts': self.num_experts,
                  'output_dim': self.output_dim,
                  'seed': self.seed}
        base_config = super(MMOELayer, self).get_config()
        return dict(list(base_config.items()) + list(config.items()))

    def compute_output_shape(self, input_shape):
        # One (batch, output_dim) shape per task.
        return [(input_shape[0], self.output_dim)] * self.num_tasks


def MMOE(dnn_feature_columns, num_tasks, tasks, num_experts=4, expert_dim=8, dnn_hidden_units=(128, 128),
         l2_reg_embedding=1e-5, l2_reg_dnn=0, task_dnn_units=None, seed=1024, dnn_dropout=0, dnn_activation='relu'):
    """Instantiates the Multi-gate Mixture-of-Experts architecture.

    :param dnn_feature_columns: An iterable containing all the features used by deep part of the model.
    :param num_tasks: integer, number of tasks, equal to number of outputs, must be greater than 1.
    :param tasks: list of str, indicating the loss of each tasks, ``"binary"`` for binary logloss, ``"regression"`` for regression loss. e.g. ['binary', 'regression']
    :param num_experts: integer, number of experts.
    :param expert_dim: integer, the hidden units of each expert.
    :param dnn_hidden_units: list,list of positive integer or empty list, the layer number and units in each layer of shared-bottom DNN
    :param l2_reg_embedding: float. L2 regularizer strength applied to embedding vector
    :param l2_reg_dnn: float. L2 regularizer strength applied to DNN
    :param task_dnn_units: list,list of positive integer or empty list, the layer number and units in each layer of task-specific DNN
    :param seed: integer ,to use as random seed.
    :param dnn_dropout: float in [0,1), the probability we will drop out a given DNN coordinate.
    :param dnn_activation: Activation function to use in DNN
    :return: a Keras model instance
    """
    if num_tasks <= 1:
        raise ValueError("num_tasks must be greater than 1")
    if len(tasks) != num_tasks:
        raise ValueError("num_tasks must be equal to the length of tasks")
    for task in tasks:
        if task not in ['binary', 'regression']:
            raise ValueError("task must be binary or regression, {} is illegal".format(task))

    features = build_input_features(dnn_feature_columns)
    inputs_list = list(features.values())

    sparse_embedding_list, dense_value_list = input_from_feature_columns(features, dnn_feature_columns,
                                                                         l2_reg_embedding, seed)
    dnn_input = combined_dnn_input(sparse_embedding_list, dense_value_list)

    # Shared-bottom DNN that feeds the MMoE layer.
    dnn_out = DNN(dnn_hidden_units, dnn_activation, l2_reg_dnn, dnn_dropout,
                  False, seed=seed)(dnn_input)
    mmoe_outs = MMOELayer(num_tasks, num_experts, expert_dim)(dnn_out)

    # Optional task-specific towers on top of each gated mixture.
    if task_dnn_units is not None:
        mmoe_outs = [DNN(task_dnn_units, dnn_activation, l2_reg_dnn, dnn_dropout, False, seed=seed)(mmoe_out)
                     for mmoe_out in mmoe_outs]

    task_outputs = []
    for mmoe_out, task in zip(mmoe_outs, tasks):
        logit = tf.keras.layers.Dense(
            1, use_bias=False, activation=None)(mmoe_out)
        output = PredictionLayer(task)(logit)
        task_outputs.append(output)

    model = tf.keras.models.Model(inputs=inputs_list,
                                  outputs=task_outputs)
    return model

SNR

The classic Shared-Bottom network structure has an obvious problem: when the jointly trained tasks are only weakly related, the performance of each task is seriously damaged. Compared with training an independent model for each objective, the Shared-Bottom structure introduces bias in the shared bottom network.

The MMoE model described above also has a limitation: it can only combine the shared expert sub-networks in a limited way. Building on the MMoE structure, the SNR model therefore enables more flexible sharing of network parameters. Like MMoE, SNR modularizes the shared bottom network into sub-networks. The difference is that SNR uses coding variables to control the connections between sub-networks, and it designs two types of connection: SNR-Trans and SNR-Aver.
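The two connection types can be illustrated with a small sketch. It rests on assumptions not stated in this article: that SNR-Trans multiplies each lower sub-network output u_i by a learned matrix W_i before gating it with a coding variable z_i, and that SNR-Aver gates u_i directly (an identity transform). The function names and the fixed binary values of z are hypothetical; in a trained model the coding variables would be learned.

```python
def matvec(matrix, vec):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def snr_trans(lower_outputs, z_row, transforms):
    """Input of one upper sub-network: sum_i z_i * (W_i u_i)."""
    total = [0.0] * len(transforms[0])
    for u, z, w in zip(lower_outputs, z_row, transforms):
        projected = matvec(w, u)
        total = [t + z * p for t, p in zip(total, projected)]
    return total


def snr_aver(lower_outputs, z_row):
    """Input of one upper sub-network: sum_i z_i * u_i (no transform)."""
    total = [0.0] * len(lower_outputs[0])
    for u, z in zip(lower_outputs, z_row):
        total = [t + z * v for t, v in zip(total, u)]
    return total


u = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # outputs of three lower sub-networks
z = [1, 0, 1]                             # coding variables: the middle link is cut
double = [[2.0, 0.0], [0.0, 2.0]]         # hypothetical W_i that doubles its input

trans_in = snr_trans(u, z, [double, double, double])
aver_in = snr_aver(u, z)
```

Setting a coding variable to 0 removes a connection entirely, which is how this kind of routing can express sharing patterns that a softmax gate over a fixed set of experts cannot.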

The experimental results are as follows :

ESMM Model

ESMM background

One of the most representative approaches to modeling sequential dependencies between tasks is the Entire Space Multi-Task Model (ESMM), proposed by Alimama in 2018.

Based on the idea of multi-task learning, it effectively addresses two key problems in CVR prediction: data sparsity and sample selection bias. CVR estimation differs from the CTR task in two ways:

(1) Sample Selection Bias: a conversion can only happen after a click. A traditional CVR model is usually trained on click data, with clicked-but-not-converted samples as negatives and clicked-and-converted samples as positives. When the trained model is actually used, however, it makes estimates over the entire sample space rather than only over clicked samples.

(2) Data Sparsity: the clicked samples used as CVR training data are far fewer than the exposure samples used to train CTR estimation.

Some strategies can alleviate these two problems, but none of them solves either problem fundamentally.

In recommender systems, there is usually a sequential dependency between different tasks. Multi-objective estimation in e-commerce typically covers click-through rate and conversion rate, and a purchase can only happen after a click. This sequential dependency can be exploited to address the sample selection bias (SSB) and data sparsity (DS) problems in task estimation.

The first problem arises because the conversion rate model is trained on click and conversion data, but what users see right after logging in is not a product detail page; it is the home page or a list page. The conversion rate model therefore has to make estimates at the point of product exposure, so the training scenario and the prediction scenario (the full sample space) are inconsistent. Estimates made across inconsistent scenarios are biased, which hurts the result in production.

The second problem is that the training set of the conversion rate model is usually much smaller than that of the click-through rate model, as shown in the figure below.

Brief introduction to the ESMM model

The structure of the ESMM model is as follows:

In terms of structure, the bottom embedding layer is shared by the conversion rate part and the click-through rate part. Sharing addresses the sparsity of positive samples in the conversion rate task by using click-through data to learn more accurate representations of users and items.

In the middle layers, the conversion rate and the click-through rate each use a completely separate neural network to fit their own objective. Finally, the two outputs are multiplied to obtain pCTCVR:

pCTCVR = pCTR \times pCVR

The formula above can be rewritten as:

pCVR = pCTCVR / pCTR

So pCTCVR and pCTR could be estimated separately and pCVR obtained by dividing the two, and both can be trained and predicted over the full sample space. In practice, however, the estimated pCTCVR may come out larger than the estimated pCTR, which makes the resulting pCVR greater than 1. To avoid this, ESMM introduces pCTCVR and pCTR as two auxiliary tasks and replaces the division with a multiplication. During training, the loss is the sum of the losses of these two tasks.

Both losses use cross-entropy. In the CTR task, exposure events with a click are labeled as positive samples and exposure events without a click as negative samples. In the CTCVR task, exposure events with both a click and a purchase are labeled as positive samples and all others as negative samples.
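The labeling rules and the multiplication trick can be written down as a short sketch. It computes the loss for a single exposure given the two tower outputs; the numbers are made-up tower outputs, and `binary_cross_entropy` and `esmm_loss` are hypothetical names used only for this illustration.

```python
import math


def binary_cross_entropy(label, prob, eps=1e-7):
    """Cross-entropy for one sample, with clipping to avoid log(0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def esmm_loss(p_ctr, p_cvr, clicked, converted):
    """Loss for one exposure: CTR loss plus CTCVR loss.

    p_cvr is never supervised directly; it is only trained through
    the product p_ctcvr = p_ctr * p_cvr.
    """
    p_ctcvr = p_ctr * p_cvr
    ctr_label = clicked
    ctcvr_label = clicked * converted  # positive only if clicked AND purchased
    return (binary_cross_entropy(ctr_label, p_ctr)
            + binary_cross_entropy(ctcvr_label, p_ctcvr))


# Made-up samples: (p_ctr, p_cvr, clicked, converted)
samples = [
    (0.8, 0.5, 1, 1),  # clicked and purchased
    (0.6, 0.2, 1, 0),  # clicked, not purchased
    (0.1, 0.3, 0, 0),  # not clicked
]
losses = [esmm_loss(*s) for s in samples]
```

Because pCTCVR is a product of two probabilities, it can never exceed pCTR, so the implied pCVR always stays within [0, 1]. Every exposure contributes to both loss terms, including the unclicked ones.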

In this way, the three objectives are integrated into one unified model, and the values of all three can be obtained in a single pass. This solves two key problems: the inconsistency between the training space and the prediction space, and the need to use click data and conversion data together for global optimization. The idea behind ESMM is therefore a fairly general way of modeling sequential dependencies.

ESMM summary

In summary, ESMM is a novel CVR estimation method. It is the first to exploit the sequential nature of user behavior to model CVR over the complete sample space, which avoids the sample selection bias and data sparsity problems that traditional CVR models often run into, and it achieves notable results. The sub-networks in ESMM can be replaced by any learning model, so the ESMM framework integrates easily with other models and can absorb their strengths to improve results further. The modeling idea also generalizes easily to full-funnel prediction of multi-stage behavior in e-commerce, such as the ranking → display → click → conversion chain, which leaves a lot of room for extension.

ESM2

ESM2 is a more recent sequential-dependency modeling framework proposed by Alibaba and an improved version of ESMM. ESMM still faces some sample sparsity in CVR estimation, because clicked-and-purchased samples are very few compared with clicked samples. Fortunately, a user generates other behavioral signals before buying a product, such as adding the item to the shopping cart or wish list, as shown in the figure.

Adding an item to the shopping cart or wish list can be defined as a Deterministic Action (DAction), a behavior with clear purchase intent. Other behaviors that are only weakly related to purchase are called Other Action (OAction). The original Impression→Click→Buy process becomes Impression→Click→DAction/OAction→Buy.

The structure of the ESM2 model is as follows:

Introducing these intermediate behaviors splits the two tasks into more subtasks: the click-through rate, the probability of going from click to DAction, the probability of going from DAction to purchase, and the probability of going from OAction to purchase. The benefit is that the sample sparsity problem is alleviated further.

The model structure shows four subtasks in total but three loss functions, defined on Impression→Click, Impression→Click→DAction, and Impression→Click→DAction/OAction→Buy.

Each of the three loss functions takes the binary cross-entropy form L=-\sum_i[t_i\log p_i+(1-t_i)\log(1-p_i)], where p_i is the predicted probability of the corresponding path for sample i and t_i is its label. The first loss uses the Impression→Click path, the second the Impression→Click→DAction path, and the third the Impression→Click→DAction/OAction→Buy path.

In the paper, the total loss is the weighted sum of these three loss functions, with all weights set to 1. In a real business scenario the weights can also be adjusted based on experience.
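A small sketch can show how four subtask outputs can feed three supervised losses. The composition used here is an assumption based on the path structure described above: the purchase probability sums the route through DAction and the route through OAction, with OAction taken to be the complement of DAction after a click. The names `esm2_probabilities` and `esm2_loss` and all numbers are hypothetical.

```python
import math


def bce(label, prob, eps=1e-7):
    """Binary cross-entropy for one sample, with clipping to avoid log(0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def esm2_probabilities(y1, y2, y3, y4):
    """Compose path probabilities from the four subtask outputs.

    y1: impression -> click    y2: click -> DAction
    y3: DAction -> buy         y4: OAction -> buy
    """
    p_click = y1
    p_daction = y1 * y2                        # impression -> click -> DAction
    p_buy = y1 * (y2 * y3 + (1.0 - y2) * y4)   # buy via DAction or via OAction
    return p_click, p_daction, p_buy


def esm2_loss(outputs, labels, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three cross-entropy losses (all weights 1 by default)."""
    probs = esm2_probabilities(*outputs)
    return sum(w * bce(t, p) for w, t, p in zip(weights, labels, probs))


p_click, p_daction, p_buy = esm2_probabilities(0.5, 0.4, 0.5, 0.1)
# Labels: clicked, added to cart, did not buy.
loss = esm2_loss((0.5, 0.4, 0.5, 0.1), labels=(1, 1, 0))
```

As in ESMM, every supervised probability is defined over all impressions, so each exposure contributes to all three losses while the sparse purchase signal is supported by the denser click and DAction signals.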

In tests on the CVR and CTCVR datasets, the model outperforms the then state-of-the-art models on every metric and addresses the SSB and DS problems more effectively.

PLE Progressive Layered Extraction

Tencent PCG published PLE (Progressive Layered Extraction) at RecSys 2020, where it won the best long paper award. It is a multi-task model for video recommendation. Compared with the MMoE, SNR, and ESMM models above, PLE mainly addresses two problems:

(1) In MMoE all experts are shared by all tasks, which may fail to capture more complex relationships between tasks and therefore introduces noise for some tasks;

(2) Different experts do not interact with each other, which weakens the effect of joint optimization.

As the network structure in the figure shows, the bottom of the CGC (Customized Gate Control) network consists of shared experts and task-specific experts. Each expert module is made up of multiple sub-networks; the number of sub-networks and their structure are hyperparameters. The upper part consists of the task towers (tower A and tower B). The input of each tower is weighted by a gating network, and the gating network of each subtask works on two groups of experts: the task-specific experts of that task and the shared experts.
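The gating rule described above can be sketched in plain Python. This is an illustration under simplifying assumptions: every expert is a single linear map, each task owns one expert, and the gate weights are fixed at zero so that the gate is uniform. The helper names (`softmax`, `linear`, `cgc_task_output`) are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def cgc_task_output(x, own_experts, shared_experts, gate_weights):
    """Mix one task's own experts with the shared experts.

    Experts owned by other tasks are never visible to this gate.
    """
    candidates = own_experts + shared_experts
    outs = [linear(w, x) for w in candidates]
    gate = softmax(linear(gate_weights, x))  # one weight per candidate expert
    out_dim = len(outs[0])
    mixed = [sum(g * o[d] for g, o in zip(gate, outs)) for d in range(out_dim)]
    return mixed, gate


x = [2.0, 4.0]
experts_a = [[[1.0, 0.0]]]   # task A's expert reads the first feature
experts_b = [[[0.0, 1.0]]]   # task B's expert reads the second feature
shared = [[[1.0, 1.0]]]      # the shared expert sums both features
uniform_gate = [[0.0, 0.0], [0.0, 0.0]]  # zero scores give equal weights

tower_a_in, gate_a = cgc_task_output(x, experts_a, shared, uniform_gate)
tower_b_in, gate_b = cgc_task_output(x, experts_b, shared, uniform_gate)
```

Task A's tower input never depends on task B's expert, which is the separation that MMoE lacks: in MMoE every gate sees every expert.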

CGC is a single-level network structure. A natural next step is to stack multiple CGC layers to obtain richer representation ability, and the PLE network structure does exactly that: it extends CGC to multiple levels.

The results show that in MMoE the weights of the different experts are basically the same, whereas in PLE the weights of the shared experts and the task-specific experts differ more. This indicates that PLE makes effective use of both shared and task-specific expert information for different tasks, which also explains why it trains to better results than MMoE.

Summary

Many real-world problems cannot be decomposed into independent subproblems, and even when they can, the subproblems are related to one another through shared factors or shared representations. Treating a real problem as a set of independent single tasks ignores the rich correlation information between them. Multi-task learning was created to address this.

In essence, multi-task learning is an inductive transfer mechanism: it uses additional sources of information to improve learning on the current task, including generalization accuracy, learning speed, and the interpretability of the learned model. Different tasks have local minima at different positions in the shared layers, and the interaction of the unrelated parts of the tasks helps escape local minima, while the related parts of the tasks help the bottom shared layers learn common feature representations. This is why a multi-task model can achieve better results than a single-task model. Future development of multi-task learning may bring new ideas or new combinations of existing ideas. The latter is already being studied in industry, for example M2GRL, published by Alibaba at KDD 2020, which introduces multi-view GRL together with an MTL framework for better recommendation. The design of loss functions in multi-task learning is another important research direction, which will be discussed in more detail later.

The possible reasons why multi-task learning improves generalization are therefore:

First, the contribution of an unrelated task to the aggregated gradient acts as noise for the other tasks, and unrelated tasks can serve as a noise source that improves generalization.

Second, adding tasks affects how the network parameters are updated, for example by increasing the effective learning rate of the hidden layers.

Third, a multi-task network shares the bottom hidden layers among all tasks, so it may reach the same or better generalization with a smaller capacity.

Ref

- paper https://www.kdd.org/kdd2018/accepted-papers/view/modeling-task-relationships-in-multi-task-learning-with-multi-gate-mixture-

- https://blog.csdn.net/ty44111144ty/article/details/99068255

- https://zhuanlan.zhihu.com/p/55752344

- ESMM Model https://blog.csdn.net/sinat_15443203/article/details/83713802

- Multi task learning summary https://blog.csdn.net/m0_52122378/article/details/113593246

- Multitasking learning applications https://cloud.tencent.com/developer/article/1652175

- Zhe Zhao, Lichan Hong, et al. Recommending What Video to Watch: A Multi-task Ranking System.

- The advancing recommendation system: how multi-objective learning improved the user interaction rate by 100%

边栏推荐

- Five methods of JS array summation

- Install wpr Exe command

- 数组怎么转对象,对象怎么转数组

- Introduction to the use of splice() method

- Thread operation principle

- Reliable remote code execution (1)

- 喜歡就去行動

- Tencent's open source project "Yinglong" has become a top-level project of Apache: the former long-term service wechat payment can hold a million billion level of data stream processing

- Differences among cookies, session, localstorage and sessionstorage

- Can text pictures be converted to word? How to extract text from pictures

猜你喜欢

![[JS reverse sharing] community information of a website](/img/71/8b77c6d229b1a8301a55dada08b74f.png)

[JS reverse sharing] community information of a website

齐次坐标的理解

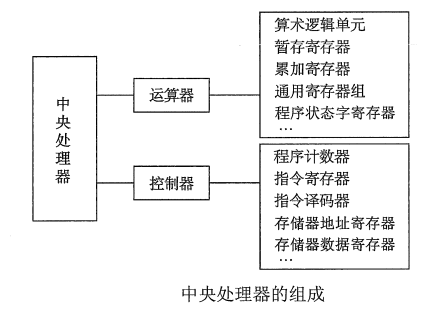

计组_cpu的结构和工作流程

Moving Tencent to the cloud cured their technical anxiety

Hbuilder makes hero skin lottery games

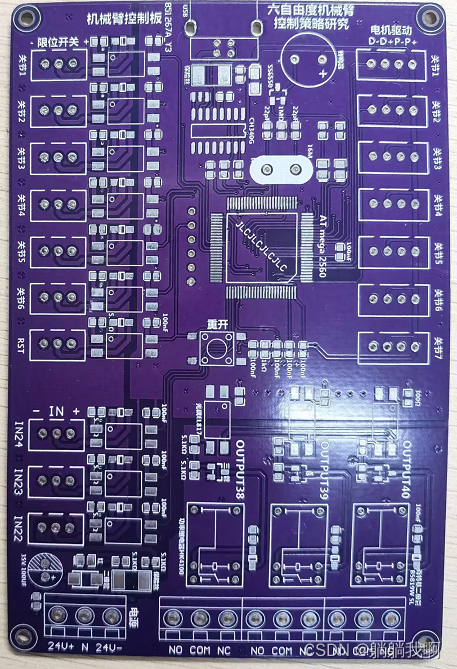

Quick completion guide for mechanical arm (zero): main contents and analysis methods of the guide

Rising bubble canvas breaking animation JS special effect

今日睡眠质量记录76分

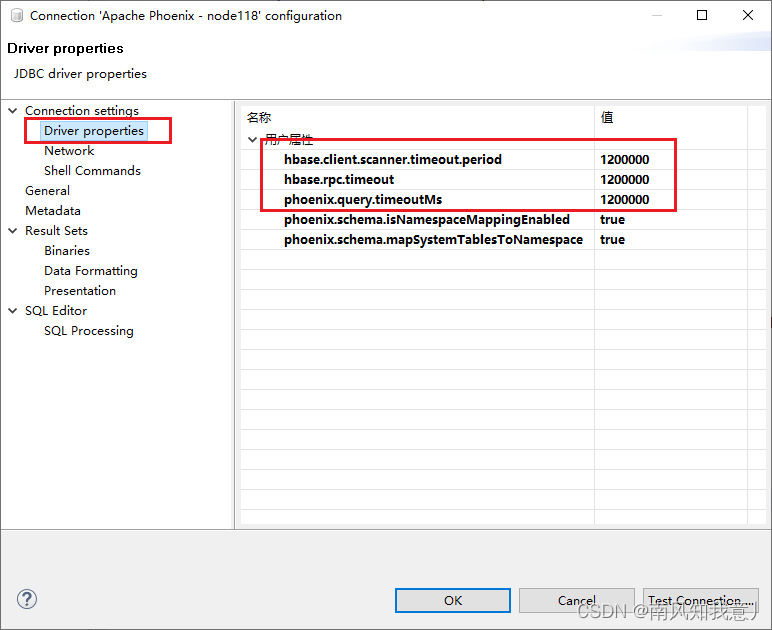

Solve the timeout of Phoenix query of dbeaver SQL client connection

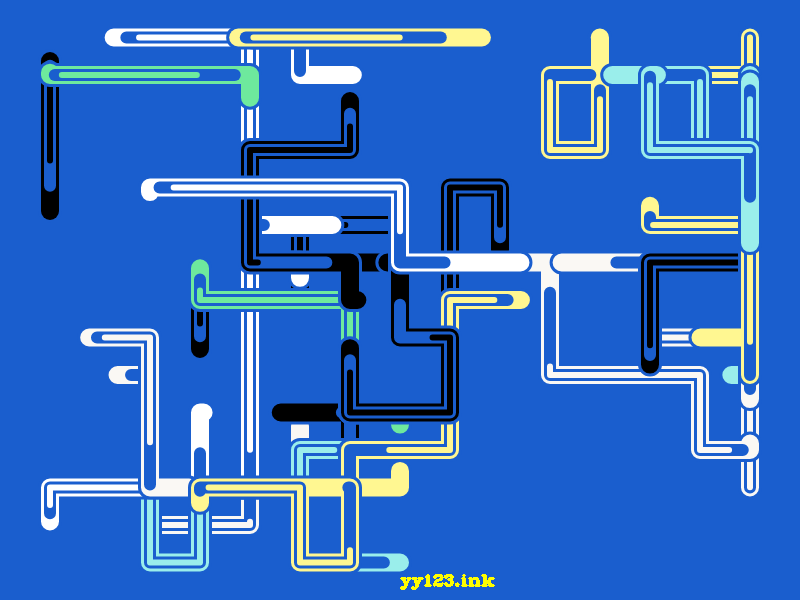

Canvas pipe animation JS special effect

随机推荐

服乔布斯不服库克,苹果传奇设计团队解散内幕曝光

A group of skeletons flying canvas animation JS special effect

[activities this Saturday] NET Day in China

【IEEE出版】2022年自然语言处理与信息检索国际会议(ECNLPIR 2022)

Fashionable pop-up mode login registration window

程序员在技术之外,还要掌握一个技能——自我营销能力

js数组求和的5种方法

Extremenet: target detection through poles, more detailed target area | CVPR 2019

Which map navigation is easy to use and accurate?

RPM installation percona5.7.34

≥ 2012r2 configure IIS FTP

Centripetalnet: more reasonable corner matching, improved cornernet | CVPR 2020 in many aspects

什么是递归?

Group policy export import

Déplacer Tencent sur le cloud a guéri leur anxiété technologique

How to use arbitrarygen code generator what are the characteristics of this generator

Moving Tencent to the cloud cured their technical anxiety

"Adobe international certification" Adobe Photoshop adjusts cropping, rotation and canvas size

Internship experience sharing in ByteDance 𞓜 ten thousand word job guide

Detailed explanation of SQL Sever basic data types