How to design a flash sale (seckill) system?

2022-07-28 13:11:00 【Dig your server cable】

Two core problems:

Concurrent reads

Concurrent writes

Overall flash sale architecture: stable, accurate, and fast

Stable: the system architecture is highly available, so the event completes smoothly

Accurate: no overselling

Fast: low RT (response time), with the whole call chain optimized together

Going deeper:

High performance:

A flash sale involves a large number of concurrent reads and writes. How do we support that level of concurrency?

1. Separate dynamic data from static data

2. Discover and isolate hot spots

3. Shave request peaks and filter requests layer by layer

4. Optimize the server side as far as possible

Consistency:

The core question: how to design the flash sale's inventory deduction scheme

High availability:

A Plan B as the safety net

Five architectural principles for designing a flash sale system: four dos and one don't

1. Keep data as small as possible

1.1 Users should request as little data as possible. Requested data includes both the data uploaded to the system and the data the system returns to the user (usually web pages). Transferring data over the network takes time, and both requests and responses have to be processed by the server: serialization, compression, character encoding, and so on all consume a lot of CPU. Reducing the amount of data transferred therefore noticeably reduces CPU usage. For example, slim down the flash sale page and remove unnecessary decoration.

1.2 The system should depend on as little data as possible. Many client and front-end developers want to call as few interfaces as possible while getting as much information as possible, instead of requesting on demand. For example, a page shows only song information, but the interface also returns tags, genres, and other unneeded data. Calling other services involves serialization and deserialization, which is another CPU killer. The RT of the whole request goes up as well; as RT slows down, machine load rises, causing a chain reaction.

2. Keep the number of requests as small as possible

After the page requested by the user returns, browser rendering depends on multiple CSS, JavaScript, image, and other files. Compared with the core data the page needs, these are "additional requests" (they need not be re-downloaded every time if a cache lifetime is set). Their number can be reduced by merging CSS and JavaScript files: several JavaScript files are requested as one, separated by commas in the URL (https://g.xxx.com/tm/xx-b/4.0.94/mods/??module-preview/index.xtpl.js,module-jhs/index.xtpl.js,module-focus/index.xtpl.js). The individual files are still stored separately on the server; a server-side component parses this URL, merges the files dynamically, and returns the result.
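The merge step can be sketched as follows. This is a minimal illustration, not the actual server component: the function names `split_combo` and `serve_combo` and the in-memory `files` mapping are assumptions made for the example, and only the `??` plus comma convention comes from the URL above.

```python
def split_combo(path: str) -> list[str]:
    """Expand 'base/??f1,f2' into the full paths of the individual files."""
    base, marker, names = path.partition("??")
    if not marker:
        # Not a combo URL: treat it as a single ordinary file request.
        return [path]
    return [base + name for name in names.split(",") if name]


def serve_combo(path: str, files: dict[str, str]) -> str:
    """Concatenate the requested files; `files` stands in for disk or a cache."""
    return "\n".join(files[p] for p in split_combo(path))


# Hypothetical file store used only for this example.
files = {
    "/mods/module-a/index.js": "var a = 1;",
    "/mods/module-b/index.js": "var b = 2;",
}
merged = serve_combo("/mods/??module-a/index.js,module-b/index.js", files)
```

One request now returns both scripts, while each file can still be stored and versioned separately.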

3. Keep the path as short as possible

Deploy strongly interdependent applications together, so that remote RPC calls become method calls inside one JVM. Suppose each node is 99.9% available and a request passes through five nodes. The availability of the whole request is then 99.9% to the fifth power, about 99.5%.
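The arithmetic behind that figure is easy to check. The sketch below assumes node failures are independent, which is the assumption implicit in multiplying the availabilities; the function name is chosen for the example.

```python
def chain_availability(node_availability: float, hops: int) -> float:
    """Availability of a request that must succeed on every node in the chain."""
    return node_availability ** hops


# Five nodes at 99.9% each give roughly 99.5% for the whole request.
five_hops = chain_availability(0.999, 5)
# Collapsing the chain to two nodes raises it to roughly 99.8%.
two_hops = chain_availability(0.999, 2)
```

Every node removed from the path buys back a little availability, which is why merged deployment helps.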

4. Keep dependencies as few as possible

Simply put, rank the services the system depends on by importance. Take getSongs, the interface that returns song information: the song service is level 0, artist and album data are level 1, and tag and genre services are level 2. During a big promotion, non-level-0 services can be degraded to keep the core service stable.

5. Be distributed; don't have single points

A single point means no backup and uncontrolled risk. Every service should be distributed, and a service's state should not be bound to a particular machine.

How a flash sale system evolves

10,000 QPS

Product purchase page -> server -> database CRUD

100,000 QPS

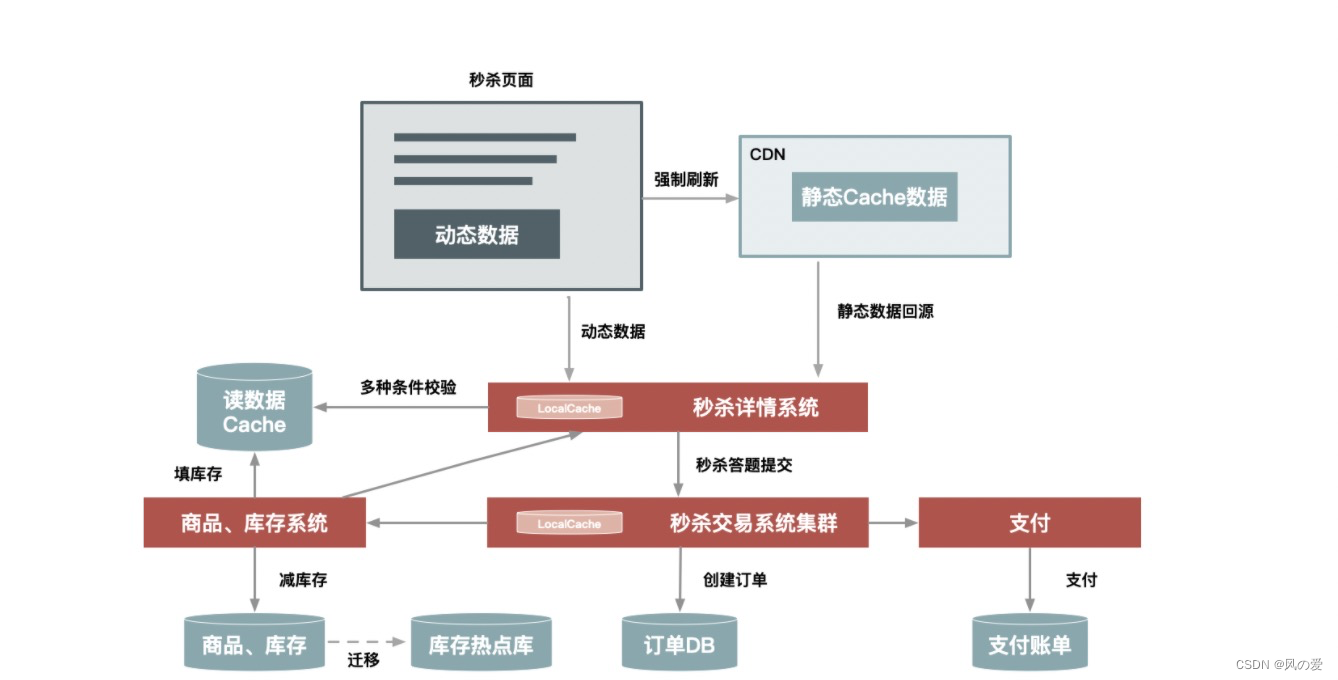

The core: upgrade the flash sale system

Deploy the flash sale as an independent cluster to absorb the traffic peak

Put inventory data into a cache to improve read performance

Add a challenge question before purchase to stop bots from grabbing orders

1,000,000 QPS

Separate dynamic and static page content, and minimize page refreshes and dynamic resource requests.

Cache flash sale products locally on the server, so there is no need to call the back-end services the system depends on, or even to query the shared cache cluster. This further reduces pressure on the servers.

Add rate-limiting protection for the system

Reduce system pressure through policies

How to separate dynamic and static content well, and what the options are

How do we tell dynamic data from static data?

The main difference is whether the data output on the page depends on the URL, browser, time, or region, and whether it contains private data such as cookies; in other words, whether the page shows personalized data. Two examples:

1. News websites: every user sees the same page. Changes in user, time, region, and so on do not affect what is displayed, so such pages are static.

2. The Mobile Taobao home page: every user sees a different page. Almost all of it is personalized data, so it is a dynamic page.

How do we cache static data?

1. Make the data static; in short, cache the HTTP response directly. For example, store static data on a CDN so the content sits on the server closest to the user, which cuts the network latency between the backbone network and the metropolitan area network.

2. Choose an appropriate place to cache static data. Caches written in different languages differ in efficiency. A Java system is not well suited to handling a large number of connections: each connection consumes a fair amount of memory, and the servlet container parses HTTP slowly. So the cache can live on the web server rather than in the Java layer, which hides some weaknesses of the Java language. Global control can be applied at the nginx or Apache layer, and web servers are also better at serving static files under high concurrency.

Architectural schemes for dynamic and static separation

Once static and dynamic data have been separated, how do we recombine them in the system architecture and output a complete page to the user?

Three options:

1. Single-machine deployment on physical machines

2. A unified cache layer

3. Put it on the CDN

In addition, you can:

1. Cache the entire page in the user's browser

2. Reduce the actual valid requests to just the user's clicks on the "refresh / grab" button

Cache static resources on the CDN

Cache dynamic content on the server

Chapter 3  Handle the system's hot data in a targeted way

What is a hot spot?

Hot spots are divided into hot operations and hot data.

Hot operations:

For example adding to the shopping cart, viewing product details, placing orders, and so on

Hot data:

For example popular products

How do we handle hot data?

There are usually three approaches: optimize, restrict, and isolate.

Optimize:

Cache the hot data, with LRU eviction.

Restrict:

For example, apply consistent hashing to the ID of the product being accessed and split requests into buckets by hash, with one processing queue per bucket. This confines a hot product to a single request queue and keeps a few hot products from taking so many server resources that other requests never get processed. (Q: how exactly is the bucketing done?)

Isolate:

Business isolation. Run the flash sale as a marketing campaign that sellers must sign up for separately. Technically, once sellers have signed up the hot spots are known, so they can be warmed up in advance.

System isolation. This is mostly runtime isolation: the flash sale can be deployed as a separate group, apart from the other 99% of traffic. It can also get its own domain name, so that its requests land on a different cluster.

Data isolation. Most data the flash sale touches is hot data, so a separate cache cluster or MySQL database can hold it; we do not want 0.01% of the data to be able to affect the other 99.99%.
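As one possible answer to the bucketing question raised above, here is a minimal sketch of hashing product IDs into buckets with one bounded queue per bucket. It uses a stable hash followed by a plain modulo rather than a full consistent-hash ring, and the class name, the queue capacity, and the choice of MD5 are all assumptions made for illustration.

```python
import hashlib
from collections import deque


class BucketedQueues:
    """Route each product ID to one bounded queue, so a hot product
    can fill only its own bucket and not starve the others."""

    def __init__(self, bucket_count: int, capacity_per_bucket: int):
        self.queues = [deque() for _ in range(bucket_count)]
        self.capacity = capacity_per_bucket

    def bucket_of(self, product_id: str) -> int:
        # A stable hash: the same ID maps to the same bucket on every machine.
        digest = hashlib.md5(product_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.queues)

    def submit(self, product_id: str, request) -> bool:
        queue = self.queues[self.bucket_of(product_id)]
        if len(queue) >= self.capacity:
            return False  # this bucket is full; other buckets are unaffected
        queue.append(request)
        return True
```

With modulo hashing, changing the bucket count remaps most IDs; a consistent-hash ring would limit that remapping at the cost of more code.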

Chapter 4  How to shave the traffic peak

Approaches:

Lossless: queuing, challenge questions, layered filtering

Lossy: rate limiting and forced measures such as machine load protection

Queuing (simply put, turning one step into several):

Buffer instantaneous traffic in a message queue

Wait on a lock in a thread pool

Use in-memory queuing algorithms such as FIFO (first in, first out)

Serialize requests to a file, then read the file sequentially, similar to the MySQL binlog
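The in-memory FIFO variant of queuing can be sketched as below. The class name and the capacity limit are assumptions: the bound is added here so the buffer itself cannot exhaust memory, which means requests beyond the bound are rejected rather than queued.

```python
import queue


class RequestBuffer:
    """FIFO buffer between the traffic spike and the slower back end."""

    def __init__(self, capacity: int):
        self._q = queue.Queue(maxsize=capacity)

    def offer(self, request) -> bool:
        """Accept the request if there is room, without blocking the caller."""
        try:
            self._q.put_nowait(request)
            return True
        except queue.Full:
            return False

    def drain(self, max_items: int) -> list:
        """Take up to max_items in arrival order; call this at the pace
        the downstream service can actually sustain."""
        batch = []
        while len(batch) < max_items:
            try:
                batch.append(self._q.get_nowait())
            except queue.Empty:
                break
        return batch
```

A worker that calls `drain` on a fixed schedule turns a burst of arrivals into a steady stream for the downstream service.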

Challenge questions:

Purpose: prevent cheating by bots

Spread requests out over time

Layered filtering: filter out useless requests as early as possible, for example by reducing unnecessary consistency checks

Chapter 5  What affects performance, and how to improve system performance

There are usually two factors: the server-side response time of a request and the number of threads.

Response time generally consists of CPU execution time and thread waiting time. Tests show that reducing thread waiting time helps little, because waiting can be compensated for by adding threads. What really affects performance is CPU execution time, because CPU execution actually consumes server resources. If you halve the CPU execution time, you can roughly double QPS.
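The relationship between the two factors can be written as a rule of thumb. The formula below is a simplification, assuming every thread is kept busy and each request takes the same time; it is an upper-bound estimate, not a measured result.

```python
def estimated_qps(threads: int, response_time_ms: float) -> float:
    """Rule of thumb: each thread completes 1000 / response_time_ms
    requests per second, and threads work in parallel."""
    return threads * 1000.0 / response_time_ms


baseline = estimated_qps(threads=20, response_time_ms=100)  # 200.0
halved_rt = estimated_qps(threads=20, response_time_ms=50)  # 400.0
```

Halving the response time doubles the estimate, which matches the claim above as long as the time saved is CPU time rather than waiting time.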

Note that different systems have different bottlenecks. A caching system is constrained by memory, while a storage system is more likely to be bottlenecked on I/O.

For a flash sale system, the bottleneck is more often the CPU.

How to optimize the system

1. Reduce encoding

Encoding is slow in Java; this is determined by the language itself. In many scenarios, anything involving string operations (such as input and output, i.e. I/O) consumes considerable CPU, whether it is disk I/O or network I/O, because characters must be converted to bytes and that conversion requires encoding.

In addition, encoding each character requires a table lookup, which is resource intensive, so reducing conversions between characters and bytes is effective.

How do we reduce encoding? Convert some data to bytes in advance, so that static data is not re-encoded on every request.
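The idea of converting static data to bytes ahead of time can be shown in a few lines. The sketch is in Python rather than Java for brevity, and the page fragments and the function name are made up for the example; the point is only that the static parts are encoded once, at start-up, instead of on every request.

```python
# Encoded once when the module is loaded, then reused for every response.
STATIC_HEADER = "<html><body><h1>Flash sale</h1>".encode("utf-8")
STATIC_FOOTER = "</body></html>".encode("utf-8")


def render_page(stock: int) -> bytes:
    """Only the small dynamic fragment is encoded per request."""
    dynamic = f"<p>Stock left: {stock}</p>".encode("utf-8")
    return STATIC_HEADER + dynamic + STATIC_FOOTER
```

The response is assembled from byte strings, so the large static portion never passes through the character encoder again.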

2. Reduce serialization

Serialization is another natural enemy of Java performance. Serialization and encoding usually happen together, so reducing serialization also reduces encoding.

Serialization mostly happens during RPC, so the number of RPC calls should be reduced. A common solution is merged deployment: applications that are strongly related are deployed together. For example, two applications that used to run on different machines are deployed on one machine, in the same Tomcat, and calls between them must not go through the local socket; only then is serialization avoided.

3. Ultimate Java optimization

Most requests are answered directly by the Nginx server or a web proxy server, which avoids serializing and deserializing data; the Java layer handles only dynamic requests with small amounts of data.

Reduce data. Two things hurt performance in particular: the unavoidable character-to-byte conversion when the server processes data, and Gzip compression of HTTP responses together with network transfer time. Both are closely tied to data size.

Data tiering. Ensure the first screen comes first and important information comes first, and load secondary information asynchronously. This improves the user's experience of getting data.

4. Concurrent read optimization

The locking scheme currently used here is based on tair. Are there other options?

An application-layer LocalCache: cache product data on each machine of the flash sale system. Dynamic and static data are generally handled separately.

Static data: data that does not change by itself, such as product title and description. Push it in full to the flash sale machines before the sale starts, and keep it cached until the sale ends.

Dynamic data: for example inventory. It is cached with "passive expiry" for a short period (usually a few seconds); after it expires, the latest value is pulled from the cache cluster.

You may wonder: for data that is updated as frequently as inventory, won't inconsistency lead to overselling?

This relies on the layered validation principle introduced earlier. The read path tolerates some dirty data, because a misjudgment there only lets through a few orders for items that are actually out of stock. Consistency is then enforced when the data is actually written. Balancing availability against consistency in this way solves the high-concurrency read problem.
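A local cache with passive expiry can be sketched as follows. The class name, the `loader` callback standing in for the shared cache cluster, and the injectable `clock` are assumptions made so the example is self-contained and testable; the sketch is not thread-safe.

```python
import time


class ExpiringLocalCache:
    """Per-machine cache: serve a possibly stale value until it expires,
    then reload it from the slower shared source."""

    def __init__(self, loader, ttl_seconds: float, clock=time.monotonic):
        self._loader = loader        # e.g. a read from the shared cache cluster
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}           # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]          # still fresh enough for the read path
        value = self._loader(key)    # expired or missing: pull the latest value
        self._entries[key] = (value, now + self._ttl)
        return value
```

Reads within the TTL never leave the machine. The stale window is acceptable only because the write path performs the authoritative inventory check.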

Beyond these optimizations, you also need application baselines: a performance baseline (when did performance suddenly drop), a cost baseline (how many machines were used for last year's Double 11), and a call-chain baseline (what changed in our system). With these baselines you can keep tracking system performance, improve code quality, remove unreasonable calls at the business level, and keep improving the architecture and the call chain.

The core of performance optimization is one word: reduce.

Ways to keep reducing:

1: Go asynchronous, to reduce time spent waiting for responses

2: Cut logging, to reduce local disk I/O

3: Use multi-level caches, to shorten the data access path

4: Trim features, removing non-core or auxiliary functions

Database and table sharding, with routing by ID

Chapter 6  The core logic of inventory deduction in a flash sale system

A purchase generally has two steps: placing the order and paying.

Inventory is generally deducted in one of the following ways:

Deduct on order: the possible problem is malicious orders that are never paid, which hurt the seller's sales.

Deduct on payment: the possible problem is overselling. Because ordering and payment are separate, many buyers may place orders successfully, so the number of orders exceeds the inventory. Some buyers can then only be told of the failure at payment time, which hurts the shopping experience.

Pre-deduct (reserve) stock: combine the two. Reserve stock when the order is placed, and release it if payment is not made within a set period. This alleviates the problems above to some extent but does not stop malicious orders: even with the payment window set to 10 minutes, a malicious buyer can place the order again after ten minutes, or order many items at once to drain the stock. The answer here is to combine reservation with security and anti-cheating measures.

For example, identify and tag buyers who often order without paying (their orders can then skip the inventory deduction), set a maximum purchase quantity for certain categories (for example, at most 3 items per person), and limit the number of repeated unpaid orders. As for overselling, the number of orders placed within the 10 minutes may still exceed the stock, and this has to be handled case by case. For ordinary goods it can be solved by restocking. Some sellers do not allow negative inventory at all, in which case the buyer can only be told at payment time that stock is insufficient.
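The reserve-then-release behaviour can be sketched as below. This is an in-memory illustration under stated assumptions: the class and method names are made up, expired holds are released lazily on the next call rather than by a background job, and a real system would keep this state in a database or cache rather than in one process.

```python
import time


class StockReservations:
    """Reserve stock at order time and release it if payment does not
    arrive within the hold period."""

    def __init__(self, stock: int, hold_seconds: float, clock=time.monotonic):
        self.available = stock
        self.hold_seconds = hold_seconds
        self._clock = clock
        self._holds = {}  # order_id -> (quantity, expires_at)

    def release_expired(self) -> None:
        now = self._clock()
        for order_id, (quantity, expires_at) in list(self._holds.items()):
            if expires_at <= now:
                self.available += quantity  # unpaid in time: return the stock
                del self._holds[order_id]

    def reserve(self, order_id: str, quantity: int) -> bool:
        self.release_expired()
        if order_id in self._holds or quantity <= 0 or quantity > self.available:
            return False
        self.available -= quantity
        self._holds[order_id] = (quantity, self._clock() + self.hold_seconds)
        return True

    def pay(self, order_id: str) -> bool:
        self.release_expired()
        # Paying turns the hold into a sale, so the stock stays deducted.
        return self._holds.pop(order_id, None) is not None
```

With `hold_seconds=600` this matches the 10-minute payment window; per-buyer limits and abuse detection would sit in front of `reserve`.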

How does a large flash sale system deduct inventory?

Choose the scheme that fits the scenario: deduct on order.

Deducting on order raises a data consistency problem. The main point is to ensure that inventory cannot go negative under highly concurrent requests, that is, the inventory field in the database must never be negative. There are generally the following solutions:

Check inside a transaction in the application, ensuring that inventory after deduction is not negative;

Define the database field as an unsigned integer, so that an SQL statement that would push the inventory below zero fails with an error;

Use a CASE WHEN statement:

UPDATE item SET inventory = CASE WHEN inventory >= xxx THEN inventory-xxx ELSE inventory END
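The statement above always matches the row, so the caller cannot tell from the affected-row count whether the deduction happened. A common variant moves the check into the WHERE clause instead. The sketch below runs that variant against an in-memory SQLite database purely to stay self-contained; the `id` column and the function name are assumptions, and a production system would run the same statement against its own database.

```python
import sqlite3


def deduct(conn: sqlite3.Connection, item_id: int, quantity: int) -> bool:
    """Deduct stock only if enough remains; the check and the update are
    one atomic statement, so inventory can never go negative."""
    cursor = conn.execute(
        "UPDATE item SET inventory = inventory - ? "
        "WHERE id = ? AND inventory >= ?",
        (quantity, item_id, quantity),
    )
    conn.commit()
    return cursor.rowcount == 1  # 0 rows updated means not enough stock


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE item (id INTEGER PRIMARY KEY, inventory INTEGER NOT NULL)")
conn.execute("INSERT INTO item (id, inventory) VALUES (1, 3)")
conn.commit()
```

A `False` return tells the application to report "sold out" without a separate read of the inventory.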

Ultimate optimization of inventory deduction in a flash sale system

To be continued.

Chapter 7  Plan B

Where should high availability work start?

Architecture stage:

During the architecture stage, focus on scalability and fault tolerance. Avoid single points of failure, deploy in units across multiple data centers, and set up remote disaster recovery. Choose a design that fits the system's stage: at a QPS of only 10, simple CRUD may be enough; as traffic grows, distribution and microservice splitting are needed to keep the system highly available.

Coding stage:

The most important thing in the coding stage is code robustness, with a defensive programming mindset. For remote calls, set a reasonable timeout so the system is not dragged down by other systems. Also bound your expectations of a call's result set, so the result cannot exceed what the system can handle. Know the system you are responsible for well enough: which services and data are core, what can be degraded, where exceptions are tolerable, and which services need detailed data, so that the service stays available 24 hours a day.
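A timeout with a fallback value, as described for remote calls, might look like the sketch below. The helper name, the shared executor, and the choice to swallow all exceptions are assumptions for illustration; `remote_call` stands for any zero-argument function that performs the actual call.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

# Shared worker pool for outbound calls; the size is an arbitrary example value.
_executor = ThreadPoolExecutor(max_workers=4)


def call_with_timeout(remote_call, timeout_seconds: float, fallback):
    """Run remote_call, but give up and return fallback if it is slow or fails."""
    future = _executor.submit(remote_call)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        future.cancel()  # only prevents the call if it has not started yet
        return fallback
    except Exception:
        # The dependency raised: degrade to the fallback instead of failing.
        return fallback
```

The caller's latency is now bounded by `timeout_seconds`, although a call that has already started still occupies a worker thread until it finishes.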

Testing stage:

Ensure test case coverage, and use extreme data to test the robustness of the system.

Release stage:

Release in batches and verify with a small share of traffic in production. The release stage must support rollback.

Operation stage:

Monitoring is the core: be able to detect and locate problems in time.

Failure stage:

When a failure occurs, the first priority is to stop the loss: stop the loss first, then restore service, and finally clean up the data.

Special periods:

For big promotions, flash sales, and similar scenarios, refer to the whole article.

What can be done in the operation stage when the application cannot withstand a traffic peak?

Three means: degradation, rate limiting, and denial of service

1. Degradation

Making a degradation plan requires knowing the system you are responsible for, including its core functions and the third-party services it depends on, with dependent services tiered by importance. Degradation is generally divided into three types: business degradation, data degradation, and service degradation.

Business degradation applies to a product: some of its features are switched off. During Taobao's Double 11 promotion, for example, traffic concentrates on a few core features, so some non-core features can be taken offline to improve the system's resilience.

Data degradation means reducing the amount of data returned, for example returning 10 songs instead of 50. Less data means less time spent encoding, less thread processing time, and lower CPU usage.

Service degradation means degrading the third-party services the system depends on. When fetching product details, for example, the system also fetches additional information beyond the product itself (such as reviews, which depend on the comment system). Calls to these third-party services add network overhead, and more data means more CPU spent on serialization and encoding, which hurts performance.

So once the system reaches a certain capacity, different "contingency plans" should be prepared for different systems. Degradation is a purposeful, planned process and can be carried out through a contingency plan system.

2. Rate limiting

Rate limiting is more drastic than degradation. When system capacity hits its bottleneck and degradation no longer solves the problem, the system can be protected by rejecting part of the traffic.

Before the system goes live, estimate its water level (capacity) through load testing, and set the rate-limiting threshold below that level.

Common rate-limiting methods are based on QPS and on thread count. They can be applied in the Java layer or, better, in the web server in front of it, for example with nginx's rate limiting.
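QPS-based limiting is often implemented with a token bucket. The sketch below is one such implementation, not the mechanism nginx uses internally; the class name, parameters, and injectable `clock` are assumptions, and the class is not thread-safe as written.

```python
import time


class TokenBucket:
    """Allow on average rate_per_second requests, with short bursts
    of up to `burst` requests."""

    def __init__(self, rate_per_second: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = float(burst)
        self._clock = clock
        self._tokens = float(burst)  # start full so an initial burst is allowed
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to the elapsed time, capped at the bucket size.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False  # over the limit: reject or queue the request
```

Setting `rate_per_second` below the capacity measured in load testing keeps the system under its water level.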

3. Denial of service

Denial of service is the most extreme means of resisting a traffic peak. Its purpose is to prevent the worst case, in which servers collapse and cannot provide any service for a long time. For example, set overload protection on nginx so that when machine load reaches a certain value it rejects HTTP requests directly and returns a 503 error code.

nginx gateway layer + Lua scripts, reading tair/redis/diamond for dynamically configured rate limits

How a lottery system prevents overselling

Step one: get the key of the goods

Step two: write to the DB, with the key as the unique primary key. Once a winning record has been written for a key, no one else can write that key.

Taobao guarantees correctness by deducting inventory directly in the DB. The problem is that when a product has a large quantity and concurrency is high, all writes hit the same row, DB pressure is very high, and there is no queue in front of it to absorb the writes.

One key and 10 million people: what do we do?

Split the one key across 1,000 buckets, 999 of them empty. As long as the prize gets handed out it is OK; chronological order does not matter.

For VIP goods in large quantities, use a Redis atomic lock
