Using MATLAB deep learning with a recurrent neural network (RNN), the long short-term memory (LSTM) network, to predict waveform time series data

2022-06-22 07:11:00 【Extension Research Office】

Link to the original text: http://tecdat.cn/?p=27279

Source of the original text: the tecdat official account

This example shows how to use a long short-term memory (LSTM) network to predict time series data.

An LSTM network is a recurrent neural network (RNN) that processes input data by looping over time steps and updating the network state. The network state contains information remembered over all previous time steps. You can use an LSTM network to predict subsequent values of a time series or sequence, using the previous time steps as input. To train an LSTM network for time series prediction, train a regression LSTM network with sequence output, where the responses (targets) are the training sequences with their values shifted by one time step. In other words, at each time step of the input sequence, the LSTM network learns to predict the value of the next time step.

There are two prediction methods: open-loop prediction and closed-loop prediction.

Open-loop prediction predicts the next time step in a sequence using only the input data. When predicting subsequent time steps, you collect the true values from the data source and use them as input.

Closed-loop prediction predicts subsequent time steps in a sequence by using the previous predictions as input. In this case, the model does not need the true values to make a prediction.

This figure shows an example sequence with values predicted using closed-loop prediction.
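The difference between the two modes can be sketched without a neural network. The snippet below is only an illustration: it swaps the LSTM for a hypothetical one-step predictor `stepFcn` (a fixed linear map chosen purely for this sketch) and uses a made-up series `x`. In open-loop mode every prediction is fed the true previous value; in closed-loop mode it is fed the previous prediction.

```matlab
% Stand-in one-step predictor (hypothetical; replaces the LSTM in this sketch).
stepFcn = @(x) 0.5*x + 1;

% Made-up "true" series, used only for illustration.
x = [4 2 3 5 4 6];
numSteps = numel(x) - 1;

% Open loop: each prediction uses the true value of the previous time step.
yOpen = zeros(1,numSteps);
for t = 1:numSteps
    yOpen(t) = stepFcn(x(t));
end

% Closed loop: only the first true value is used; afterwards each
% prediction is fed back as the next input.
yClosed = zeros(1,numSteps);
xt = x(1);
for t = 1:numSteps
    yClosed(t) = stepFcn(xt);
    xt = yClosed(t);
end
```

Both modes agree on the first prediction because they start from the same true value. After that, the closed-loop output depends only on its own earlier predictions, so any error it makes is carried forward.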

This example uses a waveform data set that contains 2000 synthetically generated waveforms of varying lengths with three channels. The example trains an LSTM network to predict future values of a waveform, given the values of the previous time steps, using both closed-loop and open-loop prediction.

Load data

Check the size of the first few sequences .

data(1:5)

Check the number of channels. To train the network, each sequence must have the same number of channels.

numChannels = 3

Visualize the first few sequences in a plot.

for i = 1:4

    nexttile

    stackedplot(data{i}')

end

Divide the data into a training set and a test set. Use 90% of the observations for training and the rest for testing.
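The split itself is not shown in the text. A minimal sketch, assuming `data` is a cell array with one sequence per cell: the toy `data` built here is a stand-in for the real data set, and `idxTrain` and `idxTest` are illustrative names.

```matlab
% Toy stand-in for the data set: a cell array of 20 sequences
% (3 channels each, varying length). The real data set has 2000 sequences.
numChannels = 3;
data = cell(20,1);
for n = 1:numel(data)
    data{n} = rand(numChannels,50 + n);
end

% Use the first 90% of the observations for training and the rest for testing.
numObservations = numel(data);
idxTrain = 1:floor(0.9*numObservations);
idxTest = floor(0.9*numObservations)+1:numObservations;
dataTrain = data(idxTrain);
dataTest = data(idxTest);
```

This keeps the original order of the observations. Whether to shuffle before splitting is a separate design choice that the text does not address.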

Prepare training data

To predict the values of future time steps of a sequence, specify the targets as the training sequences with their values shifted by one time step. In other words, at each time step of the input sequence, the LSTM network learns to predict the value of the next time step. The predictors are the training sequences without the final time step.

for n = 1:numel(dataTrain)

    X = dataTrain{n};

    XTrain{n} = X(:,1:end-1);

    TTrain{n} = X(:,2:end);

end

For a better fit and to prevent training from diverging, normalize the predictors and the targets to have zero mean and unit variance. When making predictions, you must also normalize the test data using the same statistics as the training data. To easily calculate the mean and standard deviation over all sequences, concatenate the sequences in the time dimension.
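The normalization step can be sketched as follows. The toy `XTrain` here is made up for illustration, and `XAll` is an illustrative name; `muX` and `sigmaX` follow the names used later in the test code. The targets would be handled the same way with their own statistics (`muT`, `sigmaT`).

```matlab
% Toy training sequences (2 channels, different lengths), for illustration only.
XTrain = {[1 2 3; 10 20 30], [4 5; 40 50]};

% Concatenate along the time dimension (dimension 2) and compute
% per-channel statistics over all time steps of all sequences.
XAll = cat(2,XTrain{:});
muX = mean(XAll,2);
sigmaX = std(XAll,0,2);

% Normalize every sequence with the same training statistics.
for n = 1:numel(XTrain)
    XTrain{n} = (XTrain{n} - muX) ./ sigmaX;
end
```

Computing the statistics once over the concatenated data, rather than per sequence, keeps the relative scale between sequences intact and gives a single `muX` and `sigmaX` that can be reused on the test data.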

Define the LSTM network architecture

Create an LSTM regression network.

Use a sequence input layer whose input size matches the number of channels of the input data.

Use an LSTM layer with 128 hidden units. The number of hidden units determines how much information the layer learns. Using more hidden units can produce more accurate results, but is more likely to lead to overfitting of the training data.

To output sequences with the same number of channels as the input data, include a fully connected layer whose output size matches the number of channels of the input data.

Finally, include a regression layer.
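The four layers described above could be assembled as in the sketch below. It assumes the Deep Learning Toolbox is installed; the variable name `layers` is illustrative, and `numChannels` is set to 3 to match the channel count reported for this data set.

```matlab
numChannels = 3;   % number of channels, as reported for this data set

layers = [
    sequenceInputLayer(numChannels)     % one input feature per channel
    lstmLayer(128)                      % 128 hidden units
    fullyConnectedLayer(numChannels)    % map back to one output per channel
    regressionLayer];                   % regression output layer used for training
```

By default `lstmLayer` outputs the full sequence rather than only the last time step, which is what a sequence-to-sequence regression of this kind needs.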

Specify training options

Specify the training options.

Train using Adam optimization.

Train for 200 epochs. For larger data sets, you might not need to train for as many epochs to get a good fit.

In each mini-batch, left-pad the sequences so that they have the same length.

options = trainingOptions("adam", ...

    MaxEpochs=200, ...

    SequencePaddingDirection="left");

Train the neural network

Train the LSTM network with the specified training options.

Test the network

Prepare the test data for prediction using the same steps as for the training data.

Normalize the test data using the statistics calculated from the training data. Specify the targets as the test sequences with their values shifted by one time step, and the predictors as the test sequences without the final time step.

for n = 1:size(dataTest,1)

    X = dataTest{n};

    XTest{n} = (X(:,1:end-1) - muX) ./ sigmaX;

    TTest{n} = (X(:,2:end) - muT) ./ sigmaT;

end

Make predictions using the test data. Specify the same padding options as for training.

YTest = predict(net,XTest,SequencePaddingDirection="left");

To assess accuracy, calculate the root mean squared error (RMSE) between the predictions and the targets for each test sequence.

for i = 1:numel(YTest)

    rmse(i) = sqrt(mean((YTest{i} - TTest{i}).^2,"all"));

end

Visualize the errors in a histogram. Lower values indicate higher accuracy.

Calculate the mean RMSE over all test observations.

mean(rmse)

Predict future time steps

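Given an input time series or sequence, you can predict the values of multiple future time steps with the predictAndUpdateState function, which predicts one time step at a time and updates the network state at each prediction. For each prediction, use the previous prediction as the input to the function.

Visualize one of the test sequences in a plot.

figure

stackedplot(X')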

Open loop prediction

Open-loop prediction predicts the next time step in a sequence using only the input data. When predicting subsequent time steps, you collect the true values from the data source and use them as input. For example, suppose you want to predict the value at time step t of a sequence using the data collected at time steps 1 to t-1. To make a prediction for time step t+1, wait until you have recorded the true value at time step t and use it as input for the next prediction. Use open-loop prediction when you have true values to provide to the network before making the next prediction.

Update the network state using the first 75 time steps of the input data.

net = resetState(net);

offset = 75;

[net,~] = predictAndUpdateState(net,X(:,1:offset));

To make further predictions, loop over the time steps and update the network state. Predict the values of the remaining time steps of the test observation by looping over the time steps of the input data and using them as input to the network.
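The loop just described is not shown in the text. A minimal sketch, written as a hypothetical helper function `openLoopForecast` (the name and signature are assumptions for illustration, not part of the article). It assumes the Deep Learning Toolbox, a trained network `net` whose state has already been updated with the first `offset` time steps, and a network that outputs as many channels as it takes in.

```matlab
function Y = openLoopForecast(net,X,offset)
% openLoopForecast  Hypothetical helper (not from the original article).
% net    : trained LSTM network, state already updated with X(:,1:offset)
% X      : numChannels-by-numTimeSteps input sequence (true values)
% offset : number of time steps already used to update the state

numChannels = size(X,1);
numTimeSteps = size(X,2);
numPredictionTimeSteps = numTimeSteps - offset;
Y = zeros(numChannels,numPredictionTimeSteps);

for t = 1:numPredictionTimeSteps
    % Open loop: the input at each step is the true value, not a prediction.
    Xt = X(:,offset+t);
    [net,Y(:,t)] = predictAndUpdateState(net,Xt);
end
end
```

With the variables from the surrounding code, this would be called as `Y = openLoopForecast(net,X,offset);`, with `numTimeSteps = size(X,2)` for plotting. The result has `numTimeSteps - offset` columns, one prediction per remaining time step.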

Compare the predicted values with the target values.

for i = 1:numChannels

    nexttile

    plot(T(i,:))

    hold on

    plot(offset:numTimeSteps,[T(i,offset) Y(i,:)],'--')

end

Closed loop prediction

Closed-loop prediction predicts subsequent time steps in a sequence by using the previous predictions as input. In this case, the model does not need the true values to make a prediction. For example, suppose you want to predict the values at time steps t to t+k of a sequence using only the data collected at time steps 1 to t-1. To make a prediction for time step i, use the predicted value for time step i-1 as input. Use closed-loop prediction to predict multiple subsequent time steps, or when you do not have true values to provide to the network before making the next prediction.

Initialize the network state by first resetting it with the resetState function, then make an initial prediction Z from the input data. In this case the network state is updated using all time steps of the input sequence, so the offset equals the sequence length.

net = resetState(net);

offset = size(X,2);

[net,Z] = predictAndUpdateState(net,X);

To make further predictions, loop over time steps and update the network state. Predict the next 200 time steps by iteratively passing the previous predicted value to the network. Because the network does not need input data to make any further predictions, you can specify any number of time steps to predict.

numPredictionTimeSteps = 200;

Xt = Z(:,end);

Y = zeros(numChannels,numPredictionTimeSteps);

for t = 1:numPredictionTimeSteps

    [net,Y(:,t)] = predictAndUpdateState(net,Xt);

    Xt = Y(:,t);

end

Visualize the predicted values in a plot.

numTimeSteps = offset + numPredictionTimeSteps;

figure

t = tiledlayout(numChannels,1);

for i = 1:numChannels

    nexttile

    plot(T(i,1:offset))

    hold on

    plot(offset:numTimeSteps,[T(i,offset) Y(i,:)],'--')

end

Closed-loop prediction lets you predict any number of time steps, but it can be less accurate than open-loop prediction because the network has no access to the true values during the prediction process.


边栏推荐

- Introduction to 51 Single Chip Microcomputer -- digital clock

- Mid year summary of 33 year old programmer

- 猿辅导最强暑假计划分享:暑假计划这样做,学习玩耍两不误

- 精益生产|精益管理

- Quelles industries les diplômés recherchent - ils en 2022?

- Introduction to 51 Single Chip Microcomputer -- the use of Keil uvision4

- Notes on advanced combinatorics -- Conclusion

- Vue failed to connect to MySQL database

- 33歲程序員的年中總結

- JDBC query result set, which is converted into a table

猜你喜欢

【GAN】《ENERGY-BASED GENERATIVE ADVERSARIAL NETWORKS》 ICLR‘17

Correspondence between pytorch and torchvision

Canoe learning notes (2) diagram of trace window introduction

Buuctf part Title WP

Canoe learning notes (1) illustration of new project and channel configuration steps

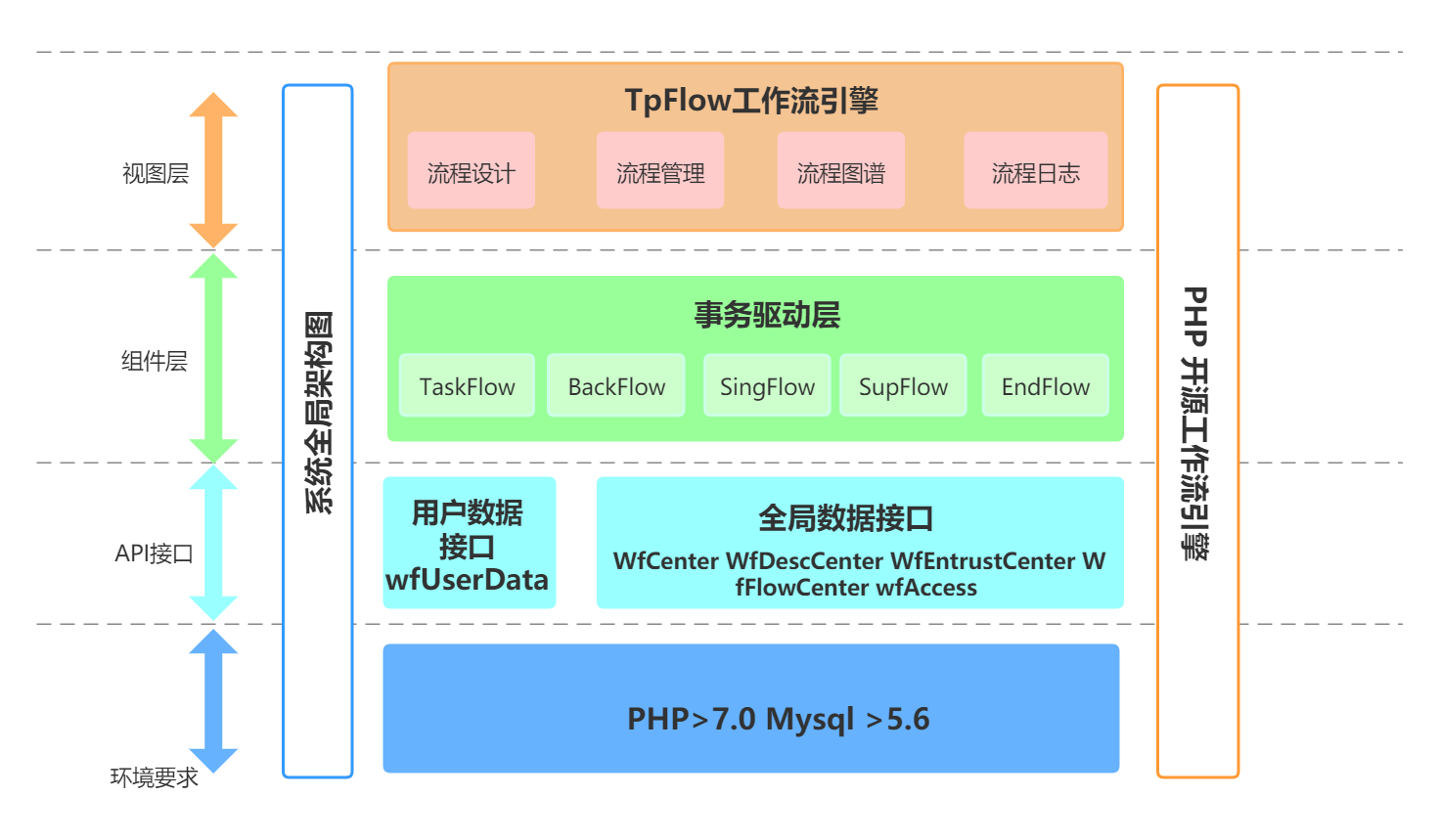

Tpflow V6.0.6 正式版发布

Canoe learning notes (3) graphic window introduction diagram

Cesium loading 3D tiles model

About structure (notes, for personal use)

C语言——深入理解数组

随机推荐

JS中对数组进行去重的几种方法

June 21, 2022: golang multiple choice question, what does the following golang code output? A:3; B:4; C:100; D: Compilation failed. package main import ( “fmt“ ) func

Wildfire stm32f407zgt6 learning notes beginner level chapter basic knowledge points

Qt development simple Bluetooth debugging assistant (low power Bluetooth)

Examples of Algebra: understanding of normal subgroups and quotient groups

Tpflow V6.0.6 正式版发布

[anomaly detection] malware detection: mamadroid (dnss 2017)

June training (day 22) - orderly gathering

【GAN】SAGAN ICML‘19

TypeScript & 详细解释 in、keyof、extends、索引签名、Record、typeof 的含义(不定时更新)

Buuctf part Title WP

Yolov1 (prediction process)

[internship] cross domain problems

从暴力递归到动态规划

校招路上的坑

2022-06-21:golang选择题,以下golang代码输出什么?A:3;B:4;C:100;D:编译失败。 package main import ( “fmt“ ) func

C语言——深入理解数组

Golang appelle sdl2, lit l'audio PCM et signale une erreur lors de l'exécution externe du Code.

33歲程序員的年中總結

Canoe learning notes (2) diagram of trace window introduction