SeaTunnel data integration framework: learning notes

2022-06-12 05:49:00 【coder_ szc】

Overview

Introduction

SeaTunnel is an easy-to-use data integration framework. In an enterprise, because systems are built at different times or by different departments, multiple heterogeneous information systems often run side by side on different hardware and software platforms. Data integration brings together data of different sources, formats, and characteristics, either logically or physically, so that the enterprise can share data comprehensively. SeaTunnel supports real-time synchronization of massive data. It can synchronize tens of billions of records per day stably and efficiently, and has been used in production by nearly 100 companies.

SeaTunnel was formerly known as Waterdrop (Chinese name: 水滴) and was renamed SeaTunnel on October 12, 2021. On December 9, 2021, SeaTunnel passed the Apache Software Foundation's vote unanimously and officially became an Apache Incubator project. On March 18, 2022, the community officially released the first Apache version, v2.1.0.

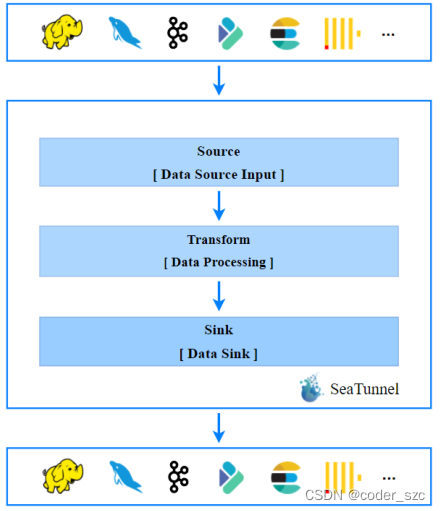

Essentially, SeaTunnel does not modify the internals of Spark or Flink; it is a layer of wrapping built on top of them. It mainly uses the inversion of control design pattern, which is also the basic idea behind SeaTunnel's implementation. Day-to-day use of SeaTunnel comes down to editing configuration files. SeaTunnel converts the edited configuration file into a concrete Spark or Flink job, as shown in the figure.

Application scenarios

At present, SeaTunnel's strength is its rich set of connectors, and because it uses Spark and Flink as its engines, it can synchronize massive distributed data well. SeaTunnel is usually used as a tool for moving data into and out of a data warehouse, or for data integration. The picture below shows the SeaTunnel workflow:

Official website: https://seatunnel.apache.org/zh-CN/

Plugin support

Spark connector plugin support:

Flink connector plugin support:

Spark and Flink transform plugin support:

Installation and configuration

Installation

As of SeaTunnel 2.1.0, the supported environments are Spark 2.x, Flink 1.9.0 and above, and JDK >= 1.8.

First, download and extract SeaTunnel:

wget https://downloads.apache.org/incubator/seatunnel/2.1.0/apache-seatunnel-incubating-2.1.0-bin.tar.gz

tar -zxvf apache-seatunnel-incubating-2.1.0-bin.tar.gz

Configuration

Go to the apache-seatunnel-incubating-2.1.0/config/ directory and edit the seatunnel-env.sh file:

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# Home directory of spark distribution.

SPARK_HOME=${SPARK_HOME:-/opt/spark}

# Home directory of flink distribution.

FLINK_HOME=${FLINK_HOME:-/opt/flink}

# Control whether to print the ascii logo

export SEATUNNEL_PRINT_ASCII_LOGO=true

By default, SPARK_HOME and FLINK_HOME take the values of the corresponding system environment variables. If a variable is unset or empty, the value after :- is used instead. Modify these as needed.
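The `${VAR:-default}` form used in that file is standard shell parameter expansion. The following is a minimal sketch of the behaviour; `resolve_home` is a hypothetical helper written for illustration only and is not part of SeaTunnel, and `/usr/local/spark` is an assumed example path.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not part of SeaTunnel): returns the first argument
# if it is non-empty, otherwise the fallback given as the second argument.
# This mirrors what SPARK_HOME=${SPARK_HOME:-/opt/spark} does.
resolve_home() {
  local from_env="$1"
  local fallback="$2"
  echo "${from_env:-$fallback}"
}

# Environment variable not set (empty): the fallback is used.
resolve_home "" /opt/spark

# Environment variable set: its value is used and the fallback is ignored.
resolve_home /usr/local/spark /opt/spark
```

So if Spark or Flink is installed somewhere other than /opt, exporting SPARK_HOME or FLINK_HOME beforehand should be enough, and the file itself may not need editing.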

Usage

Case 1: Getting started

Create the job configuration file example01.conf with the following contents:

# Configure Spark or Flink parameters

env {

# You can set flink configuration here

execution.parallelism = 1

#execution.checkpoint.interval = 10000

#execution.checkpoint.data-uri = "hdfs://scentos:9092/checkpoint"

}

# Configure the data source in the source block

source {

SocketStream{

host = scentos

result_table_name = "fake"

field_name = "info"

}

}

# Declare the transform plugins in the transform block

transform {

Split{

separator = "#" # use # to split the input into two fields, name and age

fields = ["name","age"]

}

sql {

sql = "select info, split(info) as info_row from fake" # call the split() function to do the splitting

}

}

# Declare where to output in the sink block

sink {

ConsoleSink {

}

}

Then, first run:

nc -lk 9999

Next, submit the job from the apache-seatunnel-incubating-2.1.0 directory:

bin/start-seatunnel-flink.sh --config config/example01.conf

Once "Job has been submitted with JobID XXXXXXX" appears, you can type input in the nc -lk terminal:

nc -lk 9999

szc#23^H

szcc#26

dawqe

jioec*231#1

Then view the output in Flink's web UI:

With that, we have run an official example end to end. It uses a socket as the data source, processes the data with SQL, and finally outputs to the console. Along the way we wrote no Flink code and invoked no jar by hand; we only declared the data processing flow in a configuration file. Behind the scenes, SeaTunnel translates the configuration file into a concrete Flink job. Being configuration-driven, low-code, and easy to maintain is SeaTunnel's most distinctive feature.
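To make the effect of the Split settings concrete (separator "#", fields name and age), here is a rough shell sketch of the same idea applied to one input line. `split_record` is a hypothetical helper written for this note; it is not SeaTunnel code, and how SeaTunnel itself handles lines without a separator is not covered by it.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not SeaTunnel code): splits one "name#age" line
# on "#" and prints the two resulting fields.
split_record() {
  local line="$1"
  local name age
  # IFS='#' applies only to this read; the here-string feeds it the line.
  IFS='#' read -r name age <<< "$line"
  echo "name=${name} age=${age}"
}

# Two of the lines typed into nc in this case.
split_record 'szc#23'
split_record 'jioec*231#1'
```

In this sketch a line with no "#" (such as dawqe) simply ends up with an empty age; that is a property of the sketch, not a statement about the plugin.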

Case 2: Passing parameters

In the config directory, make a copy of example01.conf:

cp example01.conf example02.conf

Modify the sql plugin section:

# Configure Spark or Flink parameters

env {

# You can set flink configuration here

execution.parallelism = 1

#execution.checkpoint.interval = 10000

#execution.checkpoint.data-uri = "hdfs://scentos:9092/checkpoint"

}

# Configure the data source in the source block

source {

SocketStream{

host = scentos

result_table_name = "fake"

field_name = "info"

}

}

# Declare the transform plugins in the transform block

transform {

Split{

separator = "#"

fields = ["name","age"]

}

sql {

sql = "select * from (select info, split(info) as info_row from fake) where age > '"${age}"'" # nest a subquery, then filter with where

}

}

# Declare where to output in the sink block

sink {

ConsoleSink {

}

}

After starting nc -lk, pass the parameter to SeaTunnel with -i, running the command from the apache-seatunnel-incubating-2.1.0 directory:

bin/start-seatunnel-flink.sh --config config/example02.conf -i age=25

Multiple parameters require multiple -i options. -i can also be changed to -p, -m, and so on, as long as it is not --config or --variable.
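The quoting around ${age} in the sql line can look odd: the double-quoted string is closed just before ${age} and reopened just after it, so the variable sits outside the quotes and is substituted rather than taken literally. Shell quoting happens to behave the same way, so the sketch below uses it as an analogy to show the string that results for age=25. It only illustrates the quoting; it is not how SeaTunnel performs the substitution.

```shell
#!/usr/bin/env bash

# Stand-in for the value passed with "-i age=25".
age=25

# Same quoting pattern as in example02.conf: the string closes before
# ${age} and reopens after it, leaving the single quotes inside the text.
sql="select * from (select info, split(info) as info_row from fake) where age > '"${age}"'"

echo "$sql"
```

The printed statement ends in where age > '25', which is the filter applied in this case.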

The test input is as follows:

nc -lk 9999

szc#26

szc002:#21

szc003#27

The output is as follows:

As you can see, szc002, aged 21, is filtered out.
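For a quick check of which test lines should survive the filter without starting a job, the same condition can be approximated with awk. This is a sketch under assumptions: `filter_by_age` is a hypothetical helper, and it compares ages numerically, whereas the SQL in the config compares against the quoted value '25'.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not SeaTunnel code): reads "name#age" lines from
# stdin and prints only those whose age is greater than the first argument.
filter_by_age() {
  local min_age="$1"
  # "+ 0" forces a numeric comparison on both sides.
  awk -F'#' -v min_age="$min_age" '$2 + 0 > min_age + 0'
}

# The three test lines from this case, filtered with age=25.
printf '%s\n' 'szc#26' 'szc002:#21' 'szc003#27' | filter_by_age 25
```

With the three inputs above, only the lines with ages 26 and 27 are printed, matching the result described in the article.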
