当前位置:网站首页>Introduction to CUDA Programming minimalist tutorial

Introduction to CUDA Programming minimalist tutorial

2022-06-25 02:50:00 【Startling Hong Yibo】

from CUDA A minimalist tutorial on getting started with programming - You know

Preface

2006 year ,NVIDIA The company released CUDA,CUDA yes Based on the NVIDIA Of CPUs A general parallel computing platform and programming model on , be based on CUDA Programming can take advantage of GPUs Parallel computing engine for , To solve more complex computing problems more efficiently . In recent years ,GPU One of the most successful applications is the field of deep learning , be based on GPU Parallel computing has become the standard configuration of training deep learning model . at present , Abreast of the times CUDA Version is CUDA 9.

GPU It is not an independent computing platform , And need to work with CPU Working together , It can be seen as CPU The coprocessor , So when we're saying GPU In parallel computing , Actually, it means based on CPU+GPU Heterogeneous computing architecture . In heterogeneous computing architecture ,GPU And CPU adopt PCIe Bus Connect and work together ,CPU The location is called the host side (host), and GPU The location is called the equipment end (device), As shown in the figure below .

be based on CPU+GPU Heterogeneous computing . source :Preofessional CUDA C Programming

You can see GPU Including more computing cores , It is especially suitable for data parallel computing intensive tasks , Such as large matrix operation , and CPU There are fewer computing cores , But it can realize complex logical operations , Therefore, it is suitable for controlling intensive tasks . in addition ,CPU Upper Threads are heavyweight , Context switching is expensive , however GPU Due to the existence A lot of cores , Its threads are lightweight . therefore , be based on CPU+GPU Heterogeneous computing platforms can complement each other ,CPU Responsible for dealing with complex logic Serial program , and GPU Focus on data intensive Parallel computing Program , So as to maximize the effect .

be based on CPU+GPU Heterogeneous computing application execution logic . source :Preofessional CUDA C Programming

CUDA yes NVIDIA Developed by the company GPU Programming model , It provides GPU Simple programming interface , be based on CUDA Programming can build on GPU Computing applications .CUDA Provides support for other programming languages , Such as C/C++,Python,Fortran Other languages , Here we choose CUDA C/C++ The interface of CUDA Explain programming . The development platform is Windows 10 + VS 2013,Windows Under the system CUDA Installation tutorial can refer to here .

CUDA Programming languages supported by the programming model

CUDA Fundamentals of programming model

In the given CUDA Before the programming instance of , First of all, here CUDA Some concepts and basic knowledge in programming model are briefly introduced .CUDA The programming model is a heterogeneous model , need CPU and GPU Working together . stay CUDA in ,host and device It's two important concepts , We use it host Refer to CPU And its memory , While using device Refer to GPU And its memory .CUDA The program contains both host Program , Contain, device Program , They are in CPU and GPU Up operation . meanwhile ,host And device Can communicate with each other , In this way, data can be copied between them .

Typical CUDA The execution process of the program is as follows

- Distribute host Memory , And data initialization ;

- Distribute device Memory , and from host Copy data to device On ;

- call CUDA Kernel function of stay device Complete the specified operation on ;

- take device Copy the result of the operation on to host On ;

- Release device and host Memory allocated on .

The most important procedure in the above process is to call CUDA Kernel function to perform parallel computing ,kernel yes CUDA An important concept in ,kernel Is in device Functions executed in parallel in the upper thread , The kernel function uses __global__ Symbol declaration , When calling, you need to use <<<grid, block>>> To specify the kernel Number of threads to execute , stay CUDA in , Each thread executes a kernel function , And each thread will be assigned a unique thread number thread ID, This ID The value can be passed through the built-in variable of the kernel function threadIdx To obtain a .

because GPU It's actually a heterogeneous model , So we need to distinguish host and device The code on , stay CUDA Is distinguished by function type qualifiers host and device The function on , The main three Function type qualifier as follows :

__global__: stay device On the implementation , from host Call in ( Some specific GPU You can also get it from device On the call ), The return type must bevoid, Variable parameters are not supported , Cannot be a class member function . Use it carefully__global__Defined kernel It's asynchronous , It means host Don't wait for kernel After execution, proceed to the next step .__device__: stay device On the implementation , Only from device Call in , Not with__global__Simultaneous use .__host__: stay host On the implementation , Only from host On the call , Generally omit and do not write , Not with__global__Simultaneous use , But it can be compared with__device__, At this point, the function will be in device and host All compile .

Have a deep understanding of kernel, You have to deal with kernel Have a clear understanding of the thread hierarchy . First GPU There are many parallelized lightweight threads on the .kernel stay device When executing on, it actually starts many threads , One kernel All threads that are started are called a grid (grid), Threads on the same grid share the same global memory space ,grid Is the first level of thread structure , And the grid can be divided into many Thread block (block), A thread block contains many threads , This is the second level . The two-tier organization structure of threads is shown in the figure below , This is a gird and block Are all 2-dim Thread organization .grid and block Are defined as dim3 Variable of type ,dim3 It can be regarded as containing three unsigned integers (x,y,z) Member's structure variable , In defining , The default value is initialized to 1. therefore grid and block Can be flexibly defined as 1-dim,2-dim as well as 3-dim structure , For the structure in the figure ( The main horizontal direction is x Axis ), Defined grid and block As shown below ,kernel When calling, you must also pass Perform configuration <<<grid, block>>> To specify the kernel Number and structure of threads used .

dim3 grid(3, 2);

dim3 block(5, 3);

kernel_fun<<< grid, block >>>(prams...);

Kernel A two-tier thread organization on (2-dim)

therefore , A thread needs two built-in coordinate variables (blockIdx,threadIdx) To uniquely identify , They are all dim3 Type variable , among blockIdx Indicates where the thread is located grid Position in , and threaIdx Indicates where the thread is located block Position in , As shown in figure of Thread (1,1) Satisfy :

threadIdx.x = 1

threadIdx.y = 1

blockIdx.x = 1

blockIdx.y = 1Threads on a thread block are placed on the same streaming multiprocessor (SM) Upper , But individually SM Our resources are limited , This results in a limited number of threads in the thread block , modern GPUs The thread block can support up to 1024 individual . occasionally , We need to know that a thread is blcok The overall situation in ID, At this time, we must also know block Organizational structure of , This is through the thread's built-in variables blockDim To obtain a . It gets the size of each dimension of the thread block . For one 2-dim Of block Failed to re upload and cancel the transfer , Threads Of ID The value is , If it is 3-dim Of block , Threads Of ID The value is . In addition, threads have built-in variables gridDim, Used to obtain the size of each dimension of the grid block .

kernel This thread structure is naturally suitable for vector,matrix Such as operation , For example, we will use the above figure 2-dim Structure to realize the addition of two matrices , Each thread is responsible for processing the addition of two elements at each location , The code is as follows . The thread block size is (16, 16), And then N*N The size of the matrix is divided into different thread blocks to perform addition .

// Kernel Definition

__global__ void MatAdd(float A[N][N], float B[N][N], float C[N][N])

{

int i = blockIdx.x * blockDim.x + threadIdx.x;

int j = blockIdx.y * blockDim.y + threadIdx.y;

if (i < N && j < N)

C[i][j] = A[i][j] + B[i][j];

}

int main()

{

...

// Kernel Thread configuration

dim3 threadsPerBlock(16, 16);

dim3 numBlocks(N / threadsPerBlock.x, N / threadsPerBlock.y);

// kernel call

MatAdd<<<numBlocks, threadsPerBlock>>>(A, B, C);

...

}In addition, here is a brief introduction CUDA Memory model , As shown in the figure below . You can see , Each thread has its own private local memory (Local Memory), Each thread block contains shared memory (Shared Memory), Can be shared by all threads in the thread block , Its life cycle is consistent with the thread block . Besides , All threads have access to global memory (Global Memory). You can also access some read-only memory blocks : Constant memory (Constant Memory) And texture memory (Texture Memory). Memory structure involves program optimization , They are not discussed in depth here .

CUDA Memory model

There's also an important point , You need to GPU Have a basic understanding of the hardware implementation of . It says kernel Thread organization hierarchy , So one kernel It actually starts a lot of threads , These threads are logically parallel , But in the physical layer, it is not necessarily . This is actually the same as CPU Multithreading has similarities , Multithreading without multi-core support , It is also impossible to achieve parallelism in the physical layer . But fortunately, GPU There is a lot of CUDA The core , make the best of CUDA The core can give full play to GPU The ability of parallel computing .GPU One of the core components of hardware is SM, I've said that before ,SM The English name is Streaming Multiprocessor, Stream multiprocessor .SM Its core components include CUDA The core , Shared memory , Register, etc ,SM Hundreds of threads can be executed concurrently , Concurrency depends on SM Number of resources owned . When one kernel When executed , its gird Thread blocks in are allocated to SM On , A thread block can only be in one SM Was dispatched to .SM In general, multiple thread blocks can be scheduled , It depends SM Own ability . So it's possible that one kernel Each thread block of is allocated multiple SM, therefore grid It's just logic , and SM It's the physical layer of execution .SM It's using SIMT (Single-Instruction, Multiple-Thread, Single instruction multithreading ) framework , The basic execution unit is the thread bundle (warps), The thread bundle contains 32 Threads , These threads execute the same instructions at the same time , But each thread contains its own instruction address counter and register status , It also has its own independent execution path . So although the threads in the thread bundle execute from the same program address at the same time , But it may have different behaviors , For example, when you encounter a branch structure , Some threads may enter this branch , But others may not execute , They can only wait , because GPU Specifies that all threads in the thread bundle execute the same instruction in the same cycle , Thread bundle differentiation can lead to performance degradation . When the thread block is divided into a SM Upper time , It will be further divided into multiple thread bundles , Because that's what it is SM The basic execution unit of , But one SM The number of concurrent thread bundles is limited . This is because of resource constraints ,SM To allocate shared memory for each thread block , Separate registers should also be allocated to the threads in each thread bundle . therefore SM The configuration of will affect the number of thread blocks and thread bundles it supports . All in all , That is, the grid and thread blocks are only logical partitions , One kernel In fact, all threads in the physical layer are not necessarily concurrent at the same time . therefore kernel Of grid and block Different configurations of , Performance will vary , This should be paid special attention to . also , because SM The basic execution unit of contains 32 Thread bundle of threads , therefore block The size is usually set to 32 Multiple .

CUDA The logic layer and physical layer of programming

It's going on CUDA Before programming , You can check your own GPU Hardware configuration , In this way, we can have a specific target , You can get... Through the following procedure GPU Configuration properties of :

int dev = 0;

cudaDeviceProp devProp;

CHECK(cudaGetDeviceProperties(&devProp, dev));

std::cout << " Use GPU device " << dev << ": " << devProp.name << std::endl;

std::cout << "SM The number of :" << devProp.multiProcessorCount << std::endl;

std::cout << " Shared memory size per thread block :" << devProp.sharedMemPerBlock / 1024.0 << " KB" << std::endl;

std::cout << " Maximum number of threads per thread block :" << devProp.maxThreadsPerBlock << std::endl;

std::cout << " Every EM Is the maximum number of threads :" << devProp.maxThreadsPerMultiProcessor << std::endl;

std::cout << " Every SM The maximum number of thread bundles :" << devProp.maxThreadsPerMultiProcessor / 32 << std::endl;

// Output is as follows

Use GPU device 0: GeForce GT 730

SM The number of :2

Shared memory size per thread block :48 KB

Maximum number of threads per thread block :1024

Every EM Is the maximum number of threads :2048

Every EM The maximum number of thread bundles :64ok ,GT 730 The graphics card is really a little scum , Only 2 individual SM, Sobbing ......

Vector addition example

got it CUDA Programming based , Let's have a simple practical battle , utilize CUDA Programming the addition of two vectors , Before implementation , Just a quick introduction CUDA Memory management in programming API. The first is device Memory allocated on the cudaMalloc function :

cudaError_t cudaMalloc(void** devPtr, size_t size);The sum of this function C In language malloc similar , But in device Apply for a certain byte size of video memory , among devPtr Is a pointer to the allocated memory . At the same time, free up the allocated memory usage cudaFree function , This sum C In language free Function corresponds to . Another important function is responsible for host and device Data communication between cudaMemcpy function :

cudaError_t cudaMemcpy(void* dst, const void* src, size_t count, cudaMemcpyKind kind)among src Point to the data source , and dst It's the target area ,count Is the number of bytes copied , among kind Controls the direction of replication :cudaMemcpyHostToHost, cudaMemcpyHostToDevice, cudaMemcpyDeviceToHost And cudaMemcpyDeviceToDevice, Such as cudaMemcpyHostToDevice take host Copy data to device On .

Now let's implement an example of vector addition , here grid and block All designed as 1-dim, First define kernel as follows :

// Two vector addition kernel,grid and block All are one-dimensional

__global__ void add(float* x, float * y, float* z, int n)

{

// Get global index

int index = threadIdx.x + blockIdx.x * blockDim.x;

// step

int stride = blockDim.x * gridDim.x;

for (int i = index; i < n; i += stride)

{

z[i] = x[i] + y[i];

}

}among stride As a whole grid Number of threads for , Sometimes a vector has a lot of elements , At this point, multiple elements can be implemented in each thread ( The total number of elements / Total threads ) Addition of , It is equivalent to using multiple grid To deal with it , This is a kind of grid-stride loop The way , However, in the following example, a thread only processes one element , therefore kernel The inner loop is not executed . Let's implement vector addition :

int main()

{

int N = 1 << 20;

int nBytes = N * sizeof(float);

// apply host Memory

float *x, *y, *z;

x = (float*)malloc(nBytes);

y = (float*)malloc(nBytes);

z = (float*)malloc(nBytes);

// Initialization data

for (int i = 0; i < N; ++i)

{

x[i] = 10.0;

y[i] = 20.0;

}

// apply device Memory

float *d_x, *d_y, *d_z;

cudaMalloc((void**)&d_x, nBytes);

cudaMalloc((void**)&d_y, nBytes);

cudaMalloc((void**)&d_z, nBytes);

// take host Copy the data to device

cudaMemcpy((void*)d_x, (void*)x, nBytes, cudaMemcpyHostToDevice);

cudaMemcpy((void*)d_y, (void*)y, nBytes, cudaMemcpyHostToDevice);

// Definition kernel Implementation configuration of

dim3 blockSize(256);

dim3 gridSize((N + blockSize.x - 1) / blockSize.x);

// perform kernel

add << < gridSize, blockSize >> >(d_x, d_y, d_z, N);

// take device Copy the results to host

cudaMemcpy((void*)z, (void*)d_z, nBytes, cudaMemcpyDeviceToHost);

// Check the execution results

float maxError = 0.0;

for (int i = 0; i < N; i++)

maxError = fmax(maxError, fabs(z[i] - 30.0));

std::cout << " maximum error : " << maxError << std::endl;

// Release device Memory

cudaFree(d_x);

cudaFree(d_y);

cudaFree(d_z);

// Release host Memory

free(x);

free(y);

free(z);

return 0;

}Here our vector size is 1<<20, and block The size is 256, that grid Size is 4096,kernel The thread hierarchy of is shown in the figure below :

kernel Thread hierarchy . source :https://devblogs.nvidia.com/even-easier-introduction-cuda/

Use nvprof Tools can analyze kernel Operation of the , The results are shown below , You can see kernel The function takes about 1.5ms.

nvprof cuda9.exe

==7244== NVPROF is profiling process 7244, command: cuda9.exe

maximum error : 4.31602e+008

==7244== Profiling application: cuda9.exe

==7244== Profiling result:

Type Time(%) Time Calls Avg Min Max Name

GPU activities: 67.57% 3.2256ms 2 1.6128ms 1.6017ms 1.6239ms [CUDA memcpy HtoD]

32.43% 1.5478ms 1 1.5478ms 1.5478ms 1.5478ms add(float*, float*, float*, int)You adjust block Size , Compare... Under different configurations kernel Operation of the , What I'm testing here is when block by 128 when ,kernel Time consuming 1.6ms, and block by 512 when kernel Time consuming 1.7ms, When block by 64 when ,kernel Time consuming 2.3ms. It doesn't seem to be block The bigger the better , Instead, choose .

In the above implementation , We need to be alone in host and device Memory allocation on the , And copy the data , It's easy to make mistakes . Fortunately CUDA 6.0 Introduce unified memory (Unified Memory) To avoid this trouble , To put it simply, unified memory uses a managed memory to jointly manage host and device Memory in , And automatically in host and device Data transmission in .CUDA Use in cudaMallocManaged Function to allocate managed memory :

cudaError_t cudaMallocManaged(void **devPtr, size_t size, unsigned int flag=0);Use unified memory , The above procedure can be simplified as follows :

int main()

{

int N = 1 << 20;

int nBytes = N * sizeof(float);

// Request managed memory

float *x, *y, *z;

cudaMallocManaged((void**)&x, nBytes);

cudaMallocManaged((void**)&y, nBytes);

cudaMallocManaged((void**)&z, nBytes);

// Initialization data

for (int i = 0; i < N; ++i)

{

x[i] = 10.0;

y[i] = 20.0;

}

// Definition kernel Implementation configuration of

dim3 blockSize(256);

dim3 gridSize((N + blockSize.x - 1) / blockSize.x);

// perform kernel

add << < gridSize, blockSize >> >(x, y, z, N);

// Sync device Ensure that the results can be accessed correctly

cudaDeviceSynchronize();

// Check the execution results

float maxError = 0.0;

for (int i = 0; i < N; i++)

maxError = fmax(maxError, fabs(z[i] - 30.0));

std::cout << " maximum error : " << maxError << std::endl;

// Free memory

cudaFree(x);

cudaFree(y);

cudaFree(z);

return 0;

}Compared to the previous code , Using unified memory is simpler , It is worth noting that kernel Execution is related to host Asynchronous , Because managed memory automatically transfers data , Here want to use cudaDeviceSynchronize() Function guarantees device and host Sync , In this way, you can correctly access kernel The result of the calculation .

Matrix multiplication example

Finally, let's implement a slightly more complex example , Is the multiplication of two matrices , Set the input matrix as

Failed to re upload and cancel the transfer and , In order to get . The implementation idea is that each thread calculates An element value of , For matrix operation , It should be selected grid and block by 2-D Of . First define the structure of the matrix :

// Matrix type , Line first ,M(row, col) = *(M.elements + row * M.width + col)

struct Matrix

{

int width;

int height;

float *elements;

};

Matrix multiplication implementation mode

Then realize the kernel function of matrix multiplication , Here we define two auxiliary __device__ The function is used to obtain the element value of the matrix and assign a value to the matrix element , The specific code is as follows :

// Get matrix A Of (row, col) Elements

__device__ float getElement(Matrix *A, int row, int col)

{

return A->elements[row * A->width + col];

}

// For matrix A Of (row, col) Element assignment

__device__ void setElement(Matrix *A, int row, int col, float value)

{

A->elements[row * A->width + col] = value;

}

// matrix multiplication kernel,2-D, Each thread calculates an element

__global__ void matMulKernel(Matrix *A, Matrix *B, Matrix *C)

{

float Cvalue = 0.0;

int row = threadIdx.y + blockIdx.y * blockDim.y;

int col = threadIdx.x + blockIdx.x * blockDim.x;

for (int i = 0; i < A->width; ++i)

{

Cvalue += getElement(A, row, i) * getElement(B, i, col);

}

setElement(C, row, col, Cvalue);

}Finally, we use unified memory to write a test example of matrix multiplication :

int main()

{

int width = 1 << 10;

int height = 1 << 10;

Matrix *A, *B, *C;

// Request managed memory

cudaMallocManaged((void**)&A, sizeof(Matrix));

cudaMallocManaged((void**)&B, sizeof(Matrix));

cudaMallocManaged((void**)&C, sizeof(Matrix));

int nBytes = width * height * sizeof(float);

cudaMallocManaged((void**)&A->elements, nBytes);

cudaMallocManaged((void**)&B->elements, nBytes);

cudaMallocManaged((void**)&C->elements, nBytes);

// Initialization data

A->height = height;

A->width = width;

B->height = height;

B->width = width;

C->height = height;

C->width = width;

for (int i = 0; i < width * height; ++i)

{

A->elements[i] = 1.0;

B->elements[i] = 2.0;

}

// Definition kernel Implementation configuration of

dim3 blockSize(32, 32);

dim3 gridSize((width + blockSize.x - 1) / blockSize.x,

(height + blockSize.y - 1) / blockSize.y);

// perform kernel

matMulKernel << < gridSize, blockSize >> >(A, B, C);

// Sync device Ensure that the results can be accessed correctly

cudaDeviceSynchronize();

// Check the execution results

float maxError = 0.0;

for (int i = 0; i < width * height; ++i)

maxError = fmax(maxError, fabs(C->elements[i] - 2 * width));

std::cout << " maximum error : " << maxError << std::endl;

return 0;

}The size of the matrix here is , Designed thread block The size is (32, 32), that grid The size is (32, 32), The final test results are as follows :

nvprof cuda9.exe

==16304== NVPROF is profiling process 16304, command: cuda9.exe

maximum error : 0

==16304== Profiling application: cuda9.exe

==16304== Profiling result:

Type Time(%) Time Calls Avg Min Max Name

GPU activities: 100.00% 1.32752s 1 1.32752s 1.32752s 1.32752s matMulKernel(Matrix*, Matrix*, Matrix*)

API calls: 83.11% 1.32762s 1 1.32762s 1.32762s 1.32762s cudaDeviceSynchronize

13.99% 223.40ms 6 37.233ms 37.341us 217.66ms cudaMallocManaged

2.81% 44.810ms 1 44.810ms 44.810ms 44.810ms cudaLaunch

0.08% 1.3300ms 94 14.149us 0ns 884.64us cuDeviceGetAttribute

0.01% 199.03us 1 199.03us 199.03us 199.03us cuDeviceGetName

0.00% 10.009us 1 10.009us 10.009us 10.009us cuDeviceTotalMem

0.00% 6.5440us 1 6.5440us 6.5440us 6.5440us cudaConfigureCall

0.00% 3.0800us 3 1.0260us 385ns 1.5400us cudaSetupArgument

0.00% 2.6940us 3 898ns 385ns 1.5390us cuDeviceGetCount

0.00% 1.9250us 2 962ns 385ns 1.5400us cuDeviceGet

==16304== Unified Memory profiling result:

Device "GeForce GT 730 (0)"

Count Avg Size Min Size Max Size Total Size Total Time Name

2051 4.0000KB 4.0000KB 4.0000KB 8.011719MB 21.20721ms Host To Device

270 45.570KB 4.0000KB 1.0000MB 12.01563MB 7.032508ms Device To HostOf course , This is not the most efficient implementation , You can continue to optimize later ...

Summary

Finally, there is only one sentence :CUDA It's easy to get started , But it's hard to go deep ! I hope it's not from getting started to giving up ...

Reference material

- John Cheng, Max Grossman, Ty McKercher. Professional CUDA C Programming, 2014.

- CUDA docs.

- An Even Easier Introduction to CUDA.

- Unified Memory in CUDA 6.

- Maximizing Unified Memory Performance in CUDA.

边栏推荐

- 高数 | 精通中值定理 解题套路汇总

- 14 bs对象.节点名称.name attrs string 获取节点名称 属性 内容

- Enlightenment of using shadergraph to make edge fusion particle shader

- @PostConstruct

- Pit entry machine learning: I. Introduction

- Processon producer process (customized)

- Resolution of cross reference in IDA

- Tell you about mvcc sequel

- yarn : 无法加载文件 C:\Users\xxx\AppData\Roaming\npm\yarn.ps1,因为在此系统上禁止运行脚本

- GO同步等待组

猜你喜欢

![[analysis of STL source code] functions and applications of six STL components (directory)](/img/f2/872fd93ef52b1424343ba634be24f6.png)

[analysis of STL source code] functions and applications of six STL components (directory)

automated testing

Leetcode 210: curriculum II (topological sorting)

AI clothing generation helps you complete the last step of clothing design

高速缓存Cache详解(西电考研向)

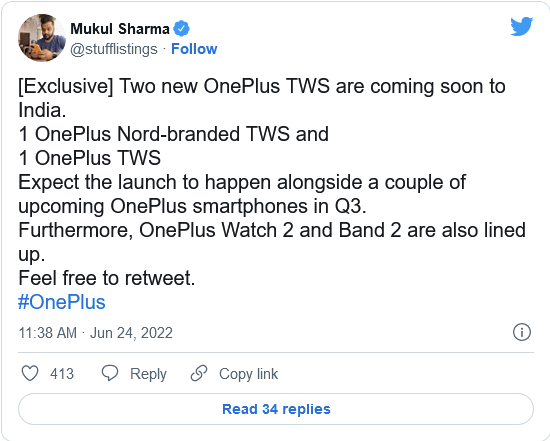

It is said that Yijia will soon update the product line of TWS earplugs, smart watches and bracelets

Detailed explanation of cache (for the postgraduate entrance examination of XD)

Pit entry machine learning: I. Introduction

Once beego failed to find bee after passing the go get command Exe's pit

3 years of testing experience. I don't even understand what I really need on my resume. I need 20K to open my mouth?

随机推荐

使用ShaderGraph制作边缘融合粒子Shader的启示

都2022年了,你还不了解什么是性能测试?

Once beego failed to find bee after passing the go get command Exe's pit

ACM. HJ70 矩阵乘法计算量估算 ●●

对进程内存的实践和思考

Network planning | [four network layers] knowledge points and examples

Can automate - 10k, can automate - 20K, do you understand automated testing?

Call system function security scheme

jwt

ProcessOn制作ER过程(自定义)

高速缓存Cache详解(西电考研向)

AOSP ~ default attribute value

Summary of knowledge points of computer level III (database) test preparation topics

After reciting the eight part essay, I won the hemp in June

QT package the EXE file to solve the problem that "the program input point \u zdapvj cannot be located in the dynamic link library qt5cored.dll"

微信小程序获取扫描二维码后携带的参数

折叠屏将成国产手机分食苹果市场的重要武器

Pit entry machine learning: I. Introduction

李宏毅《机器学习》丨6. Convolutional Neural Network(卷积神经网络)

Talking about the advantages of flying book in development work | community essay solicitation