
Yandex robots.txt

2022-07-28 05:23:00 【oHuangBing】

robots.txt is a text file that contains site indexing parameters for search engine robots.

Yandex supports the Robots Exclusion Protocol with extended capabilities.

When crawling a site, the Yandex robot loads the robots.txt file. If the most recent request for this file shows that a page or section of the site is prohibited, the robot will not index it.

Yandex robots.txt file requirements

Yandex robots process robots.txt only if the following requirements are met:

The file size does not exceed 500 KB.

It is a TXT file named "robots", i.e. robots.txt.

The file is located in the root directory of the website.

The file is available to the robot: the server hosting the site responds with the HTTP status code 200 OK. You can check the server response.

If the file does not meet these requirements, the site is considered open for indexing, meaning the Yandex search engine can access its content without restriction.
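The requirements above can be expressed as a small check. The sketch below is a hypothetical helper (`usable_robots_txt` is not a Yandex API). It takes the URL that was requested, the HTTP status, and the raw body, and returns the file text only when all the requirements hold. A result of `None` means the site is treated as open for indexing. It assumes that 500 KB means 500 × 1024 bytes and that the body is UTF-8.

```python
from typing import Optional
from urllib.parse import urlsplit

# Assumption: "500 KB" is interpreted as 500 * 1024 bytes.
MAX_ROBOTS_SIZE = 500 * 1024


def usable_robots_txt(url: str, status: int, body: bytes) -> Optional[str]:
    """Return the robots.txt text if it meets the requirements, else None.

    None means the site should be treated as fully open for indexing.
    """
    # The file must be named robots.txt and sit in the site root.
    if urlsplit(url).path != "/robots.txt":
        return None
    # The server must answer with HTTP 200 OK.
    if status != 200:
        return None
    # The file must not exceed the size limit.
    if len(body) > MAX_ROBOTS_SIZE:
        return None
    # Assumption: the file is UTF-8; undecodable bytes are replaced.
    return body.decode("utf-8", errors="replace")
```

A caller would fall back to "everything allowed" whenever the helper returns `None`, for example on a 404 response or an oversized file.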

Yandex supports redirecting the robots.txt file to a file located on another site. In this case, the directives in the target file are taken into account. This kind of redirect can be useful when moving a site.

Rules Yandex follows when reading robots.txt

In the robots.txt file, the robot looks for records that begin with User-agent: and checks them for the string Yandex (case-insensitive) or *. If a User-agent: Yandex line is found, the User-agent: * line is ignored. If neither User-agent: Yandex nor User-agent: * is found, the robot is considered to have unrestricted access.
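A minimal sketch of the record-selection rule follows. It assumes the file has already been split into a mapping from each `User-agent` value to its directive lines; `select_yandex_group` is a hypothetical name. The function compares agent names case-insensitively, prefers the `Yandex` record, falls back to `*`, and returns `None` for unrestricted access. It deliberately ignores records for individual Yandex robots such as `YandexBot`.

```python
from typing import Dict, List, Optional


def select_yandex_group(groups: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return the directive lines a Yandex robot follows, or None for unrestricted access.

    `groups` maps each User-agent value (as written in robots.txt) to the
    directive lines listed under it.
    """
    # User-agent values are compared case-insensitively.
    by_agent = {agent.strip().lower(): rules for agent, rules in groups.items()}
    if "yandex" in by_agent:  # a Yandex record makes the * record irrelevant
        return by_agent["yandex"]
    if "*" in by_agent:  # otherwise fall back to the generic record
        return by_agent["*"]
    return None  # neither record found: unrestricted access
```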

You can write separate directives for individual Yandex robots.

For example:

User-agent: YandexBot # will be used only by the main indexing robot

Disallow: /*id=

User-agent: Yandex # will be used by all Yandex robots

Disallow: /*sid= # except for the main indexing robot

User-agent: * # will not be used by Yandex robots

Disallow: /cgi-bin

According to the standard, you should insert a blank line before every User-agent directive. The # character marks a comment: everything after it, up to the first line break, is ignored.
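The comment and grouping rules can be sketched as a tiny parser. This is only an illustration, not Yandex's own parser. `parse_robots` is a hypothetical helper that strips everything after `#`, skips blank lines, and files each directive under the most recent `User-agent` line. As a simplification, it does not merge several consecutive `User-agent` lines into one shared record.

```python
from typing import Dict, List, Optional, Tuple


def parse_robots(text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Group (directive, value) pairs under the User-agent line that precedes them."""
    groups: Dict[str, List[Tuple[str, str]]] = {}
    current: Optional[str] = None
    for raw in text.splitlines():
        # '#' starts a comment that runs to the end of the line.
        line = raw.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue  # skip blank lines and anything that is not a directive
        field, value = line.split(":", 1)
        field, value = field.strip(), value.strip()
        if field.lower() == "user-agent":
            current = value
            groups.setdefault(current, [])
        elif current is not None:
            groups[current].append((field, value))
    return groups
```

Run on the example above, it yields three groups: `YandexBot` with `Disallow /*id=`, `Yandex` with `Disallow /*sid=`, and `*` with `Disallow /cgi-bin`.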

robots.txt Disallow and Allow directives

Use the Disallow directive to prohibit indexing of site sections or individual pages, for example:

Pages containing confidential data .

Pages with site search results .

Website traffic statistics .

Duplicate pages.

Various logs.

Database service pages.

Here are examples of the Disallow directive:

User-agent: Yandex

Disallow: / # prohibits crawling the entire site

User-agent: Yandex

Disallow: /catalogue # prohibits crawling pages that start with /catalogue

User-agent: Yandex

Disallow: /page? # prohibits crawling /page URLs that contain parameters
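Each Disallow value is a URL prefix in which `*` stands for any sequence of characters, as in `/*id=` earlier. The sketch below uses a hypothetical helper, `rule_matches`, which turns such a pattern into a regular expression anchored at the start of the path. Support for a trailing `$` that pins the match to the end of the URL is an assumption added here; the examples in this post do not use it.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt path pattern matches the URL path (including query)."""
    # Assumption: a trailing '$' means the URL must end exactly there.
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything (so '?' and '.' are literal), then turn '*' into ".*".
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # re.match anchors at the start, so this is a prefix match.
    return re.match(regex, path) is not None
```

With this helper, `/page?` matches `/page?id=5` but not `/page`, because `?` is treated as a literal character.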

robots.txt Allow directive

This directive allows indexing of site sections or individual pages. Examples:

User-agent: Yandex

Allow: /cgi-bin

Disallow: /

# prohibits indexing any pages except those that start with '/cgi-bin'

User-agent: Yandex

Allow: /file.xml

# allows indexing of the file.xml file

Combining directives in robots.txt

The Allow and Disallow directives from the corresponding User-agent block are sorted by URL prefix length, from shortest to longest, and applied in that order. If several directives match a particular page, the robot uses the last one in the sorted list. As a result, the order in which directives are written in robots.txt does not affect how the robot applies them.

# Original robots.txt:

User-agent: Yandex

Allow: /

Allow: /catalog/auto

Disallow: /catalog

# Sorted robots.txt (the order in which the robot applies the directives):

User-agent: Yandex

Allow: /

Disallow: /catalog

Allow: /catalog/auto

# prohibits indexing pages that start with '/catalog',

# but allows indexing pages that start with '/catalog/auto'
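The sorting rule can be checked with a short sketch. `is_allowed` is a hypothetical helper. It sorts the rules of one User-agent block by pattern length, walks them from shortest to longest, and lets the last matching rule decide. Pattern matching here handles `*` only. The tie-break (on equal length, Allow wins) is an assumption that this post does not state.

```python
import re
from typing import List, Tuple

Rule = Tuple[str, str]  # ("Allow" or "Disallow", path pattern)


def _matches(pattern: str, path: str) -> bool:
    # Prefix match in which '*' stands for any sequence of characters.
    regex = re.escape(pattern).replace(r"\*", ".*")
    return re.match(regex, path) is not None


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Apply one block's Allow/Disallow rules by sorted prefix length."""
    # Shortest prefix first; on a length tie Disallow sorts before Allow,
    # so Allow is applied last (assumed tie-break).
    ordered = sorted(rules, key=lambda r: (len(r[1]), r[0] == "Allow"))
    verdict = True  # no matching rule: the page may be indexed
    for directive, pattern in ordered:
        if _matches(pattern, path):
            verdict = directive == "Allow"  # the last match in sorted order wins
    return verdict
```

Passing the rules in their original order or in sorted order gives the same answers, which is the point of the sorting step. `/catalog/moto` is blocked, while `/catalog/auto/bmw` stays allowed.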

Summary

These are the main rules for writing robots.txt for the Yandex crawler. With these directives you can allow or prohibit the Yandex crawler from crawling specific pages.

Reference material

边栏推荐

- C language: realize the simple function of address book through structure

- FreeRTOS个人笔记-任务通知

- C language characters and strings

- Table image extraction based on traditional intersection method and Tesseract OCR

- Struct模块到底有多实用?一个知识点立马学习

- 【ARXIV2205】EdgeViTs: Competing Light-weight CNNs on Mobile Devices with Vision Transformers

- Flink mind map

- FreeRTOS personal notes - task notification

- Testcafe's positioning, operation of page elements, and verification of execution results

- Have you learned the common SQL interview questions on the short video platform?

猜你喜欢

Paper reading notes -- crop yield prediction using deep neural networks

在ruoyi生成的对应数据库的代码 之后我该怎么做才能做出下边图片的样子

Message forwarding mechanism -- save your program from crashing

Data security is gradually implemented, and we must pay close attention to the source of leakage

The solution after the samesite by default cookies of Chrome browser 91 version are removed, and the solution that cross domain post requests in chrome cannot carry cookies

flink思维导图

基于MPLS构建虚拟专网的配置实验

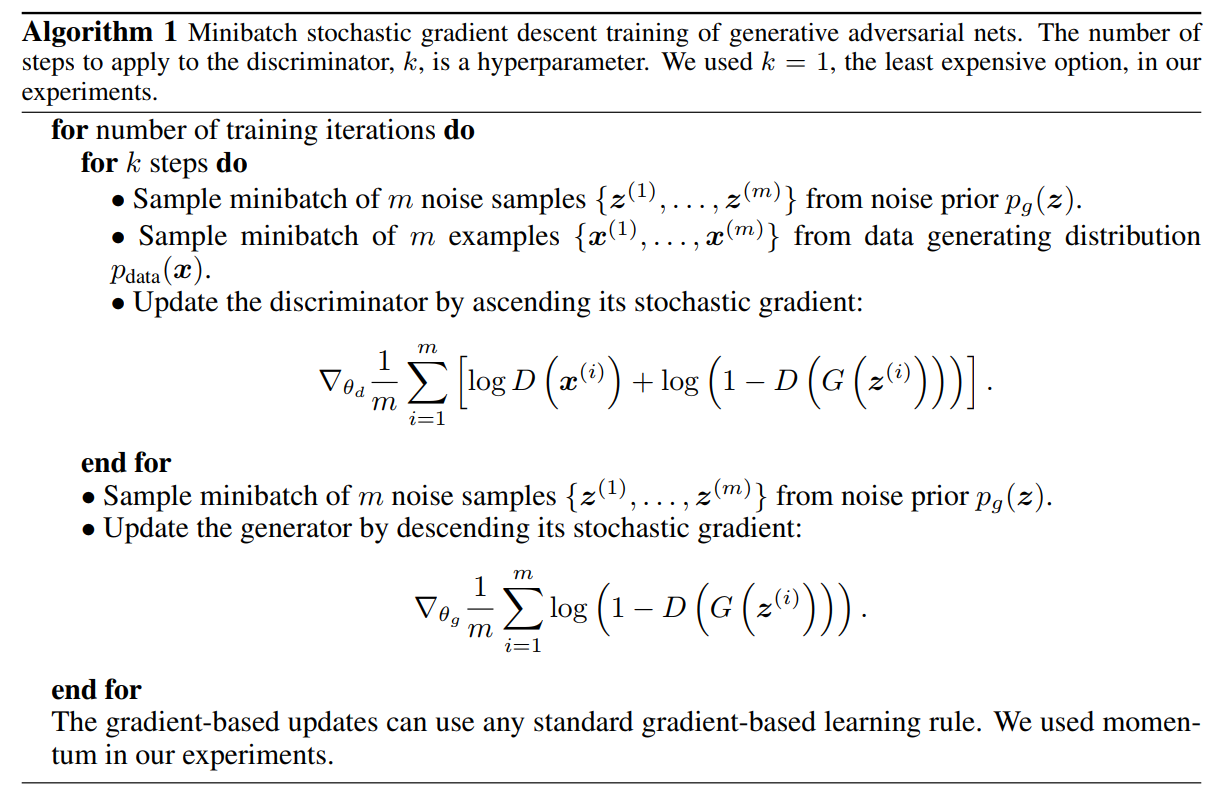

Gan: generative advantageous nets -- paper analysis and the mathematical concepts behind it

The most detailed installation of windows10 virtual machine, install virtual machine by hand, and solve the problem that the Hyper-V option cannot be found in the home version window

Check box error

随机推荐

I've been in an outsourcing company for two years, and I feel like I'm going to die

SMD component size metric English system corresponding description

在外包公司两年了,感觉快要废了

MySQL(5)

Flink mind map

HDU 3592 World Exhibition (differential constraint)

Array or object, date operation

How should programmers keep warm when winter is coming

HashSet add

MySQL(5)

Interpretation of afnetworking4.0 request principle

Professor dongjunyu made a report on the academic activities of "Tongxin sticks to the study of war and epidemic"

C language: realize the simple function of address book through structure

Database date types are all 0

New modularity in ES6

Using RAC to realize the sending logic of verification code

基于MPLS构建虚拟专网的配置实验

Implementation of simple upload function in PHP development

在ruoyi生成的对应数据库的代码 之后我该怎么做才能做出下边图片的样子

MySQL date and time function, varchar and date are mutually converted