MR-WordCount

2022-06-28 05:38:00 【小山丘】

pom.xml

<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-core</artifactId>
        <version>3.2.2</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <!-- Main class entry point -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-jar-plugin</artifactId>
            <version>2.4</version>
            <configuration>
                <archive>
                    <manifest>
                        <addClasspath>true</addClasspath>
                        <classpathPrefix>lib/</classpathPrefix>
                        <mainClass>com.flink.mr.demo.wordcount.NeoWordCount</mainClass>
                    </manifest>
                </archive>
            </configuration>
        </plugin>
        <!-- JDK version -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.0</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>UTF-8</encoding>
            </configuration>
        </plugin>
    </plugins>
</build>

NeoWordCount.java

MapReduce programming example

package com.flink.mr.demo.wordcount;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import java.io.IOException;
import java.net.URI;

public class NeoWordCount {

    // Mapper: emits (word, 1) for every space-separated token of an input line
    public static class NeoWordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        private final LongWritable ONE = new LongWritable(1);
        // Reused across calls to avoid allocating a new Text per token
        private final Text outputK = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            for (String s : value.toString().split(" ")) {
                outputK.set(s);
                context.write(outputK, ONE);
            }
        }
    }

    // Reducer: sums the counts for each word; also registered as the combiner in main()
    public static class NeoWordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        private final LongWritable outputV = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable value : values) {
                sum += value.get();
            }
            outputV.set(sum);
            context.write(key, outputV);
        }
    }

    public static void main(String[] args) throws Exception {
        /*GenericOptionsParser parser = new GenericOptionsParser(args);
        Job job = Job.getInstance(parser.getConfiguration());
        args = parser.getRemainingArgs();*/

        // Submit the job as the user "bigdata"
        System.setProperty("HADOOP_USER_NAME", "bigdata");

        Configuration config = new Configuration();
        config.set("fs.defaultFS", "hdfs://10.1.1.1:9000");
        config.set("mapreduce.framework.name", "yarn");
        config.set("yarn.resourcemanager.hostname", "10.1.1.1");
        // Cross-platform submission parameter
        config.set("mapreduce.app-submission.cross-platform", "true");

        Job job = Job.getInstance(config);
        // Locally built jar that is shipped to the cluster
        job.setJar("D:\\bigdata\\mapreduces\\flink-mr.jar");

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        job.setMapperClass(NeoWordCountMapper.class);
        job.setReducerClass(NeoWordCountReducer.class);
        job.setCombinerClass(NeoWordCountReducer.class);

        Path inputPath = new Path("/user/bigdata/demo/001/input");
        FileInputFormat.setInputPaths(job, inputPath);

        Path outputPath = new Path("/user/bigdata/demo/001/output");
        // Delete the output directory if it already exists; the job refuses to start otherwise
        FileSystem fs = FileSystem.get(new URI("hdfs://10.1.1.1:9000"), config, "bigdata");
        if (fs.exists(outputPath)) {
            fs.delete(outputPath, true);
        }
        FileOutputFormat.setOutputPath(job, outputPath);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
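
To see what the job computes without a cluster, the map, combine and reduce steps can be imitated in plain Java. This is only a rough sketch: `LocalWordCountSketch` and its methods are hypothetical names that are not part of the original post or of Hadoop, and the in-memory lists stand in for input splits. It tokenizes with `split(" ")` just like `NeoWordCountMapper`, and it reuses one summing step as both combiner and reducer, which is safe here because addition gives the same total whether or not partial sums are formed first.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper class for illustration only; it does not use Hadoop.
public class LocalWordCountSketch {

    // Map step: one (token, 1) pair per space-separated token, as in NeoWordCountMapper.
    public static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String s : line.split(" ")) {
            pairs.add(new SimpleEntry<>(s, 1L));
        }
        return pairs;
    }

    // Sum step: groups pairs by key and adds up their values, as in NeoWordCountReducer.
    // A TreeMap keeps the keys sorted, loosely imitating the sorted reducer input.
    public static Map<String, Long> sum(List<Map.Entry<String, Long>> pairs) {
        Map<String, Long> totals = new TreeMap<>();
        for (Map.Entry<String, Long> p : pairs) {
            totals.merge(p.getKey(), p.getValue(), Long::sum);
        }
        return totals;
    }

    // Runs map + combine on each split independently, then reduces the partial sums.
    public static Map<String, Long> wordCount(List<List<String>> splits) {
        List<Map.Entry<String, Long>> partials = new ArrayList<>();
        for (List<String> split : splits) {
            List<Map.Entry<String, Long>> mapped = new ArrayList<>();
            for (String line : split) {
                mapped.addAll(map(line));
            }
            partials.addAll(sum(mapped).entrySet()); // combiner output for this split
        }
        return sum(partials); // reducer output
    }

    public static void main(String[] args) {
        // Two made-up "splits" of input lines
        List<List<String>> splits = Arrays.asList(
                Arrays.asList("hello world", "hello hadoop"),
                Arrays.asList("hello yarn"));
        wordCount(splits).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

For the sample input, `main` prints `hadoop 1`, `hello 3`, `world 1` and `yarn 1`, one tab-separated pair per line. Note that `split(" ")` yields an empty token for consecutive spaces and for empty lines, so such input would also be counted under an empty "word".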

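Environment requirements

1. Build the jar locally so that it exists at

D:\bigdata\mapreduces\flink-mr.jar

2. Hadoop environment

10.1.1.1

3. Prepare the input files

hdfs://10.1.1.1:9000/user/bigdata/demo/001/input

Upload a few files there for analysis.

4. Run this example to submit the MR job to the cluster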
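
Once the job finishes, the results are in the output directory set in `main` (`/user/bigdata/demo/001/output`). `TextOutputFormat` writes each record as the key, a tab, then the value, and reducer output files are normally named like `part-r-00000`. The following is a minimal sketch for turning such lines back into a map. It assumes the lines have already been fetched from HDFS (for example with `hdfs dfs -cat`), and `WordCountOutputSketch` is a hypothetical helper name, not something from the original post.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class for illustration only; it does not talk to HDFS.
public class WordCountOutputSketch {

    // Parses lines of the form word<TAB>count, keeping the order in which they appear.
    public static Map<String, Long> parse(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            // Use the last tab: the mapper splits on spaces only, so a word may itself contain a tab
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip lines that are not key<TAB>value
            }
            counts.put(line.substring(0, tab), Long.parseLong(line.substring(tab + 1)));
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample lines in the key<TAB>value layout
        List<String> lines = Arrays.asList("hadoop\t1", "hello\t3", "world\t1");
        parse(lines).forEach((word, count) -> System.out.println(word + " -> " + count));
    }
}
```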

边栏推荐

- 阴阳师页面

- Concurrent wait/notify description

- Detailed usage configuration of the shutter textbutton, overview of the shutter buttonstyle style and Practice

- 線條動畫

- What is the difference between AC and DC?

- Gee learning notes 3- export table data

- Online yaml to JSON tool

- Jenkins继续集成2

- UICollectionViewDiffableDataSource及NSDiffableDataSourceSnapshot使用介绍

- 上海域格ASR CAT1 4g模块2路保活低功耗4G应用

猜你喜欢

![[JVM] - Division de la mémoire en JVM](/img/d8/29a5dc0ff61e35d73f48effb858770.png)

[JVM] - Division de la mémoire en JVM

![A full set of excellent SEO tutorials worth 300 yuan [159 lessons]](/img/d7/7e522143b1e6b3acf14a0894f50d26.jpg)

A full set of excellent SEO tutorials worth 300 yuan [159 lessons]

Detailed usage configuration of the shutter textbutton, overview of the shutter buttonstyle style and Practice

sklearn 特征工程(总结)

Gee learning notes 3- export table data

Blog login box

上海域格ASR CAT1 4g模块2路保活低功耗4G应用

Oracle 常用基础函数

联想混合云Lenovo xCloud,新企业IT服务门户

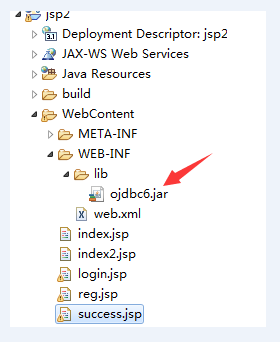

jsp连接oracle实现登录注册(简单)

随机推荐

如何做好水库大坝安全监测工作

【Linux】——使用xshell在Linux上安装MySQL及实现Webapp的部署

Typescript interface

函数栈帧的创建和销毁

jq图片放大器

1404. 将二进制表示减到1的步骤数

学术搜索相关论文

Prove that there are infinite primes / primes

Typescript base type

8VC Venture Cup 2017 - Elimination Round D. PolandBall and Polygon

Jenkins持续集成1

JSP connects with Oracle to realize login and registration (simple)

5G网络整体架构

独立站卖家都在用的五大电子邮件营销技巧,你知道吗?

Interpretation of cloud native microservice technology trend

What does mysql---where 1=1 mean

Cryptography notes

JSP connecting Oracle to realize login and registration

联想混合云Lenovo xCloud,新企业IT服务门户

博客登录框