
MESI cache coherence in the CPU: why does the CPU need a cache?

2022-07-04 16:22:00 【zxhtom】

「This is day 4 of my participation in the 2022 First Writing Challenge. See the event details: 2022 First Writing Challenge」

Preface

- The lock series has already covered 【How Java objects are laid out in memory】, 【What locks Java has】 and 【synchronized and volatile】. While analyzing how volatile deals with instruction reordering, I came across an article about CPU cache coherence.

- volatile prevents instruction reordering by means of memory barriers. Its other property, memory visibility, relies on the CPU's MESI protocol.

- When thread A flushes modified data back to main memory, the CPU at the same time notifies the other CPUs (and so the threads running on them) that their cached copies of that data are invalid and must be fetched again.
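A minimal Java sketch of the visibility guarantee described above: one thread spins on a volatile flag while another thread clears it. The class and method names (`VolatileStopFlag`, `stopWorker`) are hypothetical. Because `running` is volatile, the Java Memory Model guarantees the worker eventually sees the write; without volatile, the JIT compiler may hoist the read out of the loop and the worker may never stop. The cache-level mechanism underneath (MESI invalidations) is not visible from Java code.

```java
import java.util.concurrent.TimeUnit;

public class VolatileStopFlag {
    // volatile: a write by one thread becomes visible to later reads of this field by other threads
    private static volatile boolean running;

    // Starts a worker that spins while running is true, clears the flag from the
    // calling thread, and reports whether the worker stopped within timeoutMillis.
    public static boolean stopWorker(long timeoutMillis) {
        running = true;                        // set before start(), so the worker first sees true
        Thread worker = new Thread(() -> {
            long spins = 0;
            while (running) {                  // volatile read on every iteration
                spins++;
            }
        });
        worker.setDaemon(true);                // never keep the JVM alive if something goes wrong
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(50);   // give the worker time to start spinning
            running = false;                   // volatile write: the worker must observe it
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            running = false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + stopWorker(1000));
    }
}
```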

What is MESI

- MESI is an acronym for four states: Modified, Exclusive, Shared and Invalid. Each one describes the state of a copy of data held in a cache. Let's use MESI to look again at how volatile achieves memory visibility.

- The CPU does not cache exactly the bytes it needs; it caches whole blocks (cache lines), and the smallest unit is typically 64 bytes. So when we modify one value, the other values near it in the same block are invalidated too, which in turn forces other threads to synchronize that data even if they never touched the modified value. This is false sharing. MySQL is designed along the same lines: to make queries efficient, InnoDB also divides data into pages as its smallest unit, with a default page size of 16KB.
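The block arithmetic behind false sharing fits in a few lines of Java. This sketch assumes a 64-byte line (typical for current x86-64 and many ARM cores, but hardware-specific) and uses made-up byte addresses, since Java code cannot normally see real addresses. `CacheLineMath` and its methods are hypothetical names.

```java
public class CacheLineMath {
    // Assumed cache-line size in bytes; the real value is hardware-specific
    static final int LINE_SIZE = 64;

    // Index of the cache line that contains the given byte address
    public static long lineIndex(long address) {
        return address / LINE_SIZE;
    }

    // Two addresses fall in the same cache line if they have the same line index
    public static boolean sameLine(long a, long b) {
        return lineIndex(a) == lineIndex(b);
    }

    public static void main(String[] args) {
        long base = 0x1000;                 // hypothetical 64-byte-aligned address
        long a = base;                      // a long at offset 0
        long b = base + 8;                  // the neighbouring long at offset 8
        long c = base + 64;                 // a long one full line further on
        System.out.println(sameLine(a, b)); // true: writing a also invalidates the line holding b
        System.out.println(sameLine(a, c)); // false
    }
}
```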

Why the CPU needs a cache

- Data inside a computer is also stored in blocks, so we cannot access it at arbitrary granularity; accessing it byte by byte at random would greatly increase the number of memory transactions. The CPU is very fast, but memory cannot keep up with it, so transferring data in whole blocks is the most efficient approach.

- Reading bytes in network programming works the same way: we normally read 1024 bytes at a time, which reduces the number of network interactions.
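A rough Java sketch of why block-wise access pays off: summing the same `int` matrix row by row walks memory sequentially, so each 64-byte cache line fetched serves 16 consecutive ints, while summing it column by column jumps to a different row array on almost every access. The class name and the 2048 × 2048 size are arbitrary choices. On typical hardware the row-major loop is noticeably faster, but the timings depend on the machine and the JIT, and this is not a rigorous benchmark (a harness such as JMH would be needed for that).

```java
public class TraversalOrder {
    // Sums the matrix row by row: consecutive accesses hit consecutive ints of one row array
    public static long sumRowMajor(int[][] m) {
        long sum = 0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    // Sums the matrix column by column: consecutive accesses land in different row arrays.
    // Assumes a rectangular matrix (all rows the same length).
    public static long sumColumnMajor(int[][] m) {
        long sum = 0;
        int cols = m.length == 0 ? 0 : m[0].length;
        for (int j = 0; j < cols; j++) {
            for (int i = 0; i < m.length; i++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        int n = 2048;
        int[][] m = new int[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                m[i][j] = i + j;
            }
        }
        long t0 = System.nanoTime();
        long a = sumRowMajor(m);
        long t1 = System.nanoTime();
        long b = sumColumnMajor(m);
        long t2 = System.nanoTime();
        System.out.printf("row-major:    sum=%d, %d ms%n", a, (t1 - t0) / 1_000_000);
        System.out.printf("column-major: sum=%d, %d ms%n", b, (t2 - t1) / 1_000_000);
    }
}
```

Both loops compute the same sum; only the memory access pattern, and therefore the number of cache lines fetched, differs.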

If the CPU has MESI, why does Java still need volatile?

- First, Java (the compiler and the JVM) may reorder instructions to improve execution efficiency, and preventing that reordering is one of volatile's properties.

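- The CPU's MESI protocol only guarantees coherence for a single memory location; it does not fix the order in which writes to different locations become visible to other CPUs. volatile, however, gives visibility and ordering guarantees for operations across all CPUs, so volatile is still necessary.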
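A minimal sketch of the ordering point: the writer stores a plain field `data` and then the volatile flag `ready`. Under the Java Memory Model, a reader that sees `ready == true` is guaranteed to also see `data == 42`, because the volatile write happens-before the read that observes it. MESI alone only keeps each of the two locations coherent; without volatile, the compiler or CPU could make the two writes visible in the other order, or the reader could spin forever on a stale `ready`. The class and method names are hypothetical.

```java
public class SafePublication {
    private static int data;                 // plain field, published through ready
    private static volatile boolean ready;   // volatile guard

    // Runs one writer (the calling thread) and one reader; returns the value of
    // data that the reader saw after observing ready == true.
    public static int publishAndRead() {
        data = 0;
        ready = false;                       // reset before the reader starts
        final int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {
                // spin until the volatile write becomes visible
            }
            seen[0] = data;                  // guaranteed to read 42 by happens-before
        });
        reader.start();
        data = 42;                           // 1) plain write
        ready = true;                        // 2) volatile write publishes the plain write
        try {
            reader.join();                   // join makes seen[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(publishAndRead()); // prints 42
    }
}
```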

In a typical system, several caches (in a multicore system, each core has its own cache) share a bus to main memory. Each cache is attached to a CPU that issues read and write requests, and the purpose of the caches is to reduce the number of times the CPUs read and write the shared main memory.

The caches follow these rules:

- A cache can satisfy a CPU read request from any state except Invalid. An Invalid line must first be read from main memory (entering the S or E state) to satisfy the read.

- A write can only be performed if the cache line is in the M or E state. If the line is in the S state, the copies of that line in all other caches must first be set to Invalid (different CPUs are not allowed to modify the same cache line at the same time, even at different positions within the line). This is usually done by a broadcast, for example Request For Ownership (RFO).

- A cache may discard a non-M line at any time, turning it Invalid, whereas a line in the M state must first be written back to main memory.

- A cache holding a line in the M state must snoop every attempt by other caches to read the corresponding main-memory location; such a read is delayed until this cache writes the line back to main memory and changes its state to S.

- A cache holding a line in the S state must listen for invalidate or request-for-ownership broadcasts from other caches, and invalidate the line (Invalid) on a match.

- A cache holding a line in the E state must also snoop other caches' reads of that main-memory location; once such a read occurs, the line must move to the S state.

- The M and E states are always precise: they match the true ownership of the cache line. The S state may be imprecise: if another cache discards its S copy, this cache may in fact be the only one holding the line, yet it is not promoted to E. Other caches do not broadcast when they discard a line, and because a cache does not track how many copies exist, it could not tell whether it has exclusive access even if such notices were sent.

- In this sense, the E state is a speculative optimization: if a CPU wants to modify a line in the S state, a bus transaction is needed to turn all other copies Invalid, whereas modifying a line in the E state requires no bus transaction.
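To make the rules above concrete, here is a toy Java model of one cache line shared by several CPUs. It is a simplification written for illustration, not a description of any real processor: it tracks only the MESI state in each cache, counts bus transactions (memory reads and RFO broadcasts) and write-backs, and ignores data values, timing and cache-to-cache transfers. All names are hypothetical.

```java
import java.util.Arrays;

public class MesiSimulator {
    public enum State { MODIFIED, EXCLUSIVE, SHARED, INVALID }

    private final State[] states;     // state of the tracked line in each CPU's cache
    private int busTransactions = 0;  // memory reads on a miss + RFO broadcasts
    private int writeBacks = 0;       // M lines written back to main memory

    public MesiSimulator(int cpus) {
        states = new State[cpus];
        Arrays.fill(states, State.INVALID);
    }

    public State state(int cpu) { return states[cpu]; }
    public int busTransactions() { return busTransactions; }
    public int writeBacks() { return writeBacks; }

    // Read: any state except Invalid is a hit; a miss fetches the line as E or S
    public void read(int cpu) {
        if (states[cpu] != State.INVALID) return;          // hit in M, E or S: no bus traffic
        busTransactions++;                                 // miss: read request on the bus
        boolean othersHaveCopy = false;
        for (int i = 0; i < states.length; i++) {
            if (i == cpu || states[i] == State.INVALID) continue;
            if (states[i] == State.MODIFIED) writeBacks++; // snooping M cache writes back first
            states[i] = State.SHARED;                      // M and E both drop to S
            othersHaveCopy = true;
        }
        states[cpu] = othersHaveCopy ? State.SHARED : State.EXCLUSIVE;
    }

    // Write: allowed directly only in M or E; from S or I an RFO invalidates all other copies
    public void write(int cpu) {
        State s = states[cpu];
        if (s == State.MODIFIED) return;
        if (s == State.EXCLUSIVE) {                        // silent upgrade, no bus transaction
            states[cpu] = State.MODIFIED;
            return;
        }
        busTransactions++;                                 // Request For Ownership broadcast
        for (int i = 0; i < states.length; i++) {
            if (i == cpu) continue;
            if (states[i] == State.MODIFIED) writeBacks++;
            states[i] = State.INVALID;
        }
        states[cpu] = State.MODIFIED;
    }

    // Eviction: a non-M line is simply dropped; an M line is written back first
    public void evict(int cpu) {
        if (states[cpu] == State.MODIFIED) writeBacks++;
        states[cpu] = State.INVALID;
    }

    public static void main(String[] args) {
        MesiSimulator sim = new MesiSimulator(2);
        sim.read(0);   // CPU0: I -> E (nobody else holds the line)
        sim.write(0);  // CPU0: E -> M, no bus transaction
        sim.read(1);   // CPU0 writes back, M -> S; CPU1: I -> S
        sim.write(1);  // RFO: CPU0 S -> I; CPU1 S -> M
        System.out.println(sim.state(0) + " " + sim.state(1)
                + " bus=" + sim.busTransactions() + " writeBacks=" + sim.writeBacks());
        // prints "INVALID MODIFIED bus=3 writeBacks=1"
    }
}
```

The `main` method walks through E, M, S and back to M: the write in the E state costs no bus transaction, while CPU 1's write from the S state needs an RFO that invalidates CPU 0's copy.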

Example

- As introduced above, the CPU caches data in units of 64 bytes. If two variables operated on by different Java threads sit in the same block, then when one thread modifies variable a, another thread working on variable b is also forced into data synchronization. Here is a piece of code provided by Ma Shibing; I ran it locally and the result is interesting.

```java
import lombok.Data;

// 7 padding longs before and 7 after p, intended to keep the p fields
// of two different Store objects out of the same 64-byte cache line.
// Lombok's @Data generates the setP(...) setter used below.
@Data
class Store {
    private volatile long p1, p2, p3, p4, p5, p6, p7;
    private volatile long p;
    private volatile long p8, p9, p10, p11, p12, p13, p14;
}

public class StoreRW {
    public static Store[] arr = new Store[2];
    public static final long COUNT = 100_000_000L;

    static {
        arr[0] = new Store();
        arr[1] = new Store();
    }

    public static void main(String[] args) throws InterruptedException {
        // Each thread repeatedly writes the p field of its own Store object
        Thread t1 = new Thread(() -> {
            for (long i = 0; i < COUNT; i++) {
                arr[0].setP(i);
            }
        });
        Thread t2 = new Thread(() -> {
            for (long i = 0; i < COUNT; i++) {
                arr[1].setP(i);
            }
        });

        long start = System.currentTimeMillis();
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        long end = System.currentTimeMillis();

        // Elapsed time in milliseconds
        System.out.println(end - start);
    }
}
```

- The code is simple: two threads keep writing to two variables. Now remove the extra fields from the object, so that Store keeps only the single field p:

```java
@Data
class Store {
    private volatile long p;
}
```

- Running this version, the program settles at about 100 milliseconds. With the 14 unrelated long fields added, it settles at about 70 milliseconds. The absolute running time depends on the machine, but whatever the configuration, you should clearly see the difference between having the 14 variables and not having them.

- This comes down to the CPU's cache unit. With only one field, the two objects in the arr array are likely to sit in the same cache block, so when thread A writes its object, thread B is forced to synchronize. Adding the 14 variables ensures that the p fields of the two objects in arr never end up in the same block.

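- With the 14 extra variables, one Store holds 15 × 8 = 120 bytes of fields (plus the object header), and p still sits in the middle with 56 bytes of padding on each side. So however two Store objects are placed, their p fields are more than 64 bytes apart and cannot share a block. That is why the effect appears.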

- Some people find this kind of padding ugly, but it does improve performance. The JDK also provides an annotation for this purpose, @sun.misc.Contended. In my tests, though, the improvement did not seem as large as with the 14 padding variables. Note that for application classes the annotation only takes effect when the JVM is started with -XX:-RestrictContended.
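The padding argument can be checked with a little arithmetic. The sketch below assumes a 64-byte cache line, a 16-byte object header, that the JVM keeps the long fields in declaration order, and that arr[0] and arr[1] are allocated back to back; real layouts depend on the JVM and its flags (a tool such as JOL can print the actual layout). Under those assumptions it tries every 8-byte alignment of the first object and reports whether the two p fields could ever land in the same line. `PaddingCheck` and its methods are hypothetical.

```java
public class PaddingCheck {
    static final int LINE = 64;    // assumed cache-line size in bytes
    static final int HEADER = 16;  // assumed object-header size; varies with JVM flags

    // True if the 8-byte fields starting at addresses a and b touch a common cache line
    public static boolean mayShareLine(long a, long b) {
        long aFirst = a / LINE, aLast = (a + 7) / LINE;
        long bFirst = b / LINE, bLast = (b + 7) / LINE;
        return aFirst <= bLast && bFirst <= aLast;
    }

    // paddingLongs: number of long fields before p and after p (0 or 7 in the example).
    // Returns true if, for some alignment, the p fields of two consecutive objects share a line.
    public static boolean anyAlignmentShares(int paddingLongs) {
        int objectSize = HEADER + (2 * paddingLongs + 1) * 8; // header + all long fields
        int pOffset = HEADER + paddingLongs * 8;              // p comes after the leading padding
        for (long start = 0; start < LINE; start += 8) {      // every 8-byte alignment of arr[0]
            long p0 = start + pOffset;                        // arr[0].p
            long p1 = start + objectSize + pOffset;           // arr[1].p, object placed right after
            if (mayShareLine(p0, p1)) return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(anyAlignmentShares(0)); // true: unpadded p fields are only 24 bytes apart
        System.out.println(anyAlignmentShares(7)); // false: padded p fields are 136 bytes apart
    }
}
```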

Summary

- That's all for today. The main goal was to understand MESI.

Reference article

边栏推荐

- Salient map drawing based on OpenCV

- Qt---error: ‘QObject‘ is an ambiguous base of ‘MyView‘

- Audio and video technology development weekly | 252

- MySQL learning notes - data type (numeric type)

- Digital recognition system based on OpenCV

- Unity脚本API—Component组件

- 【读书会第十三期】视频文件的编码格式

- MySQL - MySQL adds self incrementing IDs to existing data tables

- Redis' optimistic lock and pessimistic lock for solving transaction conflicts

- Proxifier global agent software, which provides cross platform port forwarding and agent functions

猜你喜欢

数据湖治理:优势、挑战和入门

Unity动画Animation Day05

![[Previous line repeated 995 more times]RecursionError: maximum recursion depth exceeded](/img/c5/f933ad4a7bc903f15beede62c6d86f.jpg)

[Previous line repeated 995 more times]RecursionError: maximum recursion depth exceeded

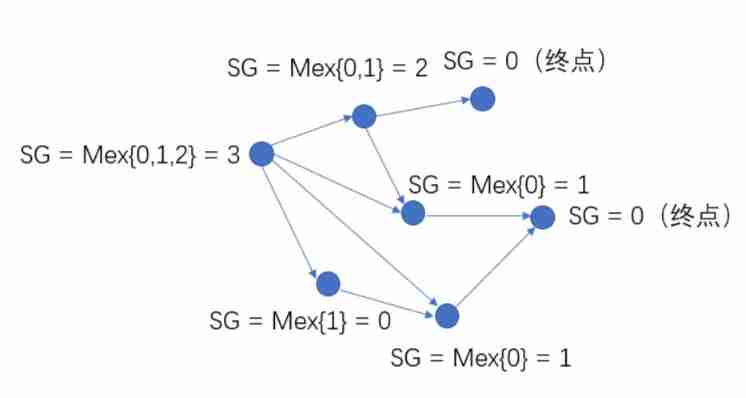

Game theory

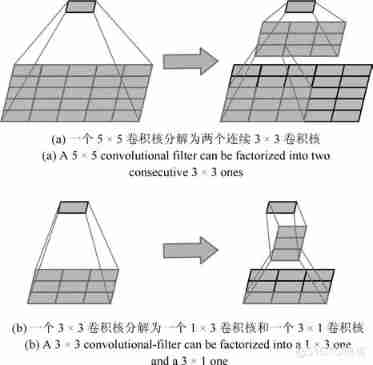

Overview of convolutional neural network structure optimization

![[North Asia data recovery] a database data recovery case where the partition where the database is located is unrecognized due to the RAID disk failure of HP DL380 server](/img/21/513042008483cf21fc66729ae1d41f.jpg)

[North Asia data recovery] a database data recovery case where the partition where the database is located is unrecognized due to the RAID disk failure of HP DL380 server

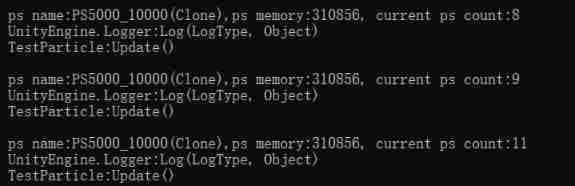

Will the memory of ParticleSystem be affected by maxparticles

AutoCAD - set color

Ten clothing stores have nine losses. A little change will make you buy every day

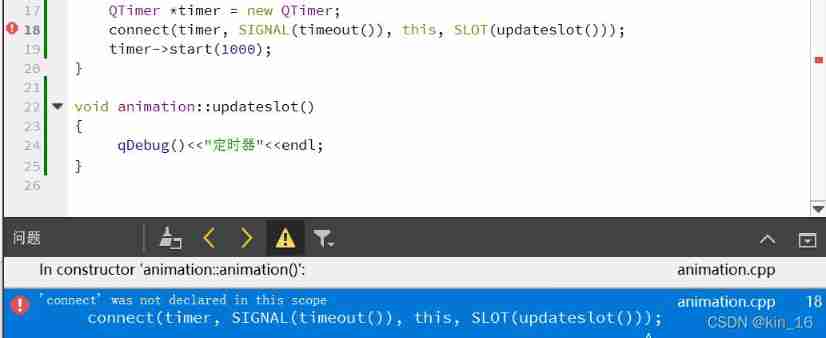

error: ‘connect‘ was not declared in this scope connect(timer, SIGNAL(timeout()), this, SLOT(up

随机推荐

JS tile data lookup leaf node

CMPSC311 Linear Device

Lombok使用引发的血案

An article learns variables in go language

[flask] ORM one to many relationship

Vscode prompt Please install clang or check configuration 'clang executable‘

odoo数据库主控密码采用什么加密算法?

Cut! 39 year old Ali P9, saved 150million

The new generation of domestic ORM framework sagacity sqltoy-5.1.25 release

Neuf tendances et priorités du DPI en 2022

Data Lake Governance: advantages, challenges and entry

Common knowledge of unity Editor Extension

Redis' optimistic lock and pessimistic lock for solving transaction conflicts

Unity animation day05

函数式接口,方法引用,Lambda实现的List集合排序小工具

Socks agent tools earthworm, ssoks

Unity脚本常用API Day03

Shell 编程基础

Understand the context in go language in an article

MySQL federated primary key_ MySQL creates a federated primary key [easy to understand]