当前位置:网站首页>Q&A:Transformer, Bert, ELMO, GPT, VIT

Q&A:Transformer, Bert, ELMO, GPT, VIT

2022-07-03 20:14:00 【Zhou Zhou, Zhou Dashuai】

The rainy weather in the South has become an extravagant hope to go out , Even if winter is long and boring , But the real spring will also come quietly .

Such a beginning is rare , Then why should we play with words today ? Because of a cold, it finally recovered ! So make a summary of the recent scientific research work , But many places dare not think about it , The water is too deep , I can't hold it , Just write the common question and answer

One 、Q&A:Transformer

1. Transformer Why use the long attention mechanism ?

You can think , This thing is , We're doing it self-attention When , Yes, it is q Find the relevant k. however “ relevant ” This matter , There are many different forms , There are many different definitions . So , Maybe we can't have only one q, There should be different q, Different q Responsible for different kinds of correlation . The more written expression is : Bulls guarantee transformer You can notice the information of different subspaces , Capture richer feature information .

2. Transformer Calculation attention Why do you choose dot multiplication instead of addition ?

K and Q Calculation dot-production To get a attention score matrix , Used to correct V Weighted .K and Q Used a different  ,

,  To calculate , It can be understood as a projection on different spaces . Because of this projection of different spaces , Increased expression ability , It's calculated in this way attention score matrix The generalization ability of is higher .

To calculate , It can be understood as a projection on different spaces . Because of this projection of different spaces , Increased expression ability , It's calculated in this way attention score matrix The generalization ability of is higher .

3. Why are you doing softmax You need to be right about attention Conduct scaled Well ?

transformer Medium attention Why? scaled? https://www.zhihu.com/question/339723385/answer/782509914

https://www.zhihu.com/question/339723385/answer/782509914

4. Transformer in encoder and decoder How they interact ?

Before that Why transformer?( 3、 ... and ) Yes Specifically mentioned encoder and decoder How to transmit messages between , Here is a brief statement :q From decoder,k Follow v come from encoder, This step is called Cross attention. Specific reference :Why transformer?( 3、 ... and ) https://blog.csdn.net/m0_57541899/article/details/122761220?spm=1001.2014.3001.5501

https://blog.csdn.net/m0_57541899/article/details/122761220?spm=1001.2014.3001.5501

5. Why? transformer Block use LayerNorm instead of BatchNorm Well ?

Two 、Q&A:Bert and its family

1. Bert What kind of thing are you doing ?

Bert The thing to do is : Lose one word sequence to Bert, And then every one word Will throw up one embedding It's over when I come out for you . As for Bert What does the architecture look like inside , As mentioned before Bert It's the same architecture as transformer encoder The architecture is the same . that transformer encoder What's in it ? Certainly self-attention layer. that self-attention layer What are you doing ? Namely input a sequence, then output a sequence. Now the whole Bert Architecture is input word sequence, And then it output Corresponding word Of embedding.

2. Bert This nerve How was it trained ?

The first training method is Mask language model (Mask Language Model,MLM); The second training method is The next sentence predicts (Next Sentence Prediction,NSP); More specifically, in the previous Bert and its family——Bert I have written :Bert and its family——Bert https://blog.csdn.net/m0_57541899/article/details/122789735?spm=1001.2014.3001.55013. Bert Of mask What are the advantages and disadvantages of this method ?

https://blog.csdn.net/m0_57541899/article/details/122789735?spm=1001.2014.3001.55013. Bert Of mask What are the advantages and disadvantages of this method ?

Bert Of mask The way : In the choice mask Of 15% Among the words ,80% Under the circumstances mask Drop the word ,10% In this case, replace... With an arbitrary word , The remaining 10% In this case, keep the original vocabulary unchanged . advantage :(1) Be randomly selected mask Of 15% Among the words , With 10% The probability of using any word substitution to predict the correct word , Equivalent to text error correction task , by Bert The model endows certain text error correction ability ;(2) Be randomly selected mask Of 15% Among the words , With 10% The probability of keeping the original vocabulary unchanged , Relieved fine-tune The problem of input mismatch between time and pre training ( During the pre training, there are mask, and fine-tune When the input is complete sentences , That is, the input does not match ). shortcoming : For words composed of two or more consecutive words , Random mask Split the correlation between consecutive words , Make it difficult for the model to learn the semantic information of words .

4. The model has , How to use it Bert Well ?

An intuitive idea is to Bert Of model Train with your next task , How about that Bert With the next downstream Our tasks are combined ? stay Bert and its family——Bert Four examples are given for reference :Bert and its family——Bert https://blog.csdn.net/m0_57541899/article/details/122789735?spm=1001.2014.3001.55015. Bert What is the loss function corresponding to the two pre training tasks of ?

https://blog.csdn.net/m0_57541899/article/details/122789735?spm=1001.2014.3001.55015. Bert What is the loss function corresponding to the two pre training tasks of ?

Bert The loss function consists of two parts , The first part is from Mask language model (Mask Language Model,MLM), The other part comes from The next sentence predicts (Next Sentence Prediction,NSP). Through the joint learning of these two tasks , You can make Bert Learned embedding both token Level information , It also contains sentence level semantic information . The specific loss function is : . What does the parameter mean , Check it out

. What does the parameter mean , Check it out

6. ELMO What kind of thing are you doing ?

ELMO The thing to do is :

Bert and its family——ELMO https://blog.csdn.net/m0_57541899/article/details/122775529?spm=1001.2014.3001.55017. GPT What kind of thing are you doing ?

https://blog.csdn.net/m0_57541899/article/details/122775529?spm=1001.2014.3001.55017. GPT What kind of thing are you doing ?

You may have guessed that I'm going to give another link, right , Yes , Guess what

3、 ... and 、Q&A:VIT

About vision transformer There are too many excellent blog, So here are just a few common questions

1. layer normalization & batch normalization The difference between ?

Layer normalization Do more than BN It's simpler : Enter a vector , Output another vector , Don't consider batch, It's for the same feature The difference in it dimension Calculation mean Follow std, and BN Is different from feature The same inside dimension Calculation mean Follow std

2. Why CLS token Well ?

By analogy Bert:Bert Insert a before the text CLS Symbol , The output vector corresponding to the symbol is used as the semantic representation of the whole article for text classification , It can be understood as : And other words already in the text / Word comparison , This symbol without obvious semantic information will be more “ fair ” Integrate the words in the text / Semantic information of words

3. Multi-head Attention The essence of

Sometimes we can't have only one q, There should be different q, Responsible for different kinds of correlation , Its essence is : Under the condition that the total number of parameters remains unchanged , Will be the same q,k,v Map to the original high latitude subspace attention Calculation , let me put it another way , Is to find the relationship between sequences from different angles , And at the end concat Integrate the association relationships captured in different subspaces

4. VIT Principle analysis

The theoretical analysis :

Code implementation :

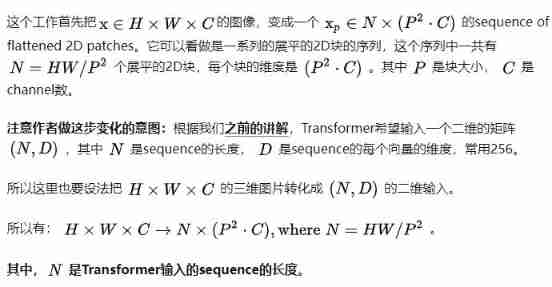

For standard Transformer modular , The required input is token( vector ) Sequence , Two dimensional matrix [num_token, token_dim] =[196,768]

In the code implementation , Directly through a convolution layer . With ViT-B/16 For example , The convolution kernel size used is 16x16,stride by 16, The number of convolution kernels is 768,[224, 224, 3] -> [14, 14, 768] -> [196, 768]

In the input TransformerEncoder You need to add [class]token as well as Position Embedding. Splicing [class]token: Cat([1, 768], [196, 768]) -> [197, 768], superposition PositionEmbedding: [197, 768] -> [197, 768]

边栏推荐

- Implementation of stack

- 2022-06-30 網工進階(十四)路由策略-匹配工具【ACL、IP-Prefix List】、策略工具【Filter-Policy】

- Leetcode daily question solution: 540 A single element in an ordered array

- Kubernetes cluster builds efk log collection platform

- An old programmer gave it to college students

- Global and Chinese market of liquid antifreeze 2022-2028: Research Report on technology, participants, trends, market size and share

- 2.1 use of variables

- BOC protected tryptophan porphyrin compound (TAPP Trp BOC) Pink Solid 162.8mg supply - Qiyue supply

- Pat grade B 1009 is ironic (20 points)

- Strict data sheet of new features of SQLite 3.37.0

猜你喜欢

IP address is such an important knowledge that it's useless to listen to a younger student?

Don't be afraid of no foundation. Zero foundation doesn't need any technology to reinstall the computer system

How to improve data security by renting servers in Hong Kong

Vscode reports an error according to the go plug-in go get connectex: a connection attempt failed because the connected party did not pro

原生表格-滚动-合并功能

Teach you how to quickly recover data by deleting recycle bin files by mistake

2022-06-25 advanced network engineering (XI) IS-IS synchronization process of three tables (neighbor table, routing table, link state database table), LSP, cSNP, psnp, LSP

Change deepin to Alibaba image source

Part 28 supplement (XXVIII) busyindicator (waiting for elements)

2.6 formula calculation

随机推荐

Assign the CMD command execution result to a variable

Global and Chinese markets of cast iron diaphragm valves 2022-2028: Research Report on technology, participants, trends, market size and share

2022-06-30 網工進階(十四)路由策略-匹配工具【ACL、IP-Prefix List】、策略工具【Filter-Policy】

How to do Taobao full screen rotation code? Taobao rotation tmall full screen rotation code

JMeter plug-in installation

Parental delegation mechanism

PR notes:

MySQL dump - exclude some table data - MySQL dump - exclude some table data

Global and Chinese market of liquid antifreeze 2022-2028: Research Report on technology, participants, trends, market size and share

FPGA 学习笔记:Vivado 2019.1 工程创建

Rd file name conflict when extending a S4 method of some other package

FAQs for datawhale learning!

Global and Chinese markets of lithium chloride 2022-2028: Research Report on technology, participants, trends, market size and share

Global and Chinese market of two in one notebook computers 2022-2028: Research Report on technology, participants, trends, market size and share

Plan for the first half of 2022 -- pass the PMP Exam

2.3 other data types

2022 Xinjiang latest road transportation safety officer simulation examination questions and answers

Leetcode daily question solution: 540 A single element in an ordered array

JMeter connection database

Wechat applet quick start (including NPM package use and mobx status management)