Gym gets its first complete environment documentation, making it easier to get started with reinforcement learning!

2022-07-03 19:41:00 【Datawhale】

Reinforcement Learning Lab

Official website: http://www.neurondance.com/

Forum: http://deeprl.neurondance.com/

Editor: OpenDeepRL

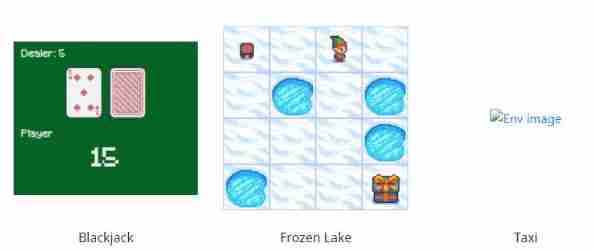

OpenAI Gym is a toolkit of environments for developing and comparing reinforcement learning algorithms. It supports training agents to do almost anything, from walking to playing games such as Pong or Go. It is compatible with numerical computation libraries such as PyTorch, TensorFlow, and Theano. At present it mainly supports Python.

The Gym documentation that was previously provided officially covered two main parts:

A set of test problems, each of which is called an environment. These can be used to develop reinforcement learning algorithms. The environments share a common interface, which lets users write general algorithms. Examples include Atari and CartPole.

The OpenAI Gym service: a site and an API that let users compare the performance of their trained algorithms.

Gym is well known in the reinforcement learning community for its simple, Pythonic interface and its ability to express general RL problems.

Six years after its release in 2016, Gym finally has its first complete environment documentation: https://www.gymlibrary.ml/

The documentation is organized into the following main parts:

API

Vector API

Spaces

Environments

Environment Creation

Third Party Environments

Wrappers

Tutorials

API

The introductory example in the documentation runs an instance of the CartPole-v0 environment for 1000 timesteps, rendering the environment at every step. You should see a window pop up showing the classic cart-pole problem.
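
The loop described above can be sketched without installing Gym. The following is a minimal illustration, not the documentation's own code: `CoinFlipEnv`, `sample_action`, and `run_random_agent` are hypothetical names made up for this sketch, and the environment only mimics the classic interface in which `reset()` returns an observation and `step(action)` returns `(observation, reward, done, info)`.

```python
import random


class CoinFlipEnv:
    """Hypothetical stand-in environment (not part of Gym).

    It mimics the classic Gym interface: reset() returns an observation and
    step(action) returns (observation, reward, done, info).
    """

    def __init__(self, episode_length=10, seed=None):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps_taken = 0
        self.coin = 0

    def reset(self):
        self.steps_taken = 0
        self.coin = self.rng.randint(0, 1)
        return self.coin

    def step(self, action):
        # Reward 1.0 when the action matches the coin shown in the last observation.
        reward = 1.0 if action == self.coin else 0.0
        self.steps_taken += 1
        self.coin = self.rng.randint(0, 1)
        done = self.steps_taken >= self.episode_length
        return self.coin, reward, done, {}

    def sample_action(self):
        # Stand-in for sampling a random valid action.
        return self.rng.randint(0, 1)


def run_random_agent(env, total_steps=1000):
    """Step the environment with random actions, resetting when an episode ends."""
    observation = env.reset()
    total_reward = 0.0
    episodes_finished = 0
    for _ in range(total_steps):
        action = env.sample_action()
        observation, reward, done, info = env.step(action)
        total_reward += reward
        if done:
            episodes_finished += 1
            observation = env.reset()
    return total_reward, episodes_finished


total_reward, episodes_finished = run_random_agent(CoinFlipEnv(seed=0))
print(total_reward, episodes_finished)
```

With Gym installed, the same loop would instead create the environment with `gym.make("CartPole-v0")`, call `env.render()` on every step, and draw actions from `env.action_space.sample()`.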

Vector API

Vectorized environments are environments that run multiple independent sub-environments, either sequentially or in parallel using multiprocessing. A vectorized environment takes a batch of actions as input and returns a batch of observations. This is particularly useful when, for example, the policy is defined as a neural network that operates on a batch of observations.

Gym provides two types of vectorized environments:

gym.vector.SyncVectorEnv, where the sub-environments are executed sequentially.

gym.vector.AsyncVectorEnv, where the sub-environments are executed in parallel using multiprocessing. This creates one process per sub-environment.
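
To make the sequential case concrete, here is a rough sketch of what stepping sub-environments one after another and batching their results could look like. It is an illustration under assumptions, not Gym's implementation: `CounterEnv` and `SimpleSyncVectorEnv` are hypothetical classes, plain lists stand in for NumPy arrays, and the immediate reset of a finished sub-environment is a choice made for this sketch.

```python
class CounterEnv:
    """Hypothetical sub-environment: the observation is a running counter."""

    def __init__(self, horizon):
        self.horizon = horizon
        self.count = 0

    def reset(self):
        self.count = 0
        return [float(self.count)]

    def step(self, action):
        self.count += action
        done = self.count >= self.horizon
        return [float(self.count)], 1.0, done, {}


class SimpleSyncVectorEnv:
    """Illustrative only: steps each sub-environment in sequence."""

    def __init__(self, env_fns):
        # Each entry of env_fns is a callable that builds one sub-environment.
        self.envs = [fn() for fn in env_fns]

    def reset(self):
        return [env.reset() for env in self.envs]

    def step(self, actions):
        observations, rewards, dones, infos = [], [], [], []
        for env, action in zip(self.envs, actions):
            obs, reward, done, info = env.step(action)
            if done:
                # Assumption of this sketch: restart right away so the batch stays full.
                obs = env.reset()
            observations.append(obs)
            rewards.append(reward)
            dones.append(done)
            infos.append(info)
        return observations, rewards, dones, tuple(infos)


envs = SimpleSyncVectorEnv([lambda: CounterEnv(horizon=2) for _ in range(3)])
first_observations = envs.reset()
observations, rewards, dones, infos = envs.step([1, 0, 1])
print(observations, rewards, dones, infos)
```

An asynchronous variant would keep the same batch-in, batch-out interface but hand each sub-environment to its own worker process.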

Similar to gym.make, you can use the gym.vector.make function to run a vectorized version of a registered environment. This runs multiple copies of the same environment (in parallel by default). The following example runs 3 copies of the CartPole-v1 environment in parallel. It takes a vector of 3 binary actions as input (one per sub-environment) and returns an array of 3 observations stacked along the first dimension, an array of the rewards returned by each sub-environment, and a Boolean array indicating whether the episode in each sub-environment has ended.

    >>> import gym
    >>> import numpy as np
    >>> envs = gym.vector.make("CartPole-v1", num_envs=3)
    >>> observations = envs.reset()
    >>> actions = np.array([1, 0, 1])
    >>> observations, rewards, dones, infos = envs.step(actions)
    >>> observations
    array([[ 0.00122802,  0.16228443,  0.02521779, -0.23700266],
           [ 0.00788269, -0.17490888,  0.03393489,  0.31735462],
           [ 0.04918966,  0.19421194,  0.02938497, -0.29495203]],
          dtype=float32)
    >>> rewards
    array([1., 1., 1.])
    >>> dones
    array([False, False, False])
    >>> infos
    ({}, {}, {})

Spaces

Spaces define the valid format of an environment's observation and action spaces. They provide function interfaces such as seed and sample, among others.
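
As a rough illustration of what such an interface offers, the sketch below models a tiny discrete space with `seed`, `sample`, and `contains` methods. `SimpleDiscrete` is a hypothetical class written for this example. It is not Gym's `gym.spaces.Discrete`, although it borrows the same method names.

```python
import random


class SimpleDiscrete:
    """Hypothetical miniature of a discrete space with values 0..n-1."""

    def __init__(self, n, seed=None):
        self.n = n
        self._rng = random.Random(seed)

    def seed(self, seed):
        """Re-seed the sampler so that sample() becomes reproducible."""
        self._rng = random.Random(seed)

    def sample(self):
        """Draw a random valid element of the space."""
        return self._rng.randrange(self.n)

    def contains(self, x):
        """Check whether x is a valid element of the space."""
        return isinstance(x, int) and 0 <= x < self.n


space = SimpleDiscrete(2)
space.seed(42)
first_run = [space.sample() for _ in range(5)]
space.seed(42)
second_run = [space.sample() for _ in range(5)]
print(first_run == second_run, space.contains(2))
```

Seeding matters mostly for reproducibility: two runs that seed the space identically draw the same sequence of random actions.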

Environments

The environments are the core of Gym. They fall into the categories described below.

Toy Text

All toy text environments were created using native Python libraries such as StringIO. These environments are designed to be extremely simple, with small discrete state and action spaces, so they are easy to learn. As a result, they are well suited to debugging implementations of reinforcement learning algorithms. All environments are configurable through the parameters specified in each environment's documentation.
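
To show why small discrete problems are handy for debugging, here is a sketch of tabular Q-learning on a made-up five-state chain. The chain, `chain_step`, and `q_learning` are hypothetical and are not one of Gym's toy text environments. The point is only that the whole value table is small enough to inspect by hand.

```python
import random

N_STATES = 5          # states 0..4; reaching state 4 ends the episode
LEFT, RIGHT = 0, 1


def chain_step(state, action):
    """Hypothetical toy dynamics: move left or right along a short chain."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore uniformly at random; Q-learning is off-policy, so the
            # learned values still describe the greedy policy.
            action = rng.choice((LEFT, RIGHT))
            next_state, reward, done = chain_step(state, action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
greedy_policy = [
    max((LEFT, RIGHT), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)
]
print(greedy_policy)
```

If the update rule is implemented correctly, the greedy policy chooses RIGHT in every non-terminal state. A wrong sign or a missing discount factor usually shows up immediately in a table this small.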

Atari

The Atari environments are simulated via the Arcade Learning Environment (ALE) [1].

MuJoCo

MuJoCo stands for Multi-Joint dynamics with Contact. It is a physics engine for facilitating research and development in robotics, biomechanics, graphics and animation, and other areas that need fast and accurate simulation.

These environments also require the MuJoCo engine to be installed. DeepMind acquired MuJoCo in October 2021 and open-sourced it in 2022, making it free for everyone. Instructions for installing the MuJoCo engine can be found on its website and in its GitHub repository. Using MuJoCo with OpenAI Gym also requires the mujoco-py framework, which can be found in its GitHub repository (the installation command given in the Gym documentation installs this dependency).

There are ten MuJoCo environments: Ant, HalfCheetah, Hopper, Humanoid, HumanoidStandup, InvertedDoublePendulum, InvertedPendulum, Reacher, Swimmer, and Walker. The initial state of all these environments is stochastic: Gaussian noise is added to a fixed initial state to introduce randomness. The state space of the MuJoCo environments in Gym consists of two parts that are flattened and concatenated: the positions of the body parts or joints ('mujoco-py.mjsim.qpos') and their corresponding velocities ('mujoco-py.mjsim.qvel'). Often, some of the first position elements are omitted from the state space because the reward is calculated from their values, which leaves the algorithm to infer these hidden values indirectly.
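
The two ideas in this paragraph, a noisy initial state and an observation built from positions and velocities, can be sketched in a few lines. This is an assumption-laden illustration rather than Gym's code: `noisy_initial_state`, `build_observation`, the `skipped_positions` parameter, and all numeric values are invented for the example.

```python
import random


def noisy_initial_state(fixed_state, noise_std, rng):
    """Add zero-mean Gaussian noise to each entry of a fixed initial state."""
    return [value + rng.gauss(0.0, noise_std) for value in fixed_state]


def build_observation(qpos, qvel, skipped_positions=1):
    """Concatenate positions and velocities, dropping the leading positions."""
    return list(qpos[skipped_positions:]) + list(qvel)


rng = random.Random(0)
# Made-up fixed initial positions and velocities for a three-coordinate body.
qpos = noisy_initial_state([0.0, 1.25, 0.0], noise_std=0.01, rng=rng)
qvel = noisy_initial_state([0.0, 0.0, 0.0], noise_std=0.01, rng=rng)
observation = build_observation(qpos, qvel, skipped_positions=1)
print(len(observation))
```

Here the first position coordinate is hidden from the agent, so the observation has five entries instead of six.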

Among the Gym environments, this set can be considered one of the harder ones for a policy to solve. The environments can be configured by changing the XML files or by adjusting the parameters of their classes.

Classic Control

There are five classic control environments: Acrobot, CartPole, Mountain Car, Continuous Mountain Car, and Pendulum. The initial state of each of these environments is random within a given range. In addition, Acrobot has noise applied to the actions taken. In both Mountain Car environments the cars are underpowered, so it takes some effort to reach the top of the hill. Among the Gym environments, this set can be considered one of the easier ones for a policy to solve. All environments are highly configurable through the parameters specified in each environment's documentation.

Box2D

These environments all involve toy games based on physics control, using Box2D-based physics and PyGame-based rendering. They were contributed in the early days of Gym by Oleg Klimov and have been popular toy benchmarks ever since. All environments are highly configurable through the parameters specified in each environment's documentation.

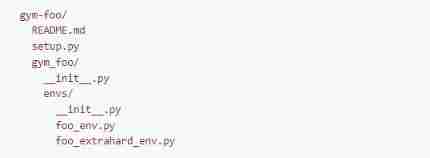

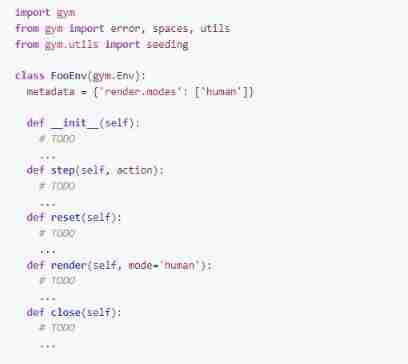

Environment Creation

How to create a new environment for Gym

This part of the documentation gives an overview of creating new environments, along with the related wrappers, utilities, and tests that OpenAI Gym includes for this purpose.

Example custom environment

The documentation includes a simple skeleton of the repository structure for a Python package that contains a custom environment. For a more complete example, see: https://github.com/openai/gym-soccer.

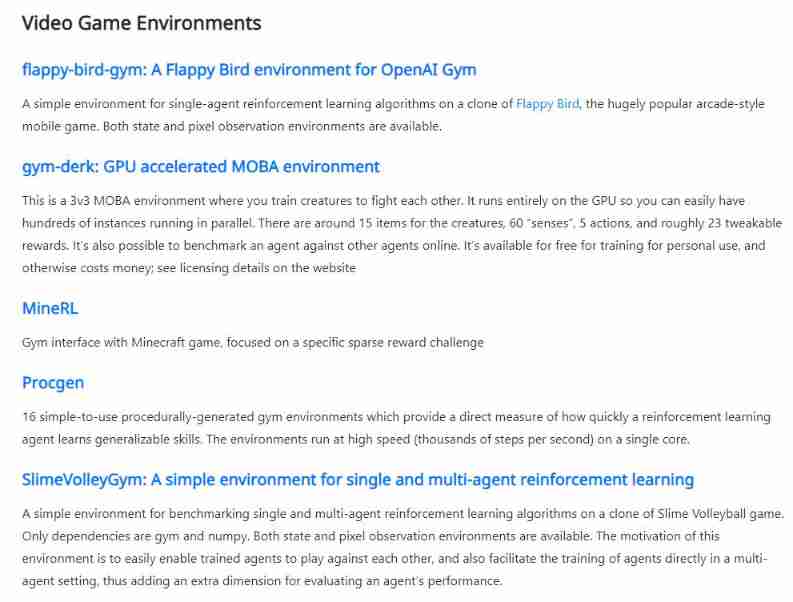
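
As a complement to the repository skeleton, the sketch below shows the kind of environment class such a package might contain. It is only an illustration: `LineWorldEnv` is a hypothetical environment, and it deliberately does not subclass `gym.Env` so that it runs without Gym installed. It follows the same `reset`, `step`, `render`, and `close` method set.

```python
class LineWorldEnv:
    """Hypothetical custom environment: walk along a line to the goal cell."""

    def __init__(self, size=5):
        self.size = size
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        delta = 1 if action == 1 else -1
        self.position = min(self.size - 1, max(0, self.position + delta))
        done = self.position == self.size - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done, {}

    def render(self):
        # Text rendering: 'A' marks the agent, 'G' the goal cell.
        cells = ["." for _ in range(self.size)]
        cells[-1] = "G"
        cells[self.position] = "A"
        return "".join(cells)

    def close(self):
        # Nothing to release in this sketch.
        pass


env = LineWorldEnv(size=5)
start = env.reset()
frames = [env.render()]
done = False
while not done:
    observation, reward, done, info = env.step(1)
    frames.append(env.render())
env.close()
print("\n".join(frames))
```

In a real package, a class like this would typically subclass `gym.Env`, declare its `action_space` and `observation_space`, and be registered under an ID so that `gym.make` can create it.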

Third Party Environments

The third-party environments section mainly covers 61 environments:

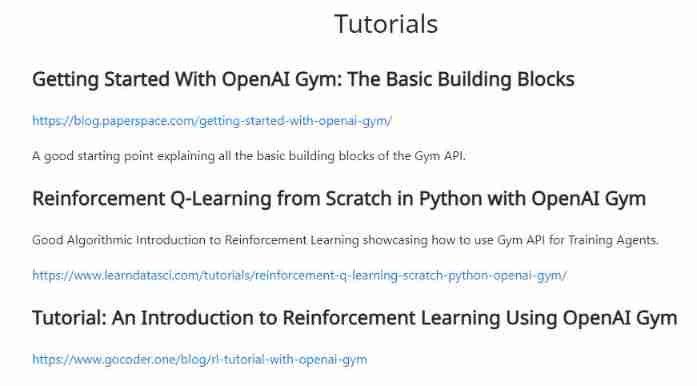

Finally, the documentation provides some introductory tutorials.

Dry goods learning , spot Fabulous Three even ↓

边栏推荐

- Day11 ---- 我的页面, 用户信息获取修改与频道接口

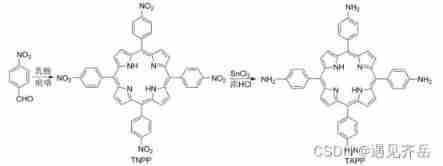

- Bright purple crystal meso tetra (4-aminophenyl) porphyrin tapp/tapppt/tappco/tappcd/tappzn/tapppd/tappcu/tappni/tappfe/tappmn metal complex - supplied by Qiyue

- PR 2021 quick start tutorial, how to create new projects and basic settings of preferences?

- Free hand account sharing in September - [cream Nebula]

- Bad mentality leads to different results

- Buuctf's different flags and simplerev

- Day10 -- forced login, token refresh and JWT disable

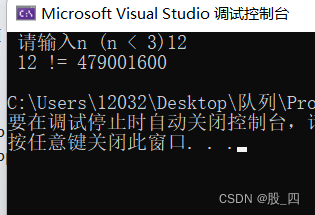

- 第一章:求n的阶乘n!

- The necessity of lean production and management in sheet metal industry

- These problems should be paid attention to in the production of enterprise promotional videos

猜你喜欢

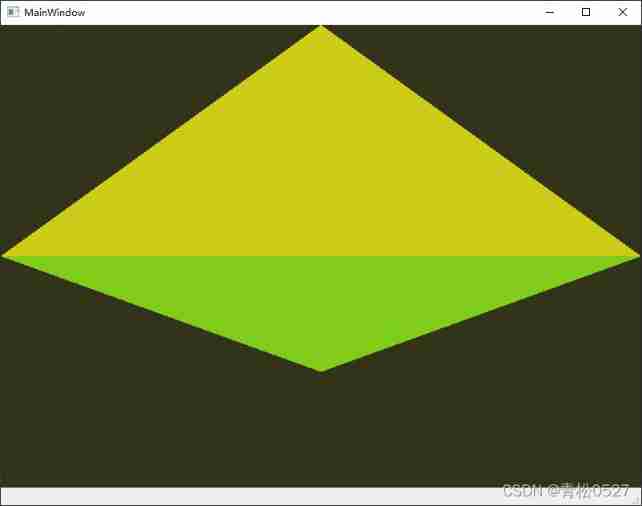

01 - QT OpenGL display OpenGL window

2022-06-25 网工进阶(十一)IS-IS-三大表(邻居表、路由表、链路状态数据库表)、LSP、CSNP、PSNP、LSP的同步过程

Day18 - basis of interface testing

2022-07-02 网工进阶(十五)路由策略-Route-Policy特性、策略路由(Policy-Based Routing)、MQC(模块化QoS命令行)

The earliest record

Chapter 1: find the factorial n of n!

04 -- QT OpenGL two sets of shaders draw two triangles

Chapter 20: y= sin (x) /x, rambling coordinate system calculation, y= sin (x) /x with profile graphics, Olympic rings, ball rolling and bouncing, water display, rectangular optimization cutting, R que

第二章:4位卡普雷卡数,搜索偶数位卡普雷卡数,搜索n位2段和平方数,m位不含0的巧妙平方数,指定数字组成没有重复数字的7位平方数,求指定区间内的勾股数组,求指定区间内的倒立勾股数组

Bright purple crystal meso tetra (4-aminophenyl) porphyrin tapp/tapppt/tappco/tappcd/tappzn/tapppd/tappcu/tappni/tappfe/tappmn metal complex - supplied by Qiyue

随机推荐

2020 intermediate financial management (escort class)

2022 - 06 - 30 networker Advanced (XIV) Routing Policy Matching Tool [ACL, IP prefix list] and policy tool [Filter Policy]

5. MVVM model

BOC protected phenylalanine zinc porphyrin (Zn · TAPP Phe BOC) / iron porphyrin (Fe · TAPP Phe BOC) / nickel porphyrin (Ni · TAPP Phe BOC) / manganese porphyrin (Mn · TAPP Phe BOC) Qiyue Keke

4. Data binding

3. Data binding

Professional interpretation | how to become an SQL developer

FPGA learning notes: vivado 2019.1 project creation

Compared with 4G, what are the advantages of 5g to meet the technical requirements of industry 4.0

05 -- QT OpenGL draw cube uniform

2. Template syntax

Free sharing | linefriends hand account inner page | horizontal grid | not for sale

Difference between surface go1 and surface GO2 (non professional comparison)

Octopus online ecological chain tour Atocha protocol received near grant worth $50000

5- (4-nitrophenyl) - 10,15,20-triphenylporphyrin ntpph2/ntppzn/ntppmn/ntppfe/ntppni/ntppcu/ntppcd/ntppco and other metal complexes

Bad mentality leads to different results

02 -- QT OpenGL drawing triangle

Sentinel source code analysis part I sentinel overview

Luogu-p1107 [bjwc2008] Lei Tao's kitten

Merge K ascending linked lists