当前位置:网站首页>Introduction to machine learning compilation course learning notes lesson 2 tensor program abstraction

Introduction to machine learning compilation course learning notes lesson 2 tensor program abstraction

2022-06-30 22:34:00 【herosunly】

In this lesson slides Links are as follows :https://mlc.ai/summer22-zh/slides/2-TensorProgram.pdf;notes Links are as follows :https://mlc.ai/zh/chapter_tensor_program/.

List of articles

1. The outline of this lesson

- Metatensor function ( Tensor operator )

- Tensor program abstraction

Through this lesson, I have a deeper understanding of batch processing . Batch processing and small batch gradient descent are similar ( The premise is that the calculation is independent ). This will be described in more detail later .

2. Metatensor function

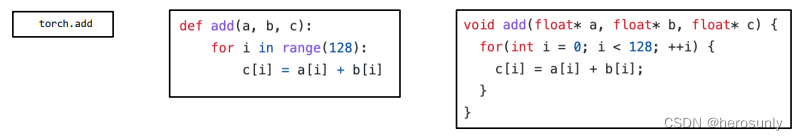

A meta tensor function is a single element ( Minimum particle size ) The tensor function of . With PyTorch Take addition in as an example :

import torch

C = torch.empty((128,), dtype=torch.float32)

a = torch.tensor(np.arange(128, dtype="float32"))

b = torch.tensor(np.ones(128, dtype="float32"))

torch.add(a,b,out=c) # torch.add can be viewed as a primitive tensor function

that torch.add How to achieve it ? Or in actual deployment , How to put different steps ( Multiple tensor functions ) Merge together ?

abstract And Realization Is one of the core concepts of this course . For the same tensor operator , There are different ways to achieve :

The second representation is a refinement of the first representation , The third representation is the use of low-level languages (C Language , Even in assembly language ) For more efficient implementation .

One most common MLC process that many frameworks offer is to transform the implementations of primitive functions(or dispatch them in runtime) to more optimized ones based on the environment.

A common method in tensor operator transformation is to map it directly to the corresponding operator library . For example CUDA Environment , You can use cudaAdd. Another common one is Finer grained program transformation . The former can be directly represented by symbols , The latter needs to be expressed in a circular form .

3. Tensor program abstraction

The tensor operator transformation mentioned above includes two common forms , One is to map directly to the corresponding operator library , The other is to carry out finer grained program transformation . The latter is called Tensor function abstraction .

The picture below is TVM An example in :

Tensor function abstraction includes three key elements :

- Multidimensional input 、 Output data .

- loop .

- In each cycle , Make the corresponding calculation .

It involves a problem : That is why we don't use C Language and other low-level languages . It mainly lies in that the core of machine learning compilation is not only that the program ape can complete the coding , It is more important to complete the tensor operator transformation , That is, given a tensor, it will be transformed from the original form to a more optimized form ( Try different transformations , Finally find the most optimized form ). If we can explore the equivalent space corresponding to the possible tensor function through the program , Can greatly improve efficiency .

3.1 Why do we need tensor program abstraction

stay Program-based transformnations Loop splitting in , In essence, it is to transform a single-layer cycle into a double-layer cycle ( Loop splitting is a common transformation ), and Transformed program It involves parallelization and vectorization , Vectorization here means that each step is based on the length 4 Unit of ( The batch ) To do an operation .

3.2 Common tensor transformations

3.2.1 Loop split

The equivalent substitution includes : x = x o ∗ 4 + x i x=x_o*4+x_i x=xo∗4+xi

3.2.2 Cyclic rearrangement

The essence of cycle rearrangement is to replace the sequence of two cycles .

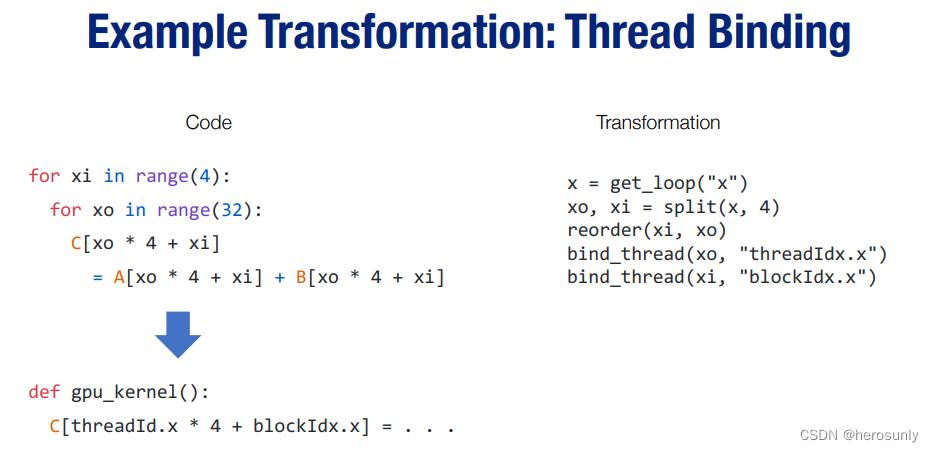

3.2.3 Thread binding

If in GPU Parallelize on , You can put xi and xo Corresponding to GPU On two threads of . By binding GPU The thread of gets the final result ( More in-depth explanations will be given in subsequent chapters ).

Try different transformation combinations in different hardware environments , Then, according to the test, the optimal transformation is obtained in the equivalent space corresponding to the tensor function . One thing to note is that , The transformation here must be equivalent . Here's a counterexample , In essence, it can be expressed as C = A + C C=A+C C=A+C,C For others index Under the C The results are dependent ( stay xo = 4, xi = 0 The situation will be right xo = 0, xi = 1 Results in dependency , And if we change the order of the loop xo = 0, xi = 1 It will be circulated to ), If it's just simple reorder, The order of the loop will change, causing the dependency to be broken , The left side of the equation will appear C To the right of the equation C There is no result yet . So there is no loop rearrangement here .

for xo in range(32):

for xi in range(4):

C[xo * 4 + xi] = A[xo * 4 + xi] + C[max(xo * 4 + xi – 1, 0)]

3.3 Other structures in tensor program abstraction

According to the above , We cannot change the program arbitrarily ( For example, some calculations will depend on the order between loops ). But fortunately , Most of the metatensor functions we are interested in have good properties ( For example, between loop iterations independence ).

The tensor program can combine this additional information into a part of the program , To make program transformation more convenient .

among vi = T.axis.spatial(128, i) Not only is it equivalent to vi=i, And show that vi Represents an iterator , And all iterations in the iterator are independent . This information is not necessary to execute the program , But it will make it more convenient for us to change this program . In this case , We know we can Safely parallel Or reorder all and vi About the cycle , As long as the actual implementation vi Value of from 0 To 128 The order of change .

23:20

4. Tensor program transformation practice

The first thing to say is : If there is already Colab account number , It is more recommended to directly use Colab Environmental Science , Then you can directly use the following pip Installation package .

4.1 Installation package

The official installation order is as follows , But because of https://mlc.ai/wheels You need a proxy to connect , If not Colab The following commands are not recommended for environment installation :

python3 -m pip install mlc-ai-nightly -f https://mlc.ai/wheels

The recommended method is to manually download a file that is suitable for the environment wheel file , Then install :

Personal environment is Linux+Python3.8 Environmental Science , The download link of the corresponding file is :https://github.com/mlc-ai/utils/releases/download/v0.9.dev0/mlc_ai_nightly-0.9.dev1661%2Bg2b34ced6c-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.

4.2 Construct tensor program

import numpy as np

import tvm

from tvm.ir.module import IRModule

from tvm.script import tir as T

@tvm.script.ir_module

class MyModule:

@T.prim_func

def main(A: T.Buffer[128, "float32"],

B: T.Buffer[128, "float32"],

C: T.Buffer[128, "float32"]):

# extra annotations for the function

T.func_attr({

"global_symbol": "main", "tir.noalias": True})

for i in range(128):

with T.block("C"):

# declare a data parallel iterator on spatial domain

vi = T.axis.spatial(128, i)

C[vi] = A[vi] + B[vi]

TVMScript It's a way for us to Python The way to represent tensor programs in the form of abstract syntax trees . Notice that this code does not actually correspond to a Python Program , Instead, it corresponds to a tensor program in the compilation process of machine learning .TVMScript The language of is designed to communicate with Python Corresponding to grammar , And in Python On the basis of grammar, additional structure is added to help program analysis and transformation .

print(type(MyModule)) # tvm.ir.module.IRModule

MyModule yes IRModule An example of a data structure , yes A set of tensor functions .

We can go through script Function to get this IRModule Of TVMScript Express . This function is useful for checking between step-by-step program transformations IRModule Very helpful for .

print(MyModule.script())

@tvm.script.ir_module

class Module:

@tir.prim_func

def func(A: tir.Buffer[128, "float32"], B: tir.Buffer[128, "float32"], C: tir.Buffer[128, "float32"]) -> None:

# function attr dict

tir.func_attr({

"global_symbol": "main", "tir.noalias": True})

# body

# with tir.block("root")

for i in tir.serial(128):

with tir.block("C"):

vi = tir.axis.spatial(128, i)

tir.reads(A[vi], B[vi])

tir.writes(C[vi])

C[vi] = A[vi] + B[vi]

4.3 Compile and run

Use build The function will have a IRModule Into executable functions , among llvm Express CPU As a hardware execution environment .

rt_mod = tvm.build(MyModule, target="llvm") # The module for CPU backends.

type(rt_mod)

tvm.driver.build_module.OperatorModule

After compilation ,mod Contains a set of executable functions . We can get the corresponding functions by their names .

func = rt_mod["main"]

func

<tvm.runtime.packed_func.PackedFunc>

First we construct three tensors , among c Used to receive output :

a = tvm.nd.array(np.arange(128, dtype="float32"))

b = tvm.nd.array(np.ones(128, dtype="float32"))

c = tvm.nd.empty((128,), dtype="float32")

func(a, b, c)

print(c)

[ 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14.

15. 16. 17. 18. 19. 20. 21. 22. 23. 24. 25. 26. 27. 28.

29. 30. 31. 32. 33. 34. 35. 36. 37. 38. 39. 40. 41. 42.

43. 44. 45. 46. 47. 48. 49. 50. 51. 52. 53. 54. 55. 56.

57. 58. 59. 60. 61. 62. 63. 64. 65. 66. 67. 68. 69. 70.

71. 72. 73. 74. 75. 76. 77. 78. 79. 80. 81. 82. 83. 84.

85. 86. 87. 88. 89. 90. 91. 92. 93. 94. 95. 96. 97. 98.

99. 100. 101. 102. 103. 104. 105. 106. 107. 108. 109. 110. 111. 112.

113. 114. 115. 116. 117. 118. 119. 120. 121. 122. 123. 124. 125. 126.

127. 128.]

4.4 Tensor program transformation

Now we begin to transform the tensor program . A tensor program can be implemented through an auxiliary called scheduling (schedule) The data structure is transformed .

sch = tvm.tir.Schedule(MyModule)

type(sch)

tvm.tir.schedule.schedule.Schedule

We first try to split the loop .

# Get block by its name

block_c = sch.get_block("C")

# Get loops surrounding the block, Get block Outside the corresponding loop

(i,) = sch.get_loops(block_c)

# Tile the loop nesting.

i_0, i_1, i_2 = sch.split(i, factors=[None, 4, 4])

print(sch.mod.script())

take for i in tir.serial(128) Medium i Changed to i_0, i_1 and i_2. Another thing to note factors=[None, 4, 4] and tir.grid(8, 4, 4) The one-to-one correspondence between vi Equivalent to tir.axis.spatial(128, i_0 * 16 + i_1 * 4 + i_2). The latter is represented by a triple cycle .

@tvm.script.ir_module

class Module:

@tir.prim_func

def func(A: tir.Buffer[128, "float32"], B: tir.Buffer[128, "float32"], C: tir.Buffer[128, "float32"]) -> None:

# function attr dict

tir.func_attr({

"global_symbol": "main", "tir.noalias": True})

# body

# with tir.block("root")

for i_0, i_1, i_2 in tir.grid(8, 4, 4):

with tir.block("C"):

vi = tir.axis.spatial(128, i_0 * 16 + i_1 * 4 + i_2)

tir.reads(A[vi], B[vi])

tir.writes(C[vi])

C[vi] = A[vi] + B[vi]

In addition, you can rearrange the loop , For example, will i_2 Move to i_1 On the outside .

sch.reorder(i_0, i_2, i_1)

print(sch.mod.script())

@tvm.script.ir_module

class Module:

@tir.prim_func

def func(A: tir.Buffer[128, "float32"], B: tir.Buffer[128, "float32"], C: tir.Buffer[128, "float32"]) -> None:

# function attr dict

tir.func_attr({

"global_symbol": "main", "tir.noalias": True})

# body

# with tir.block("root")

for i_0, i_2, i_1 in tir.grid(8, 4, 4):

with tir.block("C"):

vi = tir.axis.spatial(128, i_0 * 16 + i_1 * 4 + i_2)

tir.reads(A[vi], B[vi])

tir.writes(C[vi])

C[vi] = A[vi] + B[vi]

Another common transformation is parallelization , For example, parallel operation on the outermost loop .

sch.parallel(i_0)

print(sch.mod.script())

@tvm.script.ir_module

class Module:

@tir.prim_func

def func(A: tir.Buffer[128, "float32"], B: tir.Buffer[128, "float32"], C: tir.Buffer[128, "float32"]) -> None:

# function attr dict

tir.func_attr({

"global_symbol": "main", "tir.noalias": True})

# body

# with tir.block("root")

for i_0 in tir.parallel(8):

for i_2, i_1 in tir.grid(4, 4):

with tir.block("C"):

vi = tir.axis.spatial(128, i_0 * 16 + i_1 * 4 + i_2)

tir.reads(A[vi], B[vi])

tir.writes(C[vi])

C[vi] = A[vi] + B[vi]

5. summary

2.4. summary

The meta tensor function represents the single unit computation in the computation of machine learning model .

- A machine learning compilation process can selectively transform the implementation of meta tensor functions .

A tensor program is an efficient abstraction of a tensor function .

- Key ingredients include : Multidimensional arrays 、 A nested loop 、 Calculation statement .

- Program transformation can be used to speed up the execution of tensor programs .

- Additional structures in tensor programs can provide more information for program transformation .

边栏推荐

- CNN classic network model details -lenet-5 (pytorch Implementation)

- Flip the linked list ii[three ways to flip the linked list +dummyhead/ head insertion / tail insertion]

- Where can I find the computer device manager

- leetcode:104. Maximum depth of binary tree

- A new one from Ali 25K came to the Department, which showed me what the ceiling is

- vncserver: Failed command ‘/etc/X11/Xvnc-session‘: 256!

- Domestic database disorder

- What does the &?

- d编译时计数

- B_ QuRT_ User_ Guide(35)

猜你喜欢

与AI结对编程式是什么体验 Copilot vs AlphaCode, Codex, GPT-3

Graduation project

B_ QuRT_ User_ Guide(33)

latex中 & 号什么含义?

Nansen复盘加密巨头自救:如何阻止百亿多米诺倾塌

How to design test cases

Why does the computer speed slow down after vscode is used for a long time?

Braces on the left of latex braces in latex multiline formula

Where can I find the computer version of wechat files

Uniapp life cycle / route jump

随机推荐

2022-06-30: what does the following golang code output? A:0; B:2; C: Running error. package main import “fmt“ func main() { ints := make

[450. delete nodes in binary search tree]

【BSP视频教程】BSP视频教程第19期:单片机BootLoader的AES加密实战,含上位机和下位机代码全开源(2022-06-26)

Anfulai embedded weekly report no. 271: June 20, 2022 to June 26, 2022

Uniapp rich text editor

10 airbags are equipped as standard, and Chery arizer 8 has no dead corner for safety protection

Redis' transaction and locking mechanism

Some memory problems summarized

How to realize the center progress bar in wechat applet

Store Nagios monitoring information into MySQL

[golang] golang实现截取字符串函数SubStr

In depth analysis of Apache bookkeeper series: Part 4 - back pressure

Spark - understand partitioner in one article

[career planning for Digital IC graduates] Chap.1 overview of IC industry chain and summary of representative enterprises

Redis' cache penetration, cache breakdown and cache avalanche

电脑设备管理器在哪里可以找到

Golang application ━ installation, configuration and use of Hugo blog system

Uniapp life cycle / route jump

msf之ms17-010永恒之蓝漏洞

RP prototype resource sharing - shopping app