It's so difficult for me. Have you met these interview questions?

2022-06-24 11:06:00 【Nightmares】

1、 How much traffic does your project handle?

It depends on the actual project. Here is an example.

A traditional, non-SaaS project built for a single enterprise usually has around 1,000 users. Concurrency is rarely an issue, and a Redis cache plus multithreading is usually enough to handle it.

For Internet projects, daily active users are typically around 10,000, with concurrency between 200 and 500. Because the number of users is expected to grow later, a wider range of measures comes into play.

DNS, front-end and back-end services, cache optimization, thread pools, nginx, static pages, clustering, high-availability architecture, read/write separation, and so on. There are many options; choose according to the actual needs of the project.

2、 What do you use Redis for?

As mentioned above, Redis is mainly used for caching, for example storing files and other information. Different data types are used for different business scenarios, which also answers question 4 below. Earlier articles cover this in detail, so I won't repeat it here.

Beyond that, Redis topics also include: cluster setup, cache avalanche, cache penetration, consistency, read/write separation, master/standby setup, expiration time settings, and comparisons with similar non-relational databases. Some interviewers ask how the data is stored, which can be explained in terms of the data types above. Those are basically the main points.

3、 What do you do if Redis goes down?

Causes of a cache avalanche:

Simply put, a cache avalanche happens when the existing cache expires (or the data was never loaded into the cache) and the new cache is not yet in place. During that window, all requests that would normally be served from Redis go to the database instead, putting heavy pressure on database CPU and memory. In severe cases the database goes down and the whole system crashes.

The basic solutions are as follows:

First, most system designers use locks or queues so that a large number of threads cannot read and write the database at the same time, which avoids excessive pressure on the database when the cache expires. This relieves database pressure to some extent, but it also reduces system throughput.

Second, analyze user behavior and spread cache expiration times as evenly as possible.

Third, if the cause is a cache server going down, consider a master/standby setup for Redis. However, a double cache involves update transactions, and an update may read dirty data, which needs to be handled.

Solutions to the Redis avalanche effect:

1、 Use a distributed lock, or a local lock on a single machine

When a burst of requests suddenly reaches the database server, limit them. Use a locking mechanism to ensure that only one thread (request) performs the operation while the others wait in line (a distributed lock for a cluster, a local lock for a single machine). The drawback is reduced server throughput and lower efficiency.

Ensuring that only one thread can enter really means that only one request performs the query at a time.

A rate-limiting strategy can also be used here.
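The single-machine "local lock" variant described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the author's implementation: the class name `SingleLoaderCache`, the in-memory map standing in for Redis, and the `database` function are all hypothetical. In a cluster the `synchronized` block would be replaced by a distributed lock.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

/** In-memory stand-in for "Redis + database" showing that only one thread rebuilds a key. */
class SingleLoaderCache {
    private final Map<String, String> cache = new ConcurrentHashMap<>();  // plays the role of Redis
    private final Map<String, Object> locks = new ConcurrentHashMap<>();  // one local lock per key
    private final Function<String, String> database;                      // hypothetical slow lookup
    final AtomicInteger databaseHits = new AtomicInteger();               // counts real database queries

    SingleLoaderCache(Function<String, String> database) {
        this.database = database;
    }

    String get(String key) {
        String value = cache.get(key);
        if (value != null) {
            return value;                         // cache hit, no lock needed
        }
        Object lock = locks.computeIfAbsent(key, k -> new Object());
        synchronized (lock) {                     // only one thread per key gets past here at a time
            value = cache.get(key);               // re-check: another thread may have loaded it already
            if (value == null) {
                databaseHits.incrementAndGet();
                value = database.apply(key);
                if (value != null) {
                    cache.put(key, value);        // backfill so waiting threads find it
                }
            }
            return value;
        }
    }
}
```

With many concurrent callers asking for the same missing key, only the first one reaches the database; the rest block briefly and then read the backfilled value, which is exactly the throughput trade-off mentioned above.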

2、 Message-oriented middleware

If a large number of requests arrive and Redis has no value, the query results are placed in the message-oriented middleware (taking advantage of the asynchronous nature of MQ).

3、 Two-level caching (level 1 and level 2): Redis + Ehcache

4、 Spread out the expiration times of Redis keys

Set different expiration times for different keys so that cache expirations are spread as evenly as possible.
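One common way to spread expirations is to add a random offset to a base TTL. The sketch below only computes the TTL value; the class and method names are hypothetical, and passing the result to your Redis client's expire call is left out.

```java
import java.util.concurrent.ThreadLocalRandom;

class TtlJitter {
    /** Returns baseSeconds plus a random offset in the range [0, maxJitterSeconds]. */
    static long ttlWithJitter(long baseSeconds, long maxJitterSeconds) {
        if (baseSeconds <= 0 || maxJitterSeconds < 0) {
            throw new IllegalArgumentException("baseSeconds must be > 0 and maxJitterSeconds >= 0");
        }
        // nextLong(bound) is exclusive of bound, so add 1 to make the upper limit inclusive
        return baseSeconds + ThreadLocalRandom.current().nextLong(maxJitterSeconds + 1);
    }
}
```

For example, `TtlJitter.ttlWithJitter(600, 300)` yields a TTL between 10 and 15 minutes, so keys written in the same batch do not all expire in the same second.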

4、 Which Redis data types do you use most often?

String, Hash (dictionary), List, Set, and Sorted Set (ordered set)

5、 How do you keep the Redis cache consistent with the database?

1. First option: delayed double deletion

Call redis.del(key) both before and after writing to the database, and set a reasonable timeout.

The pseudocode is as follows:

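    public void write(String key, Object data) throws InterruptedException {
        redis.delKey(key);     // 1) delete the cache first
        db.updateData(data);   // 2) then write to the database
        Thread.sleep(500);     // 3) wait for in-flight reads to finish
        redis.delKey(key);     // 4) delete the cache again
    }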

2. The concrete steps are:

1) Delete the cache first

2) Then write to the database

3) Sleep for 500 milliseconds

4) Delete the cache again

So how is this 500 milliseconds determined? How long should the sleep be?

You need to measure how long the read path of your project's business logic takes. The goal is to make sure in-flight read requests have finished, so that the write request can delete any dirty cached data those reads produced.

This strategy also has to account for the time Redis and the database take for master-slave synchronization. So the final sleep time for a write is the duration of the read business logic plus a few hundred milliseconds, for example 1 second.
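The four steps can be tried out without a real Redis or database. The sketch below is a variation, not the pseudocode above: it schedules the second delete on a background thread instead of calling Thread.sleep, so the writer is not blocked. The class name and the two in-memory maps (standing in for Redis and the database) are assumptions made for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class DelayedDoubleDelete {
    final Map<String, String> cache = new ConcurrentHashMap<>();     // stand-in for Redis
    final Map<String, String> database = new ConcurrentHashMap<>();  // stand-in for the database
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(r -> {
                Thread t = new Thread(r, "second-delete");
                t.setDaemon(true);   // do not keep the JVM alive just for pending deletes
                return t;
            });
    private final long delayMillis;

    DelayedDoubleDelete(long delayMillis) {
        this.delayMillis = delayMillis;
    }

    void write(String key, String value) {
        cache.remove(key);               // 1) delete the cache
        database.put(key, value);        // 2) write the database
        // 3) + 4) delete the cache again after the delay, without blocking the writer
        scheduler.schedule(() -> cache.remove(key), delayMillis, TimeUnit.MILLISECONDS);
    }

    String read(String key) {
        // read-through: on a miss, load from the database and backfill the cache
        return cache.computeIfAbsent(key, database::get);
    }
}
```

If a slow reader backfills the old value between steps 1 and 2, the delayed second delete removes it, and the next read reloads the new value from the database.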

3. Set a cache expiration time

In theory, setting an expiration time on the cache is a solution that guarantees eventual consistency. The database is the source of truth for all writes; once a cache entry expires, subsequent reads naturally load the new value from the database and backfill the cache.

4. Drawbacks of this approach

With double deletion plus a cache timeout, the worst case is that the data is inconsistent for the duration of the timeout, and write requests also take longer.

2、 Second option: update the cache asynchronously (a synchronization mechanism based on subscribing to the binlog)

1. Overall technical approach:

MySQL binlog incremental subscription and consumption + message queue + incremental data updates to Redis

1) Read from Redis: hot data is mostly in Redis

2) Write to MySQL: all inserts, deletes, and updates go to MySQL

3) Update Redis data: MySQL data operations are recorded in the binlog and then applied to Redis

2. Updating Redis

1) Data operations fall into two main categories:

One is full (write all data to Redis at once)

The other is incremental (real-time updates)

Here we mean incremental, that is, data changed by MySQL update, insert, and delete statements.

2) After reading and parsing the binlog, use a message queue to push updates to the cached data in each Redis instance.

This way, whenever MySQL performs an insert, update, delete, or similar operation, the relevant binlog information can be pushed toward Redis, and Redis is updated according to the records in the binlog.

This mechanism is in fact very similar to MySQL's master-slave replication, because the MySQL master and slave also use the binlog to keep their data consistent.

canal (an open-source framework from Alibaba) can be used here to subscribe to the MySQL binlog. canal works by imitating the replication request of a MySQL slave, so that Redis data updates achieve the same effect.

Of course, you can also use other third-party tools such as Kafka or RabbitMQ to push the update messages to Redis.
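The flow "binlog change, then message queue, then Redis update" can be sketched with in-memory stand-ins. Everything named here is hypothetical: `ChangeEvent` is a simplified shape for one parsed row change (not canal's actual message format), the `BlockingQueue` stands in for Kafka or RabbitMQ, and the map stands in for Redis.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

class BinlogCacheSync {
    enum Op { INSERT, UPDATE, DELETE }

    /** Hypothetical, simplified shape of one parsed binlog row change. */
    static final class ChangeEvent {
        final Op op;
        final String key;
        final String value;

        ChangeEvent(Op op, String key, String value) {
            this.op = op;
            this.key = key;
            this.value = value;
        }
    }

    final BlockingQueue<ChangeEvent> queue = new LinkedBlockingQueue<>();  // stand-in for the message queue
    final Map<String, String> cache = new ConcurrentHashMap<>();          // stand-in for Redis

    /** Producer side: what a binlog subscriber would publish after parsing a change. */
    void publish(Op op, String key, String value) {
        queue.add(new ChangeEvent(op, key, value));
    }

    /** Consumer side: apply every queued event to the cache in order; returns how many were applied. */
    int drainToCache() {
        int applied = 0;
        ChangeEvent event;
        while ((event = queue.poll()) != null) {
            if (event.op == Op.DELETE) {
                cache.remove(event.key);
            } else {
                cache.put(event.key, event.value);  // INSERT and UPDATE both overwrite the cached row
            }
            applied++;
        }
        return applied;
    }
}
```

Because events are applied in queue order, the cache ends up reflecting the last change for each key, which mirrors how a replica replays the binlog.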

6、 Suppose you define a custom property in Spring Boot. How do you reference it inside a bean?

1. Inside a package that Spring Boot scans

Write a utility class, SpringUtil, that implements the ApplicationContextAware interface, and add the @Component annotation so that Spring picks up the bean when it scans.

2. Outside the packages that Spring Boot scans

This case is also easy to handle. First write the SpringUtil class, which again needs to implement the ApplicationContextAware interface.

7、 How does JWT work?

Focus mainly on authentication and authorization. It is also worth looking at long-lived and short-lived tokens and how token expiration is handled.
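As background for the authentication part: a JWT is three Base64URL-encoded segments, header.payload.signature, and the server trusts the payload only if the signature matches. The sketch below shows HS256 signing and signature checking with the JDK alone. The class name `MiniJwt` is hypothetical, the payload is passed in as a ready-made JSON string, and claim checks such as `exp` are deliberately left out, so treat it as an illustration rather than a replacement for a JWT library.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

class MiniJwt {
    private static final Base64.Encoder ENCODER = Base64.getUrlEncoder().withoutPadding();
    private static final String HEADER = "{\"alg\":\"HS256\",\"typ\":\"JWT\"}";

    /** Builds header.payload.signature; payloadJson is used as-is, for example {"sub":"42"}. */
    static String sign(String payloadJson, byte[] secret) {
        String signingInput = encode(HEADER) + "." + encode(payloadJson);
        return signingInput + "." + ENCODER.encodeToString(hmac(signingInput, secret));
    }

    /** Returns true only if the token has three parts and its signature matches the secret. */
    static boolean verify(String token, byte[] secret) {
        String[] parts = token.split("\\.");
        if (parts.length != 3) {
            return false;
        }
        byte[] expected = hmac(parts[0] + "." + parts[1], secret);
        byte[] actual;
        try {
            actual = Base64.getUrlDecoder().decode(parts[2]);
        } catch (IllegalArgumentException e) {
            return false;                                   // signature segment is not valid Base64URL
        }
        return MessageDigest.isEqual(expected, actual);     // constant-time comparison
    }

    private static String encode(String text) {
        return ENCODER.encodeToString(text.getBytes(StandardCharsets.UTF_8));
    }

    private static byte[] hmac(String data, byte[] secret) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret, "HmacSHA256"));
            return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 unavailable", e);
        }
    }
}
```

A short-lived access token plus a longer-lived refresh token is usually layered on top of this: the signature check stays the same, and the server additionally compares the `exp` claim in the payload with the current time.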

8、 During registration the user clicks several times because of network jitter, and the database has no unique index check. How do you deal with it? The user sends two requests. How do you handle them?

First, intercept the requests: multiple requests initiated within 1 minute are processed only once;

Put them in a message queue;

Since there is no unique index check, query the cache first, and go to the database only if nothing is found;

The two requests are a concurrency problem:

Threads, distributed approaches, locking mechanisms, a Redis cache, queues, and so on can all handle it; explain in detail according to the scenario.
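The "process only once within 1 minute" interception can be sketched on a single node as follows. The class name and the request key format are hypothetical; in a cluster the same idea is usually implemented with a shared store (for example a Redis key with an expiration) instead of a local map.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

class RequestDeduplicator {
    private final ConcurrentMap<String, Long> lastAccepted = new ConcurrentHashMap<>();
    private final long windowMillis;

    RequestDeduplicator(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    /** Returns true if this request should be processed, false if it repeats one inside the window. */
    boolean tryAccept(String requestKey, long nowMillis) {
        boolean[] accepted = {false};
        // compute() runs atomically per key, so two concurrent clicks cannot both be accepted
        lastAccepted.compute(requestKey, (key, previous) -> {
            if (previous == null || nowMillis - previous >= windowMillis) {
                accepted[0] = true;
                return nowMillis;     // start a new window
            }
            return previous;          // still inside the window: keep the old timestamp
        });
        return accepted[0];
    }
}
```

A caller would build the key from something that identifies the operation, such as `"register:" + phoneNumber`, and pass `System.currentTimeMillis()` as the timestamp. Note that this sketch never evicts old keys; a real deployment would need expiry.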

9、 Have you read the Spring source code? What is the injection process of a bean?

This is where our fundamentals are tested.

First create a bean class. The @Configuration and @ComponentScan annotations are required, and if you use a prefix you also need the @ConfigurationProperties annotation;

Then inject the properties in the configuration file, such as application.properties.

10、 In Spring Boot, how do you register a bean you wrote in the IoC container?

The first way: add @Service, @Component, or a similar annotation to the bean class to be injected

The second way: use the @Configuration and @Bean annotations to register the bean in the IoC container

The third way: use the @Import annotation

The fourth way: use Spring Boot's auto-configuration mechanism

11、 How does MyBatis prevent SQL injection? Why does # prevent SQL injection?

#{} is precompiled and is safe; ${} is not precompiled, it just substitutes the variable's value into the SQL text, so it is not safe and is open to SQL injection;

Under the hood, #{} is bound through a JDBC PreparedStatement.
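The difference between the two forms is easiest to see on the SQL text itself. The sketch below does not use MyBatis or a database; the class name, table, and column are hypothetical, and it only contrasts "value pasted into the statement" with "fixed statement plus separate parameter".

```java
import java.util.Collections;
import java.util.List;

class SqlBindingDemo {
    /** ${}-style: the value is pasted into the SQL text, so it can change the statement's structure. */
    static String substitute(String name) {
        return "SELECT * FROM users WHERE name = '" + name + "'";
    }

    /** #{}-style: the SQL text is fixed and contains only a placeholder. */
    static final String PLACEHOLDER_SQL = "SELECT * FROM users WHERE name = ?";

    /** The value travels separately and is only ever treated as data. */
    static List<String> parameters(String name) {
        return Collections.singletonList(name);
    }
}
```

Calling `SqlBindingDemo.substitute("x' OR '1'='1")` produces a statement whose WHERE clause now contains an extra OR condition, whereas `PLACEHOLDER_SQL` never changes. With plain JDBC the parameter would be supplied through `PreparedStatement.setString(1, name)`.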

12、 How does Vue work with the back-end interface documentation?

This tests your understanding of Vue and of front-end/back-end interaction. You can answer in terms of some common Vue usage.

The above lists some ways of passing parameters, for reference.

13、 Have you used AOP? How do you record the time spent by each interface?

First, create a class, then add two annotations above the class name:

@Component @Aspect

The @Component annotation lets Spring manage this class as a bean, and the @Aspect annotation marks the class as an aspect.

The annotations on the methods in the class mean the following:

@Pointcut: defines the matching rules for the aspect. To match more than one rule at the same time, use || to join the rules.

@Before: called before the target method executes

@After: called after the target method executes

@AfterReturning: called after the target method executes; it can access the return value. In the ordering described in this article, it runs after @After

@AfterThrowing: called when the target method throws an exception

@Around: calls the actual target method. You can do something before calling the target method and also after it returns. Typical use cases are transaction management, access control, log printing, performance analysis, and so on.

Those are the meanings and roles of each annotation. The two key ones are @Pointcut and @Around: @Pointcut specifies the aspect rules and decides where the aspect applies; @Around actually calls the target method, so we can do processing before and after the call, such as transactions, permissions, and logging.

Note the order in which these methods execute:

Before the target method executes: around is entered first, then before. After the target method executes: around is entered first, then after, and finally afterReturning. This is the order in older Spring versions; since Spring Framework 5.2.7, @AfterReturning runs before @After within the same aspect.

In addition, to use Spring AOP you need to add the following line to the Spring configuration to enable AOP:

<aop:aspectj-autoproxy/>

You also need to add the dependent JARs in Maven:

    <dependency>
        <groupId>org.aspectj</groupId>
        <artifactId>aspectjrt</artifactId>
        <version>1.6.12</version>
    </dependency>
    <dependency>
        <groupId>org.aspectj</groupId>
        <artifactId>aspectjweaver</artifactId>
        <version>1.6.12</version>
    </dependency>

To sum up, Spring AOP uses dynamic proxies to handle the aspect layer uniformly. There are two kinds of dynamic proxy: JDK dynamic proxies and CGLIB dynamic proxies. JDK dynamic proxies are interface-based; CGLIB dynamic proxies are subclass-based. Spring uses JDK dynamic proxies by default, and automatically switches to CGLIB when the target has no interface.
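To make the proxy mechanism concrete, here is a minimal sketch of timing every call on an interface with a plain JDK dynamic proxy, with no Spring involved. The names `TimingProxy`, `UserService`, and `lastElapsedNanos` are hypothetical. In Spring the same effect would normally come from an @Around advice that wraps `ProceedingJoinPoint.proceed()`.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class TimingProxy {
    /** Hypothetical service interface used only for this sketch. */
    interface UserService {
        String findName(int id);
    }

    /** Elapsed nanoseconds of the most recent call, keyed by method name. */
    static final Map<String, Long> lastElapsedNanos = new ConcurrentHashMap<>();

    /** Wraps target in a JDK dynamic proxy that records how long each interface call takes. */
    @SuppressWarnings("unchecked")
    static <T> T timed(T target, Class<T> iface) {
        InvocationHandler handler = (proxy, method, args) -> {
            long start = System.nanoTime();                  // the "before" half of an around advice
            try {
                return method.invoke(target, args);          // call the real target method
            } catch (InvocationTargetException e) {
                throw e.getCause();                          // rethrow the target's own exception
            } finally {
                // the "after" half: runs whether the call returned or threw
                lastElapsedNanos.put(method.getName(), System.nanoTime() - start);
            }
        };
        return (T) Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface}, handler);
    }
}
```

Usage: `TimingProxy.UserService service = TimingProxy.timed(id -> "user-" + id, TimingProxy.UserService.class);` followed by `service.findName(7)` leaves the elapsed time under the key `"findName"`. Because the proxy is built from an interface, this corresponds to the JDK (interface-based) case described above, not the CGLIB one.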

14、 Do you understand design patterns? Which ones have you used?

Singleton, factory, proxy, decorator, and so on. Explain them with reference to how they were actually applied in your project.

15、 How does a MyBatis call reach the mapper?

MyBatis loads the core configuration file, mybatis.xml, through Resources, then parses it with XMLConfigBuilder. The parsed result is placed in a Configuration object, which is passed as a parameter to the build() method, and build() returns a DefaultSqlSessionFactory. We then call openSession() to get a SqlSession; building the SqlSession also requires a Transaction and an Executor for subsequent operations.

16、 Are transactions used in the code? Annotation-based or programmatic?

They are usually implemented with annotations. See yesterday's interview questions for details.

17、 Is the project online?

Traditional projects usually cannot be viewed directly; prepare some screenshots, bring them to the interview, and give a PPT demonstration;

For Internet projects, have the project ready on your phone and walk through it directly during the interview.

One point here: prepare for the interview in advance and know what you are going to talk about.

Consider these points: what it is, why, how you did it, and what you gained.

边栏推荐

- Window function row in SQL Server_ number()rank()dense_ rank()

- math_等比数列求和推导&等幂和差推导/两个n次方数之差/

- The record of 1300+ times of listing and the pursuit of ultimate happiness

- Functions of document management what functions does the document management software have

- Go basic series | 4 Environment construction (Supplement) - gomod doubts

- splice()方法的使用介绍

- RPM installation percona5.7.34

- [IEEE] International Conference on naturallanguageprocessing and information retrieval (ecnlpir 2022)

- Act as you like

- Ppt drawing related, shortcut keys, aesthetics

猜你喜欢

Plant growth H5 animation JS special effect

Moving Tencent to the cloud cured their technical anxiety

Fashionable pop-up mode login registration window

喜欢就去行动

初识string+简单用法(一)

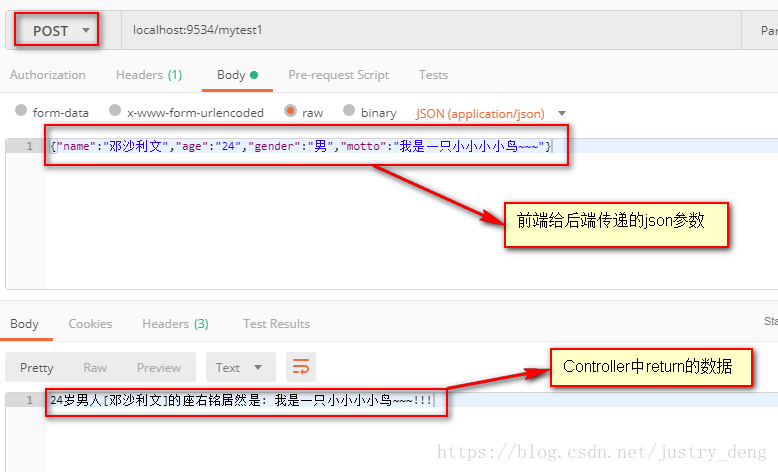

@RequestBody注解

Window function row in SQL Server_ number()rank()dense_ rank()

Quick completion guide for manipulator (III): mechanical structure of manipulator

Cool interactive animation JS special effects implemented by p5.js

Today in history: Turing's birth day; The birth of the founder of the Internet; Reddit goes online

随机推荐

How to improve the quality of Baidu keyword?

Nxshell session management supports import and export

Self service troubleshooting guide for redis connection login problems

Centripetalnet: more reasonable corner matching, improved cornernet | CVPR 2020 in many aspects

Tke deployment kubord

Five methods of JS array summation

08. Tencent cloud IOT device side learning - device shadow and attributes

Extremenet: target detection through poles, more detailed target area | CVPR 2019

Learn to use the kindeditor rich text editor. Click to upload a picture. The mask is too large or the white screen solution

[Qianfan 618 countdown!] IAAs operation and maintenance special preferential activities

How to open a video number?

Maui's way of learning -- Opening

Smart energy: scenario application of intelligent security monitoring technology easycvr in the petroleum energy industry

Charles packet capturing tool tutorial

程序员在技术之外,还要掌握一个技能——自我营销能力

Canvas falling ball gravity JS special effect animation

[net action!] Cos data escort helps SMEs avoid content security risks!

Tencent geek challenge small - endless!

Solve the timeout of Phoenix query of dbeaver SQL client connection

Svg+js drag slider round progress bar