当前位置:网站首页>Introduction to Flink operator

Introduction to Flink operator

2022-07-27 19:36:00 【Lin Musen^~^】

Source Operator

Flink Of API Hierarchy It's streaming / The development of batch applications provides different levels of abstraction

The first layer is the lowest abstraction, which is stateful real-time stream processing , Abstract implementation is Process Function, Used for bottom processing

The second level of abstraction is Core APIs, Many applications don't need to use the lowest level abstraction mentioned above API, But use Core APIs Development

- For example, various forms of user-defined transformation (transformations)、 join (joins)、 polymerization (aggregations)、 window (windows) And status (state) Operation etc. , This layer API Each programming language has its own class for the data types processed in .

The third level of abstraction is Table API. It's a table Table Declarative programming centered on API,Table API It's very simple to use, but its expression ability is poor

- Similar to the operations in the relational model in the database , such as select、project、join、group-by and aggregate etc.

- Allows users to write applications with Table API And DataStream/DataSet API A mixture of

The top abstraction of the fourth layer is SQL, This layer of program expression is similar to Table API, But its program implementation is SQL Query expression

- SQL Abstract and Table API The relationship between abstractions is very close

Be careful :Table and SQL There are many changes , It's still developing , Just know roughly , The core is the first and second layers

- Flink Programming model

Source source

Element set

- env.fromElements

- env.fromColletion

- env.fromSequence(start,end);

file / file system

- env.readTextFile( Local files );

- env.readTextFile(HDFS file );

be based on Socket

- env.socketTextStream(“ip”, 8888)

Customize Source, Implement interface custom data source ,rich dependent api Richer

- The degree of parallelism is 1

- SourceFunction

- RichSourceFunction

- Parallelism is greater than 1

- ParallelSourceFunction

- RichParallelSourceFunction

- The degree of parallelism is 1

Connectors Docking with third-party systems ( be used for source perhaps sink Fine )

- Flink Provided by itself Connector for example kafka、RabbitMQ、ES etc.

- Be careful :Flink The program must be packaged with the corresponding connetor Related classes are packed in , Otherwise it will fail

Apache Bahir The connector

- There are also kafka、RabbitMQ、ES More connectors

Sink Operator

- Sink output source

- predefined

- writeAsText ( Be overdue )

- Customize

- SinkFunction

- RichSinkFunction

- Rich dependent api Richer , More Open、Close Method , Used to initialize connections, etc

- flink Official supply Bundle Connector

- kafka、ES etc.

- Apache Bahir

- kafka、ES、Redis etc.

- predefined

Transformation

Map and FlatMap

KeyBy

filter Filter

sum

reduce function

sum

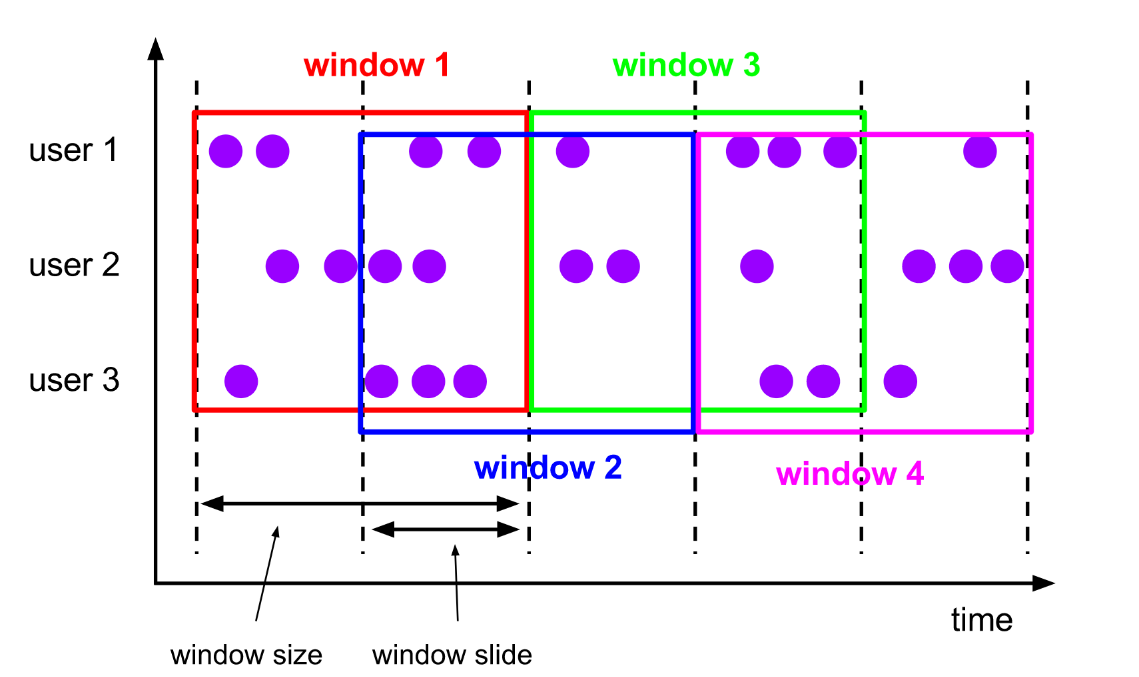

Window slide

- background

- Data flow is always generated , Business needs to aggregate statistics , Like every 10 Seconds count the past 5 Minutes of hits 、 Turnover, etc

- Windows You can split an infinite data stream into a finite size “ bucket buckets”, Then the program can calculate the data in its window

- The window thinks Bucket bucket , A window segment is a bucket , such as 8 To 9 The point is a bucket ,9 To 10 The point is a bucket

- classification

- time Window Time window , That is, according to certain time rules, it is used as window statistics

- time-tumbling-window Time scroll window ( With the more )

- time-sliding-window Time slide window ( With the more )

- session WIndow Session window , That is, statistics of data in a session , Relatively little use

- count Window Number window , That is, according to a certain amount of data as a window statistics , Relatively little use

- time Window Time window , That is, according to certain time rules, it is used as window statistics

Window properties

- The sliding window Sliding Windows

- The window has a fixed size

- Window data overlaps

- Example : Every time 10s The latest statistics 1min Number of orders in

Scroll the window Tumbling Windows

- The window has a fixed size

- Window data does not overlap

- Example : Every time 10s The latest statistics 10s Number of orders in

- Window size size and Sliding interval slide

- tumbling-window: Scroll the window : size=slide, Such as : every other 10s Count the recent 10s The data of

- sliding-window: The sliding window : size>slide, Such as : every other 5s Count the recent 10s The data of

- size<slide When , Every time 15s Count the recent 10s The data of , So in the middle 5s 's data will be lost , So there is no need to

Flink The state of State management

- What is? State state

- Data flow processing is inseparable from state management , For example, window aggregation Statistics 、 duplicate removal 、 Sort, etc

- It's a Operator The running state of / Historical value , Is maintained in memory

- technological process : The subtask of an operator receives the input stream , Get the corresponding state , Calculate new results , Then update the results to the status

Stateful and stateless Introduction

- Stateless Computing : How many times does the same data enter the operator , All the same output , such as filter

- Stateful computation : Historical status needs to be considered , The same input will have different outputs , such as sum、reduce Aggregation operation

State management classification

- ManagedState( With the more )

- Flink management , Automatic storage recovery

- Subdivide into two categories

- Keyed State Keying state ( With the more )

- Yes KeyBy Just use this , Only for KeyStream in , Every key There are state , Is based on KeyedStream Upper state

- General is to use richFlatFunction, Or other richfunction Inside , stay open() Initialization in the declaration cycle

- ValueState、ListState、MapState And so on

- Operator State Operator state ( Less use , part source Will use )

- ListState、UnionListState、BroadcastState And so on

- Keyed State Keying state ( With the more )

- RawState( Less use )

- Users manage and maintain

- Storage structure : Binary array

- ManagedState( With the more )

State data structure ( Status values may exist in memory 、 disk 、DB Or other distributed storage )

- ValueState Simply store a value (ThreadLocal / String)

- ValueState.value()

- ValueState.update(T value)

- ListState list

- ListState.add(T value)

- ListState.get() // Get one Iterator

- MapState Mapping type

- MapState.get(key)

- MapState.put(key, value)

- ValueState Simply store a value (ThreadLocal / String)

Flink Of Checkpoint-SavePoint And end to end (end-to-end) State consistency

What is? Checkpoint checkpoint

- Flink All of the Operator The current State Global snapshot of

- By default checkpoint Is disabled

- Checkpoint It's a State Data is stored regularly and persistently , To prevent loss

- Call by hand checkpoint, It's called savepoint, Mainly for flink Cluster maintenance and upgrading

- The bottom layer uses Chandy-Lamport Distributed snapshot algorithm , Ensure the consistency of data in distributed environment

Open the box ,Flink Bundled with these checkpoint storage types :

- Job manager checkpoint storage JobManagerCheckpointStorage

- File system checkpoint storage FileSystemCheckpointStorage

Savepoint And Checkpoint The difference

- Similar to the difference between backup and recovery logs in traditional databases

- Checkpoint The main purpose of is to provide information for jobs that fail unexpectedly 【 Restart the recovery mechanism 】,

- Checkpoint The life cycle of Flink management , namely Flink establish , Manage and delete Checkpoint - No user interaction required

- Savepoint Created by the user , Own and delete , Mainly 【 upgrade Flink edition 】, Adjust user logic

- Remove conceptual differences ,Checkpoint and Savepoint The current implementation of basically uses the same code and generates the same format

边栏推荐

- 2022 Ningde Vocational College Teachers' practical teaching ability improvement training - network construction and management

- S32K系列芯片--简介

- C language: C language code style

- c语言:9、main函数中的return

- Basic use of Nacos (1) - getting started

- HDU1323_ Perfection [water question]

- SQL time processing (SQL server\oracle)

- Kettle references external scripts to complete phone number cleaning, de duplication and indentation

- Original pw4203 step-down 1-3 lithium battery charging chip

- 汉字查拼音微信小程序项目源码

猜你喜欢

The first entry-level operation of kettle (reading excel, outputting Excel)

v-if,v-else,v-for

Daily question (02): inverted string

Error analysis of building Alibaba cloud +typera+picgo map bed

kettle switch / case 控件实现分类处理

mysql学习笔记(1)——变量

c语言:12、gdb工具调试c程序

Using vscode to build u-boot development environment

C语言案例:密码设置及登录> 明解getchar与scanf

Analysis of Eureka server

随机推荐

Summary of "performance test" of special test

C language: 14. Preprocessing

C language: 11. Pipeline

ipfs通过接口获得公钥、私钥,并加密存储。第一弹

我想咨询下,我们的maxcompute spark程序需要访问redis,开发环境和生产环境redi

27、golang基础-互斥锁、读写锁

每日一题(02):倒置字符串

正十七边形尺规作图可解性复数证明

ES6 new method

C language: 13. Pointer and memory

Use fastjson JSON (simple and rough version)

go-zero单体服务使用泛型简化注册Handler路由

idea优化小攻略

kettle 合并记录 数据减少

kettle8.2 安装及常见问题

New system installation mysql+sqlyog

Chinese character search Pinyin wechat applet project source code

Down sampling - signal phase and aliasing

Idea optimization strategy

Browser rendering principle analysis suggestions collection