Analysis of Istio -- Observability

2022-06-29 14:21:00 【Cloud native community】

Preface

The term observability originated in control theory decades ago, where it denotes the extent to which the internal state of a system can be inferred from its external outputs. In recent years, with the rise of microservices, containerization, and serverless, the way applications are built, deployed, and operated has changed significantly. Service call chains have become more complex, systems more distributed, and runtime environments containerized, all of which make observability important in cloud native systems. Observability is usually divided into three parts: Metrics, Tracing, and Logging.

Logging is a text record of events that occurred at a specific time. Each entry includes a timestamp indicating when the event occurred and a payload that provides context. Metrics are produced by aggregating data to measure behavior over a period of time; metric data is cumulative, so it shows the state and trend of the system. Tracing is request-oriented: it represents the end-to-end journey of a request through a distributed system and helps analyze the cause of anomalies or failures in that request.

Istio generates detailed telemetry for all service communication in the mesh. This telemetry gives Istio observability into service behavior, so operators can troubleshoot, maintain, and optimize applications without placing any additional burden on service developers. Before Istio 1.7, installing Istio also installed observability add-ons such as Kiali, Prometheus, and Jaeger by default. In Istio 1.7 and later, these add-ons are no longer installed by default, and you need to install them manually with the following command:

    kubectl apply -f samples/addons

Prometheus scrape configuration for Istio

Istio uses Prometheus to collect metrics. Data plane (Envoy) metrics are exposed on port 15020 at the path /stats/prometheus. Control plane (istiod) metrics are exposed on port 15014 at the path /metrics. Istio adds annotations to pods with an injected Envoy and to istiod, and Prometheus uses these annotations for service discovery, so that it scrapes metrics in real time from services with an injected Envoy sidecar as well as from istiod.

When Envoy is injected into a service, the following annotations are automatically added to the pod. This feature is on by default and can be disabled with --set meshConfig.enablePrometheusMerge=false:

    prometheus.io/path: /metrics
    prometheus.io/port: "9100"
    prometheus.io/scrape: "true"

When istiod is created, the following annotations are also added to the istiod pod:

    prometheus.io/port: "15014"
    prometheus.io/scrape: "true"

Prometheus uses kubernetes_sd_configs to configure service discovery for Istio metrics.

    kubernetes_sd_configs:
    - role: pod
    relabel_configs:
    - action: keep
      regex: true
      source_labels:
      - __meta_kubernetes_pod_annotation_prometheus_io_scrape
    - action: replace
      regex: (https?)
      source_labels:
      - __meta_kubernetes_pod_annotation_prometheus_io_scheme
      target_label: __scheme__
    - action: replace
      regex: (.+)
      source_labels:
      - __meta_kubernetes_pod_annotation_prometheus_io_path
      target_label: __metrics_path__
    - action: replace
      regex: ([^:]+)(?::\d+)?;(\d+)
      replacement: $1:$2
      source_labels:
      - __address__
      - __meta_kubernetes_pod_annotation_prometheus_io_port
      target_label: __address__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      source_labels:
      - __meta_kubernetes_namespace
      target_label: kubernetes_namespace
    - action: replace
      source_labels:
      - __meta_kubernetes_pod_name
      target_label: kubernetes_pod_name
    - action: drop
      regex: Pending|Succeeded|Failed|Completed
      source_labels:
      - __meta_kubernetes_pod_phase

Labels prefixed with __meta_kubernetes_pod_annotation hold the values of annotations on the pod and correspond to the annotations on the Istio components. Prometheus itself listens on port 9090 and scrapes the /metrics path by default, which differs from the port and path on which Istio exposes its metrics. relabel_configs therefore rewrites the target address and path to the port and path that Istio actually exposes. The configuration also keeps the original labels of the pod and filters targets by pod phase.

In addition, when Prometheus is deployed independently, you can configure it by following the example above.
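The rule that rewrites __address__ in the scrape configuration is the least obvious one, so here is a minimal Python sketch of what it does. It assumes Prometheus semantics as commonly documented: source label values are joined with ";" and the regex is anchored at both ends. The function name relabel_address and the sample addresses are hypothetical and only serve the illustration.

```python
import re

# The address rule from the scrape configuration. Prometheus anchors relabel
# regexes at both ends, so re.fullmatch is the closest Python analogue.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_address(address: str, port_annotation: str) -> str:
    """Return the scrape address after applying the __address__ rule.

    The source label values are joined with ';' before matching.
    If the regex does not match, the address is left unchanged.
    """
    joined = f"{address};{port_annotation}"
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        return address
    # Equivalent of the replacement "$1:$2": host from __address__,
    # port from the prometheus.io/port annotation.
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    print(relabel_address("10.244.1.7:9080", "15020"))
    print(relabel_address("10.244.1.7", "15014"))
```

The host part of the discovered address is kept and any existing port is replaced by the annotated one. A pod without a port annotation produces no match and keeps its discovered address.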

Customizing Metrics

In practice, the metrics that Istio provides out of the box may not meet every need. When existing metrics need to be modified or new metrics need to be added, Istio supports custom metrics.

Istio uses EnvoyFilter to customize the Envoy configuration generated by Istio Pilot. With EnvoyFilter you can modify the values of certain fields and add specific filters, listeners, and so on. A namespace can contain more than one EnvoyFilter, and all of them are processed in the order they were created. EnvoyFilter patches can be scoped to three contexts: inbound (SIDECAR_INBOUND), outbound (SIDECAR_OUTBOUND), and gateway (GATEWAY).

The following example adds two dimensions, request.host and destination.port, to the istio_requests_total metric. For the reviews service in bookinfo, it also adds a request_operation dimension derived from the request.

First, create an EnvoyFilter that sets istio_operationId to one of three values, ListReviews, GetReview, or CreateReview, based on the path and method of requests to the reviews service in bookinfo. workloadSelector selects the group of Pods/VMs to which the patch is applied; if it is omitted, the patch applies to all workloads in the same namespace. configPatches defines one or more patches with match conditions.
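Before the manifest itself, the classification it performs can be sketched in plain Python. This is an illustration only, not how the attributegen plugin is implemented. It assumes that the first matching rule wins, and it writes the POSIX class [[:alnum:]] as [A-Za-z0-9] because Python's re module has no POSIX character classes. The function name classify_operation is hypothetical.

```python
import re
from typing import Optional

# Same pattern as the GetReview condition, with [[:alnum:]] spelled out.
GET_REVIEW_PATH = re.compile(r"^/reviews/[A-Za-z0-9]*$")


def classify_operation(url_path: str, method: str) -> Optional[str]:
    """Return the istio_operationId value for a request, or None if no rule matches."""
    rules = [
        ("ListReviews", url_path == "/reviews" and method == "GET"),
        ("GetReview", bool(GET_REVIEW_PATH.search(url_path)) and method == "GET"),
        ("CreateReview", url_path == "/reviews/" and method == "POST"),
    ]
    # Rules are checked in order; the first one that matches decides the value.
    for value, matched in rules:
        if matched:
            return value
    return None


if __name__ == "__main__":
    for path, method in [("/reviews", "GET"), ("/reviews/42", "GET"), ("/reviews/", "POST")]:
        print(method, path, classify_operation(path, method))
```

Requests that match none of the rules get no operation value, which is why the resulting dimension can be empty for traffic outside the reviews API.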

    apiVersion: networking.istio.io/v1alpha3
    kind: EnvoyFilter
    metadata:
      name: istio-attributegen-filter
    spec:
      workloadSelector:
        labels:
          app: reviews
      configPatches:
      - applyTo: HTTP_FILTER
        match:
          context: SIDECAR_INBOUND
          proxy:
            proxyVersion: '1\.12.*'
          listener:
            filterChain:
              filter:
                name: "envoy.http_connection_manager"
                subFilter:
                  name: "istio.stats"
        patch:
          operation: INSERT_BEFORE
          value:
            name: istio.attributegen
            typed_config:
              "@type": type.googleapis.com/udpa.type.v1.TypedStruct
              type_url: type.googleapis.com/envoy.extensions.filters.http.wasm.v3.Wasm
              value:
                config:
                  configuration:
                    "@type": type.googleapis.com/google.protobuf.StringValue
                    value: |
                      {
                        "attributes": [
                          {
                            "output_attribute": "istio_operationId",
                            "match": [
                              {
                                "value": "ListReviews",
                                "condition": "request.url_path == '/reviews' && request.method == 'GET'"
                              },
                              {
                                "value": "GetReview",
                                "condition": "request.url_path.matches('^/reviews/[[:alnum:]]*$') && request.method == 'GET'"
                              },
                              {
                                "value": "CreateReview",
                                "condition": "request.url_path == '/reviews/' && request.method == 'POST'"
                              }
                            ]
                          }
                        ]
                      }
                  vm_config:
                    runtime: envoy.wasm.runtime.null
                    code:
                      local: { inline_string: "envoy.wasm.attributegen" }

Then add the following configuration to stats-filter-1.12.yaml (this example uses Istio 1.12). It adds three dimensions, request_operation, request_host, and destination_port, to the requests_total metric.

    name: istio.stats
    typed_config:
      '@type': type.googleapis.com/udpa.type.v1.TypedStruct
      type_url: type.googleapis.com/envoy.extensions.filters.http.wasm.v3.Wasm
      value:
        config:
          configuration:
            "@type": type.googleapis.com/google.protobuf.StringValue
            value: |
              {
                "metrics": [
                  {
                    "name": "requests_total",
                    "dimensions": {
                      "request_operation": "istio_operationId",
                      "destination_port": "string(destination.port)",
                      "request_host": "request.host"
                    }
                  }
                ]
              }

Finally, enable extraStatTags in meshConfig and list the new dimensions destination_port, request_host, and request_operation.

    apiVersion: v1
    data:
      mesh: |-
        defaultConfig:
          extraStatTags:
          - destination_port
          - request_host
          - request_operation

Querying istio_requests_total now shows the following metric dimensions, which indicates that the configuration succeeded.

    istio_requests_total{app="reviews",destination_port="9080",request_host="reviews:9080",request_operation="GetReview",......}

Prometheus Federation support for Istio multi-cluster

In an Istio multi-cluster scenario, each cluster deploys its own Prometheus to collect its own metrics. When metrics from several clusters need to be aggregated behind a single Prometheus address, Prometheus Federation is used to aggregate the multi-cluster data into one independent Prometheus instance.
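Federation works by having the aggregating instance scrape the /federate endpoint of each source instance, passing one or more match[] selectors. As a rough sketch, the request URL can be built as follows; the job name kubernetes-pods and the function name federate_url are assumptions for illustration, and the host is taken from the example targets used in this article.

```python
from typing import List
from urllib.parse import parse_qs, urlencode, urlsplit


def federate_url(source: str, selectors: List[str]) -> str:
    """Build the URL an aggregating Prometheus would scrape on one source instance."""
    # doseq=True emits one match[] parameter per selector.
    query = urlencode({"match[]": selectors}, doseq=True)
    return f"http://{source}/federate?{query}"


if __name__ == "__main__":
    url = federate_url("source-prometheus-1:9090", ['{job="kubernetes-pods"}'])
    print(url)
    # Decoding the query string shows the selector that the source instance receives.
    print(parse_qs(urlsplit(url).query))
```

Only series matching at least one selector are returned, so the selectors decide how much data is copied into the aggregating instance.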

Add a configuration similar to the following to the aggregating Prometheus instance:

    scrape_configs:
    - job_name: 'federate'
      scrape_interval: 15s
      honor_labels: true
      metrics_path: '/federate'
      params:
        'match[]':
        - '{job="<job_name>"}'
      static_configs:
      - targets:
        - 'source-prometheus-1:9090'
        - 'source-prometheus-2:9090'
        - 'source-prometheus-3:9090'

Tracing

The distributed tracing tools recommended by Istio, Jaeger and Zipkin, both implement the OpenTracing specification. Distributed tracing has two important concepts, Trace and Span:

- Span: the basic building block of distributed tracing. A span represents a single unit of work in a distributed system, and each span can contain references to other spans. Multiple spans together make up a trace.

- Trace: the complete execution of a request as recorded across microservices. A complete trace consists of one or more spans.
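The relationship between the two concepts can be sketched as a small data structure. This is a simplified model for illustration, not the data model of Jaeger or Zipkin; the class and function names and the sample service names are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Span:
    trace_id: str
    span_id: str
    parent_span_id: Optional[str]  # None marks the root span of a trace
    operation: str


def group_by_trace(spans: List[Span]) -> Dict[str, List[Span]]:
    """Group spans into traces keyed by trace_id."""
    traces: Dict[str, List[Span]] = {}
    for span in spans:
        traces.setdefault(span.trace_id, []).append(span)
    return traces


def children_of(trace: List[Span], parent_id: Optional[str]) -> List[Span]:
    """Return the spans whose parent is parent_id (None returns the root spans)."""
    return [s for s in trace if s.parent_span_id == parent_id]


if __name__ == "__main__":
    spans = [
        Span("t1", "a", None, "productpage"),
        Span("t1", "b", "a", "reviews"),
        Span("t1", "c", "b", "ratings"),
    ]
    trace = group_by_trace(spans)["t1"]
    print([s.operation for s in children_of(trace, None)])
```

Spans that share a trace ID belong to the same trace, and the parent reference is what lets a tracing backend rebuild the call tree.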

The Istio proxy can send spans automatically, but the application needs to propagate the following HTTP request headers so that multiple spans are correctly linked to the same trace.

- x-request-id

- x-b3-traceid

- x-b3-spanid

- x-b3-parentspanid

- x-b3-sampled

- x-b3-flags

- x-ot-span-context
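In application code, propagation usually means copying these headers from the incoming request onto every outgoing request made while handling it. A minimal sketch, assuming headers are available as a plain mapping (the function name trace_headers_to_forward is hypothetical):

```python
from typing import Dict, Mapping

# The headers listed above, in lowercase because HTTP header names are case-insensitive.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
)


def trace_headers_to_forward(incoming: Mapping[str, str]) -> Dict[str, str]:
    """Pick the tracing headers out of an incoming request, case-insensitively."""
    lowered = {name.lower(): value for name, value in incoming.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}


if __name__ == "__main__":
    incoming = {"X-Request-Id": "abc", "X-B3-TraceId": "80f198ee56343ba8", "Accept": "text/html"}
    print(trace_headers_to_forward(incoming))
```

The returned dictionary can be merged into the headers of each downstream call. Headers that are absent from the incoming request are simply not forwarded.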

Custom Tracing configuration

Istio can apply a different tracing configuration to each pod. Adding an annotation named proxy.istio.io/config to a pod sets that pod's trace sampling rate, custom tags, and so on.

    metadata:
      annotations:
        proxy.istio.io/config: |
          tracing:
            sampling: 10
            custom_tags:
              my_tag_header:
                header:
                  name: host

A global tracing configuration can also be set through meshConfig.

    apiVersion: v1
    data:
      mesh: |-
        defaultConfig:
          tracing:
            sampling: 1.0
            max_path_tag_length: 256
            custom_tags:
              clusterID:
                environment:
                  name: ISTIO_META_CLUSTER_ID

Note that workloads must be restarted for the configuration above to take effect. The configuration is applied at the moment Istio injects the sidecar, and for Envoy the tracing configuration is part of the bootstrap configuration, which cannot be changed online.
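The sampling value is a percentage between 0.0 and 100.0, so sampling: 1.0 traces roughly 1% of requests and sampling: 10 roughly 10%. The sketch below only illustrates that semantics with a random draw; it is not how Envoy makes the decision internally, and both function names are hypothetical.

```python
import random


def is_sampled(sampling_percent: float, draw: float) -> bool:
    """Decide whether a request is traced.

    sampling_percent is a percentage in the range 0.0 to 100.0;
    draw is a number in [0, 100), normally produced at random.
    """
    return draw < sampling_percent


def estimate_traced(requests: int, sampling_percent: float, seed: int = 0) -> int:
    """Count how many of `requests` simulated requests would be traced."""
    rng = random.Random(seed)
    return sum(is_sampled(sampling_percent, rng.random() * 100) for _ in range(requests))


if __name__ == "__main__":
    # With sampling: 10, about one request in ten is traced.
    print(estimate_traced(10_000, 10))
```

A low percentage keeps tracing overhead small in production, while 100 is convenient when debugging a single workload through the pod annotation.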

Logging

Istio can observe the traffic between services in the mesh and generate detailed telemetry logs. Istio enables the Envoy access log through meshConfig.accessLogFile=/dev/stdout, which writes the log to standard output. In addition, accessLogEncoding and accessLogFormat can be used to set the log format.

Envoy logs can be viewed with the kubectl logs command, but once a pod is destroyed its old logs no longer exist. To view historical log data, use tools such as EFK or Loki to persist the logs.
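When accessLogEncoding is set to JSON, each access log line is a JSON object and can be filtered with a few lines of code. The sketch below assumes a response_code field holding an integer; the actual field names depend on accessLogFormat, so treat the field name, the function name, and the sample lines as assumptions.

```python
import json
from typing import Iterable, List


def failed_requests(log_lines: Iterable[str], min_status: int = 500) -> List[dict]:
    """Return parsed access-log entries whose response code is at least min_status."""
    failures = []
    for line in log_lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip non-JSON lines such as proxy startup messages
        if not isinstance(entry, dict):
            continue
        code = entry.get("response_code")  # assumed field name
        if isinstance(code, int) and code >= min_status:
            failures.append(entry)
    return failures


if __name__ == "__main__":
    sample = [
        '{"method": "GET", "path": "/reviews/1", "response_code": 200}',
        '{"method": "GET", "path": "/reviews/2", "response_code": 503}',
        "starting proxy",
    ]
    print(failed_requests(sample))
```

The same function can be fed from the output of kubectl logs for the istio-proxy container or from a log store that persists the lines.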

Istio visualization

Kiali is a visualization tool for the Istio service mesh. It provides a service topology graph, distributed tracing, metrics telemetry, configuration validation, health checks, and other features. Kiali needs Prometheus to supply its metrics, and it can also be configured with Jaeger and Grafana for distributed tracing and monitoring data visualization.

By default, Kiali uses prometheus.istio-system:9090 as its Prometheus data source. When Prometheus is deployed independently instead of from the samples/addons provided by Istio, add the following configuration to Kiali's ConfigMap:

    spec:
      external_services:
        prometheus:
          url: <actual Prometheus address>

In addition, to add distributed tracing and monitoring data visualization to Kiali, configure the actual addresses of Jaeger and Grafana under external_services as well.

Kiali multi-cluster support

Kiali enables Istio multi-cluster support by default. To turn this support off, use the following setting:

    spec:
      kiali_feature_flags:
        clustering:
          enabled: false

Usually, one Kiali instance is deployed alongside each istiod control plane. In Istio's multi-cluster primary-remote mode, Kiali is deployed only in the primary cluster, so when applications in the remote cluster are accessed, the corresponding traffic cannot be queried through Kiali. The Prometheus instances of the primary and remote clusters need to be federated before the traffic metrics of the remote cluster can be queried.

reference

边栏推荐

- 留给比亚迪的时间还有三年

- 微信小程序:(更新)云开发微群人脉

- 用手机在指南针上开户靠谱吗?这样炒股有没有什么安全隐患

- Persistence mechanism of redis

- Wechat applet: repair collection interface version cloud development expression package

- 微信小程序:修复采集接口版云开发表情包

- .NET程序配置文件操作(ini,cfg,config)

- [network bandwidth] Mbps & Mbps

- "Dead" Nokia makes 150billion a year

- 【VEUX开发者工具的使用-getters使用】

猜你喜欢

微信小程序:全新独家云开发微群人脉

内网穿透(nc)

![[blackduck] configure the specified Synopsys detect scan version under Jenkins](/img/85/73988e6465e8c25d6ab8547040a8fb.png)

[blackduck] configure the specified Synopsys detect scan version under Jenkins

![[Jenkins] pipeline controls the sequential execution of multiple jobs for timed continuous integration](/img/04/a650ab76397388bfb62d0dd190dbd0.png)

[Jenkins] pipeline controls the sequential execution of multiple jobs for timed continuous integration

goby全端口扫描

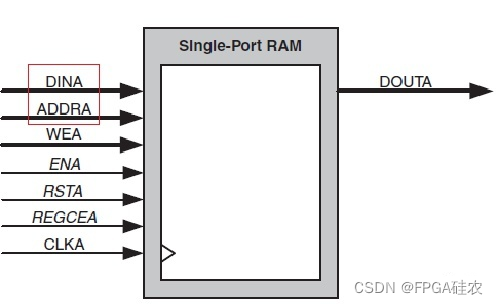

单端口RAM实现FIFO

![[use of veux developer tools - use of getters]](/img/85/7a8d0f9d0c86eb3963db280da70049.png)

[use of veux developer tools - use of getters]

Turbulent intermediary business, restless renters

【jenkins】pipeline控制多job顺序执行,进行定时持续集成

投资人跌下神坛:半年0出手,转行送外卖

随机推荐

unity吃豆人小游戏,迷宫实现

MySQL数据库:使用show profile命令分析性能

systemd调试

微信小程序:装B神器P图修改微信流量主小程序源码下载趣味恶搞图制作免服务器域名

微信小程序:云开发表白墙微信小程序源码下载免服务器和域名支持流量主收益

Turbulent intermediary business, restless renters

你还在用命令看日志?快用 Kibana 吧,一张图胜过千万行日志

微信小程序:大红喜庆版UI猜灯谜又叫猜字谜

numpy数组创建

Follow me study hcie big data mining Chapter 1 Introduction to data mining module 1

【shell】jenkins shell实现自动部署

Redis的事务机制

节点数据采集和标签信息的远程洪泛传输

delphi7中 url的编码

[important notice] the 2022 series of awards and recommendations of China graphics society were launched

Application of ansvc reactive power compensation device in a shopping mall in Hebei

Wechat applet: Halloween avatar box generation tool

I talked about exception handling for more than half an hour during the interview yesterday

Nuscenes configuration information about radar

Can Ruida futures open an account? Is it safe and reliable?