Migrate small datasets from MySQL to TiDB

2022-07-03 06:05:00 【Tianxiang shop】

This document describes how to use TiDB DM (hereinafter referred to as DM) to migrate data to TiDB in full + incremental mode. In this document, "small datasets" usually means data volumes below the TiB level.

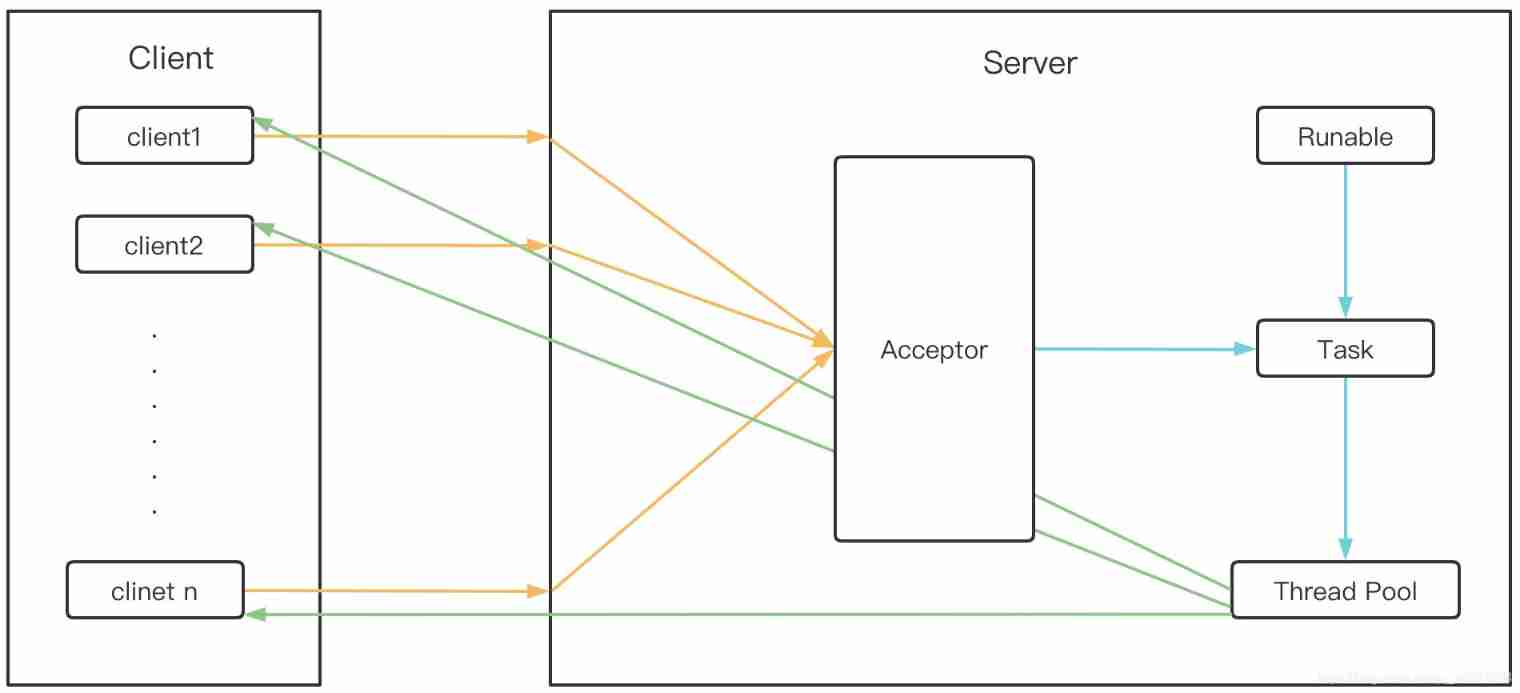

generally speaking , Receive information such as the number of table structure indexes 、 Hardware and network environment impact , Migration rate at 30~50GB/h Unequal . Use TiDB DM The migration process is shown in the following figure .
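As a rough worked example of the 30 to 50 GB/h range, the sketch below converts a data size into a best-case and worst-case duration. The function name `estimate_hours` and the 500 GB input are illustrative assumptions, and real throughput depends on the factors listed above.

```python
from typing import Tuple


def estimate_hours(data_gb: float,
                   slow_gb_per_h: float = 30.0,
                   fast_gb_per_h: float = 50.0) -> Tuple[float, float]:
    """Return (best_case_hours, worst_case_hours) for migrating data_gb."""
    if data_gb < 0:
        raise ValueError("data_gb must not be negative")
    if not 0 < slow_gb_per_h <= fast_gb_per_h:
        raise ValueError("rates must satisfy 0 < slow <= fast")
    # The fastest rate gives the shortest time, the slowest rate the longest.
    return data_gb / fast_gb_per_h, data_gb / slow_gb_per_h


best, worst = estimate_hours(500)  # hypothetical 500 GB upstream database
print(f"500 GB: roughly {best:.1f} to {worst:.1f} hours")
```

For 500 GB this works out to roughly 10 to 17 hours, which is only a planning figure and not a guarantee.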

Prerequisite

Step 1: Create the data source

First, create a file named `source1.yaml` with the following content:

    # Unique name of the data source; must not be duplicated.
    source-id: "mysql-01"

    # Whether DM-worker uses global transaction identifiers (GTID) to pull binlog.
    # This requires GTID mode to be enabled on the upstream MySQL.
    # If the upstream has automatic primary-replica failover, GTID mode is required.
    enable-gtid: true

    from:
      host: "${host}"           # For example: 172.16.10.81
      user: "root"
      password: "${password}"   # Plaintext passwords are supported but not recommended. Encrypt the password with dmctl encrypt and use the encrypted value.
      port: 3306
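The `${host}` and `${password}` placeholders in this file have to be replaced with real values before the file is loaded. The following is a minimal sketch of one way to do that with Python's standard `string.Template`, whose placeholder syntax happens to match. The helper name `render_source_config` and the sample values are assumptions for illustration, not part of DM.

```python
from string import Template

# Same fields as source1.yaml above, with comments omitted for brevity.
SOURCE_TEMPLATE = Template("""\
source-id: "mysql-01"
enable-gtid: true
from:
  host: "${host}"
  user: "root"
  password: "${password}"
  port: 3306
""")


def render_source_config(host: str, password: str) -> str:
    """Fill in ${host} and ${password}; values must not contain double quotes."""
    if '"' in host or '"' in password:
        raise ValueError("values containing double quotes would break the YAML")
    return SOURCE_TEMPLATE.substitute(host=host, password=password)


# Hypothetical values; use your own host and an encrypted password.
print(render_source_config("172.16.10.81", "<encrypted-password>"))
```

Writing the returned text to `source1.yaml` gives a file ready for the next command.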

Then, run the following command in a terminal to load the data source configuration into the DM cluster using `tiup dmctl`:

    tiup dmctl --master-addr ${advertise-addr} operate-source create source1.yaml

The parameters in this command are described as follows:

| Parameter | Description |
|---|---|
| `--master-addr` | The `${advertise-addr}` of any DM-master node in the cluster that dmctl connects to, for example: 172.16.10.71:8261 |
| `operate-source create` | Loads the data source into the DM cluster |
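If you drive these commands from a script, building the argument list explicitly avoids shell quoting problems. The sketch below assembles the same command line as above. The helper name `dmctl_argv` is an assumption, and the address is the example value from the table.

```python
import shlex
from typing import List


def dmctl_argv(master_addr: str, *args: str) -> List[str]:
    """Build the argument list for `tiup dmctl --master-addr <addr> <args...>`."""
    if not master_addr:
        raise ValueError("master_addr must not be empty")
    return ["tiup", "dmctl", "--master-addr", master_addr, *args]


argv = dmctl_argv("172.16.10.71:8261", "operate-source", "create", "source1.yaml")
# Show the command as it would be typed in a shell.
print(shlex.join(argv))
```

The resulting list can be passed to `subprocess.run(argv, check=True)` on a machine where TiUP is installed.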

Step 2: Create the migration task

Create a file named `task1.yaml` with the following content:

    # Task name. Multiple tasks running at the same time must not share a name.
    name: "test"
    # Task mode. Can be set to:
    # full: full data migration only
    # incremental: real-time replication via binlog
    # all: full data migration + binlog replication
    task-mode: "all"
    # Configuration of the downstream TiDB.
    target-database:
      host: "${host}"           # For example: 172.16.10.83
      port: 4000
      user: "root"
      password: "${password}"   # Plaintext passwords are supported but not recommended. Encrypt the password with dmctl encrypt and use the encrypted value.

    # Configuration of all upstream MySQL instances required by this migration task.
    mysql-instances:
      # ID of the upstream instance or replication group.
      - source-id: "mysql-01"
        # Name of the block and allow list configuration for the databases or tables to migrate.
        # It references the global `block-allow-list` configuration below.
        block-allow-list: "listA"

    # Global block and allow list configuration, referenced by each instance.
    block-allow-list:
      listA:                        # Name of this list.
        do-tables:                  # Allow list of upstream tables to migrate.
          - db-name: "test_db"      # Database name of the table to migrate.
            tbl-name: "test_table"  # Name of the table to migrate.

The above is the minimum task configuration required to perform the migration. For more task configuration items, see the introduction to the complete DM task configuration file.
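Two details of this configuration are easy to get wrong: `task-mode` accepts only the three values listed in the comments, and the `block-allow-list` name on each instance must match a key in the global `block-allow-list` section. The sketch below checks both on a plain dict that mirrors `task1.yaml`. The function `find_problems` is a hypothetical helper, not a DM feature, and a file could be loaded into such a dict with a YAML library such as PyYAML's `yaml.safe_load`.

```python
from typing import Any, Dict, List

VALID_TASK_MODES = {"full", "incremental", "all"}

# Mirrors the task1.yaml above (target-database omitted for brevity).
TASK: Dict[str, Any] = {
    "name": "test",
    "task-mode": "all",
    "mysql-instances": [
        {"source-id": "mysql-01", "block-allow-list": "listA"},
    ],
    "block-allow-list": {
        "listA": {"do-tables": [{"db-name": "test_db", "tbl-name": "test_table"}]},
    },
}


def find_problems(task: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means both checks passed."""
    problems: List[str] = []
    mode = task.get("task-mode")
    if mode not in VALID_TASK_MODES:
        problems.append(f"unknown task-mode: {mode!r}")
    defined = task.get("block-allow-list") or {}
    for instance in task.get("mysql-instances") or []:
        ref = instance.get("block-allow-list")
        if ref is not None and ref not in defined:
            problems.append(
                f"source {instance.get('source-id')!r} references "
                f"undefined block-allow-list {ref!r}"
            )
    return problems


print(find_problems(TASK) or "no problems found")
```

This covers only the two rules named here. The `check-task` command in the next step remains the authoritative check.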

Step 3: Start the task

Before you start the data migration task, it is recommended to run the `check-task` command to verify that the configuration meets DM's requirements, so that errors do not surface later.

    tiup dmctl --master-addr ${advertise-addr} check-task task1.yaml

Run the following command with `tiup dmctl` to start the data migration task.

    tiup dmctl --master-addr ${advertise-addr} start-task task1.yaml

The parameters in this command are described as follows:

| Parameter | Description |
|---|---|
| `--master-addr` | The `${advertise-addr}` of any DM-master node in the cluster that dmctl connects to, for example: 172.16.10.71:8261 |
| `start-task` | Starts the data migration task |

If the task fails to start, modify the configuration according to the returned message, and then run the `start-task task1.yaml` command again to restart the task. If you encounter problems, see the error handling and FAQ documents.
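The check-then-start sequence can be scripted so that `start-task` runs only when `check-task` succeeds. The sketch below is one possible way to do this under two assumptions that you should verify against your DM version: that a failed command yields a non-zero exit status, or that it prints a JSON reply whose `result` field is `false`. The names `run_command`, `succeeded`, and `check_then_start` are hypothetical.

```python
import json
import subprocess
from typing import Callable, List, Tuple

Runner = Callable[[List[str]], Tuple[int, str]]


def run_command(argv: List[str]) -> Tuple[int, str]:
    """Run a command, echo its stdout, and return (exit status, stdout)."""
    proc = subprocess.run(argv, capture_output=True, text=True)
    print(proc.stdout, end="")
    return proc.returncode, proc.stdout


def succeeded(returncode: int, stdout: str) -> bool:
    """Assumed success rule: exit status 0 and no JSON reply with result=false."""
    if returncode != 0:
        return False
    try:
        reply = json.loads(stdout)
    except json.JSONDecodeError:
        return True  # nothing parseable; fall back to the exit status alone
    return not (isinstance(reply, dict) and reply.get("result") is False)


def check_then_start(master_addr: str, task_file: str,
                     runner: Runner = run_command) -> bool:
    """Run check-task, and run start-task only if the check succeeded."""
    base = ["tiup", "dmctl", "--master-addr", master_addr]
    if not succeeded(*runner(base + ["check-task", task_file])):
        return False
    return succeeded(*runner(base + ["start-task", task_file]))
```

Calling `check_then_start("172.16.10.71:8261", "task1.yaml")` on a machine with TiUP installed runs both commands in order. The `runner` parameter exists so the logic can be exercised without a DM cluster.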

Step 4: View the task status

To find out whether the DM cluster has running migration tasks and what state they are in, run the `query-status` command with `tiup dmctl`:

    tiup dmctl --master-addr ${advertise-addr} query-status ${task-name}

For a detailed interpretation of the query results, see the query status document.
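When the status is checked from a script, the reply can be reduced to one line per data source. The sketch below assumes the reply is JSON with a `sources` list whose entries carry `sourceStatus.source` and a `subTaskStatus` list with `stage` and `unit` fields. The sample reply is a hypothetical, heavily trimmed illustration, so compare it with the actual output of your DM version before relying on it.

```python
import json
from typing import Dict

# Hypothetical, heavily trimmed reply; real replies carry many more fields.
SAMPLE_REPLY = """
{
  "result": true,
  "msg": "",
  "sources": [
    {
      "result": true,
      "sourceStatus": {"source": "mysql-01"},
      "subTaskStatus": [{"name": "test", "stage": "Running", "unit": "Sync"}]
    }
  ]
}
"""


def stages_by_source(reply_text: str) -> Dict[str, str]:
    """Map each source ID to "stage/unit" of its subtask (assumed reply shape)."""
    reply = json.loads(reply_text)
    stages: Dict[str, str] = {}
    for source in reply.get("sources") or []:
        source_id = (source.get("sourceStatus") or {}).get("source", "<unknown>")
        for subtask in source.get("subTaskStatus") or []:
            stages[source_id] = f"{subtask.get('stage')}/{subtask.get('unit')}"
    return stages


print(stages_by_source(SAMPLE_REPLY))
```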

Step 5: Monitor the task and view logs (optional)

To view the historical status of the migration task and more internal metrics, follow the steps below.

If Prometheus, Alertmanager, and Grafana were correctly deployed when you deployed the DM cluster using TiUP, open Grafana using the IP address and port specified during deployment, and select the DM dashboard to view DM-related monitoring metrics.

While DM is running, DM-worker, DM-master, and dmctl output related information to their logs. The log directories of the components are as follows:

- DM-master log directory: set by the DM-master process parameter `--log-file`. If you deploy DM using TiUP, the log directory is `/dm-deploy/dm-master-8261/log/`.
- DM-worker log directory: set by the DM-worker process parameter `--log-file`. If you deploy DM using TiUP, the log directory is `/dm-deploy/dm-worker-8262/log/`.
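When troubleshooting, it often helps to look only at warnings and errors in these logs. The sketch below filters lines by level, assuming each line starts with a bracketed timestamp followed by a bracketed upper-case level such as `[ERROR]`. That layout, the helper name `select_levels`, and the sample lines are assumptions for illustration, so adjust the pattern if your log files look different.

```python
import re
from typing import Iterable, List, Tuple

# Assumed line layout: "[timestamp] [LEVEL] ..."; adjust if your logs differ.
LEVEL_PATTERN = re.compile(r"^\[[^\]]*\] \[(?P<level>[A-Z]+)\]")


def select_levels(lines: Iterable[str],
                  levels: Tuple[str, ...] = ("WARN", "ERROR")) -> List[str]:
    """Keep only lines whose level field is one of `levels`."""
    selected: List[str] = []
    for line in lines:
        match = LEVEL_PATTERN.match(line)
        if match and match.group("level") in levels:
            selected.append(line.rstrip("\n"))
    return selected


# Hypothetical sample lines, not real DM output.
SAMPLE = [
    '[2022/07/03 06:05:00.000 +08:00] [INFO] ["example info message"]',
    '[2022/07/03 06:05:01.000 +08:00] [ERROR] ["example error message"]',
]
print(select_levels(SAMPLE))
```

To scan a real log, open a file from one of the directories listed above and pass the file object to `select_levels`.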

边栏推荐

- 1. 兩數之和

- Leetcode problem solving summary, constantly updating!

- arcgis创建postgre企业级数据库

- Ext4 vs XFS -- which file system should you use

- Exportation et importation de tables de bibliothèque avec binaires MySQL

- Oauth2.0 - use database to store client information and authorization code

- Why should there be a firewall? This time xiaowai has something to say!!!

- Core principles and source code analysis of disruptor

- [minesweeping of two-dimensional array application] | [simple version] [detailed steps + code]

- Strategy pattern: encapsulate changes and respond flexibly to changes in requirements

猜你喜欢

QT read write excel -- qxlsx insert chart 5

![[teacher Zhao Yuqiang] MySQL high availability architecture: MHA](/img/a7/2140744ebad9f1dc0a609254cc618e.jpg)

[teacher Zhao Yuqiang] MySQL high availability architecture: MHA

![[advanced pointer (2)] | [function pointer, function pointer array, callback function] key analysis + code explanation](/img/9b/a309607c037b0a18ff6b234a866f9f.jpg)

[advanced pointer (2)] | [function pointer, function pointer array, callback function] key analysis + code explanation

Bio, NiO, AIO details

![[teacher Zhao Yuqiang] redis's slow query log](/img/a7/2140744ebad9f1dc0a609254cc618e.jpg)

[teacher Zhao Yuqiang] redis's slow query log

Kubernetes notes (10) kubernetes Monitoring & debugging

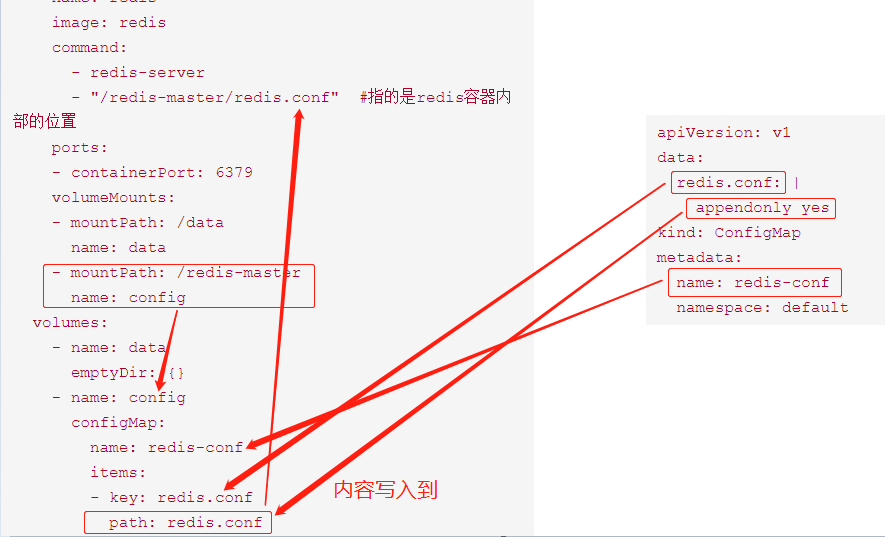

Kubernetes resource object introduction and common commands (V) - (configmap)

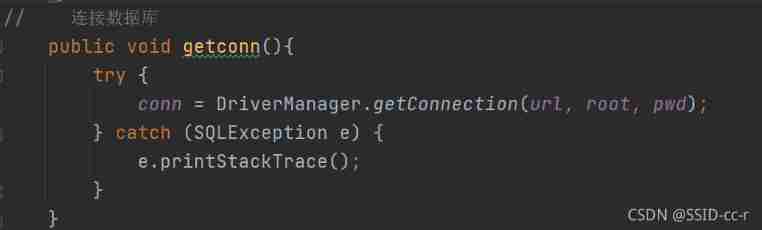

JDBC connection database steps

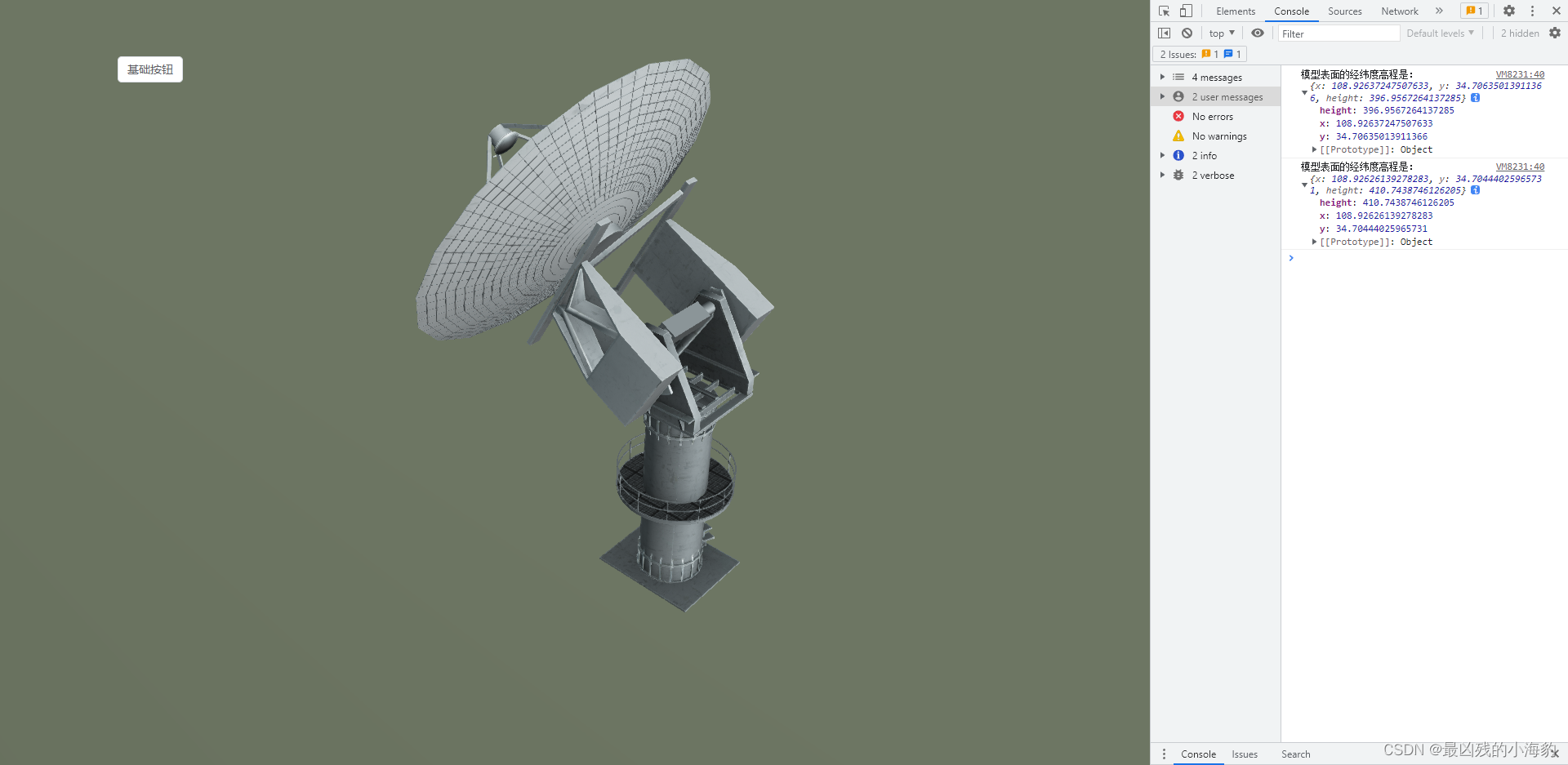

Cesium 点击获取模型表面经纬度高程坐标(三维坐标)

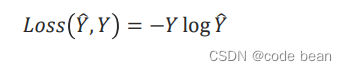

Loss function in pytorch multi classification

随机推荐

[Shangshui Shuo series together] day 10

Simple handwritten ORM framework

Crontab command usage

BeanDefinitionRegistryPostProcessor

Cesium entity(entities) 实体删除方法

Leetcode solution - 02 Add Two Numbers

Method of finding prime number

Strategy pattern: encapsulate changes and respond flexibly to changes in requirements

tabbar的设置

arcgis创建postgre企业级数据库

[trivia of two-dimensional array application] | [simple version] [detailed steps + code]

Installation of CAD plug-ins and automatic loading of DLL and ARX

Sorry, this user does not exist!

Jedis source code analysis (II): jediscluster module source code analysis

88. Merge two ordered arrays

智牛股--03

The programmer shell with a monthly salary of more than 10000 becomes a grammar skill for secondary school. Do you often use it!!!

Simple solution of small up main lottery in station B

Maximum likelihood estimation, divergence, cross entropy

JS implements the problem of closing the current child window and refreshing the parent window