Attention mechanism rules: an AI face editor that finally stops one retouch from wrecking the whole picture

2022-06-26 07:31:00 (QbitAI)

Feng Se, reporting from Aofei Temple

QbitAI | WeChat official account: QbitAI

“Attention is all you need!”

This famous line has now been borne out in yet another field.

New work from Shenzhen University and Tel Aviv University introduces an attention mechanism into a GAN and thereby fixes the "shaky hand" problems that crop up when editing faces:

For example, changing a person's hairstyle can mess up the background.

Adding a beard can alter the hair, or even make the whole face look like a different person.

With its attention mechanism, the new model edits images cleanly and leaves everything outside the target region untouched.

How does it do that?

Introducing an attention map

The model is called FEAT (Face Editing with Attention). It builds on the StyleGAN generator and adds an attention mechanism to it.

Specifically, it edits faces in the latent space of StyleGAN2.

Its mapper builds on previous methods and modifies the image by learning an offset in the latent space.
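As a rough illustration of offset-based editing, the sketch below shifts each style code w_j by an offset Δ_j predicted from it, giving w_j + Δ_j. The per-code linear "mapper" with hand-picked weights (`apply_mapper`, `linear_map`) is a hypothetical stand-in; in FEAT the mapper is a learned network guided by CLIP, so this only shows the bookkeeping, not the paper's architecture.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear_map(weights: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x len(v)) weight matrix by the vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def apply_mapper(style_codes: List[Vector], mapper_weights: List[Matrix]) -> List[Vector]:
    """Shift every style code w_j by its predicted offset delta_j.

    mapper_weights[j] is a hypothetical per-code linear 'mapper' that
    predicts delta_j from w_j; a real mapper would be a trained network.
    """
    shifted = []
    for w_j, weights_j in zip(style_codes, mapper_weights):
        delta_j = linear_map(weights_j, w_j)  # predicted offset for this code
        shifted.append([w + d for w, d in zip(w_j, delta_j)])  # w_j + delta_j
    return shifted


# Two toy 2-D style codes (real StyleGAN2 W+ codes are much larger).
codes = [[1.0, 2.0], [0.5, -1.0]]
weights = [
    [[0.1, 0.0], [0.0, 0.1]],  # offset = 10% of code 0, so code 0 grows by 10%
    [[0.0, 0.0], [0.0, 0.0]],  # zero offset: code 1 is left unchanged
]
print(apply_mapper(codes, weights))
```

Keeping one offset per style code lets an edit touch only the codes (and hence the generator layers) that matter for the requested attribute.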

To modify only the target region, FEAT introduces an attention map that fuses the features obtained from the source latent code with the features obtained from the shifted latent code.

To guide the edit, the model also brings in CLIP, so that both the offset and the attention map can be learned from a text prompt.
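Text guidance with CLIP is commonly expressed as a cosine-distance loss between the CLIP embedding of the edited image and the CLIP embedding of the prompt. The sketch below shows that idea only; the 3-D vectors are made-up stand-ins for real CLIP embeddings, and `clip_text_loss` is a hypothetical helper, not FEAT's exact objective.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def clip_text_loss(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """Hypothetical CLIP-style loss: 1 - cosine similarity.

    0 when the image embedding points the same way as the text embedding,
    up to 2 when it points the opposite way.
    """
    return 1.0 - cosine_similarity(image_emb, text_emb)


# Toy stand-ins for CLIP embeddings (real ones are much longer).
text = [1.0, 0.0, 0.0]          # e.g. the prompt "a man with a beard"
edited_close = [0.9, 0.1, 0.0]  # edited image that matches the prompt well
edited_far = [0.0, 1.0, 0.0]    # edited image unrelated to the prompt

print(clip_text_loss(edited_close, text), clip_text_loss(edited_far, text))
```

Minimizing such a loss with respect to the offset (and the attention map) pushes the edit toward the prompt without needing any labeled attribute data.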

The FEAT pipeline works as follows:

First, start from an image with n features. As shown in the figure above, light blue denotes the features and the yellow labels mark the number of channels.

Next, guided by the text prompt, mappers are generated for all style codes to predict their corresponding offsets.

The latent codes are modified by these offsets (w_j + Δ_j), producing the mapped image.

Finally, the attention map generated by the attention module fuses the i-th layer features of the original image and the mapped image, producing the desired edit.
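The fusion step can be read as a per-location weighted blend: where the attention map is close to 1 the mapped (edited) features are used, and where it is close to 0 the original features are kept. The sketch below assumes the blend F_fused = A * F_mapped + (1 - A) * F_orig on a single-channel toy feature map at layer i; the paper's exact formulation may differ, and `fuse_features` and the numbers are illustrative.

```python
from typing import List

FeatureMap = List[List[float]]  # height x width, one channel for simplicity


def fuse_features(orig: FeatureMap, mapped: FeatureMap, attention: FeatureMap) -> FeatureMap:
    """Blend layer-i features element-wise: A * mapped + (1 - A) * orig.

    Attention values are expected in [0, 1]: 1 means 'take the edit',
    0 means 'keep the original'.
    """
    fused = []
    for row_o, row_m, row_a in zip(orig, mapped, attention):
        fused.append([a * m + (1.0 - a) * o for o, m, a in zip(row_o, row_m, row_a)])
    return fused


# Toy 2x3 layer-i features: only the top-right location should change.
orig = [[1.0, 1.0, 1.0],
        [1.0, 1.0, 1.0]]
mapped = [[5.0, 5.0, 5.0],
          [5.0, 5.0, 5.0]]
attention = [[0.0, 0.0, 1.0],  # edit allowed only at the top-right location
             [0.0, 0.0, 0.0]]

print(fuse_features(orig, mapped, attention))
```

Wherever the attention map is zero, the fused features equal the original ones exactly, which is why an edit such as adding a beard need not disturb the hair or background.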

The architecture of the attention module is shown below:

On the left is the StyleGAN2 generator used for feature extraction; on the right is the Attention Network that produces the attention map.

No changes outside the target region

In the experiments, the researchers first compared FEAT with two recently proposed text-driven editing models: TediGAN and StyleCLIP.

TediGAN encodes both images and text into the StyleGAN latent space, while StyleCLIP proposes three techniques for combining CLIP with StyleGAN.

As the results show, FEAT controls the face precisely and has no effect outside the target region.

TediGAN, by contrast, not only failed to change the hairstyle but also darkened the skin tone (rightmost image, first row).

In the second set, an expression edit, it even changed the person's gender (rightmost image, second row).

StyleCLIP does much better than TediGAN overall, but at the cost of a messier background (third column of the last two figures, where the background of every result is affected).

Next, FEAT was compared with InterFaceGAN and StyleFlow.

InterFaceGAN performs linear operations in the GAN latent space, whereas StyleFlow extracts nonlinear editing paths from the latent space.
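To make the linear/nonlinear distinction concrete: a linear latent edit in the spirit of InterFaceGAN moves a code along one fixed direction n, w' = w + α·n (in InterFaceGAN, n is typically the normal of a hyperplane separating an attribute). The toy below contrasts that with a curved path obtained by repeatedly stepping along a direction that depends on the current position. The direction field `rotating_field` is hypothetical and only hints at what a "nonlinear editing path" means; it is not StyleFlow's flow-based model.

```python
import math
from typing import Callable, List

Vector = List[float]


def linear_edit(w: Vector, direction: Vector, alpha: float) -> Vector:
    """Linear edit: w' = w + alpha * n, with one fixed direction n."""
    return [wi + alpha * ni for wi, ni in zip(w, direction)]


def path_edit(w: Vector, field: Callable[[Vector], Vector], alpha: float, steps: int) -> Vector:
    """Nonlinear edit: follow a position-dependent direction in small Euler steps."""
    h = alpha / steps
    current = list(w)
    for _ in range(steps):
        d = field(current)  # direction depends on where we are now
        current = [ci + h * di for ci, di in zip(current, d)]
    return current


def rotating_field(v: Vector) -> Vector:
    """Hypothetical 2-D direction field that curves around the origin."""
    x, y = v
    return [-y, x]


w = [1.0, 0.0]
print(linear_edit(w, [0.0, 1.0], alpha=1.0))  # straight move along n
print(path_edit(w, rotating_field, alpha=math.pi / 2, steps=1000))  # quarter turn, ends near [0, 1]
```

With the linear edit every code moves the same way regardless of where it starts; with the path edit the direction changes along the way, which is the intuition behind nonlinear editing paths.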

The results are as follows:

In this set of beard edits, InterFaceGAN and StyleFlow also make slight changes to the hair and eyebrows in addition to adding the beard.

Moreover, both methods need labeled data for supervision and cannot perform zero-shot editing the way FEAT does.

FEAT also shows its advantages in the quantitative experiments.

Across edits of five attributes, FEAT outperforms TediGAN and StyleCLIP in both visual quality (FID score) and feature preservation (CS and ED scores).
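The article does not spell out how CS and ED are computed. A common reading, assumed here, is cosine similarity (higher is better) and Euclidean distance (lower is better) between face-recognition embeddings of the original and edited images. The sketch computes both on hypothetical toy embeddings; a real evaluation would take the embeddings from a pretrained face-recognition network. FID, the visual-quality score, compares feature statistics of whole sets of real and generated images and is not reproduced here.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """CS: 1.0 means the two embeddings point the same way (same identity)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """ED: 0.0 means the two embeddings are identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical identity embeddings (real ones come from a face-recognition model).
original = [0.6, 0.8, 0.0]
edited_good = [0.6, 0.7, 0.1]  # identity mostly preserved
edited_bad = [0.0, 0.6, 0.8]   # identity drifted

for name, emb in [("good", edited_good), ("bad", edited_bad)]:
    cs = cosine_similarity(original, emb)
    ed = euclidean_distance(original, emb)
    print(name, round(cs, 3), round(ed, 3))
```

An edit that preserves identity well should score a CS close to 1 and an ED close to 0, as the "good" toy edit does relative to the "bad" one.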

About the authors

The first author, Hou Xianxu, is from Shenzhen University.

He graduated from China University of Mining and Technology, majoring in geography and geology, and received his Ph.D. in computer science from the University of Nottingham. His main research interests are computer vision and deep learning.

The corresponding author is Shen Linlin, a master's supervisor in the Pattern Recognition and Intelligent Systems program at Shenzhen University. His current research covers biometrics such as face, fingerprint, and palmprint recognition, as well as medical image processing and pattern recognition systems.

He received a master's degree in Applied Electronics from Shanghai Jiao Tong University and also earned his Ph.D. at the University of Nottingham. His Google Scholar citation count has reached 7,936.

Paper:

https://arxiv.org/abs/2202.02713

边栏推荐

- How can I find the completely deleted photos in Apple mobile phone?

- GMP模型

- ES字符串类型(Text vs keyword)的选择

- Machine learning - Iris Flower classification

- 一文深入底层分析Redis对象结构

- How to publish function computing (FC) through cloud effect

- ZRaQnHYDAe

- Item2 installation configuration and environment failure solution

- Scratch program learning

- 【推荐一款实体类转换工具 MapStruct,性能强劲,简单易上手 】

猜你喜欢

5,10,15,20-tetraphenylporphyrin (TPP) and metal complexes fetpp/mntpp/cutpp/zntpp/nitpp/cotpp/pttpp/pdtpp/cdtpp supplied by Qiyue

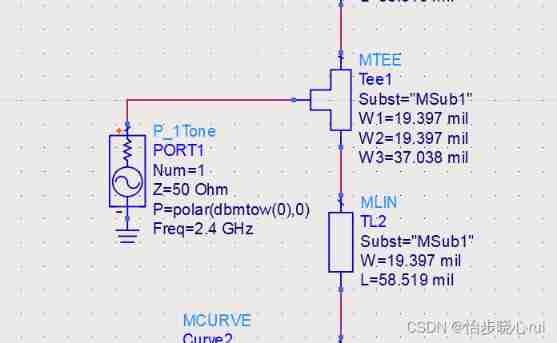

13. Mismatch simulation of power synthesis for ads usage recording

MySQL

If you don't understand, please hit me

Porphyrin based polyimide ppbpis (ppbpi-pa, ppbpi-pepa and ppbpi-pena); Crosslinked porphyrin based polyimide (ppbpi-pa-cr, ppbpi-pepa-cr, ppbpi-pena-cr) reagent

Crosslinked porphyrin based polyimide ppbpi-2, ppbpi-1-cr and ppbpi-2-cr; Porous porphyrin based hyperbranched polyimide (ppbpi-1, ppbpi-2) supplied by Qiyue

Stm32f1 and stm32subeide programming example - thermal sensor driver

Alkynyl crosslinked porphyrin based polyimide materials (ppbpi-h-cr, ppbpi Mn cr.ppbpi Fe Cr); Metalloporphyrin based polyimide (ppbpi Mn, ppbpi FE) supplied by Qiyue

Tetra - (4-pyridyl) porphyrin tpyp and metal complexes zntpyp/fetpyp/mntpyp/cutpyp/nitpyp/cotpyp/ptpyp/pdtpyp/cdtpyp (supplied by Qiyue porphyrin)

Redis(4)----浅谈整数集合

随机推荐

3D porphyrin MOF (mof-p5) / 3D porphyrin MOF (mof-p4) / 2D cobalt porphyrin MOF (ppf-1-co) / 2D porphyrin COF (POR COF) / supplied by Qiyue

Jemter 压力测试 -基础请求-【教学篇】

MySQL operation database

5,10,15,20-tetraphenylporphyrin (TPP) and metal complexes fetpp/mntpp/cutpp/zntpp/nitpp/cotpp/pttpp/pdtpp/cdtpp supplied by Qiyue

$a && $b = $c what???

Es string type (text vs keyword) selection

Analyze 5 indicators of NFT project

Redis系列——5种常见数据类型day1-3

5,10,15,20-tetra (4-bromophenyl) porphyrin (h2tppbr4) /5.2.15,10,15,20-tetra [4-[(3-aminophenyl) ethynyl] phenyl] porphyrin (tapepp) Qiyue porphyrin reagent

Calculate division in Oracle - solve the error report when the divisor is zero

Golang源码包集合

Is it safe for individuals to buy stocks with compass software? How to buy stocks

Database persistence

Scratch program learning

JS modularization

一文搞懂Glide,不懂来打我

Solution to the problem of multi application routing using thinkphp6.0

SQL query statement

Summary of domestic database examination data (continuously updated)

【推荐10个 让你轻松的 IDEA 插件,少些繁琐又重复的代码】