当前位置:网站首页>谷粒商城学习笔记,第五天:ES全文检索

谷粒商城学习笔记,第五天:ES全文检索

2020-11-09 16:11:00 【有一个小阿飞】

谷粒商城学习笔记,第五天:ES全文检索

一、基本概念

注:ES7和8以后就不再支持type了

1、Index索引

相当于MySQL中的Database

2、Type类型(ES8以后就不支持了)

相当于MySQL中的table

3、Document文档(JSON格式)

相当于MySQL中的数据

倒排索引:

正向索引:

当用户在主页上搜索关键词“华为手机”时,假设只存在正向索引(forward index),那么就需要扫描索引库中的所有文档,

找出所有包含关键词“华为手机”的文档,再根据打分模型进行打分,排出名次后呈现给用户。因为互联网上收录在搜索引擎中的文档的

数目是个天文数字,这样的索引结构根本无法满足实时返回排名结果的要求。

倒排索引:

一个倒排索引由文档中所有不重复词的列表构成,对于其中每个词,有一个包含它的文档列表。我们首先将每个文档的拆分成单独的词,

创建一个包含所有不重复词条的排序列表,然后列出每个词条出现在哪个文档。

得到倒排索引的结构如下:

| 关键词 | 文档ID |

|---|---|

| 关键词1 | 文档1,文档2 |

| 关键词2 | 文档2,文档7 |

从词的关键字,去找文档。

二、Docker安装ES

1、下载镜像文件

docker pull elasticsearch:7.4.2

docker pull kibana:7.4.2 ##可视化检索数据

2、安装

##现在本地创建挂在路径

mkdir -p /opt/software/mydata/elasticsearch/config

mkdir -p /opt/software/mydata/elasticsearch/data

mkdir -p /opt/software/mydata/elasticsearch/plugins

##设置读写权限

chmod -R 777 /opt/software/mydata/elasticsearch/

##配置文件:远程访问

echo "http.host:0.0.0.0" >> /opt/software/mydata/elasticsearch/config/elasticsearch.yml

##安装ES

docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \

-e "discovery.type=single-node" \

-e ES_JAVA_OPTS="-Xms64m -Xms128m" \

-v /opt/software/mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \

-v /opt/software/mydata/elasticsearch/data:/usr/share/elasticsearch/data \

-v /opt/software/mydata/elasticsearch/plugins:/usr/share/elasticsearch/plugins \

-d elasticsearch:7.4.2

##查看日志

docker logs elasticsearch

##安装Kibana

docker run --name kibana -p 5601:5601 \

-e ELASTICSEARCH_HOSTS=http://182.92.191.49:9200 \

-d kibana:7.4.2

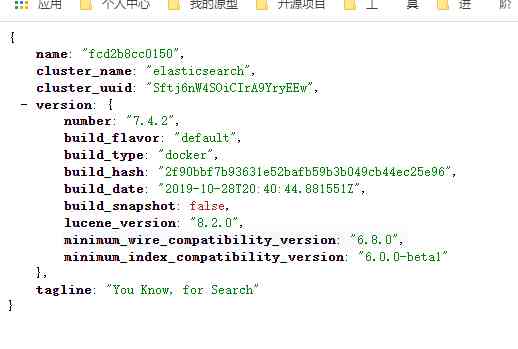

验证

访问:http://你的服务器IP:9200 es

http://你的服务器IP:5601 kibana

如果是阿里的服务器,要先在安全组规则中放开9200 5601

9200作为Http协议,主要用于外部通讯

9300作为Tcp协议,jar之间就是通过tcp协议通讯,ES集群之间是通过9300进行通讯

三、初步检索

3.1、_cat

| 命令 | 作用 |

|---|---|

| GET /_cat/nodes | 查看所有节点 |

| GET /_cat/health | 查看ES健康状况 |

| GET /_cat/master | 查看ES主节点 |

| GET /_cat/indices | 查看所有索引:show databases |

3.2、新增数据:put/post

##put和post 都可以做新增和修改操作

##post 可以带ID也可以不带ID,第一次新增,第二次修改

##put 必须带ID,否则报错,同样第一次表新增,第二次表修改。[因为必须带ID,所有一般用来做修改]

##语法

POST http://IP:9200/index/type/id id可带可不带

{

name: "lee"

}

PUT http://IP:9200/index/type/id id必须带

{

name: "lee"

}

3.3、查询数据:GET&乐观锁

##语法

GET http://IP:9200/customer/external/1

结果:

{

"_index": "customer", ##索引

"_type": "external", ##类型

"_id": "1", ##doc的ID

"_version": 5, ##版本号,添加修改次数

"_seq_no": 5, ##并发控制字段,每次更新就会+1,用来做乐观锁

"_primary_term": 1, ##同上,主分片重新分配,如重启,就会变化,用来做乐观锁

"found": true, ##true表示查找到了

"_source": {

"name": "ren"

}

}

##乐观锁:为了防止并发修改

##我们在修改时可以做如下操作:

PUT http://IP:9200/customer/external/1?_seq_no=5&_primary_term=1

{

"name":"ren"

}

3.4、更新数据:PUT/POST

##覆盖更新:将原来的数据先删除,再新增

POST http://IP:9200/customer/external/1

{

"name":"lee",

"gender":"male",

"age":20

}

PUT http://IP:9200/customer/external/1

{

"name":"ren",

"gender":"male"

}

##局部更新:只修改列出来的字段

POST http://IP:9200/customer/external/1/_update

{

"doc":{

"name":"lee_ren",

}

}

3.5、删除数据和索引:DELETE

##删除数据

DELETE http://IP:9200/customer/external/1

##删除索引(没有删除type)

DELETE http://IP:9200/customer

3.6、批量导入数据:bulk

注意:独立操作,没有事务

##########语法:action表示POST PUT DELETE

{ action: { metadata }}

{ request body }

{ action: { metadata }}

{ request body }

#########批量新增

http://IP:9200/_bulk

{"create":{"_index":"haoke","_type":"user","_id":"aaa"}}

{"id":1001,"name":"name1","age":20,"sex":"男"}

{"create":{"_index":"haoke","_type":"user","_id":"bbb"}}

{"id":1002,"name":"name2","age":21,"sex":"女"}

{"create":{"_index":"haoke","_type":"user","_id":"ccc"}}

{"id":1003,"name":"name3","age":22,"sex":"女"}

或者:

{"index":{"_index":"haoke","_type":"user","_id":"aaa"}}

{"id":1001,"name":"name1","age":20,"sex":"男"}

{"index":{"_index":"haoke","_type":"user","_id":"bbb"}}

{"id":1002,"name":"name2","age":21,"sex":"女"}

{"index":{"_index":"haoke","_type":"user","_id":"ccc"}}

{"id":1003,"name":"name3","age":22,"sex":"女"}

#########批量删除

POST http://IP:9200/_bulk

{"delete":{"_index":"haoke","_type":"user","_id":"aaa"}}

{"delete":{"_index":"haoke","_type":"user","_id":"bbb"}}

{"delete":{"_index":"haoke","_type":"user","_id":"ccc"}}

########批量修改

POST http://IP:9200/_bulk

请求body:

{"index":{"_index":"haoke","_type":"user","_id":"aaa"}}

{"id":1001,"name":"name111","age":20,"sex":"男"}

{"index":{"_index":"haoke","_type":"user","_id":"bbb"}}

{"id":1002,"name":"name222","age":21,"sex":"女"}

{"index":{"_index":"haoke","_type":"user","_id":"ccc"}}

{"id":1003,"name":"name333","age":22,"sex":"女"}

#########批量局部修改

POST http://IP:9200/_bulk

请求body:

{"update":{"_index":"haoke","_type":"user","_id":"aaa"}}

{"doc":{"name":"name111a"}}

{"update":{"_index":"haoke","_type":"user","_id":"bbb"}}

{"doc":{"name":"name222b"}}

{"update":{"_index":"haoke","_type":"user","_id":"ccc"}}

{"doc":{"name":"name333c"}}

elasticsearch自带的批量 测试数据:

https://download.elastic.co/demos/kibana/gettingstarted/8.x/accounts.zip

四、检索进阶

第一种检索方式:

##将所有的请求参数,放在URI路径中

GET http://IP:9200/bank/_search?q=*&sort=account_number:asc

[q=*表示查询所有,sort表示排序字段为account_number 升序,默认from为0 size为10]

第二种检索方式:Query DSL

##将所有的请求参数,放在body体中

GET http://IP:9200/bank/_search

{

"query": {

"match_all": {}

},

"sort": {

"account_number": "asc"

}

}

[query表示查询 match_all表示查询所有,sort表示排序,默认from为0 size为10]

4.1、Query DSL基本语法

GET http://IP:9200/bank/_search

{

"query": {

"match_all": {}

},

"sort": {

"account_number": "asc"

}

"from": 0,

"size": 5,

"_source": ["account_number","balance"]

}

[query表示查询 match_all表示查询所有,sort表示排序,from表示从第几个数据开始查]

[size表示查询记录数,_source表示要返回的字段]

4.2、Query DSL:match

##match 精确匹配,

##非String类型的字段 相当于 == 号

##String类型的字段 相当于like,(分词后的==精确匹配)

GET http://IP:9200/bank/_search

{

"query": {

"match": {

"address": "mill"

}

}

}

##match加上keyword,是精确匹配 address必须是990 Mill Road,大小写也要区分

##注意macth+keyword同match_phrase的区别:

##macth+keyword必须是一模一样的 == ,match_phrase是包含就可以 like,且不区分大小写

GET http://IP:9200/bank/_search

{

"query": {

"match": {

"address.keyword": "990 Mill Road"

}

}

}

4.3、Query DSL:match_phrase

##match_phrase 短语匹配,

##match会将 "mill road"分成两个词"mill"和"road"进行匹配,

##match_phrase 则会将"mill road"作为一个完整的词进行匹配(大小写 和 中间多余的空格不会影响查询结果)

GET http://IP:9200/bank/_search

{

"query": {

"match_phrase": {

"address": "mill road"

}

}

}

4.4、Query DSL:multi_match

##multi_match多字段匹配

##相当于 username == "aa" or nickname == "aa"

GET http://IP:9200/bank/_search

{

"query": {

"multi_match": {

"query":"mill movico",

"fields":["address","city"]

}

}

}

##es会将mill和movico分词,结果类似于 address == mill or address == movico or city == mill or city == movico

4.5、Query DSL:bool

##bool复合查询

##bool组合几个查询,他们是and的关系

##must是必须满足,must_not是必须不满足,should是满足也可以不满足也可以,但满足后排序会靠前些

##must和should会提高相关得分score,must_not不会影响得分(filter也不会影响得分)

GET /bank/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"address":"mill"

}

},

{

"match": {

"gender": "m"

}

}

],

"must_not": [

{

"match": {

"age": "28"

}

}

],

"should": [

{

"match": {

"city": "blackgum"

}

}

]

}

}

}

4.6、Query DSL:filter

##filter结果过滤

##filter类似must,但不影响得分score结果

##must和should会影响相关结果的score,但filter不会

##range类似between

GET /bank/_search

{

"query": {

"bool": {

"filter": {

"range": {

"age": {

"gte": 10,

"lte": 30

}

}

}

}

}

}

4.7、Query DSL:term

##term等同于match,但term只能用于精确的非text文本格式的查找(不能分词)

##match可以进行text的分词后的查找的

##约定:非text字段用term,text字段用match

##注意例如:city:"hebei"是String可以用term查找

##address:"hebei handan"是text需要用match查找

GET /bank/_search

{

"query": {

"term": {

"address": "mill"

}

}

}

五、聚合:aggregations

##聚合:从数据中分组和提取数据

##类似于SQL中的group by\count\avg等

##聚合类型 term avg平均 min最小 max最大 sum综合

##注意:文本和字符串形式的聚合使用keyword聚合

##语法:

"aggs": {

"aggs name聚合名称": {

"aggs type聚合类型": {

"field": "聚合的字段",

"size": "想要多少个结果"

},

....子聚合

},

...其他聚合

}

##eg

##1、搜索address中包含mill的所有人的年龄分布以及平均年龄和平均薪资

##[size:0 显示搜索数据0条,即不显示搜索结果]

## 其他聚合

GET /bank/_search

{

"query": {

"match": {

"address": "mill"

}

},

"aggs": {

"ageAggs": {

"terms": {

"field": "age",

"size": 10

}

},

"ageAvgAggs":{

"avg": {

"field": "age"

}

},

"balanceAvgAggs":{

"avg": {

"field": "balance"

}

}

},

"size":0

}

##2、按照年龄聚合,并查处每个年龄段的平均薪资

##子聚合

GET /bank/_search

{

"query": {

"match_all": {}

},

"aggs": {

"ageAggs": {

"terms": {

"field": "age",

"size": 10

},

"aggs": {

"balanceAvg": {

"avg": {

"field": "balance"

}

}

}

}

},

"size": 0

}

##3、查处所有年龄分布,并且这些年龄段中的平均薪资以及年龄段中M男性的平均薪资和F女性的平均薪资

##[gender是文本形式的,无法进行聚合,用gender.keyword聚合]

GET /bank/_search

{

"query": {

"match_all": {}

},

"aggs": {

"ageAggs": {

"terms": {

"field": "age",

"size": 10

},

"aggs": {

"ageBalanceAvg": {

"avg": {

"field": "balance"

}

},

"genderAggs": {

"terms": {

"field": "gender.keyword"

},

"aggs": {

"genderBalanceAvg": {

"avg": {

"field": "balance"

}

}

}

}

}

}

},

"size": 0

}

六、映射:Mapping

##映射:mapping类似SQL中定义字段的数据类型

##1、查看映射

GET /bank/_mapping

##2、创建映射

PUT /myindex

{

"mappings": {

"properties": {

"name":{"type": "text"},

"age":{"type": "integer"},

"email":{"type": "keyword"},

"birthday":{"type": "date"}

}

}

}

##3、在已有的mapping上,添加新的映射字段

PUT /myindex/_mapping

{

"properties":{

"employee_id":{"type":"long"}

}

}

##4、修改:已存在映射的已存在字段是不能修改的,只能添加新的字段

##如果非要想 修改已有的映射,需要重新创建映射mapping,然后再迁移数据

##如:就有的bank索引是带有type的,现在我们创建新的映射并去掉bank

##创建新的mapping

PUT /newbank

{

"mappings": {

"properties": {

"account_number": {

"type": "long"

},

"address": {

"type": "text"

},

"age": {

"type": "integer"

},

"balance": {

"type": "long"

},

"city": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"email": {

"type": "keyword"

},

"employer": {

"type": "text"

},

"firstname": {

"type": "text"

},

"gender": {

"type": "keyword"

},

"lastname": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"state": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

}

}

##数据迁移:将原来bank中的数据迁移到newbank中

##数据迁移

POST _reindex

{

"source": {

"index": "bank",

"type": "account"

},

"dest": {

"index": "newbank"

}

}

##注意上面是ES旧版本带有type类型的数据迁移,新版本的数据不带type,迁移语法如下:

POST _reindex

{

"source": {

"index": "bank"

},

"dest": {

"index": "newbank"

}

}

七、分词

7.1、分词

一个tokenizer(分词器)接收一个字符流,将之分割为独立的tokens(词元,通常是独立的单词),然后输出tokens流。

##分词器

POST _analyze

{

"analyzer": "standard",

"text": "Besides traveling and volunteering, something else that’s great for when you don’t give a hoot is to read."

}

7.2、IK分词器

下载地址 GitHub medcl/elasticsearch-analysis-ik

##安装IK分词器: 下载到elasticsearch下的plugins目录下

wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip

##解压

unzip elasticsearch-analysis-ik-7.4.2.zip

##修改权限

chmod -R 777 ik/

##检测是否安装好了

docker exec -it fcd2b /bin/bash

cd bin

elasticsearch-plugin list

##重启es

docker restart fcd2

测试:

##中文分词

POST _analyze

{

"analyzer": "ik_smart",

"text": "我是中国河北人"

}

##中文分词

POST _analyze

{

"analyzer": "ik_max_word",

"text": "我是中国河北人"

}

7.3、自定义扩展词库

7.3.1、Docker安装Nginx

##下载nginx

docker pull nginx:1.10

##启动运行

docker run -p 80:80 --name nginx -d nginx:1.10

##复制docker容器中nginx的配置文件到本地

cd /opt/software/mydata/

mkdir nginx

cd nginx

mkdir html

mkdir logs

docker container cp nginx:/etc/nginx .

##安装新的nginx

docker run -p 80:80 --name nginx \

-v /opt/software/mydata/nginx/html:/usr/share/nginx/html \

-v /opt/software/mydata/nginx/logs:/var/log/nginx \

-v /opt/software/mydata/nginx/conf:/etc/nginx \

-d nginx:1.10

测试:

##在/opt/software/mydata/nginx/html下创建index.html

##内容:

<h1>welcome to nginx</h1>

##浏览器访问

http://IP:80/

7.3.2、扩展词库

创建词库

##在/opt/software/mydata/nginx/html下创建一个文件:es/fenci.txt

##内容:

乔碧萝

徐静蕾

##浏览器访问:

http://IP/es/fenci.txt

es关联词库

##配置es plugins目录下IK分词器conf目录下的配置文件IKAnalyzer.cfg.xml

<!--用户可以在这里配置远程扩展字典 -->

<entry key="remote_ext_dict">http://你的IP/es/fenci.txt</entry>

##重启es

docker restart

##测试

POST _analyze

{

"analyzer": "ik_smart",

"text": "我不认识乔碧萝殿下"

}

版权声明

本文为[有一个小阿飞]所创,转载请带上原文链接,感谢

https://my.oschina.net/ngc7293/blog/4710092

边栏推荐

- MES system is different from traditional management in industry application

- 缓存的数据一致性

- Looking for a small immutable dictionary with better performance

- 详解三种不同的身份验证协议

- 电商/直播速看!双11跑赢李佳琦就看这款单品了!

- 树莓派内网穿透建站与维护,使用内网穿透无需服务器

- Simple use of AE (after effects)

- Installation and testing of Flink

- CAD2020下载AutoCAD2020下载安装教程AutoCAD2020中文下载安装方法

- Mobile security reinforcement helps app achieve comprehensive and effective security protection

猜你喜欢

SEO解决方案制定,如何脱离杯弓蛇影?

Do you think it's easy to learn programming? In fact, it's hard! Do you think it's hard to learn programming? In fact, it's very simple!

5分钟GET我使用Github 5 年总结的这些骚操作!

Installation and testing of Flink

Flink的安装和测试

超大折扣力度,云服务器 88 元秒杀

shell脚本快速入门----shell基本语法总结

CAD2020下载AutoCAD2020下载安装教程AutoCAD2020中文下载安装方法

全栈技术实践经历告诉你:开发一个商城小程序要多少钱?

5 minutes get I use GitHub's five-year summary of these complaints!

随机推荐

电商/直播速看!双11跑赢李佳琦就看这款单品了!

使用基于GAN的过采样技术提高非平衡COVID-19死亡率预测的模型准确性

SEO solution development, how to break away from the shadow of the bow?

帮助企业摆脱困境,名企归乡工程师:能成功全靠有它!

In the third stage, day16 user module jumps to SSO single sign on jsonp / CORS cross domain user login verification

Using art template to obtain weather forecast information

我在传统行业做数字化转型(1)预告篇

你以为学编程很简单吗,其实它很难!你以为学编程很难吗,其实它很简单!

Revealing the logic of moving path selection in Summoner Canyon?

Solution to the failure of closing windows in Chrome browser JS

融云集成之避坑指南-Android推送篇

详解三种不同的身份验证协议

乘风破浪的技术大咖再次集结 | 腾讯云TVP持续航行中

Mit6.824 distributed system course translation & learning notes (3) GFS

深入分析商淘多用户商城系统如何从搜索着手打造盈利点

Talking about PHP file fragment upload from a requirement improvement

AutoCAD2020 完整版安装图文教程、注册激活破解方法

明年起旧版本安卓设备将无法浏览大约30%的网页

International top journal radiology published the latest joint results of Huawei cloud, AI assisted detection of cerebral aneurysms

【亲测有效】Github无法访问或者访问速度的解决方案