explain

This series of articles is about the famous MIT6.824 Distributed systems course Translation supplement and learning summary of , It's like learning and recording .

If there are omissions and mistakes , Also please correct me :)

Continuous updating ing...

translate & Add

The paper

The Google File System

Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung

SOSP 2003

Why do we need to read this paper ?

Distributed storage is an important concept

- Interface / semantics What should it look like ?

- How the interior works ?

GFS The paper covers 6.824 Many of the themes in : parallel , Fault tolerance , Copy , Uniformity

Good systematic paper —— The details cover from application to network

Successful practical application design

Why distributed storage is difficult ?

High performance --> Data fragmentation between multiple machines

Multiple servers --> Often make mistakes

Fault tolerance --> Copy

Copy --> atypism

Better consistency --> Low performance

For consistency , What to do with ?

The ideal model : The same behavior as a server

The server uses hard disk storage

The server only performs one client operation at a time ( Even if it's concurrent )

Read what was written before , Even if the server crashes or restarts

therefore : hypothesis C1 and C2 Write concurrently , Wait until the write operation is finished ,C3 and C4 read . What do they get ?

C1: Wx1

C2: Wx2

C3: ---- Rx?

C4: -------- Rx?

answer :1 perhaps 2, however C3 and C4 You'll see the same value .

This is a “ strong ” The model of consistency .

But a single server has poor fault tolerance .

For fault tolerant replication , It's hard to achieve strong consistency

A simple , But there's a problem with replication solutions :

- Two replicated servers ,S1 and S2

- Multiple clients to two servers , Send write requests in parallel

- Multiple clients to one of them , Send read request

In our example ,C1 and C2 The write requests of may arrive at two servers in different order

- If C3 from S1 Read , You might see x=1

- If C4 from S2 Read , You might see x=2

Or if S1 Accepted a read request , But sending a write request to S2 when , The client crashed , What will happen? ?

It's not strong consistency !

Better consistency often requires communication , To ensure the consistency of the replica set —— It's going to be slow !

There is a lot of trade-off between performance and consistency , I'll see later

GFS

Content :

Many of Google's services require a large, fast, unified file storage system , such as :Mapreduce, Reptiles / Indexes , The logging stored / analysis ,Youtube(?)

On the whole ( For a single data center ): Any client can read any file , Allow multiple applications to share data

On multiple servers / Automation on the hard disk “ section ” Each file , Improve the performance of parallelism , Increase space availability

Automatic recovery of errors

There is only one data center per deployment

Only Google Apps and users

It aims at the sequential operation of large files ; Read or add . It's not the low latency of storing small data DB

stay 2003 What's new in the year ? How they were SOSP Accept ?

It's not distributed 、 Partition 、 Fault tolerance these basic ideas

On a large scale

Practical experience in industry

Successful cases of weak consistency

A separate master Success stories of

Architectural Overview

client ( library ,RPC —— But not like Unix The file system is so visible )

Each file is partitioned into separate 64MB A block of data

Block server , Copy every block of data 3 Share

The data blocks of each file are distributed on multiple block servers , For parallel reading and writing ( such as ,MapReduce), And only one big file is allowed master(!),master It's also copied

Division of work :master Write the name , The data block server is responsible for writing data

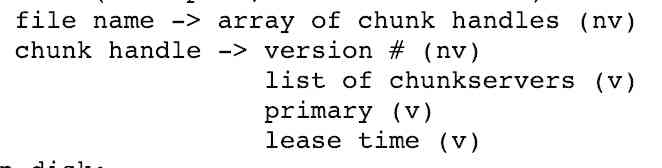

Master The state of

stay RAM in ( Speed , It should be a little bit small ):

On the hard disk :

- journal

- checkpoint

Why there are logs and checkpoints ?

Why large blocks of data ?

When the client C Want to read data , What are the steps ?

- C Send the file name and offset to master M( If there is no cache )

- M Find the block of data for this offset handle

- M Reply to the list of the latest version of the chunk server

- C cache handle And block server list

- C Send the request to the nearest chunk server , Including data blocks handle And offset

- The chunk server reads block files from the hard disk , And back to

master How to know that a chunk server contains a specified chunk ?

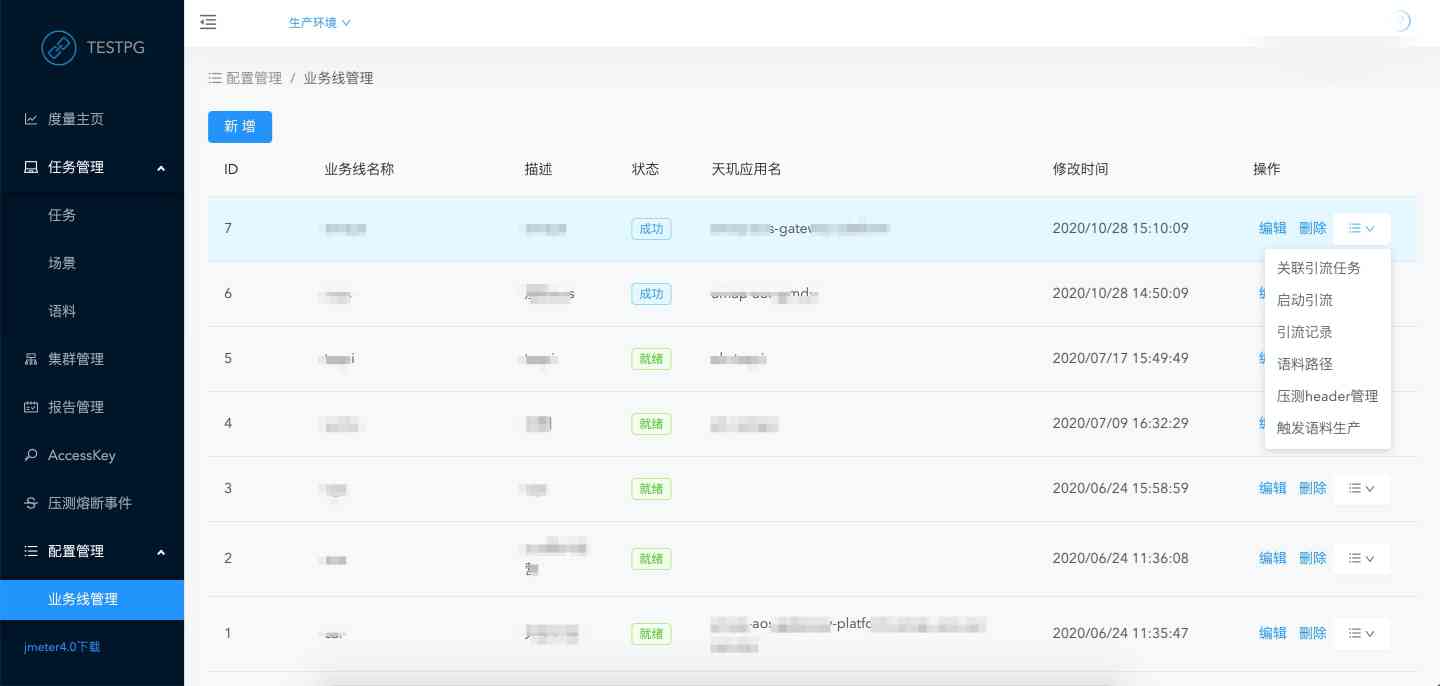

When C Want to add... To the record , What are the steps ?

The picture of the paper 2

- C inquiry M The last block of the file

- If M Found that the data block has no master record ( Or the lease has expired )

2a. If you don't write the latest version of the chunk server , error

2b. Select the primary and backup nodes from the latest version

2c. Version number increased , Write to the hard disk log

2d. Tell the master record and the backup record who they are , And the new version

2e. Copy and write a new version on the hard disk - M tell C Master record and backup record

- C Send data to all ( It's just temporary ...), And wait for

- C tell P Need to add

- P Check if the lease has expired , Whether the data block has space

- P Determine the offset ( The end of the data block )

- P Write block files ( One Linux file )

- P Tell all backup nodes the offset , Tell them they need to be appended to the block file

- P Wait for all backup nodes to reply , Timeout is an error , for example : There is not enough space on the hard disk

- P tell C ok Or error

- If something goes wrong ,C Try again from the beginning

GFS What kind of consistency assurance is provided to the client

You need to tell the app to use it in some form GFS.

It's a possibility :

If the master node tells the client that a record was successfully appended , All the readers then open the file , You can see the additional records .

( But it's not that the failed additions are invisible , Or say , All readers will see the same document content , Records in the same order )

How do we know GFS Whether these guarantees have been fulfilled ?

Look at how it handles different kinds of errors : collapse , collapse + restart , collapse + replace , Information loss , Partition .

Please think about it GFS How to guarantee key features .

* An additional client failed at the wrong time , What do I do ?

There are no inappropriate moments ?

* An append client cached an expired ( FALSE ) Master node , What do I do ?

* A read client caches a list of expired backup nodes , What do I do ?

* A master node crashes + restart , Will it lead to the loss of files ?

Or forget which chunk servers store the specified blocks ?

* Two clients append records at the same time .

Will they cover each other's records ?

* When the primary node sends an append request to all backup nodes , The master node crashes , What will happen? ?

If a backup node does not see the append request , Will it be selected as the new master node ?

* Block server S4 Store old out of date block backups , It's offline .

The primary node and other surviving backup nodes crash .

S4 resurrection ( Before the primary node and the backup node ).

master Will choose S4( Contains expired data blocks ) As the master node ?

It is appropriate to select the node with expired data as the left node , Or choose the node without backup ?

* If the backup node always fails to write , What should the master node do ?

such as , crash , There is not enough space on the hard disk , Or hard disk failure .

Should the master discard the node from the backup node list ?

Then it returns success to the client that appended the record ?

Or the master keeps sending requests , Think the requests have failed ,

Every write request to the client returns a failure ?

* If the primary node S1 Is active , Serving client requests , however master and S1 The network between them is blocked ?

“ Network partition ”.

master Will you choose a new master node ?

Will there be two master nodes ?

If the append request is sent to a master node , A read request is sent to another , Whether the guarantee of consistency has been broken ?

“ Split brain ”

* If there is a partition of the master node is processing the server side of the additional request , But its lease has expired ,master A new master node has been selected , Will this new master have the latest data processed by the previous master ?

* If master What happens to failure ?

After replacement master Before I know it master All the information about ?

such as , The version number of each data block ? Master node ? The lease expires ?

* Who judges master Whether it crashes and needs to be replaced ?

master The backup will ping master Do you ? If there is no response, replace it ?

* If the whole environment loses power , What will happen? ?

If power is restored , Restart all the machines , What will happen ?

* hypothesis master Want to create a new block backup .

Maybe there are too few backups .

If it's the last block of data in a file , Is being added .

How to ensure that the new backup has no error appending ? After all, it's not a backup node yet .

* Does a scene exist , Will break GFS Guarantee ?

for example , Append succeeded , But subsequent readers didn't see the record .

all master A backup of permanently lost state information ( Permanent hard disk error ). Maybe even earlier : There will be no answer , Not the wrong data .“ fault - stop it ”

All block servers for a block lose hard disk files . again ,“ fault - stop it ”; It's not the worst result

CPU,RAM, There is an error value in the network or hard disk . The checksums will check something , But not all

The clock is not synchronized correctly , So the lease doesn't work properly . therefore , There will be multiple master nodes , Maybe the write request is on a node , The read request is on another node .

GFS Which applications are allowed to behave irregularly ?

Will all clients see the same file content ? Can a client see a record , But other clients can't see ? Does a client read the file twice and see the same content ?

Can all clients see the same order of additional records ?

Will these nonstandard behaviors bring trouble to the application ?

such as MapReduce?

How can ability have no abnormality —— Strong consistency ?

for example , All clients see the same file content .

It's hard to give a real answer , But there are some caveats .

- The master node should detect duplicate client write requests . Or the client should not send this request .

- All backup nodes should complete each write operation , Or not at all . Or write in temporarily , Write until all nodes are determined . Write operations are performed only when all nodes agree to complete !

- If the master node crashes , Some backup nodes will error the last part of the operation . The new master node will communicate with all the backup nodes , Find out all the recent operations , And synchronize it to other backup nodes .

- Avoid clients reading from the expired previous backup node , Or all nodes have to communicate with the master , Or all backup nodes should also have leases .

You'll be experimenting 3 and 4 See these things !

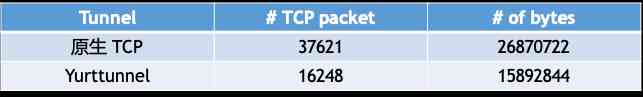

performance ( Chart 3)

Massive aggregate throughput of reads (3 Copy sets , Open )

- 16 Data servers , in total 94MB/s Or every chunk server 6MB/s, That's good ? The sequential throughput of a hard disk is about 30MB/s, A network card's about 10MB/s

- Close to network saturation ( Switch link )

- therefore : The performance of a single server is low , But it's scalable . Which one is more important ?

- form 3 It shows GFS product 500MB/s, It's very high

The writing of different files is lower than the maximum possible , The author thinks it's the influence of the Internet ( But it didn't specify )

Concurrent appending of a single file , Limited to servers that store the last chunk of data

15 It's hard to predict after new year , for example , How fast is the hard disk ?

Some questions to consider

How to support small files well ?

How to support tens of millions of files ?

GFS Can it be a widely used file system ? There are backups in different cities ? There are multiple backups in the same data center , Fault tolerance is not high !

GFS How long does it take to recover from an error ? A master node / How long does it take for the backup node to fail ?master The error? ?

GFS How to deal with slow block servers ?

And GFS Engineer's Retrospective dialogue :

The number of files is the biggest problem

- The final number is the chart 2 Of 1000 times

- stay master Can't store in the memory of

- master Garbage collection , Scan all files and data blocks , Very slowly

Thousands of customers will give master Of CPU There's a lot of pressure

Applications need to conform to GFS Semantic and restrictive ways to design

master At first, it was completely manual , need 10 About minutes

BigTable It's a solution for multiple small files ,Colossus It can be on more than one station master On the slice master data

summary

performance , Fault tolerance , Case studies of consistency , by MapReduce Application customization

Good ideas :

- Global file cluster system as an infrastructure

- Will name (master) And storage ( Block server ) Separate

- Fragmentation of parallel throughput

- Big papers / Data blocks , Reduce overhead

- The master node is used to write sequentially

- lease , In order to prevent the master node of block server from brain cracking

What's not enough :

Single master Performance of , Run out of memory and CPU

Block servers are not efficient for small files

master There is no automatic fail over

Maybe the consistency is still weak