
Baidu APP's Continuous Integration Practice Based on Pipeline as Code

2022-07-05 09:46:00 【Baidu geek said】

Full text: 8,150 words. Estimated reading time: 21 minutes.

I. Overview

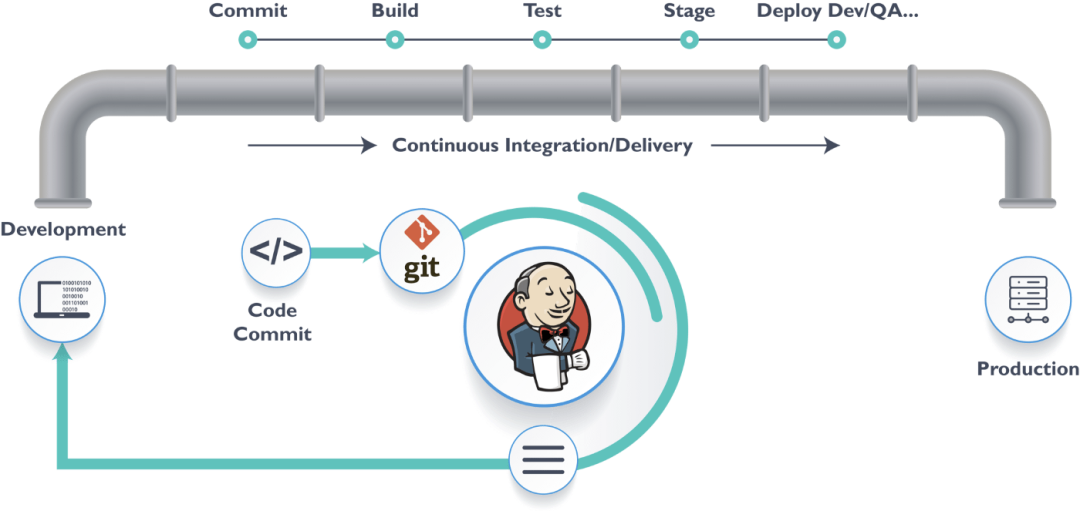

After years of DevOps construction, Baidu APP has established a standard workflow and toolset that integrates planning, development, testing, and delivery. Continuous integration (CI), one of the core DevOps processes, runs preset automation tasks by frequently integrating code into the trunk and the production environment.

CI has always been an important entry point through which Tekes, our Baidu mobile R&D platform, supports the Baidu APP R&D process. Our automated R&D processes (automatic component publishing, admission, and so on) are all built on CI, and they have supported more than 50 Baidu APP releases and the admission of more than 400,000 components and SDKs. When we exported these automated processes to other product lines, however, we ran into a problem: different product lines have customized requirements for the R&D process. For example, Baidu APP must check API and dependency changes before a platform component is published, and initiate manual approval when a change is incompatible; Haokan APP only needs to list the incompatible changes in a report after publishing. As a result, our preset pipeline templates could not be reused directly. One feasible solution is to let each product line describe, in a structured language, the set of functions or features its R&D process requires, and then generate the corresponding pipeline automatically from that description. This idea is Pipeline as Code (PaC).
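To make the idea concrete, here is a minimal Python sketch of how a declarative description might be turned into a pipeline. The key `incompatible_change_policy`, the step names, and the function `generate_pipeline` are all hypothetical illustrations, not part of Tekes.

```python
from typing import Dict, List


def generate_pipeline(description: Dict[str, str]) -> List[str]:
    """Turn a product line's declarative description into an ordered step list.

    All keys and step names are hypothetical; they only illustrate the idea.
    """
    steps = ["checkout", "build", "check_api_and_dependency_changes"]
    policy = description.get("incompatible_change_policy", "report")
    if policy == "approve_before_publish":
        # Approval-gated flow: publishing waits for a human decision.
        steps += ["manual_approval_if_incompatible", "publish"]
    elif policy == "report":
        # Report-only flow: publish first, then list incompatible changes.
        steps += ["publish", "report_incompatible_changes"]
    else:
        raise ValueError(f"unknown policy: {policy!r}")
    return steps


gated = generate_pipeline({"incompatible_change_policy": "approve_before_publish"})
report_only = generate_pipeline({"incompatible_change_policy": "report"})
print(gated)
print(report_only)
```

The two product lines share one generator and differ only in the description they supply, which is the property that preset pipeline templates lack.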

II. Pipeline as Code

Pipeline as Code is part of the broader “as Code” movement. GitLab's website explains it as follows:

Pipeline as code is a practice of defining deployment pipelines through source code, such as Git. Pipeline as code is part of a larger “as code” movement that includes infrastructure as code. Teams can configure builds, tests, and deployment in code that is trackable and stored in a centralized source repository. Teams can use a declarative YAML approach or a vendor-specific programming language, such as Jenkins and Groovy, but the premise remains the same.

When “as Code” is mentioned, Infrastructure as Code (IaC) is probably what first comes to mind. IaC describes infrastructure, resources, and environments in code using a DSL (Domain-Specific Language). An Ansible playbook, for example, is a YAML-based DSL. A simple playbook that performs a standardized Xcode installation on macOS looks like this:

    # playbook
    - name: Install Xcode
      block:
        - name: check that the xcode archive is valid
          # shell (not command) is required here because the line uses a pipe
          shell: >
            pkgutil --check-signature {{ xcode_xip_location }} |
            grep "Status: signed Apple Software"
        - name: Clean up existing Xcode installation
          file:
            path: /Applications/Xcode.app
            state: absent
        - name: Install Xcode from XIP file Location
          command: xip --expand {{ xcode_xip_location }}
          args:
            chdir: /Applications
          poll: 5
          async: "{{ xcode_xip_extraction_timeout }}" # Prevent SSH connections timing out waiting for extraction
        - name: Accept License Agreement
          command: "{{ xcode_build }} -license accept"
          become: true
        - name: Run Xcode first launch
          command: "{{ xcode_build }} -runFirstLaunch"
          become: true
          when: xcode_major_version | int >= 13
      when: not xcode_installed or xcode_installed_version is version(xcode_target_version, '!=')

Like Ansible, we use a similar scheme: we encode our pipelines in a DSL and put them under version control. Besides solving the problem of differing configurations across product lines, this has several other advantages:

- The product line team only needs to focus on the current version of the pipeline DSL, which makes it easy for team members to maintain and upgrade it together;
- The environment configuration of the pipeline is itself part of the DSL, which eliminates pipeline environments made unique by messy configuration;
- DSL code snippets are easy to copy and link, so CI scripts can be componentized and configured as DSL units.

Before introducing our solution, we first look at two representative solutions in the industry: Jenkins Pipeline and GitHub Actions.

Jenkins Pipeline is a Groovy DSL syntax introduced in Jenkins 2.0. It lets tasks that previously ran independently on multiple jobs or multiple nodes be managed and maintained as code.

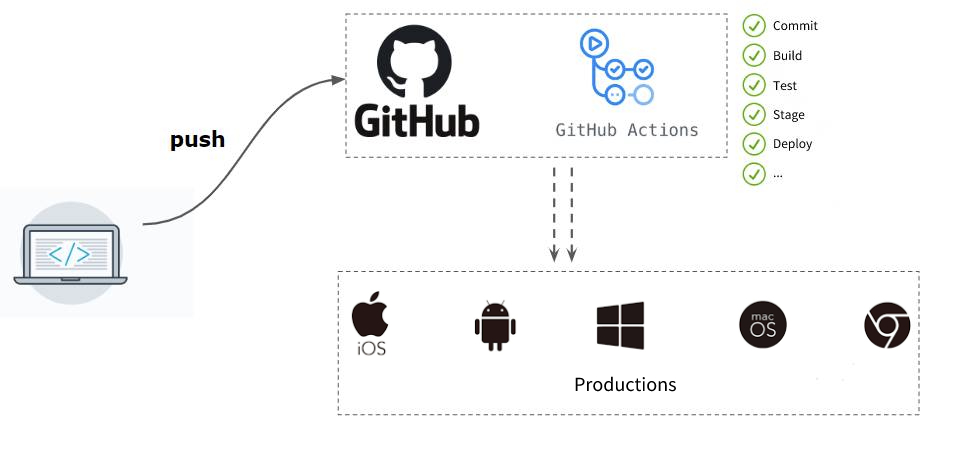

GitHub Actions is an automation service launched by GitHub. A workflow is created by adding a YAML-based DSL file to a repository; when an event is triggered in the repository, the workflow runs.

Let's use a simple Xcode project build to compare the two DSL syntaxes. The build has three steps:

- Checkout: pull the source code from the Git server
- Build: run the xcodebuild command
- UseMyLibrary: call a custom script method

The Jenkins Pipeline DSL is as follows:

    // Jenkinsfile (Declarative Pipeline)
    @Library('my-library') _

    pipeline {
        agent {
            node {
                label 'MACOS'
            }
        }
        stages {
            stage('Checkout') {
                steps {
                    checkout scm
                }
            }
            stage('Build') {
                steps {
                    sh 'xcodebuild -workspace projectname.xcworkspace -scheme schemename -destination generic/platform=iOS'
                }
            }
            stage('UseMyLibrary') {
                steps {
                    myCustomFunc 'Hello world'
                }
            }
        }
    }

The GitHub Actions DSL is as follows:

    # .github/workflows/ios.yml
    name: iOS workflow
    on: [push]
    jobs:
      build:
        runs-on: macos-latest
        steps:
          - name: Checkout
            uses: actions/[email protected]
          - name: Build
            run: xcodebuild -workspace projectname.xcworkspace -scheme schemename -destination generic/platform=iOS
          - name: UseMyLibrary
            uses: my-library/[email protected]
            with:
              args: Hello world

Both DSLs are clear and concise, and much of their syntax can even be converted from one to the other. In practice, the DSL is not the primary basis for choosing a PaC solution; the choice depends on which continuous integration system the business uses.

Jenkins is a fully self-managed continuous integration system, and defining pipelines with Groovy scripts gives it great flexibility. GitHub Actions is tightly integrated with GitHub and can be used out of the box; its YAML workflows follow a componentized design with a clear structure and simple syntax. Each has its own strengths. The continuous integration system our business uses is iPipe, developed in-house at Baidu. Our first principle in practicing PaC is to use the company's infrastructure and avoid reinventing the wheel, so we adopted a new scheme and system of our own: Tekes Actions.
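As a rough illustration of how mechanical such a conversion can be, the following Python sketch renders a list of workflow-style steps as a Jenkins declarative pipeline. The function `to_jenkinsfile` and the action name `my-library/my-custom-action` are hypothetical, and the sketch deliberately translates only shell (`run`) steps; reusable-action (`uses`) steps have no one-to-one equivalent and are left as comments.

```python
from typing import Dict, List


def to_jenkinsfile(label: str, steps: List[Dict[str, str]]) -> str:
    """Render workflow-style steps as Jenkins declarative pipeline text.

    Simplification: commands containing single quotes are not escaped.
    """
    lines = [
        "pipeline {",
        f"    agent {{ label '{label}' }}",
        "    stages {",
    ]
    for step in steps:
        lines.append(f"        stage('{step['name']}') {{")
        lines.append("            steps {")
        if "run" in step:
            lines.append(f"                sh '{step['run']}'")
        else:
            # No direct equivalent; a shared-library function would be needed.
            lines.append(f"                // TODO: map action {step['uses']}")
        lines.append("            }")
        lines.append("        }")
    lines += ["    }", "}"]
    return "\n".join(lines)


jenkinsfile = to_jenkinsfile(
    "MACOS",
    [
        {"name": "Build", "run": "xcodebuild -version"},
        {"name": "UseMyLibrary", "uses": "my-library/my-custom-action"},
    ],
)
print(jenkinsfile)
```

The part that does not translate mechanically, the reusable action, is exactly where the two systems differ most.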

III. Tekes Actions

As the name suggests, Tekes Actions is modeled on GitHub Actions, and its DSL syntax largely mirrors that of GitHub Actions. For example, the DSL for the Baidu APP component publishing process is as follows:

    # baiduapp/ios/publish.yml
    name: 'iOS Module Publish Workflow'
    author: 'zhuyusong'
    description: 'iOS component release process'
    on:
      events:
        topic_merge:
          branches: ['master', 'release/**']
          repositories: ['baidu/baidu-app/*', 'baidu/third-party/*']
    jobs:
      publish:
        name: 'publish modules using Easybox'
        runs-on: macos-latest
        steps:
          - name: 'Checkout'
            uses: actions/[email protected]
          - name: 'Setup Easybox'
            uses: actions/[email protected]
            with:
              is_public_storage: true
          - name: 'Build Task use Easybox'
            uses: actions/[email protected]
            with:
              component_check: true
              quality_check: true
          - name: 'Publish Task use Easybox'
            uses: actions/[email protected]
          - name: 'Access Task use Easybox'
            uses: actions/[email protected]

As mentioned in the previous section, the DSL itself is not the key point of PaC. We chose the GitHub Actions DSL mainly because its componentized workflow fits well with our Tekes platform and the company's continuous integration system.

Let's start with the workflow as described in the official GitHub Actions documentation, illustrated in the diagram below:

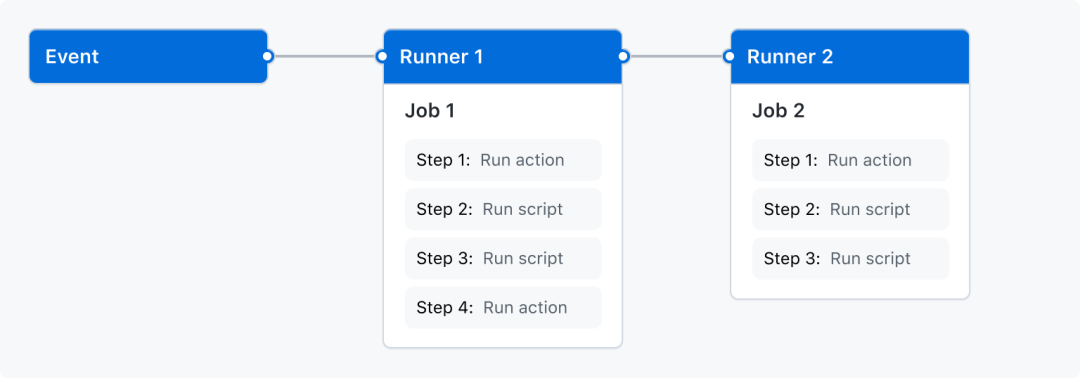

A workflow contains one or more jobs, is triggered by events, and runs on GitHub-hosted or self-hosted runners. The core components are:

1. Job: each job consists of a set of steps. Each step is a single task that runs either an action or a shell command. An action can be thought of as a packaged, standalone script; you can write your own or reference a third-party one.

2. Event: an event is a specific activity that triggers a workflow, such as pushing code, creating a branch, or opening an issue. GitHub natively provides a very rich set of event types that serve as trigger sources for GitHub Actions.

3. Runner: a runner is a service that runs triggered workflows. GitHub provides runners in Linux, Windows, and macOS virtual machine environments, and you can also create self-hosted runners that run in a custom environment.
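The relationship between these components can be sketched as a small data model. The Python classes below are an illustrative assumption about how a parsed workflow might be represented in memory; the class and field names are ours, not an API of GitHub Actions or Tekes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Step:
    name: str
    uses: Optional[str] = None  # reference to a packaged action
    run: Optional[str] = None   # or an inline shell command
    with_: Dict[str, object] = field(default_factory=dict)  # action inputs

    def __post_init__(self) -> None:
        # A step is a single task: exactly one of `uses` or `run`.
        if (self.uses is None) == (self.run is None):
            raise ValueError(f"step {self.name!r} needs exactly one of uses/run")


@dataclass
class Job:
    name: str
    runs_on: str        # which kind of runner executes the job
    steps: List[Step]


@dataclass
class Workflow:
    name: str
    on: List[str]       # events that trigger the workflow
    jobs: Dict[str, Job]


workflow = Workflow(
    name="iOS workflow",
    on=["push"],
    jobs={
        "build": Job(
            name="build",
            runs_on="macos-latest",
            steps=[
                Step(name="Checkout", uses="actions/checkout"),
                Step(name="Build", run="xcodebuild -version"),
            ],
        )
    },
)
print([step.name for step in workflow.jobs["build"].steps])
```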

The action is the core, basic element of a workflow. It is this reusable, extensible design that has brought such vitality to the GitHub Actions ecosystem. GitHub not only provides many official actions but has also built an action marketplace where you can search for all kinds of third-party actions, which makes implementing a workflow very easy.

Tekes Actions has its own design for each of the three components: jobs, events, and runners.

For jobs, Tekes adopts the reusable, extensible idea behind actions. We decomposed the CI scripts we have built over the years into official Tekes actions that a product line can plug into its own R&D process to build a customized pipeline. To open up CI capabilities, we also set up an open action artifact repository where roles responsible for componentization, quality, performance, and so on can upload their own actions and build the Tekes ecosystem together.

For events, Tekes abstracted its own event types while building its mobile DevOps services, and we use these as the trigger sources for Tekes Actions workflows. Because our events do not map one-to-one to repositories, we also designed a product line process orchestration service and an event processing service. The former helps product lines manage their workflow DSL files; the latter determines which events should trigger which workflow of which product line.

For runners, we fully implemented a runner that interprets and executes the workflow DSL, including listening for trigger events, scheduling jobs, downloading actions, executing scripts, and uploading logs. It also supports local invocation through CLI commands, so pipeline developers can debug in their own local workspace. Remote runners run in our virtual machine cluster and are scheduled through iPipe Agent when a workflow is triggered.

iPipe Agent is a proxy service built on iPipe. It can schedule directly onto our virtual machine cluster and allocate a brand-new virtual machine with the specified operating system and runtime.

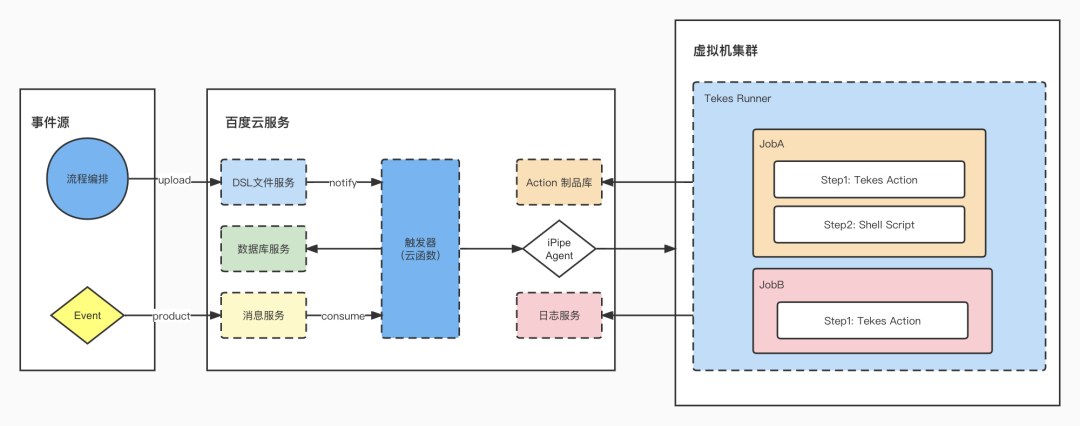

The overall engineering architecture of Tekes Actions is shown in the accompanying figure.

In engineering terms, Tekes Actions is a serverless service built entirely on Baidu Cloud. The core event processing service is simply a cloud function, which handles two event sources:

1. Process orchestration. When a product line creates or updates a YAML file, the file is uploaded to that product line's DSL file service. The DSL file service notifies the cloud function of the file creation or update event, and the cloud function adds or updates the trigger rules stored in the database service;

2. Events. For example, composite events generated by DevOps services are published to a specific topic in the message service. The cloud function subscribes to this topic to receive the events, matches them against the workflow trigger rules of each product line stored in the database service, and schedules a runner when a match succeeds.
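The matching step can be sketched in a few lines of Python. This is only an assumption about how such matching could work: the `TriggerRule` structure, the event field names (`type`, `branch`, `repository`), and the use of `fnmatch`-style globbing are ours; the article does not describe the actual matching semantics. The rule values are taken from the publishing workflow shown earlier.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Dict, List


@dataclass
class TriggerRule:
    workflow: str            # path of the workflow DSL file
    event: str               # event type, e.g. "topic_merge"
    branches: List[str]      # glob patterns
    repositories: List[str]  # glob patterns


def matches(rule: TriggerRule, event: Dict[str, str]) -> bool:
    # Note: fnmatch's "*" also matches "/", which is looser than path globbing.
    return (
        rule.event == event["type"]
        and any(fnmatchcase(event["branch"], p) for p in rule.branches)
        and any(fnmatchcase(event["repository"], p) for p in rule.repositories)
    )


def workflows_to_trigger(rules: List[TriggerRule], event: Dict[str, str]) -> List[str]:
    """Return the workflows whose stored trigger rules match the incoming event."""
    return [rule.workflow for rule in rules if matches(rule, event)]


rules = [
    TriggerRule(
        workflow="baiduapp/ios/publish.yml",
        event="topic_merge",
        branches=["master", "release/**"],
        repositories=["baidu/baidu-app/*", "baidu/third-party/*"],
    )
]
merged = {"type": "topic_merge", "branch": "release/13.0", "repository": "baidu/baidu-app/search"}
ignored = {"type": "topic_merge", "branch": "feature/x", "repository": "baidu/baidu-app/search"}
print(workflows_to_trigger(rules, merged))
print(workflows_to_trigger(rules, ignored))
```

In the architecture described above, the returned list would decide which runners the cloud function schedules.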

IV. Tekes Runner

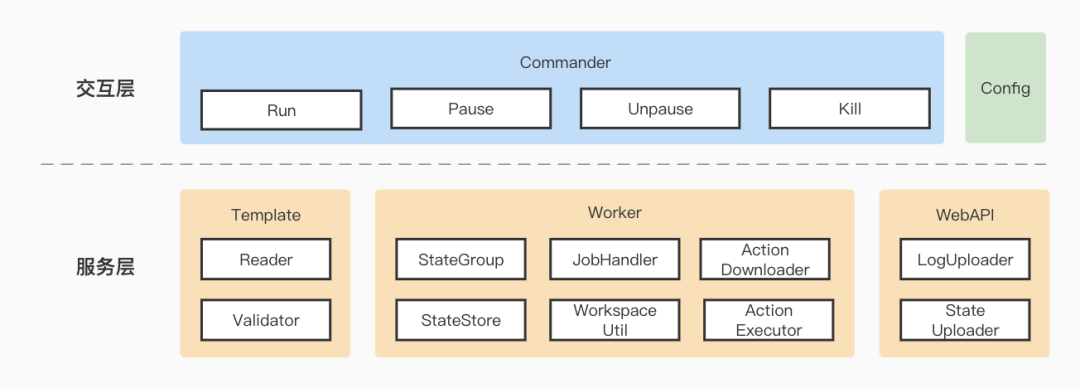

Tekes Runner is the tool that runs Tekes Actions workflows. Its architecture is shown in the accompanying figure.

The service layer of Tekes Runner consists of three modules: Template, Worker, and WebAPI. They are responsible for reading and validating DSL files, managing workflows, and communicating with back-end services, respectively. The WebAPI module can be turned off in the configuration file.

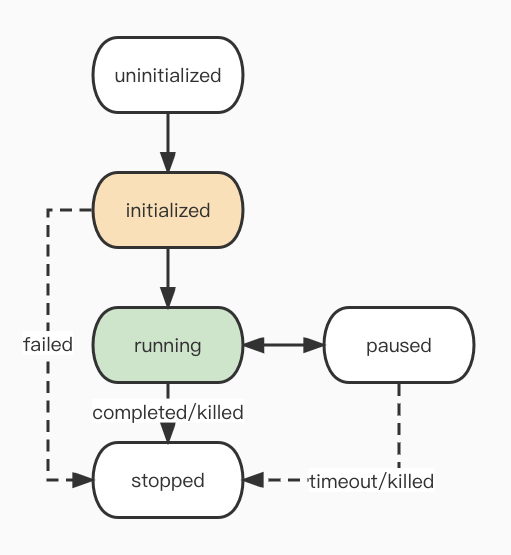

The command module in the interaction layer of Tekes Runner provides four CLI commands: Run, Pause, Unpause, and Kill, all of which manage the workflow life cycle. The life cycle is shown in the accompanying figure.

When the Run command is executed, Tekes Runner first initializes the Tekes Actions workflow according to the configuration: it reads the corresponding workflow YAML, downloads and verifies the actions it depends on, creates a workspace, and so on. On success the workflow enters the initialized state.

Next, the runner creates and runs a set of state machines from the parsed Workflow object. Each job corresponds to a simple finite state machine (FSM). At this point the workflow enters the running state.

The current state of a state machine is the stage currently being executed, and the event that triggers a state transition is the result of running a script.

When every state machine in the set has reached an end state (whether successfully or not), the workflow ends and enters the stopped state. The workflow also enters the stopped state if it times out or receives an external Kill command.
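The life cycle described above can be sketched as follows. This is a minimal illustration under our own assumptions, not the Tekes Runner implementation: the names `JobState`, `JobStateMachine`, and `run_workflow` are hypothetical, each step is modeled as a callable returning success or failure, and timeouts and the Kill command are omitted.

```python
from enum import Enum
from typing import Callable, List, Tuple


class JobState(Enum):
    PENDING = "pending"
    RUNNING = "running"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


class JobStateMachine:
    """One finite state machine per job; `index` is the stage being executed."""

    def __init__(self, steps: List[Tuple[str, Callable[[], bool]]]) -> None:
        self.steps = steps
        self.index = 0
        self.state = JobState.PENDING

    @property
    def finished(self) -> bool:
        return self.state in (JobState.SUCCEEDED, JobState.FAILED)

    def advance(self) -> None:
        """Run the current stage; the script result drives the transition."""
        if self.finished:
            return
        if self.index == len(self.steps):
            self.state = JobState.SUCCEEDED
            return
        self.state = JobState.RUNNING
        _, script = self.steps[self.index]
        if script():
            self.index += 1
        else:
            self.state = JobState.FAILED


def run_workflow(machines: List[JobStateMachine]) -> str:
    """The workflow is running until every machine reaches an end state."""
    while not all(m.finished for m in machines):
        for m in machines:
            m.advance()
    return "stopped"


ok_job = JobStateMachine([("checkout", lambda: True), ("build", lambda: True)])
bad_job = JobStateMachine([("checkout", lambda: True), ("build", lambda: False), ("publish", lambda: True)])
final_state = run_workflow([ok_job, bad_job])
print(final_state, ok_job.state, bad_job.state)
```

Note that the workflow reaches the stopped state even though one job failed, matching the rule that an end state counts whether or not it is a successful one.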

We have now implemented a simple runner that can run scripts locally, but a few details need further explanation:

Detail 1. How Action and Runner interact

Interaction between an Action and the Runner is very common: for example, an Action reads its inputs from the Runner and writes its outputs back, or notifies the Runner of its execution result.

There are three ways for an Action and the Runner to interact:

- Environment variables: the Runner writes the parameters it needs to pass to the Action into environment variables, typically the inputs the Action requires and some context;
- Workspace files: the Runner places the files it needs to pass to the Action in a specific folder in the workspace, typically intermediate artifacts the Action requires;
- Action output: while executing an Action, the Runner monitors what the Action prints. The Runner and the Action agree on a set of print statements carrying special command identifiers; when the Runner sees such a statement, it parses it and executes the preset command, such as setting an output, printing a log, or uploading an artifact.
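The third channel can be sketched as a small parser. The article does not specify the command syntax Tekes uses, so the `::command key=value::payload` form below is an assumption modeled on the style of GitHub Actions workflow commands, and the function names are hypothetical.

```python
import re
from typing import Dict, List, Optional, Tuple

# Assumed format: ::<command> <key>=<value>,...::<payload>
COMMAND_RE = re.compile(r"^::(?P<command>[\w-]+)(?: (?P<params>[^:]*))?::(?P<value>.*)$")


def parse_line(line: str) -> Optional[Tuple[str, Dict[str, str], str]]:
    """Return (command, params, payload) for a command line, else None."""
    m = COMMAND_RE.match(line.strip())
    if m is None:
        return None
    params: Dict[str, str] = {}
    for pair in (m.group("params") or "").split(","):
        if "=" in pair:
            key, val = pair.split("=", 1)
            params[key.strip()] = val.strip()
    return m.group("command"), params, m.group("value")


def collect(stdout_lines: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Split an action's printed lines into declared outputs and plain log lines."""
    outputs: Dict[str, str] = {}
    logs: List[str] = []
    for line in stdout_lines:
        parsed = parse_line(line)
        if parsed and parsed[0] == "set-output" and "name" in parsed[1]:
            outputs[parsed[1]["name"]] = parsed[2]
        else:
            logs.append(line)
    return outputs, logs


outputs, logs = collect([
    "Compiling sources...",
    "::set-output name=ipa_path::build/App.ipa",
])
print(outputs, logs)
```

Because the protocol is just printed text, an Action can be written in any language that can write to standard output.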

Detail 2. The role of Pause/Unpause

A workflow sometimes unavoidably includes long-waiting tasks such as manual approval or code review; for example, admitting a component into a product line requires approval from the product line owner. If the workflow were not paused, an Action would have to keep blocking a thread or polling, which is undoubtedly a huge waste of resources.

When the Pause command is executed, the Runner persists the current context and ends the process. Conversely, when the Unpause command is executed, the Runner restores the context from persistent storage and continues running the current stage.
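A minimal sketch of this persist-and-resume pattern, assuming the context is a JSON-serializable dictionary stored in a local file. The function names, the context fields, and the file location are hypothetical; a real runner would also exit its process after pausing.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict


def pause(context: Dict[str, Any], state_file: Path) -> None:
    """Persist the current context; the runner process can then end."""
    state_file.write_text(json.dumps(context), encoding="utf-8")


def unpause(state_file: Path) -> Dict[str, Any]:
    """Restore the persisted context so the current stage can continue."""
    return json.loads(state_file.read_text(encoding="utf-8"))


with tempfile.TemporaryDirectory() as tmp:
    state_file = Path(tmp) / "context.json"
    pause({"job": "publish", "step_index": 3, "outputs": {"approval": "pending"}}, state_file)
    restored = unpause(state_file)

print(restored)
```

No thread or virtual machine has to stay alive between the two calls, which is the resource saving the section describes.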

Detail 3. The role of WebAPI

In most cases, the Runner executes in a remote virtual machine that is scheduled by iPipe Agent and reclaimed after the workflow finishes. A mechanism is therefore needed to save the local logs and the persisted context to a service, and this is the role of WebAPI.

Looking back at the engineering architecture diagram of Tekes Actions, the log service is what stores workflow logs and context. Other downstream services can also query workflow execution status and detailed logs through our log service.

V. Conclusion

Pipeline as Code is an efficient way to manage pipelines and a new trend as CI/CD evolves into DevOps. PaC gives the whole pipeline far greater flexibility, and it brings beneficial changes to how teams communicate and collaborate around building pipelines.

Building PaC well requires some prerequisites, including a cloud-native platform and continuous integration tools. Tekes builds on the company's strong infrastructure and its own rich continuous integration practice, and draws on mature solutions in the industry; we stand on the shoulders of giants. We hope this article offers some useful reference for solving your own continuous integration problems.

References

[1] Continuous integration (Wikipedia)

https://zh.wikipedia.org/zh-sg/%E6%8C%81%E7%BA%8C%E6%95%B4%E5%90%88

[2] What is CI/CD

https://www.redhat.com/zh/topics/devops/what-is-ci-cd

[3] What is pipeline as code

https://about.gitlab.com/topics/ci-cd/pipeline-as-code/

[4] Pipeline as Code

https://www.jenkins.io/doc/book/pipeline-as-code/

[5] Pipeline as Code (Thoughtworks Insights)

https://insights.thoughtworks.cn/pipeline-as-code/

[6] Interpreting infrastructure as code

https://insights.thoughtworks.cn/nfrastructure-as-code/

[7] Ansible authoritative guide

https://ansible-tran.readthedocs.io/en/latest/docs/playbooks_intro.html

[8] How to Create a Jenkins Shared Library

https://www.tutorialworks.com/jenkins-shared-library/

[9] Migrating from Jenkins to GitHub Actions

https://docs.github.com/cn/actions/migrating-to-github-actions/migrating-from-jenkins-to-github-actions

[10] Compare and contrast GitHub Actions and Azure Pipelines

https://docs.microsoft.com/en-us/dotnet/architecture/devops-for-aspnet-developers/actions-vs-pipelines


边栏推荐

- Why do offline stores need cashier software?

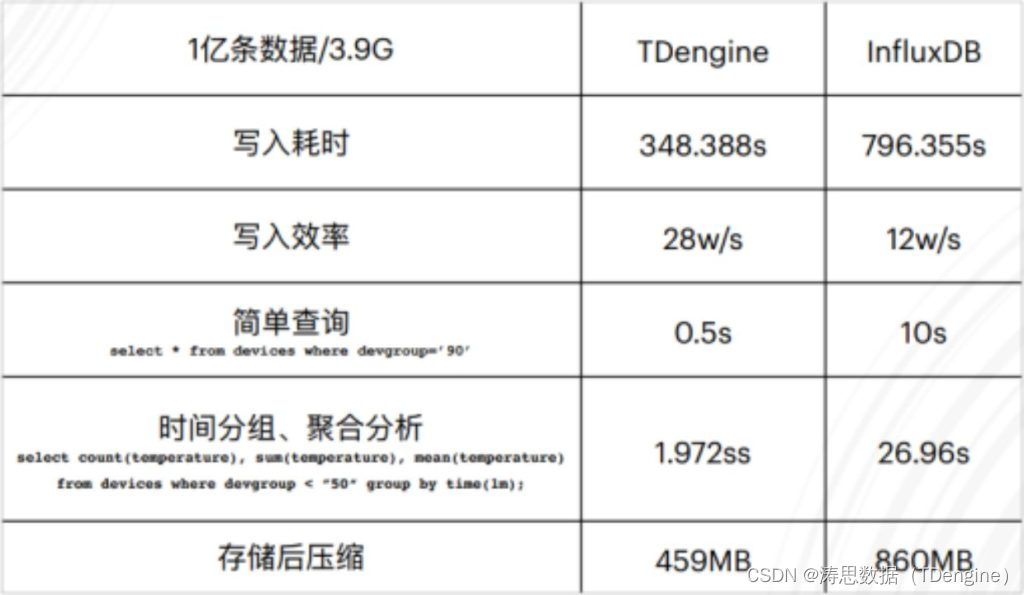

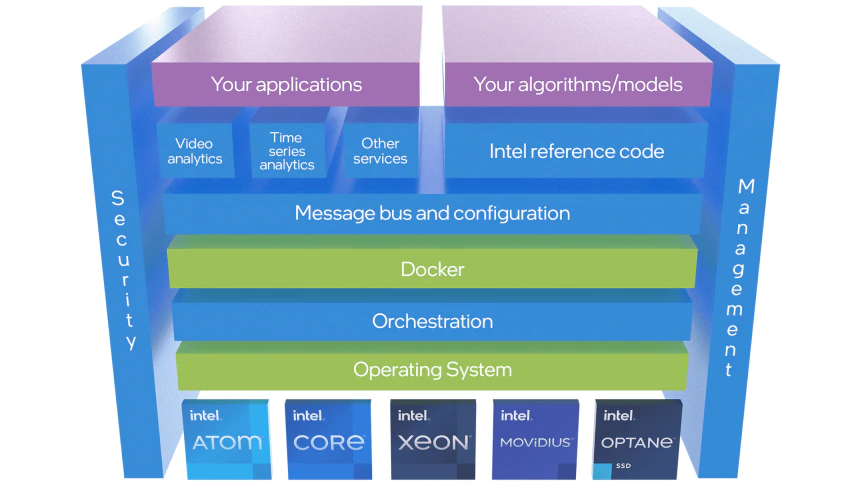

- Tdengine already supports the industrial Intel edge insight package

- [ctfhub] Title cookie:hello guest only admin can get flag. (cookie spoofing, authentication, forgery)

- [object array A and object array B take out different elements of ID and assign them to the new array]

- Node の MongoDB Driver

- Kotlin introductory notes (VIII) collection and traversal

- Three-level distribution is becoming more and more popular. How should businesses choose the appropriate three-level distribution system?

- A keepalived high availability accident made me learn it again

- 搞数据库是不是越老越吃香?

- LeetCode 31. 下一个排列

猜你喜欢

idea用debug调试出现com.intellij.rt.debugger.agent.CaptureAgent,导致无法进行调试

How do enterprises choose the appropriate three-level distribution system?

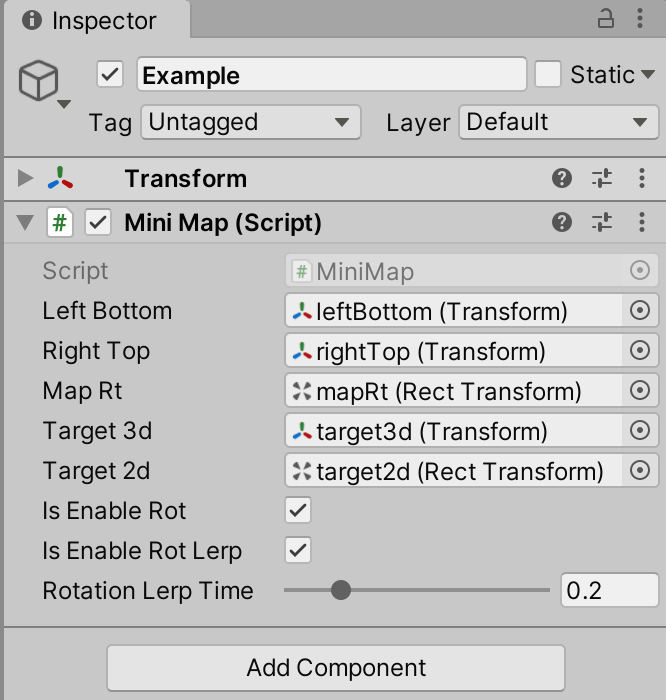

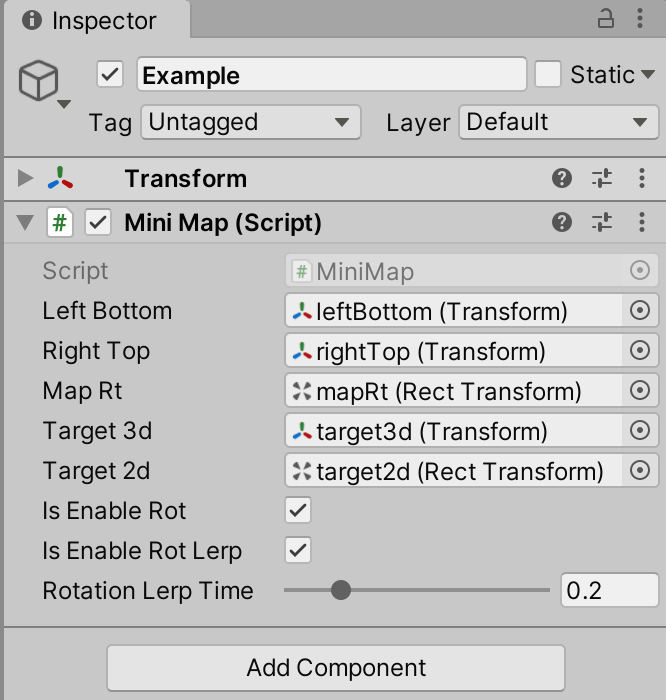

Unity skframework framework (XXIII), minimap small map tool

观测云与 TDengine 达成深度合作,优化企业上云体验

mysql安装配置以及创建数据库和表

Unity SKFramework框架(二十三)、MiniMap 小地图工具

Tdengine already supports the industrial Intel edge insight package

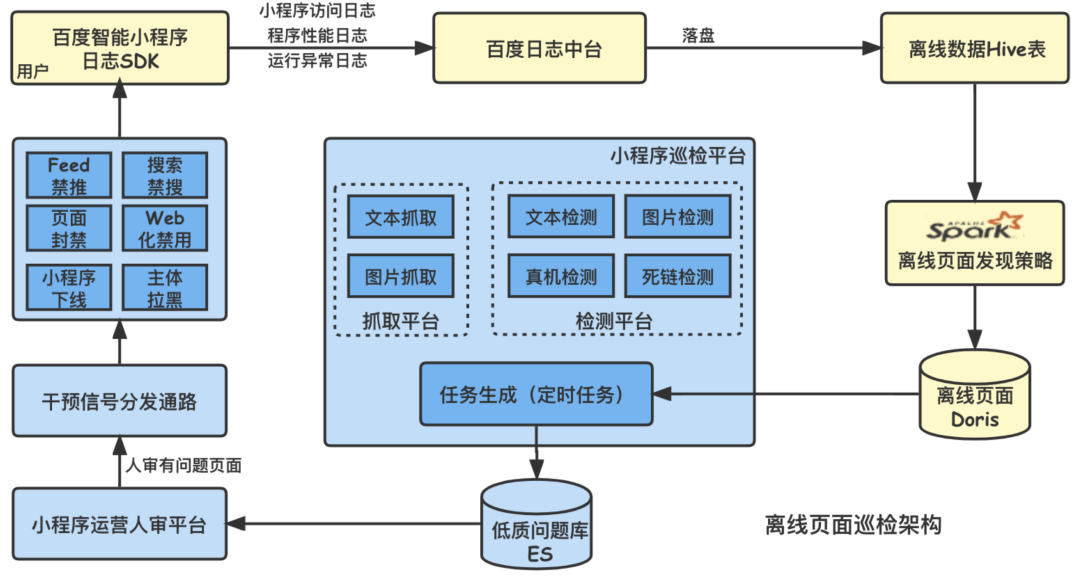

Evolution of Baidu intelligent applet patrol scheduling scheme

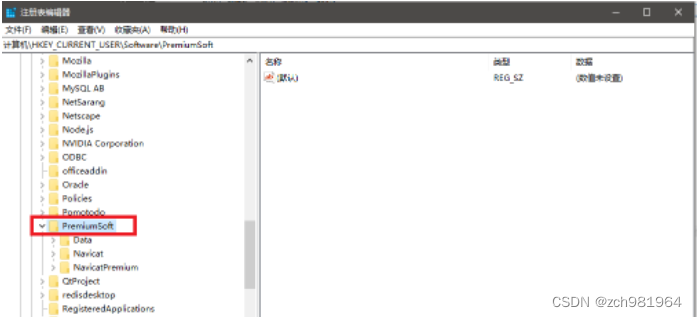

Solve the problem of no all pattern found during Navicat activation and registration

百度交易中台之钱包系统架构浅析

随机推荐

干货整理!ERP在制造业的发展趋势如何,看这一篇就够了

Idea debugs com intellij. rt.debugger. agent. Captureagent, which makes debugging impossible

LeetCode 556. 下一个更大元素 III

Node の MongoDB Driver

Dry goods sorting! How about the development trend of ERP in the manufacturing industry? It's enough to read this article

Android privacy sandbox developer preview 3: privacy, security and personalized experience

Android 隐私沙盒开发者预览版 3: 隐私安全和个性化体验全都要

[sorting of object array]

From "chemist" to developer, from Oracle to tdengine, two important choices in my life

Go 语言使用 MySQL 的常见故障分析和应对方法

[sourcetree configure SSH and use]

LeetCode 31. 下一个排列

百度智能小程序巡檢調度方案演進之路

【两个对象合并成一个对象】

[object array A and object array B take out different elements of ID and assign them to the new array]

Develop and implement movie recommendation applet based on wechat cloud

OpenGL - Coordinate Systems

Oracle combines multiple rows of data into one row of data

Kotlin introductory notes (III) kotlin program logic control (if, when)

tongweb设置gzip