[Flink] A pitfall with dynamic Kerberos authentication in Flink

2022-06-10 12:51:00 【Ninth senior brother】

1. Overview

Reposted with additions from: Flink pitfalls: dynamic Kerberos authentication

2. Official documentation

This section is excerpted from the official documentation: Kerberos Authentication Setup and Configuration

Configuration: Kerberos-based Authentication / Authorization

Flink provides first-class support through the Kafka connector for authenticating to a Kafka installation configured for Kerberos. Simply configure Flink in flink-conf.yaml to enable Kerberos authentication for Kafka like so:

- Configure Kerberos credentials by setting the following:

  - security.kerberos.login.use-ticket-cache: By default, this is true, and Flink will attempt to use Kerberos credentials from the ticket cache managed by kinit. Note that when the Kafka connector is used in Flink jobs deployed on YARN, Kerberos authorization using the ticket cache will not work. The same holds for Mesos deployments, because Mesos does not support authorization using the ticket cache.

  - security.kerberos.login.keytab and security.kerberos.login.principal: To use Kerberos keytabs instead, set values for both of these properties.

- Append KafkaClient to security.kerberos.login.contexts: This tells Flink to provide the configured Kerberos credentials to the Kafka login context for Kafka authentication.

Once Kerberos-based Flink security is enabled, you can authenticate to Kafka with either the Flink Kafka Consumer or Producer by including the following two settings in the properties configuration that is passed to the internal Kafka client:

- Set security.protocol to SASL_PLAINTEXT (default NONE): the protocol used to communicate with the Kafka brokers. With a standalone Flink deployment you can also use SASL_SSL; in that case the Kafka client must additionally be configured for SSL.

- Set sasl.kerberos.service.name to kafka (default kafka): this value must match the sasl.kerberos.service.name configured on the Kafka brokers. A service name mismatch between the client and server configurations will cause authentication to fail.
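As a minimal sketch of the two client-side settings above, the properties handed to the Kafka consumer or producer could be built as follows. Only java.util.Properties is used; the class name, the method name and the broker address broker1:9092 are hypothetical and not part of the original article.

```java
import java.util.Properties;

public class KerberosKafkaProps {

    /** Builds Kafka client properties carrying the two Kerberos-related settings. */
    public static Properties kerberosKafkaProperties(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Protocol used to talk to the brokers (SASL_SSL is the encrypted alternative).
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        // Must match sasl.kerberos.service.name configured on the brokers.
        props.setProperty("sasl.kerberos.service.name", "kafka");
        return props;
    }

    public static void main(String[] args) {
        // "broker1:9092" is a hypothetical address used only for illustration.
        Properties props = kerberosKafkaProperties("broker1:9092");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

The resulting Properties object would then be passed to the Flink Kafka Consumer or Producer in place of a plain properties configuration.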

For more information on Flink configuration for Kerberos security, see [here]({{< ref "docs/deployment/config" >}}). You can also find further details on how Flink internally sets up Kerberos-based security [here]({{< ref "docs/deployment/security/security-kerberos" >}}).

2. Kerberos authentication

2.1 Method 1 (YARN only)

In YARN mode, it is possible to deploy a secure Flink cluster without a keytab, using only the ticket cache (as managed by kinit). This avoids the complexity of generating a keytab and avoids entrusting it to the cluster manager. In this scenario, the Flink CLI acquires Hadoop delegation tokens (for HDFS, HBase and so on). The main drawback is that the cluster is necessarily short-lived, because the generated delegation tokens expire (typically within a week).

Steps to run a secure Flink cluster using kinit:

- Log in using the kinit command.

- Deploy the Flink cluster as normal.

Note: the TGT has a validity period and cannot be used once it expires, so this method is not suitable for long-running jobs.

2.2 Method 2

Steps to run a secure Flink cluster in native Kubernetes, YARN and Mesos mode:

1. Add the security-related configuration options to the Flink configuration file on the client.

security.kerberos.login.keytab: /kerberos/flink.keytab

security.kerberos.login.principal: flink

security.kerberos.login.contexts: Client

security.kerberos.login.use-ticket-cache: true

Note: the principal above carries no host name. With a host name, the configuration might look like this:

security.kerberos.login.use-ticket-cache: true

security.kerberos.login.keytab: /home/xx/xx.keytab

# TODO: the HDFS configuration is needed here

security.kerberos.login.principal: mr/[email protected] domain name .COM

security.kerberos.login.contexts: KafkaClient,Client

security.kerberos.login.use-ticket-cache: true

This kind of authentication runs into a problem, however. If you submit the job from node1, Flink looks for /home/xx/xx.keytab on node1 and uses it if it is there. But the job then runs on the cluster, which is distributed. The host-specific principal you passed in only matches node1's keytab, while the keytabs on node2 and node3 are bound to node2 and node3 respectively. Authenticating with node1's principal on those nodes fails because the principal cannot be found in their keytabs.

The following error is reported: 【kafka】kerberos client is being asked for a password not available to garner authentication informa

There are several possible solutions:

- Generate a principal that does not contain a host name, so that it is usable on all hosts.

- Make the /home/xx/xx.keytab file at the same fixed path on every host contain the principals of all hosts. They can be added with the following commands:

ktadd -k /home/mr/mr.keytab mr/[email protected] domain name .COM

ktadd -k /home/mr/mr.keytab mr/[email protected] domain name .COM

ktadd -k /home/mr/mr.keytab mr/[email protected] domain name .COM

- Generate a globally usable keytab, which amounts to the same thing as option 2.

The other team never generated one, though, which was infuriating. People online also recommended generating a global keytab.

Then I found another solution.

Solution

In the end, we do not use their keytab and JAAS file directly. Instead, we make our own copy of their JAAS file.

The original:

[[email protected] flink]# cat /etc/zdh/kafka/conf.zdh.kafka/jaas.conf

KafkaServer {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/home/mr/mr.keytab" // This file is different on each server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};

KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/home/mr/mr.keytab" // This file is different on each server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/home/mr/mr.keytab" // This file is different on each server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};

[[email protected] f

We took the /home/mr/mr.keytab file from the zdh2 server and copied it into the /usr/hdp/soft/zaas/keytab/ directory on the other servers.

The JAAS file was then changed to the following:

[[email protected] keytab]# pwd

/usr/hdp/soft/zaas/keytab

[[email protected] keytab]# cat jaas.conf

KafkaServer {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/usr/hdp/soft/zaas/keytab/mr.keytab" // The keytab of the zdh2 server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};

KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/usr/hdp/soft/zaas/keytab/mr.keytab" // The keytab of the zdh2 server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/usr/hdp/soft/zaas/keytab/mr.keytab" // The keytab of the zdh2 server
    storeKey=true
    useTicketCache=false
    principal="mr/[email protected]";
};
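Because the copied file is identical on every server, it could also be generated rather than edited by hand. The following is a rough sketch under that assumption; the class JaasRenderer, its methods, and the realm EXAMPLE.COM are hypothetical (the real principal is not recoverable from the listing above), and only the keytab path comes from the article.

```java
public class JaasRenderer {

    /** Renders one JAAS login context that logs in from a keytab. */
    public static String renderEntry(String context, String keytabPath, String principal) {
        return context + " {\n"
                + "    com.sun.security.auth.module.Krb5LoginModule required\n"
                + "    useKeyTab=true\n"
                + "    keyTab=\"" + keytabPath + "\"\n"
                + "    storeKey=true\n"
                + "    useTicketCache=false\n"
                + "    principal=\"" + principal + "\";\n"
                + "};\n";
    }

    /** Renders the three contexts used in this article with one shared keytab and principal. */
    public static String renderFile(String keytabPath, String principal) {
        StringBuilder sb = new StringBuilder();
        for (String context : new String[] {"KafkaServer", "KafkaClient", "Client"}) {
            sb.append(renderEntry(context, keytabPath, principal)).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // The principal below is a placeholder; substitute the one bound to the copied keytab.
        System.out.print(renderFile("/usr/hdp/soft/zaas/keytab/mr.keytab", "mr/zdh2@EXAMPLE.COM"));
    }
}
```

Writing the returned string to /usr/hdp/soft/zaas/keytab/jaas.conf on each server would give every node the same file, which is the point of the workaround.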

The directory then looks like this on each of the servers:

[[email protected] keytab]# ll

total 8

-rw-r--r--. 1 root root 637 Apr 14 02:36 jaas.conf

-rwxr-xr-x. 1 root root 238 Apr 14 02:25 mr.keytab

[[email protected] keytab]#

[[email protected] keytab]# ll

total 8

-rw-r--r--. 1 root root 637 Apr 14 02:37 jaas.conf

-rwxrwxrwx. 1 root root 238 Apr 14 02:27 mr.keytab

[[email protected] keytab]# ll

total 8

-rw-r--r--. 1 root root 637 Apr 14 02:37 jaas.conf

-rwxrwxrwx. 1 root root 238 Apr 14 02:27 mr.keytab

Note that krb5.conf is generally identical on all servers. If it is not, make it identical: copy the file from one server and place it alongside the keytab.

There is indeed a small pitfall here: 【Flink】Flink pitfalls: dynamic Kerberos authentication

2. Ensure that the keytab file exists on the client node at the path indicated by security.kerberos.login.keytab.

3. Deploy the Flink cluster as normal.

In YARN, Mesos and native Kubernetes mode, the keytab is automatically copied from the client to the Flink containers.
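Step 2 can be checked before submitting a job. The sketch below is one possible pre-flight check, not something Flink provides: it reads flat "key: value" lines, finds security.kerberos.login.keytab, and tests that the file is present and readable. The class name and the default path conf/flink-conf.yaml are assumptions.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.Optional;

public class KeytabPreflight {

    private static final String KEYTAB_KEY = "security.kerberos.login.keytab";

    /** Extracts the keytab path from flat "key: value" configuration lines, if present. */
    public static Optional<String> keytabPath(List<String> confLines) {
        for (String line : confLines) {
            String trimmed = line.trim();
            if (trimmed.startsWith("#")) {
                continue; // skip comment lines
            }
            int colon = trimmed.indexOf(':');
            if (colon > 0 && trimmed.substring(0, colon).trim().equals(KEYTAB_KEY)) {
                String value = trimmed.substring(colon + 1).trim();
                return value.isEmpty() ? Optional.empty() : Optional.of(value);
            }
        }
        return Optional.empty();
    }

    /** Returns true if the path points to an existing, readable regular file. */
    public static boolean isUsableKeytab(String path) {
        Path p = Paths.get(path);
        return Files.isRegularFile(p) && Files.isReadable(p);
    }

    public static void main(String[] args) throws Exception {
        // Assumed default location; pass the real configuration file as the first argument.
        Path conf = Paths.get(args.length > 0 ? args[0] : "conf/flink-conf.yaml");
        if (!Files.isRegularFile(conf)) {
            System.out.println("No configuration file at " + conf);
            return;
        }
        Optional<String> keytab = keytabPath(Files.readAllLines(conf));
        if (!keytab.isPresent()) {
            System.out.println(KEYTAB_KEY + " is not set");
        } else {
            System.out.println(keytab.get() + " usable: " + isUsableKeytab(keytab.get()));
        }
    }
}
```

The check only covers the client node. The failure described earlier, where a principal is missing from another node's keytab, would not be caught by it.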

Note: this is where we hit the pitfall. Kerberos authentication happens before command-line parsing, so configuration defined on the command line has no effect during the authentication phase.

As a result, we currently cannot specify a different keytab and principal on the command line to override the ones in the configuration file and thereby achieve multi-user authentication. In other words, for now we can only submit jobs to YARN as the flink user; users are not distinguished, and we cannot control permissions.

How can this be solved so that each user submits jobs under their own identity? We only need to make command-line parsing happen before Kerberos authentication (by modifying the source code).

2.2.1 Brute-force parser

Flink ships with built-in command-line parsers. If we reused them (fewer changes mean fewer bugs), we might have to stay compatible with all of the parsers at once, and every newly added parser would bring further restrictions: a parameter may not exist in some parser, in which case parsing it would have no effect.

So we take the simplest, most brute-force approach: define a parser of our own that parses the command-line arguments and overrides the parameters defined in the configuration file.

The specific steps are as follows:

1. Define a custom command-line parser.

// Located in org.apache.flink.client.cli.CliFrontendParser
// Needs: org.apache.commons.cli.GnuParser, org.apache.commons.cli.ParseException, java.util.ListIterator
public static class ExtendedGnuParser extends GnuParser {

    private final boolean ignoreUnrecognizedOption;

    public ExtendedGnuParser(boolean ignoreUnrecognizedOption) {
        // GnuParser and DefaultParser throw an exception when they meet an undefined option;
        // this flag lets us skip such options for compatibility.
        this.ignoreUnrecognizedOption = ignoreUnrecognizedOption;
    }

    @Override
    protected void processOption(String arg, ListIterator<String> iter) throws ParseException {
        boolean hasOption = this.getOptions().hasOption(arg);
        // Only delegate to the parent for known options, or when unknown options must fail.
        if (hasOption || !this.ignoreUnrecognizedOption) {
            super.processOption(arg, iter);
        }
    }
}

2. Define the command-line option, parse it, and override the configuration-file parameters.

// Located in org.apache.flink.client.cli.CliFrontend
// this.configuration holds the configuration read from the configuration file.
// Needs: org.apache.commons.cli.CommandLine, Option, Options, ParseException,
//        java.util.Map, java.util.Properties
public Configuration compatCommandLineSecurityConfiguration(String[] args)
        throws CliArgsException, FlinkException, ParseException {

    // Define the Options used for our custom parsing: only -Dproperty=value.
    Options securityOptions = new Options()
            .addOption(Option.builder("D")
                    .argName("property=value")
                    .numberOfArgs(2)
                    .valueSeparator('=')
                    .desc("Allows specifying multiple generic configuration options.")
                    .build());

    // Parse the arguments passed in on the command line, ignoring unknown options.
    CommandLine commandLine =
            new CliFrontendParser.ExtendedGnuParser(true)
                    .parse(securityOptions, args);

    // Start from a copy of the parameters read from the configuration file.
    Configuration securityConfiguration = new Configuration(this.configuration);

    // Override the security parameters from the file with those given on the command line.
    Properties dynamicProperties = commandLine.getOptionProperties("D");
    for (Map.Entry<Object, Object> entry : dynamicProperties.entrySet()) {
        securityConfiguration.setString(
                entry.getKey().toString(),
                entry.getValue().toString());
    }

    return securityConfiguration;
}
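To see the override semantics without building Flink, here is a dependency-free sketch of the same idea: values from -Dkey=value arguments replace values loaded from the configuration file, and every other argument is ignored. It uses plain maps instead of Flink's Configuration and commons-cli, handles only the attached -Dkey=value form, and the user alice with her keytab path is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class DynamicPropertyOverride {

    /** Returns a copy of fileConfig with every -Dkey=value argument applied on top. */
    public static Map<String, String> applyDynamicProperties(
            Map<String, String> fileConfig, String[] args) {
        Map<String, String> merged = new LinkedHashMap<>(fileConfig);
        for (String arg : args) {
            if (!arg.startsWith("-D")) {
                continue; // ignore everything that is not a -D option
            }
            String body = arg.substring(2);
            int eq = body.indexOf('=');
            if (eq <= 0) {
                continue; // no key or no '=' sign: nothing to override
            }
            merged.put(body.substring(0, eq), body.substring(eq + 1));
        }
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> fileConfig = new LinkedHashMap<>();
        fileConfig.put("security.kerberos.login.keytab", "/kerberos/flink.keytab");
        fileConfig.put("security.kerberos.login.principal", "flink");

        // Hypothetical per-user submission: alice overrides the shared flink identity.
        String[] cli = {
            "run",
            "-Dsecurity.kerberos.login.keytab=/home/alice/alice.keytab",
            "-Dsecurity.kerberos.login.principal=alice",
            "job.jar"
        };
        System.out.println(applyDynamicProperties(fileConfig, cli));
    }
}
```

In the patched CliFrontend, the merged configuration would have to be computed before the security context is installed, which is exactly the ordering problem this section works around.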

After the modification, we compile with: mvn clean package -DskipTests -Dfast

Once compilation finishes, a build-target symbolic link appears in the Flink root directory, pointing to the actual compiled Flink distribution.

We then rename the distribution from flink-1.13.2 to flink-1.13.2.1 to tell it apart, and upload it to production.

边栏推荐

- STM32 learning notes (2) -usart (basic application 1)

- In the era of digital economy, where should retail stores go

- Tidb elementary course experience 8 (cluster management and maintenance, adding a tikv node)

- CMakeLists.txt 如何编写

- JTAG to Axi master debugging Axi Bram controller

- Xshell 评估期已过怎么办? 按照以下步骤即可解决!

- KITTI 相关信息汇总

- (4) Classes and objects (1)

- ASP.NET 利用ImageMap控件设计导航栏

- Use soapUI tool to generate SMS interface code

猜你喜欢

(7) Deep and shallow copy

SAP Field Service Management 和微信集成的案例分享和实现介绍

Altium Designer重拾之开篇引入

PCB学习笔记(2)-3D封装相关

Today, a couple won the largest e-commerce IPO in Hong Kong

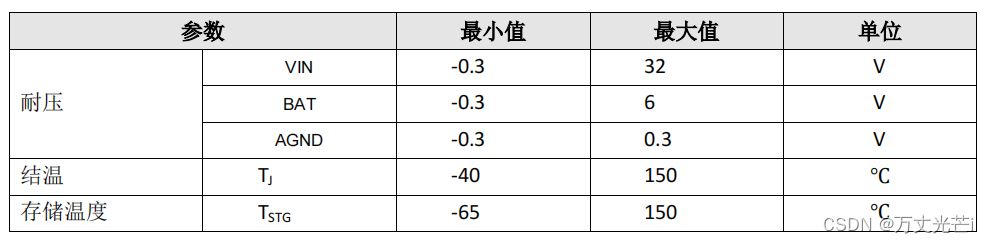

FM4057S单节锂电池线性充电芯片的学习

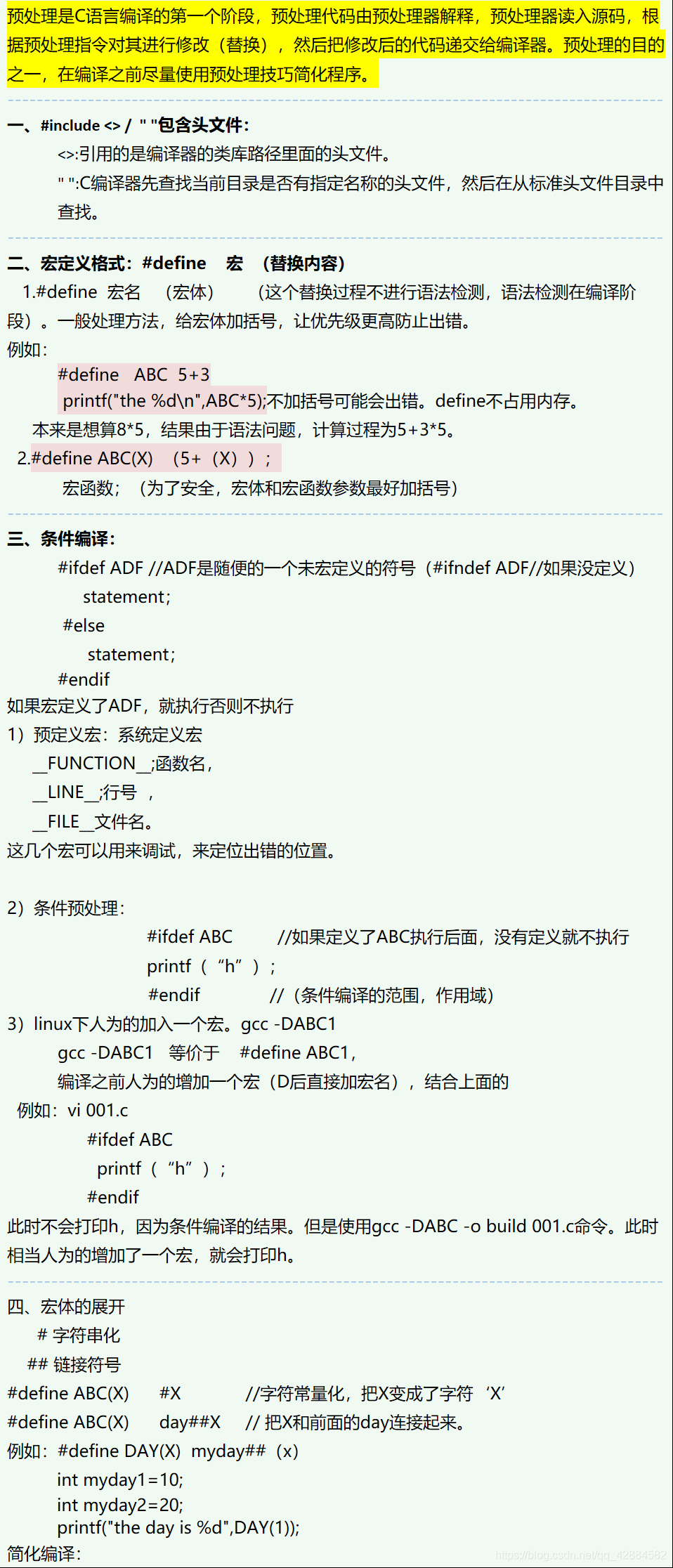

(一)预处理总结

蔚来:“拿捏”了数据,“扭捏”着未来

C # balanced weight distribution

(5) Class, object and class file splitting operation (2)

随机推荐

Collected data, must see

request获取请求服务器ip地址

Slide the navigation fixed head upwards

The Japanese version of arXiv is a cool batch: only 37 papers have been received after more than 2 months

Const Modified member function

【移动机器人】轮式里程计原理

(1) Pretreatment summary

eseses

Minimalist random music player

UML类图

[golang] when creating a structure with configuration parameters, how should the optional parameters be transferred?

日本版arXiv凉得一批:2个多月了,才收到37篇论文

C # balanced weight distribution

SparkStreaming实时数仓 问题&回答

手机厂商“返祖”,只有苹果说不

ASP.NET 利用ImageMap控件设计导航栏

Wei Lai: "pinches" the data and "pinches" the future

OFFICE技术讲座:标点符号-英文-大全

Count the number and average value of natural numbers whose sum of bits within 100 is 7

Automatic mapping of tailored landmark representations for automated driving and map learning