Sentinel series: an introduction to service rate limiting

2022-06-13 05:40:00 【codain】

0、 Preface

Before formally introducing Sentinel, you need to know some rate limiting algorithms that are common in the industry. Treat this as background knowledge: Sentinel's components use the rate limiting methods described below to a greater or lesser extent.

1、 About the concept

Rate limiting protects a system by capping either the number of concurrent accesses or the number of requests it may process within a time window. Once the limit is reached, further requests are handled by the corresponding rejection policy. Take a Double 11 sales promotion as an example: suppose a service can handle 100,000 requests at the same moment, but 110,000 arrive. Of those 110,000 requests, 100,000 are processed normally and the extra 10,000 receive a friendly "system busy" message. That is rate limiting.

2、 In day-to-day development, anyone who has worked on mobile applications probably knows that rate limiting is almost everywhere

1、 Add a rate limiting module in Nginx to limit the average access rate

2、 Set the size of the database connection pool or the thread pool to limit total concurrency

3、 Use the RateLimiter provided by Guava to limit the access rate of an interface

4、 Traffic shaping in the TCP communication protocol
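
Item 2 above, capping total concurrency through a pool size, can be sketched with a counting semaphore. This is only a minimal illustration and not how any particular connection pool or thread pool is implemented; the class name `ConcurrencyLimiter` and its methods are hypothetical.

```python
import threading


class ConcurrencyLimiter:
    """Hypothetical helper: allow at most `max_concurrent` requests in flight."""

    def __init__(self, max_concurrent):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def try_enter(self):
        # Non-blocking: returns False immediately when every slot is taken,
        # which is the point where a rejection policy would kick in.
        return self._slots.acquire(blocking=False)

    def leave(self):
        # Must be called once for every successful try_enter().
        self._slots.release()
```

A caller would wrap the protected work in `try_enter()` and `leave()`, returning a "system busy" response whenever `try_enter()` is False.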

3、 When it comes to implementing rate limiting, the key is the algorithm. Here are some common rate limiting algorithms

1、 Counter algorithm

Concept: A relatively simple rate limiting algorithm. It accumulates the number of accesses within a specified period. When the count reaches the configured threshold, the rate limiting policy is triggered, and the count is reset to zero when the next period begins.

Example: Limit the total number of requests processed per minute to 100. If 60 requests are handled in the first minute, the count starts again from 0 in the next minute. If the threshold of 100 is then reached at the one-and-a-half-minute mark, the program rejects all subsequent requests until that minute ends. This algorithm is typically used to limit how often SMS messages are sent, for example the number of messages sent to the same user within one minute.

Shortcoming: If 100 requests arrive at second 58 of the first minute and another 100 arrive at second 2 of the second minute, then 200 requests are accepted within a span of about 4 seconds, which exceeds the configured threshold.
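
The counter algorithm and its boundary problem can be reproduced in a few lines. The sketch below is an assumption-laden illustration: the class name `FixedWindowCounter` is hypothetical, and the current time is passed in as a number of seconds so that the scenario is deterministic (real code would typically read it from `time.monotonic()`).

```python
class FixedWindowCounter:
    """Hypothetical counter limiter: at most `limit` requests per fixed window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        self.window_index = None  # which window the current count belongs to
        self.count = 0

    def allow(self, now):
        index = int(now // self.window_seconds)
        if index != self.window_index:
            # A new period has started: clear the counter.
            self.window_index = index
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


limiter = FixedWindowCounter(limit=100, window_seconds=60)
late_first_minute = sum(limiter.allow(now=58) for _ in range(100))
early_second_minute = sum(limiter.allow(now=62) for _ in range(100))
# Both batches are accepted in full: 200 requests within 4 seconds.
print(late_first_minute + early_second_minute)
```

The second batch is accepted only because second 62 falls into a fresh window whose counter was just cleared.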

2、 Sliding window algorithm

Concept: This algorithm addresses the shortcoming described above. It is a flow control technique, and the TCP network protocol also uses this kind of algorithm to deal with network congestion. Put simply, the sliding window algorithm divides the fixed window into several small time windows and records the number of accesses in each one. The window then slides forward with time, expired small windows are discarded, and the limiter only needs to sum the counts of the small windows that currently fall inside the sliding window.

Example: Suppose we split one minute into 4 small time windows, each of which may handle at most 25 requests. The sliding window is marked by the dashed box in the figure. The box currently has a size of 2, so it admits at most 50 requests. The sliding window moves forward with time: after 15 seconds have passed, for example, it slides to cover the 15-45s range, and the counts are tallied again for the new window. This solves the boundary problem of the fixed window algorithm described above.

Note: Sentinel also uses a sliding window algorithm to implement rate limiting.
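
A minimal sketch of the sub-window bookkeeping described above follows. It is not Sentinel's actual implementation; the class name `SlidingWindowCounter` and its parameters are hypothetical, and the time is again passed in explicitly as seconds. Note that the precision of this approach is bounded by the size of one small window.

```python
class SlidingWindowCounter:
    """Hypothetical limiter: at most `limit` requests across the last `buckets` small windows."""

    def __init__(self, limit, window_seconds, buckets):
        self.limit = limit
        self.buckets = buckets
        self.bucket_seconds = window_seconds / buckets
        self.counts = {}  # small-window index -> number of accepted requests

    def allow(self, now):
        current = int(now // self.bucket_seconds)
        oldest = current - self.buckets + 1
        # Slide forward: drop small windows that have left the sliding window.
        for index in [i for i in self.counts if i < oldest]:
            del self.counts[index]
        if sum(self.counts.values()) >= self.limit:
            return False
        self.counts[current] = self.counts.get(current, 0) + 1
        return True


window = SlidingWindowCounter(limit=100, window_seconds=60, buckets=4)
first_batch = sum(window.allow(now=58) for _ in range(100))
second_batch = sum(window.allow(now=62) for _ in range(100))
# Unlike the fixed counter, the batch at second 62 is rejected,
# because the requests from second 58 are still inside the window.
print(first_batch, second_batch)
```

With the same traffic as in the counter example, only the first 100 requests pass; the small window containing second 58 does not expire until the sliding window has moved past it.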

3、 Token bucket rate limiting algorithm

Concept: The most commonly used algorithm in network traffic shaping and rate limiting. Every incoming request must obtain a token from the token bucket. A request that gets a token proceeds; otherwise rate limiting is triggered.

Principle: As the figure shows, the system puts tokens into a fixed-capacity token bucket at a fixed rate. When a request arrives from a client, it must first obtain a token from the bucket before it can be processed normally.

Shortcoming: Suppose the token generation rate in the figure is ten per second, that is, a concurrency of ten per second. When requests try to obtain tokens, there are three possible situations:

① The request rate is greater than the token generation rate: the tokens are quickly used up, and subsequent incoming requests are rate limited

② The request rate is equal to the token generation rate: supply and demand are balanced, and the system is very stable

③ The request rate is less than the token generation rate: the current concurrency is low, and the system handles it comfortably

Characteristic: Because the token bucket has a fixed capacity, it fills up whenever the request rate is lower than the token generation rate. The stored tokens let the token bucket absorb burst traffic: a sudden spike of new requests can still be handled normally, up to the bucket's capacity.
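
The token bucket can be sketched as follows. This is one possible illustration under stated assumptions: instead of a background thread that adds tokens, it refills lazily based on the time elapsed since the last call, and the class name `TokenBucket`, its parameters, and the explicit `now` argument (seconds) are all hypothetical.

```python
class TokenBucket:
    """Hypothetical token bucket: `rate` tokens per second, at most `capacity` stored."""

    def __init__(self, rate, capacity, start=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # assumption: the bucket starts full
        self.last = start

    def allow(self, now):
        # Lazy refill: add the tokens generated since the previous call,
        # never exceeding the fixed capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=10, capacity=10)
burst = sum(bucket.allow(now=0.0) for _ in range(15))
half_second_later = sum(bucket.allow(now=0.5) for _ in range(10))
# The full bucket absorbs a burst of 10; half a second later 5 new tokens exist.
print(burst, half_second_later)
```

The first call illustrates the burst behaviour described above, and the second illustrates situation ①, where requests outpace token generation.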

4、 Leaky bucket rate limiting algorithm

Purpose: Control the rate at which data is injected into the network and smooth out burst traffic

Principle: As the figure shows, the leaky bucket algorithm, like the token bucket, maintains a container. Water flows out of this container at a fixed rate: no matter how fast water pours in, the outflow rate stays constant. (Come to think of it, isn't this the same principle as message-oriented middleware? No matter how much data the producer produces, consumers process it at their own pace.)

Shortcoming:

① The request rate is greater than the rate at which water drips out of the bucket: the concurrency exceeds what the service can handle, and rate limiting is triggered

② The request rate is less than or equal to the rate at which water drips out of the bucket: the service can handle the clients' concurrency

Note: On closer inspection, the leaky bucket and token bucket algorithms are quite similar. The biggest difference is that the leaky bucket cannot cope with a sudden burst of concurrency in a short time, so it is better suited to services with a steady load. Take an attendance clock-in system: if roughly two people clock in per second every morning, the system processes requests and applies rate limiting at that fixed rate.
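
The leaky bucket can be sketched as a queue that drains at a fixed rate. The version below is an assumption-based illustration, not a reference implementation: it does not store requests, it only computes the time at which each accepted request would leave the bucket. The class name `LeakyBucket`, the `offer` method, and the explicit `now` argument (seconds) are hypothetical. The rate of 2 per second mirrors the clock-in example.

```python
class LeakyBucket:
    """Hypothetical leaky bucket: drains `rate` requests per second, holds at most `capacity`."""

    def __init__(self, rate, capacity):
        self.interval = 1.0 / rate  # fixed gap between two outgoing requests
        self.capacity = capacity
        self.next_free = 0.0        # earliest time the next request may leave

    def offer(self, now):
        """Return the time at which the request is processed, or None if the bucket is full."""
        start = max(now, self.next_free)
        queued_ahead = (start - now) / self.interval
        if queued_ahead >= self.capacity:
            return None  # bucket overflows: rate limiting is triggered
        self.next_free = start + self.interval
        return start


bucket = LeakyBucket(rate=2, capacity=3)
schedule = [bucket.offer(now=0.0) for _ in range(5)]
# Five simultaneous arrivals: three are spread out 0.5 s apart, two overflow.
print(schedule)
```

Even though all five requests arrive at the same instant, the accepted ones leave at a constant pace, which is why this algorithm smooths bursts rather than absorbing them.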

边栏推荐

- Three paradigms of MySQL

- Pyqt5 controls qpixmap, qlineedit qsplitter, qcombobox

- Byte buddy print execution time and method link tracking

- Case -- the HashSet set stores the student object and traverses

- Listiterator list iterator

- [reprint] complete collection of C language memory and character operation functions

- Pyqt5 module

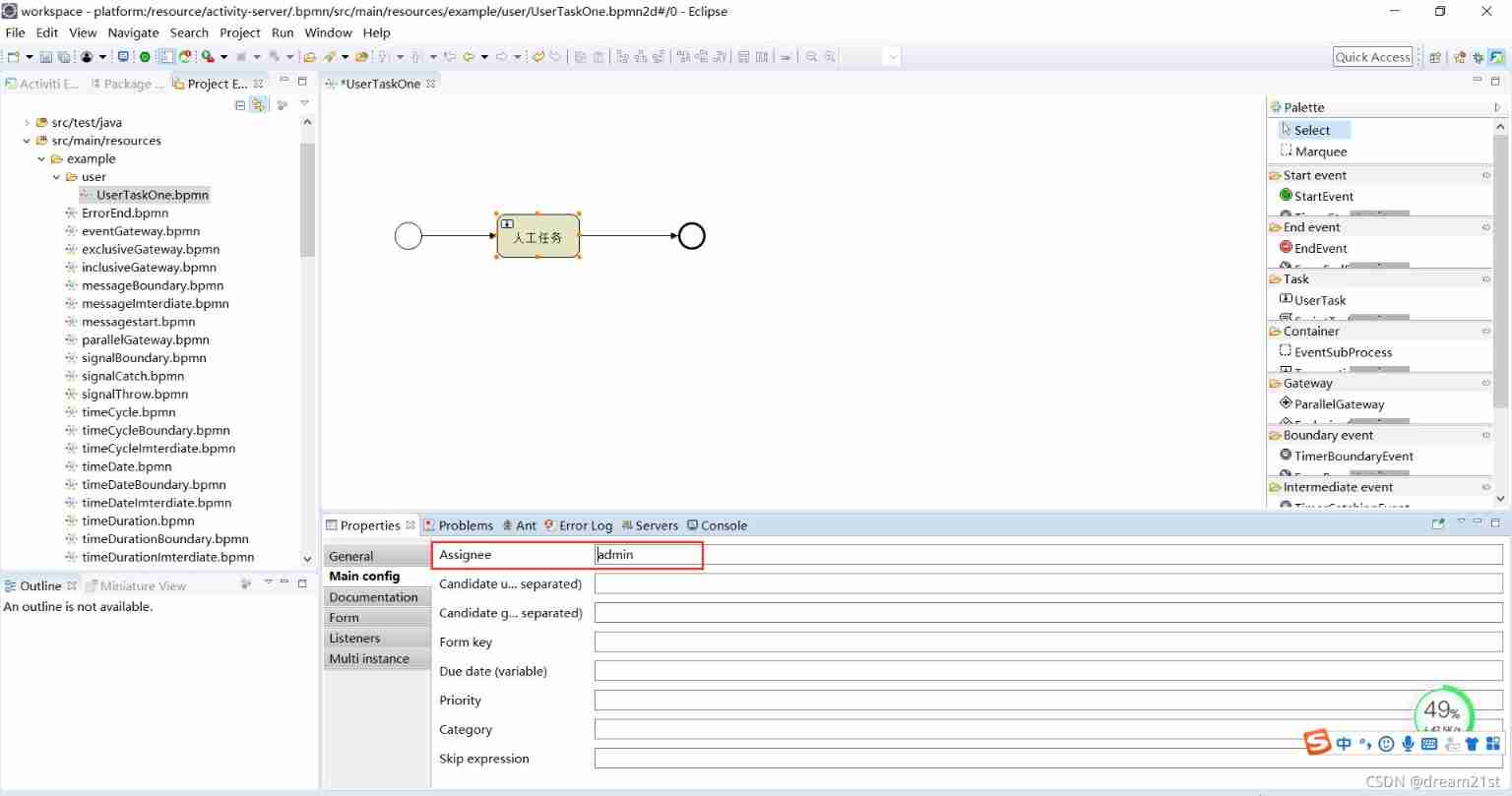

- 16 the usertask of a flowable task includes task assignment, multi person countersignature, and dynamic forms

- 17 servicetask of flowable task

- Solve the problem of garbled code in the MySQL execution SQL script database in docker (no need to rebuild the container)

猜你喜欢

890. Find and Replace Pattern

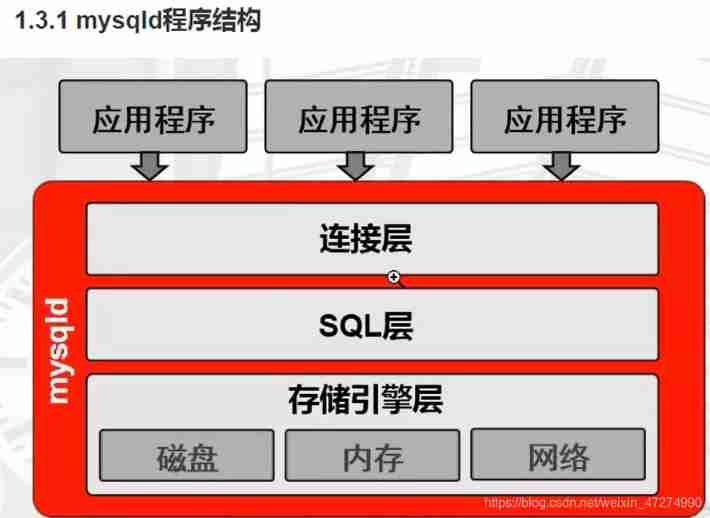

MySQL installation, architecture and management

ZABBIX proxy, sender (without agent monitoring), performance optimization

16 the usertask of a flowable task includes task assignment, multi person countersignature, and dynamic forms

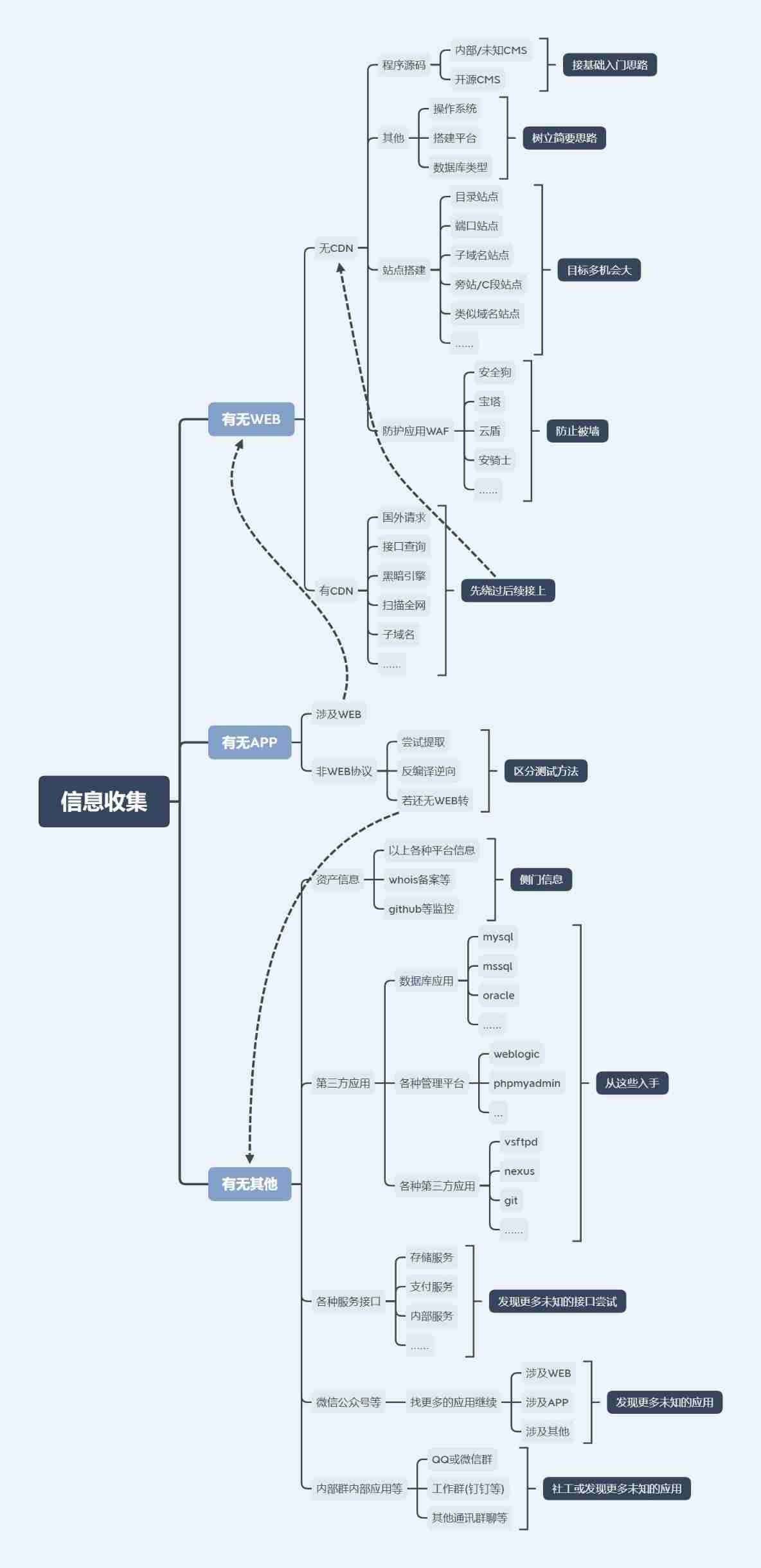

Information collection for network security (2)

Enhanced for loop

MongoDB 多字段聚合Group by

The reason why the process cannot be shut down after a spark job is executed and the solution

Metartc4.0 integrated ffmpeg compilation

JS output uincode code

随机推荐

11 signalthrowingevent and signalboundaryevent of flowable signal event

Case -- the HashSet set stores the student object and traverses

About the solution of pychart that cannot be opened by double clicking

MySQL installation, architecture and management

Case - grade sorting - TreeSet set storage

Set the correct width and height of the custom dialog

Shell instance

Pyqt5 controls qpixmap, qlineedit qsplitter, qcombobox

Pycharm错误解决:Process finished with exit code -1073741819 (0xC0000005)

12 error end event and terminateendevent of end event

17.6 unique_ Lock details

13 cancelendevent of a flowable end event and compensationthrowing of a compensation event

Summary of the 11th week of sophomore year

Unity game optimization (version 2) learning record 7

890. Find and Replace Pattern

Windbos common CMD (DOS) command set

How to Algorithm Evaluation Methods

Fast power code

NVIDIA Jetson Nano/Xavier NX 扩容教程

float类型取值范围