Stacked Denoising Autoencoder (SDAE)

2022-07-26 14:40:00 【The way of code】

Autoencoder (Auto-Encoder, AE)

An autoencoder is a kind of neural network that is trained to copy its input to its output. Internally it has a hidden layer h that produces a code representing the input. The network can be viewed as two parts: an encoder function h = f(x) and a decoder that produces the reconstruction r = g(h). An autoencoder should not be designed to reproduce its input exactly. Instead, constraints are usually imposed on it so that it can only copy approximately, and only inputs that resemble the training data.

The basic autoencoder is a three-layer network: the input layer and the output layer have the same number of neurons, and the middle (hidden) layer has fewer neurons than either of them. Building an autoencoder takes three steps: build the encoder, build the decoder, and define a loss function that measures the information lost to compression (autoencoders are lossy). The encoder and decoder are generally parameterized functions, typically neural networks, and the loss is differentiable with respect to their parameters, so the parameters of the encoder and decoder can be optimized by minimizing the loss function.

An autoencoder is a self-supervised algorithm rather than an unsupervised one in the strict sense: it needs no labeled training samples, because its labels are generated from the input data itself. It is therefore easy to train a specialized encoder for a particular class of input without any additional work. An autoencoder is also data dependent: it can only compress data similar to what it was trained on. For example, an autoencoder trained on faces performs poorly when compressing other images, such as pictures of trees, because the features it has learned are specific to faces.

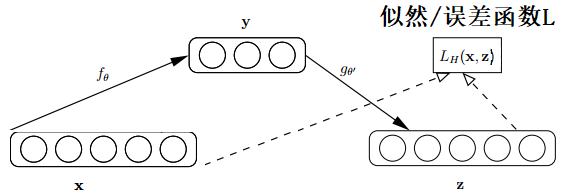

How an autoencoder operates: the original input x is weighted (W, b) and passed through a mapping (sigmoid) to give y; y is then mapped back through a second weighted mapping to give z. The two sets of parameters (W, b) are trained over repeated iterations so that the output signal z is as close as possible to the input signal x. After training, the autoencoder can be split into two parts:

1. The input layer and the middle layer, which can be used to compress a signal.

2. The middle layer and the output layer, which can be used to restore the compressed signal.
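The operation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the squared-error loss, plain gradient descent, the hyperparameters, the toy data, and the function names (`train_autoencoder`, `encode`, `decode`) are all assumptions made for the example.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_autoencoder(x, n_hidden, epochs=200, lr=0.5, seed=0):
    """Train a one-hidden-layer autoencoder with a squared-error loss.

    x has shape (n_samples, n_features) with values in [0, 1].
    Returns the parameters and the loss recorded at every epoch.
    """
    rng = np.random.default_rng(seed)
    n_samples, n_features = x.shape
    w_enc = rng.normal(0.0, 0.1, (n_features, n_hidden))
    b_enc = np.zeros(n_hidden)
    w_dec = rng.normal(0.0, 0.1, (n_hidden, n_features))
    b_dec = np.zeros(n_features)
    losses = []
    for _ in range(epochs):
        y = sigmoid(x @ w_enc + b_enc)   # input layer -> middle layer
        z = sigmoid(y @ w_dec + b_dec)   # middle layer -> output layer
        err = z - x
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))
        # Backpropagate the mean squared reconstruction error.
        dz = 2.0 * err * z * (1.0 - z) / n_samples
        dy = (dz @ w_dec.T) * y * (1.0 - y)
        w_dec -= lr * (y.T @ dz)
        b_dec -= lr * dz.sum(axis=0)
        w_enc -= lr * (x.T @ dy)
        b_enc -= lr * dy.sum(axis=0)
    return (w_enc, b_enc, w_dec, b_dec), losses

def encode(x, params):
    """Part 1: input layer and middle layer (compression)."""
    w_enc, b_enc, _, _ = params
    return sigmoid(x @ w_enc + b_enc)

def decode(y, params):
    """Part 2: middle layer and output layer (restoration)."""
    _, _, w_dec, b_dec = params
    return sigmoid(y @ w_dec + b_dec)

# Toy data (an assumption): 64 samples drawn from two 8-dimensional patterns.
demo_rng = np.random.default_rng(1)
prototypes = np.array([[1, 1, 1, 1, 0, 0, 0, 0],
                       [0, 0, 1, 1, 1, 1, 0, 0]], dtype=float)
x_demo = prototypes[demo_rng.integers(0, 2, size=64)]
params_demo, losses_demo = train_autoencoder(x_demo, n_hidden=3)
print("first loss:", losses_demo[0], "last loss:", losses_demo[-1])
```

After training, `encode` and `decode` correspond to the two parts listed above and can be used independently of each other.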

Denoising Autoencoder (Denoising Auto Encoder, DAE)

The denoising autoencoder builds on the autoencoder: to prevent overfitting, noise is added to the data fed to the input layer, which makes the learned encoder more robust. It was proposed by Bengio and co-authors in the 2008 paper Extracting and Composing Robust Features with Denoising Autoencoders. The schematic diagram of the denoising autoencoder in that paper is as follows. The approach is similar to dropout: with x as the original input data, the denoising autoencoder sets the value of each input-layer node to 0 with a certain probability (a binomial distribution is usually used), which yields the noisy model input x̂.
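The corruption step can be illustrated with a short NumPy sketch. The function name `mask_corrupt` and the parameter `corruption_level` are hypothetical names chosen for this example; the sketch only shows the masking noise described above, in which each input value is kept or zeroed by an independent binomial (Bernoulli) draw.

```python
import numpy as np

def mask_corrupt(x, corruption_level, rng):
    """Return a copy of x in which each entry is set to 0 with
    probability corruption_level; x itself is left unchanged."""
    # One binomial trial per entry: 1 means keep the value, 0 means erase it.
    keep = rng.binomial(n=1, p=1.0 - corruption_level, size=x.shape)
    return x * keep

rng = np.random.default_rng(0)
x = np.ones((4, 6))
x_noisy = mask_corrupt(x, corruption_level=0.3, rng=rng)
print(x_noisy)
```

During training the encoder receives the corrupted input, while the reconstruction error is still measured against the clean x.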

This corrupted data is useful for two reasons:

1. Compared with training on uncorrupted data, the weights trained on corrupted data contain less noise, which is where the name "denoising" comes from. The reason is easy to see: some of the noise in the input is incidentally erased along with the entries that are set to 0.

2. Corruption narrows the gap between training data and test data to some extent. Because part of the data has been erased, the corrupted data is somewhat closer to the test data. Training and test data always have things in common and things that differ; we want the model to keep what they share and discard the differences.

Stacked Denoising Autoencoder (Stacked Denoising Auto Encoder, SDAE)

The idea of the SDAE is to stack multiple DAEs to form a deep architecture. The input is corrupted (noise is added) only during training; once training is finished, no corruption is applied. The structure is shown in the following figure:

Greedy layer-wise training: each autoencoder layer is trained separately and without supervision, with the objective of minimizing the error between its input (the hidden-layer output of the previous layer) and its reconstruction. Once the first K layers have been trained, layer K+1 can be trained: forward propagation gives the output of layer K, which is then used as the input for training layer K+1.
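The greedy layer-wise procedure can be sketched as follows. This is a simplified illustration under several assumptions: masking noise, a squared-error loss, full-batch gradient descent, untied encoder and decoder weights, and hypothetical function names (`train_dae_layer`, `pretrain_sdae`, `encode`). Each layer is trained on the clean output of the layers below it, and only the encoder half of each trained DAE is kept for the stack.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_dae_layer(x, n_hidden, rng, corruption_level=0.3, epochs=200, lr=0.5):
    """Train one denoising autoencoder on x and return its encoder (w, b)."""
    n_samples, n_in = x.shape
    w_enc = rng.normal(0.0, 0.1, (n_in, n_hidden))
    b_enc = np.zeros(n_hidden)
    w_dec = rng.normal(0.0, 0.1, (n_hidden, n_in))
    b_dec = np.zeros(n_in)
    for _ in range(epochs):
        # Corrupt the input: zero each entry with probability corruption_level.
        mask = rng.binomial(1, 1.0 - corruption_level, size=x.shape)
        x_noisy = x * mask
        y = sigmoid(x_noisy @ w_enc + b_enc)
        z = sigmoid(y @ w_dec + b_dec)
        # The reconstruction target is the clean input x, not x_noisy.
        dz = 2.0 * (z - x) * z * (1.0 - z) / n_samples
        dy = (dz @ w_dec.T) * y * (1.0 - y)
        w_dec -= lr * (y.T @ dz)
        b_dec -= lr * dz.sum(axis=0)
        w_enc -= lr * (x_noisy.T @ dy)
        b_enc -= lr * dy.sum(axis=0)
    return w_enc, b_enc

def pretrain_sdae(x, layer_sizes, rng, **kwargs):
    """Train the layers one at a time, bottom to top."""
    encoders = []
    layer_input = x
    for n_hidden in layer_sizes:
        w, b = train_dae_layer(layer_input, n_hidden, rng, **kwargs)
        encoders.append((w, b))
        # Uncorrupted forward pass: this output is the next layer's input.
        layer_input = sigmoid(layer_input @ w + b)
    return encoders

def encode(x, encoders):
    """Forward pass through the trained stack; no noise is added here."""
    h = x
    for w, b in encoders:
        h = sigmoid(h @ w + b)
    return h

rng = np.random.default_rng(0)
x = (rng.random((50, 10)) > 0.5).astype(float)   # toy binary data (an assumption)
stack = pretrain_sdae(x, layer_sizes=[6, 3], rng=rng, epochs=50)
print(encode(x, stack).shape)
```

The decoder weights of each layer are discarded in this sketch because only the encoders are needed to compute the high-level features.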

Once the SDAE has been trained, its high-level features can be used as input to a conventional supervised learning algorithm. Alternatively, a logistic regression layer (softmax layer) can be added on top, and the whole network can then be fine-tuned with supervised training on labeled samples.
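A rough sketch of the fine-tuning step is shown below. It assumes the stack is given as a list of `(w, b)` encoder pairs, adds a softmax layer on top, and backpropagates a cross-entropy loss through every layer. The function names, the hyperparameters, and the randomly initialized stand-in for the pre-trained encoders are all assumptions made to keep the example self-contained.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def softmax(a):
    a = a - a.max(axis=1, keepdims=True)   # subtract the row max for stability
    e = np.exp(a)
    return e / e.sum(axis=1, keepdims=True)

def forward(x, encoders, w_out, b_out):
    """Return the activations of every layer and the class probabilities."""
    activations = [x]
    for w, b in encoders:
        activations.append(sigmoid(activations[-1] @ w + b))
    probs = softmax(activations[-1] @ w_out + b_out)
    return activations, probs

def finetune(x, labels, encoders, n_classes, rng, epochs=300, lr=0.5):
    """Add a softmax layer and train the whole network with labels."""
    encoders = [(w.copy(), b.copy()) for w, b in encoders]
    w_out = rng.normal(0.0, 0.1, (encoders[-1][0].shape[1], n_classes))
    b_out = np.zeros(n_classes)
    one_hot = np.eye(n_classes)[labels]
    n_samples = x.shape[0]
    losses = []
    for _ in range(epochs):
        activations, probs = forward(x, encoders, w_out, b_out)
        picked = probs[np.arange(n_samples), labels]
        losses.append(float(-np.mean(np.log(picked + 1e-12))))
        delta = (probs - one_hot) / n_samples   # gradient at the softmax inputs
        grad_h = delta @ w_out.T                # gradient at the top hidden layer
        w_out -= lr * (activations[-1].T @ delta)
        b_out -= lr * delta.sum(axis=0)
        for i in reversed(range(len(encoders))):
            w, b = encoders[i]
            h = activations[i + 1]
            delta = grad_h * h * (1.0 - h)
            grad_h = delta @ w.T                # uses w before it is updated
            encoders[i] = (w - lr * (activations[i].T @ delta),
                           b - lr * delta.sum(axis=0))
    return encoders, (w_out, b_out), losses

rng = np.random.default_rng(0)
x = rng.random((60, 8))
labels = (x[:, 0] > 0.5).astype(int)            # toy labels (an assumption)
# Stand-in for pre-trained SDAE encoders; real ones would come from pre-training.
pretrained = [(rng.normal(0.0, 0.1, (8, 5)), np.zeros(5)),
              (rng.normal(0.0, 0.1, (5, 3)), np.zeros(3))]
tuned, (w_out, b_out), losses = finetune(x, labels, pretrained, n_classes=2, rng=rng)
print("first loss:", losses[0], "last loss:", losses[-1])
```

Copying the encoder parameters before updating them leaves the pre-trained stack untouched, so the same features remain available for other supervised algorithms.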
