"MATLAB Neural Network: Analysis of 43 Cases", Chapter 40: Time series prediction with dynamic neural networks, a NARX implementation in MATLAB

2022-07-01 12:27:00 【mozun2020】

1. Preface

"MATLAB Neural Network: Analysis of 43 Cases" was planned by the MATLAB Technology Forum (www.matlabsky.com), led by Wang Xiaochuan, and published in 2013 by Beijing University of Aeronautics and Astronautics Press. It is an example-driven teaching book that uses MATLAB as its tool, revised and extended from "MATLAB Neural Network: Analysis of 30 Cases". It keeps the "theory, case analysis, application extension" structure to help readers learn neural networks more intuitively and vividly.

The book has 43 chapters. It covers common neural networks (BP, RBF, SOM, Hopfield, Elman, LVQ, Kohonen, GRNN, NARX, etc.) and related intelligent algorithms (SVM, decision trees, random forests, extreme learning machines, etc.). Some chapters also combine common optimization algorithms (genetic algorithms, ant colony algorithms, etc.) with neural networks. In addition, the book introduces the new functions and features of the Neural Network Toolbox in MATLAB R2012b, such as parallel computing for neural networks, custom neural networks, and efficient neural network programming.

With the rise of artificial intelligence research in recent years, neural networks have seen another surge of interest. Because of their strong performance in signal processing, neural network methods are also being applied widely to speech and image tasks. This series works through the cases in the book and reproduces them in simulation. It is a relearning exercise: I hope that reviewing the old material brings new insight and strengthens my understanding and practice of neural network applications in various fields. I picked this book up in my spare moments, and the posts mainly walk through the source code examples from each chapter. The simulations are run on MATLAB 2015b (32-bit). This post covers the Chapter 40 example, time series prediction with a dynamic neural network. Without further ado, let's start!

2. MATLAB Simulation example

Open MATLAB, click "Home", then click "Open" and locate the sample file.

Select chapter40.m and click "Open".

The source code of chapter40.m is as follows:

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Function: Time series prediction with a dynamic neural network - NARX implementation in MATLAB

% Environment: Win7, MATLAB 2015b

% Modi: C.S

% Date: 2022-06-21

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%% MATLAB Neural Network: Analysis of 43 Cases

% Time series prediction with a dynamic neural network - NARX implementation in MATLAB

% by Wang Xiaochuan (@Wang Xiaochuan_matlab)

% http://www.matlabsky.com

% Email:[email protected]163.com

% http://weibo.com/hgsz2003

%% Clear environment variables

clear

clc

tic

%% Load data

% load phdata

[phInputs,phTargets] = ph_dataset;

inputSeries = phInputs;

targetSeries = phTargets;

%% Establish a nonlinear autoregressive model

inputDelays = 1:2;

feedbackDelays = 1:2;

hiddenLayerSize = 10;

net = narxnet(inputDelays,feedbackDelays,hiddenLayerSize);

%% Definition of network data preprocessing function

net.inputs{1}.processFcns = {'removeconstantrows','mapminmax'};

net.inputs{2}.processFcns = {'removeconstantrows','mapminmax'};

%% Time series data preparation

[inputs,inputStates,layerStates,targets] = preparets(net,inputSeries,{},targetSeries);

%% Split the data into training, validation, and test sets

net.divideFcn = 'dividerand';

net.divideMode = 'value';

net.divideParam.trainRatio = 70/100;

net.divideParam.valRatio = 15/100;

net.divideParam.testRatio = 15/100;

%% Network training function setting

net.trainFcn = 'trainlm'; % Levenberg-Marquardt

%% Error function setting

net.performFcn = 'mse'; % Mean squared error

%% Drawing function settings

net.plotFcns = {'plotperform','plottrainstate','plotresponse', ...
    'ploterrcorr', 'plotinerrcorr'};

%% Network training

[net,tr] = train(net,inputs,targets,inputStates,layerStates);

%% Network testing

outputs = net(inputs,inputStates,layerStates);

errors = gsubtract(targets,outputs);

performance = perform(net,targets,outputs)

%% Compute the training, validation, and test set errors

trainTargets = gmultiply(targets,tr.trainMask);

valTargets = gmultiply(targets,tr.valMask);

testTargets = gmultiply(targets,tr.testMask);

trainPerformance = perform(net,trainTargets,outputs)

valPerformance = perform(net,valTargets,outputs)

testPerformance = perform(net,testTargets,outputs)

%% Visualization of network training effect

figure, plotperform(tr)

figure, plottrainstate(tr)

figure, plotregression(targets,outputs)

figure, plotresponse(targets,outputs)

figure, ploterrcorr(errors)

figure, plotinerrcorr(inputs,errors)

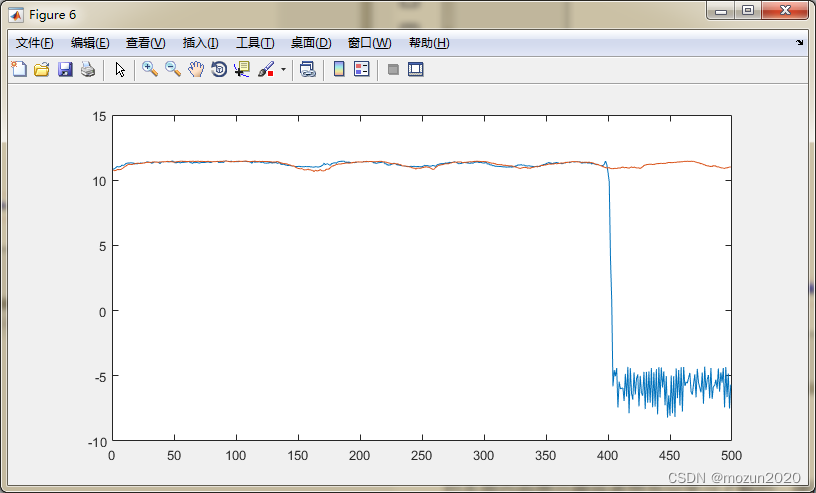

%% Closed-loop mode

% Convert the NARX network to closed-loop mode

narx_net_closed = closeloop(net);

view(net)

view(narx_net_closed)

% Evaluate the fit on points 1500-2000

phInputs_c=phInputs(1500:2000);

PhTargets_c=phTargets(1500:2000);

[p1,Pi1,Ai1,t1] = preparets(narx_net_closed,phInputs_c,{},PhTargets_c);

% Network simulation

yp1 = narx_net_closed(p1,Pi1,Ai1);

plot([cell2mat(yp1)' cell2mat(t1)'])

toc

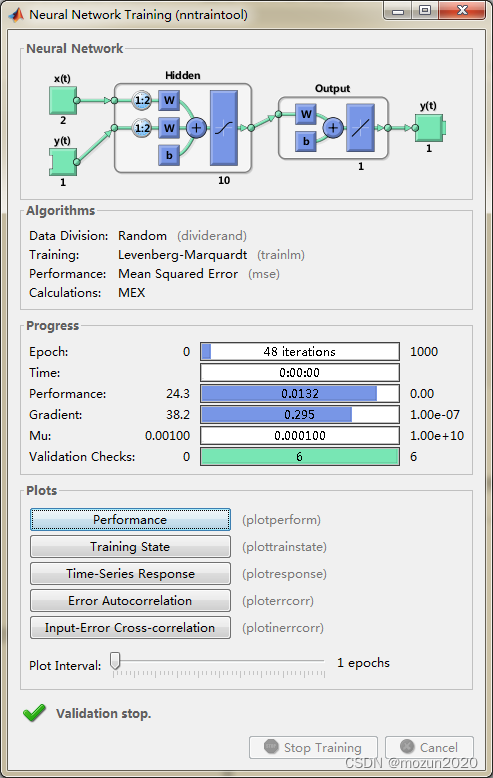
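
As a reading aid for the listing above: with inputDelays = 1:2 and feedbackDelays = 1:2, a NARX network predicts the current target from the two previous inputs and the two previous target values. The sketch below uses notation introduced here for illustration (u for the input series, y for the measured target series, ŷ for the network output, f for the mapping learned by the hidden layer); it is not taken from the book. The first line is the open-loop form used during training, where measured targets are fed back. The second is the closed-loop form produced by closeloop, where the network's own outputs are fed back instead.

```latex
\begin{aligned}
\text{open loop:}\quad   & \hat{y}(t) = f\bigl(u(t-1),\,u(t-2),\,y(t-1),\,y(t-2)\bigr) \\
\text{closed loop:}\quad & \hat{y}(t) = f\bigl(u(t-1),\,u(t-2),\,\hat{y}(t-1),\,\hat{y}(t-2)\bigr)
\end{aligned}
```

Because both delay ranges reach back two steps, the first two time steps can only serve as initial states, which is why preparets returns them separately from the shifted inputs and targets.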

Once the code is in place, click "Run" to start the simulation. The output is as follows:

performance =

0.0188

trainPerformance =

0.0180

valPerformance =

0.0164

testPerformance =

0.0252

Elapsed time is 7.422134 seconds.

(Clicking Performance, Training State, Time-Series Response, Error Autocorrelation, and Input-Error Cross-correlation in turn displays the corresponding figures.)
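
The per-subset errors printed above come from multiplying the targets by tr.trainMask, tr.valMask, and tr.testMask before calling perform. As far as I understand the toolbox behavior, these masks hold 1 for samples in the subset and NaN elsewhere, and NaN targets are skipped when the error is computed. The sketch below reproduces that idea without the toolbox, on made-up numbers. All variable names and values are hypothetical and only illustrate the masking step, not the toolbox's internal implementation.

```matlab
% Toy data (hypothetical values, not taken from ph_dataset)
targets = [1.0 2.0 3.0 4.0];
outputs = [1.1 1.8 3.0 4.4];

% A mask holds 1 where a sample belongs to the subset and NaN elsewhere,
% mirroring the role of tr.trainMask / tr.valMask / tr.testMask.
testMask = [NaN 1 NaN 1];

maskedTargets = targets .* testMask;   % NaN marks samples to ignore
err = maskedTargets - outputs;         % NaN propagates into the error
keep = ~isnan(err);                    % samples that belong to the subset
subsetMse = mean(err(keep).^2);        % mean squared error over the subset only

fprintf('Subset MSE: %.4f\n', subsetMse);
```

Only the second and fourth samples contribute here, so the result is the mean of their two squared errors. This is why trainPerformance, valPerformance, and testPerformance can differ even though all three use the same outputs vector.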

3. Summary

Dynamic neural networks have become a new research topic in deep learning. Compared with static models (fixed computation graph, fixed parameters), a dynamic network can adjust its own structure or parameters according to the input, which gives it notable advantages in accuracy, computational efficiency, and adaptability. Readers who are interested in this chapter or want to understand it fully are advised to study Chapter 40 of the book (the study link is attached at the end of the post). I will add more on some of these points later, based on my own understanding. You are welcome to study and discuss together.

边栏推荐

- 【20211129】Jupyter Notebook远程服务器配置

- kubernetes之ingress探索实践

- Application of stack -- bracket matching problem

- Switch basic experiment

- Chain storage of binary tree

- Efforts at the turn of the decade

- Powerful, easy-to-use, professional editor / notebook software suitable for programmers / software developers, comprehensive evaluation and comprehensive recommendation

- leetcode 406. Queue Reconstruction by Height(按身高重建队列)

- The specified service is marked for deletion

- 巩固-C#运算符

猜你喜欢

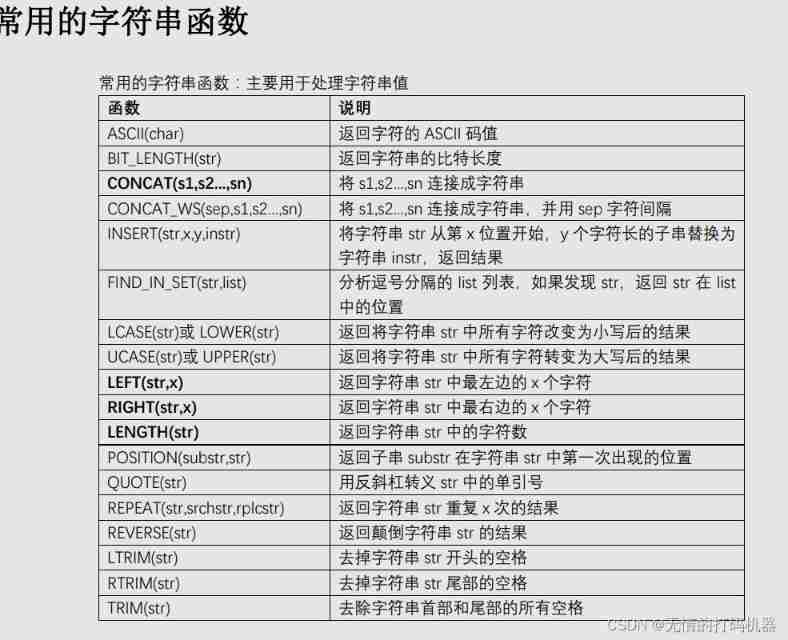

MySQL common functions

Self organization is the two-way rush of managers and members

Onenet Internet of things platform - mqtts product equipment connected to the platform

Queue operation---

91.(cesium篇)cesium火箭發射模擬

91. (chapitre Cesium) simulation de lancement de fusées cesium

LeetCode力扣(剑指offer 31-35)31. 栈的压入弹出序列32I.II.III.从上到下打印二叉树33. 二叉搜索树的后序遍历序列34. 二叉树中和为某一值的路径35. 复杂链表的复制

JS reverse | m3u8 data decryption of a spring and autumn network

91. (cesium chapter) cesium rocket launch simulation

Chapter 14 signals (IV) - examples of multi process tasks

随机推荐

ASTM D 3801固体塑料垂直燃烧试验

GID:旷视提出全方位的检测模型知识蒸馏 | CVPR 2021

IOS interview

Typora realizes automatic uploading of picture pasting

[some notes]

Stack-------

Relationship between accuracy factor (DOP) and covariance in GPS data (reference link)

Onenet Internet of things platform - the console sends commands to mqtt product devices

腾讯安全联合毕马威发布监管科技白皮书,解析“3+3”热点应用场景

91.(cesium篇)cesium火箭發射模擬

LeetCode 454. Add four numbers II

【datawhale202206】pyTorch推荐系统:召回模型 DSSM&YoutubeDNN

Consolidate -c operator

[20220605] Literature Translation -- visualization in virtual reality: a systematic review

JS reverse | m3u8 data decryption of a spring and autumn network

数字信号处理——线性相位型(Ⅱ、Ⅳ型)FIR滤波器设计(2)

2022-06-28-06-29

Ansible Playbook

Golang des-cbc

【20211129】Jupyter Notebook远程服务器配置